Adam Van Etten

Vehicle Vectors and Traffic Patterns from Planet Imagery

Jun 10, 2024

Abstract:We explore methods to detect automobiles in Planet imagery and build a large scale vector field for moving objects. Planet operates two distinct constellations: high-resolution SkySat satellites as well as medium-resolution SuperDove satellites. We show that both static and moving cars can be identified reliably in high-resolution SkySat imagery. We are able to estimate the speed and heading of moving vehicles by leveraging the inter-band displacement (or "rainbow" effect) of moving objects. Identifying cars and trucks in medium-resolution SuperDove imagery is far more difficult, though a similar rainbow effect is observed in these satellites and enables moving vehicles to be detected and vectorized. The frequent revisit of Planet satellites enables the categorization of automobile and truck activity patterns over broad areas of interest and lengthy timeframes.
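The abstract does not spell out how displacement is turned into a vector, so the following is only a minimal sketch of the underlying arithmetic: if a vehicle appears shifted by some number of pixels between two spectral bands captured a short time apart, speed is distance over time and heading follows from the direction of the shift. The function name, the north-up image assumption, and the example values for ground sample distance and band delay are all hypothetical and are not taken from the paper.

```python
import math


def vehicle_vector(dx_px, dy_px, gsd_m, band_delay_s):
    """Estimate speed (m/s) and heading (degrees clockwise from north).

    Assumptions (illustrative, not from the paper):
      - the image is north-up, so +x is east and +y (row index) is south
      - dx_px, dy_px is the pixel shift of the vehicle between two bands
      - gsd_m is the ground sample distance in meters per pixel
      - band_delay_s is the time between the two band exposures
    """
    if band_delay_s <= 0:
        raise ValueError("band_delay_s must be positive")
    east_m = dx_px * gsd_m
    north_m = -dy_px * gsd_m  # image rows increase toward the south
    speed = math.hypot(east_m, north_m) / band_delay_s
    # atan2(east, north) gives a compass bearing: 0 = north, 90 = east
    heading = math.degrees(math.atan2(east_m, north_m)) % 360.0
    return speed, heading


# Hypothetical example: a 3 px eastward, 4 px northward shift
# at 1 m per pixel with 0.5 s between bands.
speed, heading = vehicle_vector(3, -4, gsd_m=1.0, band_delay_s=0.5)
```

In practice the pixel shift would come from registering the vehicle's position in each band, which is the hard part and is not shown here.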

The Weaknesses of Adversarial Camouflage in Overhead Imagery

Jul 06, 2022

Abstract:Machine learning is increasingly critical for analysis of the ever-growing corpora of overhead imagery. Advanced computer vision object detection techniques have demonstrated great success in identifying objects of interest such as ships, automobiles, and aircraft from satellite and drone imagery. Yet relying on computer vision opens up significant vulnerabilities, namely, the susceptibility of object detection algorithms to adversarial attacks. In this paper we explore the efficacy and drawbacks of adversarial camouflage in an overhead imagery context. While a number of recent papers have demonstrated the ability to reliably fool deep learning classifiers and object detectors with adversarial patches, most of this work has been performed on relatively uniform datasets and only a single class of objects. In this work we utilize the VisDrone dataset, which has a large range of perspectives and object sizes. We explore four different object classes: bus, car, truck, van. We build a library of 24 adversarial patches to disguise these objects, and introduce a patch translucency variable to our patches. The translucency (or alpha value) of the patches is highly correlated to their efficacy. Further, we show that while adversarial patches may fool object detectors, the presence of such patches is often easily uncovered, with patches on average 24% more detectable than the objects the patches were meant to hide. This raises the question of whether such patches truly constitute camouflage. Source code is available at https://github.com/IQTLabs/camolo.
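To make the translucency variable concrete, here is a minimal sketch of standard alpha compositing, where an alpha of 0 leaves the image untouched and an alpha of 1 pastes the patch fully opaque. This is an assumption about what "alpha value" means in the abstract; the actual implementation lives in the linked repository and may differ. The function name `apply_patch` and the list-of-lists grayscale image are hypothetical choices for illustration.

```python
def apply_patch(image, patch, top, left, alpha):
    """Return a copy of `image` with `patch` alpha-blended at (top, left).

    `image` and `patch` are lists of rows of grayscale pixel values.
    alpha = 0.0 keeps the original pixels, alpha = 1.0 shows only the patch.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    out = [row[:] for row in image]  # copy so the input is not modified
    for r, patch_row in enumerate(patch):
        for c, patch_value in enumerate(patch_row):
            original = out[top + r][left + c]
            out[top + r][left + c] = alpha * patch_value + (1.0 - alpha) * original
    return out


# Hypothetical example: a 1x1 patch of value 100 blended at half opacity
# into the center of a 3x3 black image.
image = [[0.0, 0.0, 0.0] for _ in range(3)]
blended = apply_patch(image, [[100.0]], top=1, left=1, alpha=0.5)
```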

The SpaceNet Multi-Temporal Urban Development Challenge

Feb 23, 2021

Abstract:Building footprints provide a useful proxy for a great many humanitarian applications. For example, building footprints are useful for high fidelity population estimates, and quantifying population statistics is fundamental to ~1/4 of the United Nations Sustainable Development Goals Indicators. In this paper we (the SpaceNet Partners) discuss efforts to develop techniques for precise building footprint localization, tracking, and change detection via the SpaceNet Multi-Temporal Urban Development Challenge (also known as SpaceNet 7). In this NeurIPS 2020 competition, participants were asked to identify and track buildings in satellite imagery time series collected over rapidly urbanizing areas. The competition centered around a brand new open source dataset of Planet Labs satellite imagery mosaics at 4m resolution, which includes 24 images (one per month) covering ~100 unique geographies. Tracking individual buildings at this resolution is quite challenging, yet the winning participants demonstrated impressive performance with the newly developed SpaceNet Change and Object Tracking (SCOT) metric. This paper details the top-5 winning approaches, as well as analysis of results that yielded a handful of interesting anecdotes such as decreasing performance with latitude.

The Multi-Temporal Urban Development SpaceNet Dataset

Feb 08, 2021

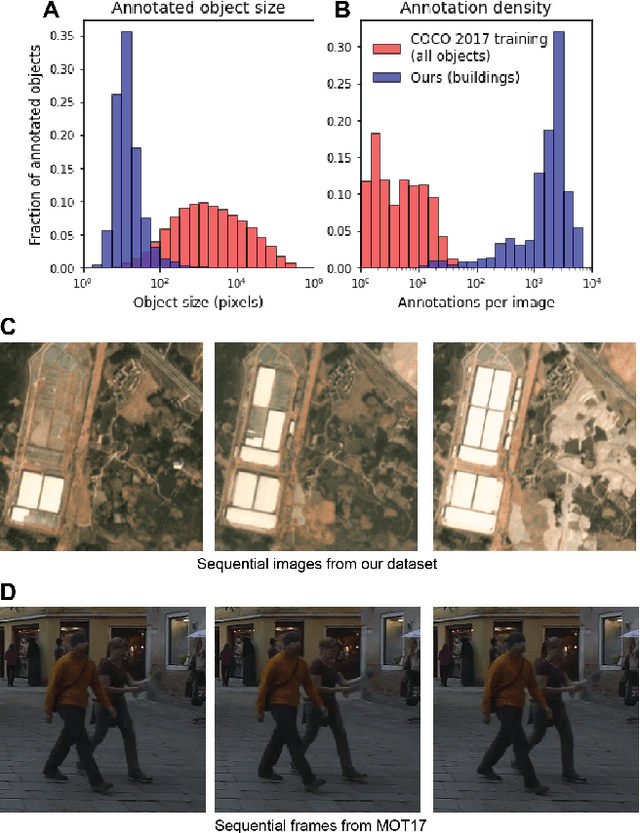

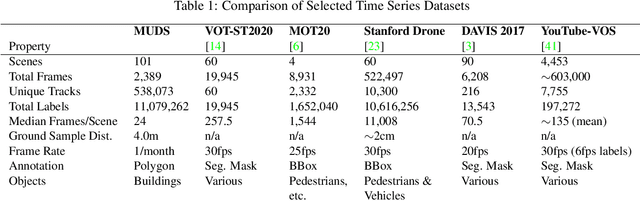

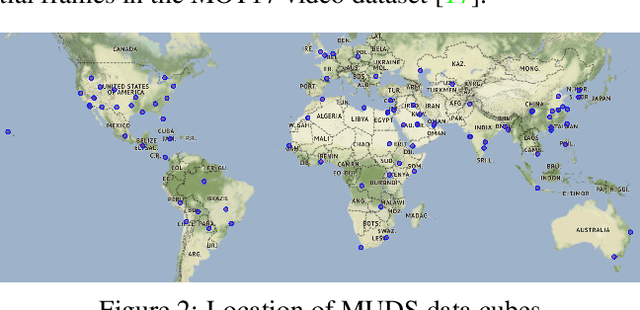

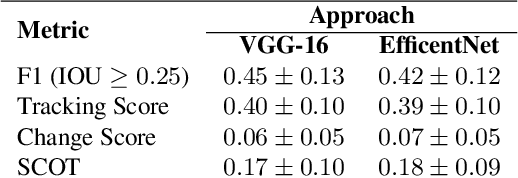

Abstract:Satellite imagery analytics have numerous human development and disaster response applications, particularly when time series methods are involved. For example, quantifying population statistics is fundamental to 67 of the 231 United Nations Sustainable Development Goals Indicators, but the World Bank estimates that over 100 countries currently lack effective Civil Registration systems. To help address this deficit and develop novel computer vision methods for time series data, we present the Multi-Temporal Urban Development SpaceNet (MUDS, also known as SpaceNet 7) dataset. This open source dataset consists of medium resolution (4.0m) satellite imagery mosaics, which include 24 images (one per month) covering >100 unique geographies, and comprises >40,000 km^2 of imagery and exhaustive polygon labels of building footprints therein, totaling over 11M individual annotations. Each building is assigned a unique identifier (i.e. address), which permits tracking of individual objects over time. Label fidelity exceeds image resolution; this "omniscient labeling" is a unique feature of the dataset, and enables surprisingly precise algorithmic models to be crafted. We demonstrate methods to track building footprint construction (or demolition) over time, thereby directly assessing urbanization. Performance is measured with the newly developed SpaceNet Change and Object Tracking (SCOT) metric, which quantifies both object tracking as well as change detection. We demonstrate that despite the moderate resolution of the data, we are able to track individual building identifiers over time. This task has broad implications for disaster preparedness, the environment, infrastructure development, and epidemic prevention.

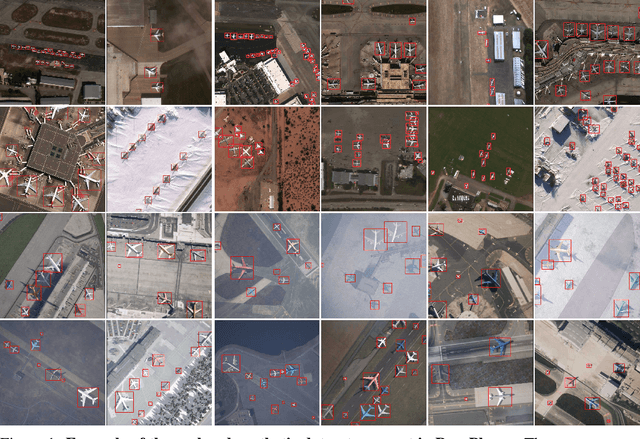

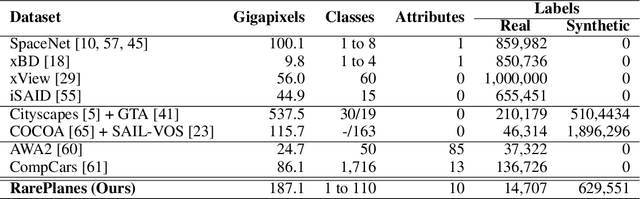

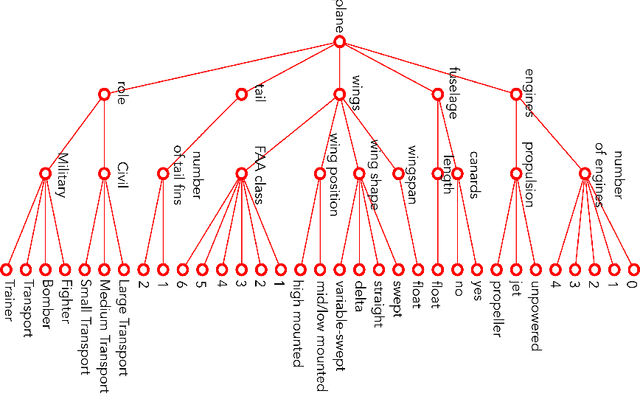

RarePlanes: Synthetic Data Takes Flight

Jun 04, 2020

Abstract:RarePlanes is a unique open-source machine learning dataset that incorporates both real and synthetically generated satellite imagery. The RarePlanes dataset specifically focuses on the value of synthetic data to aid computer vision algorithms in their ability to automatically detect aircraft and their attributes in satellite imagery. Although other synthetic/real combination datasets exist, RarePlanes is the largest openly-available very-high resolution dataset built to test the value of synthetic data from an overhead perspective. Previous research has shown that synthetic data can reduce the amount of real training data needed and potentially improve performance for many tasks in the computer vision domain. The real portion of the dataset consists of 253 Maxar WorldView-3 satellite scenes spanning 112 locations and 2,142 km^2 with 14,700 hand-annotated aircraft. The accompanying synthetic dataset is generated via a novel simulation platform and features 50,000 synthetic satellite images with ~630,000 aircraft annotations. Both the real and synthetically generated aircraft feature 10 fine grain attributes including: aircraft length, wingspan, wing-shape, wing-position, wingspan class, propulsion, number of engines, number of vertical-stabilizers, presence of canards, and aircraft role. Finally, we conduct extensive experiments to evaluate the real and synthetic datasets and compare performances. By doing so, we show the value of synthetic data for the task of detecting and classifying aircraft from an overhead perspective.

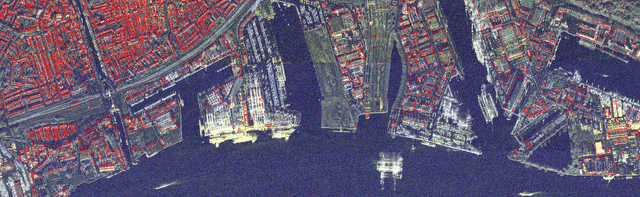

SpaceNet 6: Multi-Sensor All Weather Mapping Dataset

Apr 14, 2020

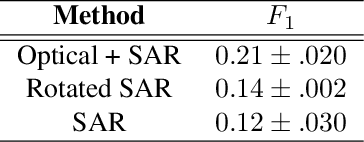

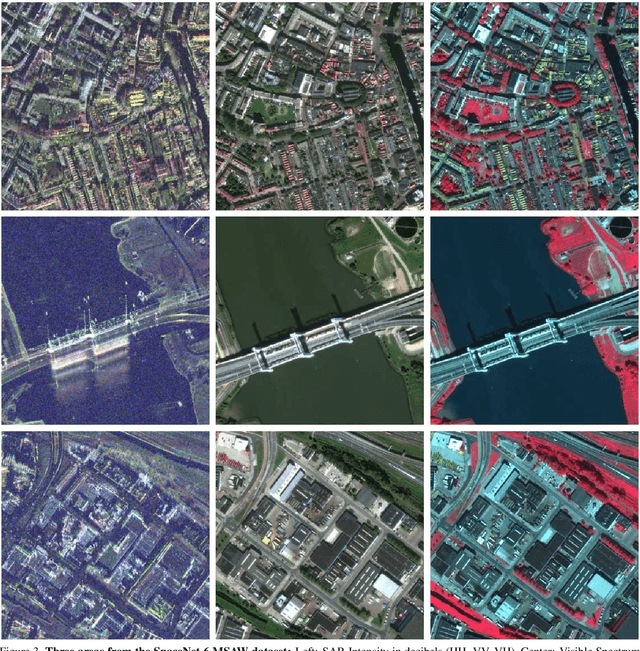

Abstract:Within the remote sensing domain, a diverse set of acquisition modalities exist, each with their own unique strengths and weaknesses. Yet, most of the current literature and open datasets only deal with electro-optical (optical) data for different detection and segmentation tasks at high spatial resolutions. Optical data is often the preferred choice for geospatial applications, but requires clear skies and little cloud cover to work well. Conversely, Synthetic Aperture Radar (SAR) sensors have the unique capability to penetrate clouds and collect during all weather, day and night conditions. Consequently, SAR data are particularly valuable in the quest to aid disaster response, when weather and cloud cover can obstruct traditional optical sensors. Despite all of these advantages, there is little open data available to researchers to explore the effectiveness of SAR for such applications, particularly at very-high spatial resolutions, i.e. <1m Ground Sample Distance (GSD). To address this problem, we present an open Multi-Sensor All Weather Mapping (MSAW) dataset and challenge, which features two collection modalities (both SAR and optical). The dataset and challenge focus on mapping and building footprint extraction using a combination of these data sources. MSAW covers 120 km^2 over multiple overlapping collects and is annotated with over 48,000 unique building footprint labels, enabling the creation and evaluation of mapping algorithms for multi-modal data. We present a baseline and benchmark for building footprint extraction with SAR data and find that state-of-the-art segmentation models pre-trained on optical data, and then trained on SAR (F1 score of 0.21) outperform those trained on SAR data alone (F1 score of 0.135).

Road Network and Travel Time Extraction from Multiple Look Angles with SpaceNet Data

Jan 16, 2020

Abstract:Identification of road networks and optimal routes directly from remote sensing is of critical importance to a broad array of humanitarian and commercial applications. Yet while identification of road pixels has been attempted before, estimation of route travel times from overhead imagery remains a novel problem, particularly for off-nadir overhead imagery. To this end, we extract road networks with travel time estimates from the SpaceNet MVOI dataset. Utilizing the CRESIv2 framework, we demonstrate the ability to extract road networks in various observation angles and quantify performance at 27 unique nadir angles with the graph-theoretic APLS_length and APLS_time metrics. A minimal gap of 0.03 between APLS_length and APLS_time scores indicates that our approach yields speed limits and travel times with very high fidelity. We also explore the utility of incorporating all available angles during model training, and find a peak score of APLS_time = 0.56. The combined model exhibits greatly improved robustness over angle-specific models, despite the very different appearance of road networks at extremely oblique off-nadir angles versus images captured from directly overhead.

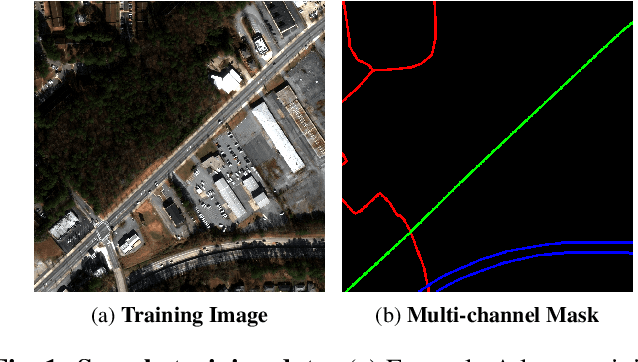

City-Scale Road Extraction from Satellite Imagery v2: Road Speeds and Travel Times

Aug 06, 2019

Abstract:Automated road network extraction from remote sensing imagery remains a significant challenge despite its importance in a broad array of applications. To this end, we explore road network extraction at scale with inference of semantic features of the graph, identifying speed limits and route travel times for each roadway. We call this approach City-Scale Road Extraction from Satellite Imagery v2 (CRESIv2). Including estimates for travel time permits true optimal routing, not just the shortest geographic distance. We compare SpaceNet labels to OpenStreetMap (OSM) labels, and find that models both trained and tested on SpaceNet labels outperform OSM labels by 60% or greater. For a diverse test set of SpaceNet data and a traditional edge weight of geometric distance, we find an aggregate of 5% improvement over existing methods. We also test our algorithm on Google satellite imagery with OpenStreetMap labels, and find a 23% improvement over previous work. Metric scores decrease by only 4% on large graphs when using travel time rather than geometric distance for edge weights, indicating that optimizing routing for travel time is feasible with this approach.
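The difference between shortest-distance and fastest-time routing can be illustrated with a small sketch. This is not the CRESIv2 code: it is a plain Dijkstra search over a hand-built toy graph, where each edge carries a length and an assumed speed, and the edge weight is either the length or the implied travel time. The function name `best_route`, the graph layout, and all lengths and speeds are hypothetical.

```python
import heapq


def best_route(graph, source, target, weight="time"):
    """Dijkstra search returning (total_cost, path).

    `graph` maps a node to a list of (neighbor, length_m, speed_mps) edges.
    weight="time" minimizes seconds (length / speed);
    weight="length" minimizes meters.
    """
    def cost(length_m, speed_mps):
        return length_m / speed_mps if weight == "time" else length_m

    queue = [(0.0, source, [source])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == target:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length_m, speed_mps in graph.get(node, []):
            if neighbor not in visited:
                step = cost(length_m, speed_mps)
                heapq.heappush(queue, (total + step, neighbor, path + [neighbor]))
    return float("inf"), []  # target unreachable


# Hypothetical toy network: a slow direct street from A to B,
# and a longer but faster detour through C.
toy_graph = {
    "A": [("B", 1000.0, 5.0), ("C", 800.0, 20.0)],
    "C": [("B", 800.0, 20.0)],
    "B": [],
}
by_length = best_route(toy_graph, "A", "B", weight="length")
by_time = best_route(toy_graph, "A", "B", weight="time")
```

With these made-up numbers the two weightings pick different routes, which is the sense in which travel-time estimates permit optimal routing rather than only the shortest geographic path.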

City-scale Road Extraction from Satellite Imagery

Apr 22, 2019

Abstract:Automated road network extraction from remote sensing imagery remains a significant challenge despite its importance in a broad array of applications. To this end, we leverage recent open source advances and the high quality SpaceNet dataset to explore road network extraction at scale, an approach we call City-scale Road Extraction from Satellite Imagery (CRESI). Specifically, we create an algorithm to extract road networks directly from imagery over city-scale regions, which can subsequently be used for routing purposes. We quantify the performance of our algorithm with the APLS and TOPO graph-theoretic metrics over a diverse 608 square kilometer test area covering four cities. We find an aggregate score of APLS = 0.73, and a TOPO score of 0.58 (a significant improvement over existing methods). Inference speed is 160 square kilometers per hour on modest hardware.

SpaceNet MVOI: a Multi-View Overhead Imagery Dataset

Mar 28, 2019

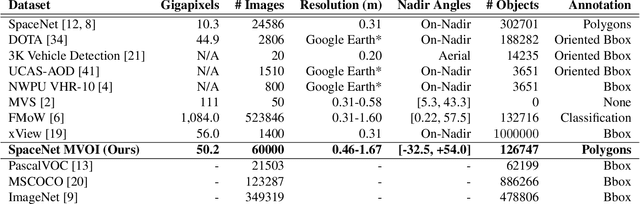

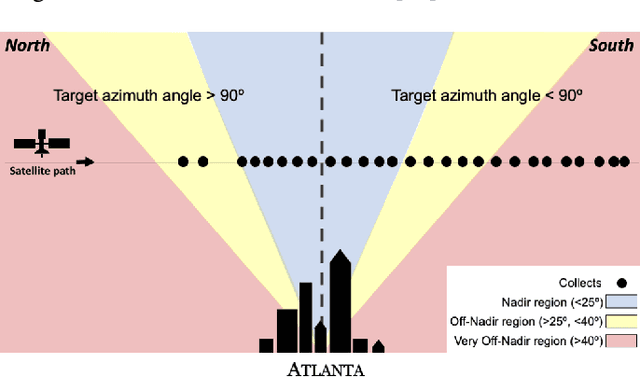

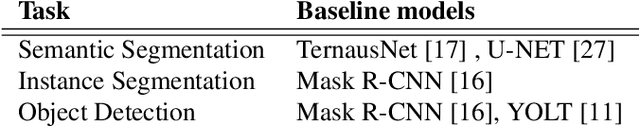

Abstract:Detection and segmentation of objects in overhead imagery is a challenging task. The variable density, random orientation, small size, and instance-to-instance heterogeneity of objects in overhead imagery calls for approaches distinct from existing models designed for natural scene datasets. Though new overhead imagery datasets are being developed, they almost universally comprise a single view taken from directly overhead ("at nadir"), failing to address one critical variable: look angle. By contrast, views vary in real-world overhead imagery, particularly in dynamic scenarios such as natural disasters where first looks are often over 40 degrees off-nadir. This represents an important challenge to computer vision methods, as changing view angle adds distortions, alters resolution, and changes lighting. At present, the impact of these perturbations for algorithmic detection and segmentation of objects is untested. To address this problem, we introduce the SpaceNet Multi-View Overhead Imagery (MVOI) Dataset, an extension of the SpaceNet open source remote sensing dataset. MVOI comprises 27 unique looks from a broad range of viewing angles (-32 to 54 degrees). These images cover the same geography and are annotated with 126,747 building footprint labels, enabling direct assessment of the impact of viewpoint perturbation on model performance. We benchmark multiple leading segmentation and object detection models on: (1) building detection, (2) generalization to unseen viewing angles and resolutions, and (3) sensitivity of building footprint extraction to changes in resolution. We find that segmentation and object detection models struggle to identify buildings in off-nadir imagery and generalize poorly to unseen views, presenting an important benchmark to explore the broadly relevant challenge of detecting small, heterogeneous target objects in visually dynamic contexts.
