James Mickens

Guillotine: Hypervisors for Isolating Malicious AIs

Apr 22, 2025

Abstract:As AI models become more embedded in critical sectors like finance, healthcare, and the military, their inscrutable behavior poses ever-greater risks to society. To mitigate this risk, we propose Guillotine, a hypervisor architecture for sandboxing powerful AI models -- models that, by accident or malice, can generate existential threats to humanity. Although Guillotine borrows some well-known virtualization techniques, Guillotine must also introduce fundamentally new isolation mechanisms to handle the unique threat model posed by existential-risk AIs. For example, a rogue AI may try to introspect upon hypervisor software or the underlying hardware substrate to enable later subversion of that control plane; thus, a Guillotine hypervisor requires careful co-design of the hypervisor software and the CPUs, RAM, NIC, and storage devices that support the hypervisor software, to thwart side channel leakage and more generally eliminate mechanisms for AI to exploit reflection-based vulnerabilities. Beyond such isolation at the software, network, and microarchitectural layers, a Guillotine hypervisor must also provide physical fail-safes more commonly associated with nuclear power plants, avionic platforms, and other types of mission critical systems. Physical fail-safes, e.g., involving electromechanical disconnection of network cables, or the flooding of a datacenter which holds a rogue AI, provide defense in depth if software, network, and microarchitectural isolation is compromised and a rogue AI must be temporarily shut down or permanently destroyed.

TinyML Security: Exploring Vulnerabilities in Resource-Constrained Machine Learning Systems

Nov 11, 2024

Abstract:Tiny Machine Learning (TinyML) systems, which enable machine learning inference on highly resource-constrained devices, are transforming edge computing but encounter unique security challenges. These devices, restricted by RAM and CPU capabilities two to three orders of magnitude smaller than conventional systems, make traditional software and hardware security solutions impractical. The physical accessibility of these devices exacerbates their susceptibility to side-channel attacks and information leakage. Additionally, TinyML models pose security risks, with weights potentially encoding sensitive data and query interfaces that can be exploited. This paper offers the first thorough survey of TinyML security threats. We present a device taxonomy that differentiates between IoT, EdgeML, and TinyML, highlighting vulnerabilities unique to TinyML. We list various attack vectors, assess their threat levels using the Common Vulnerability Scoring System, and evaluate both existing and possible defenses. Our analysis identifies where traditional security measures are adequate and where solutions tailored to TinyML are essential. Our results underscore the pressing need for specialized security solutions in TinyML to ensure robust and secure edge computing applications. We aim to inform the research community and inspire innovative approaches to protecting this rapidly evolving and critical field.

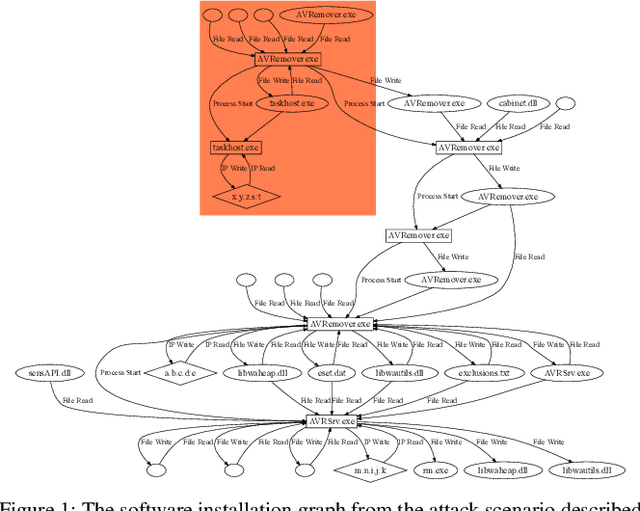

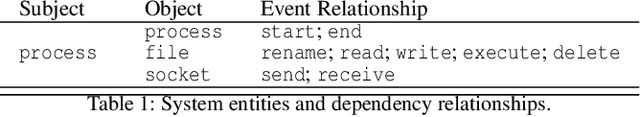

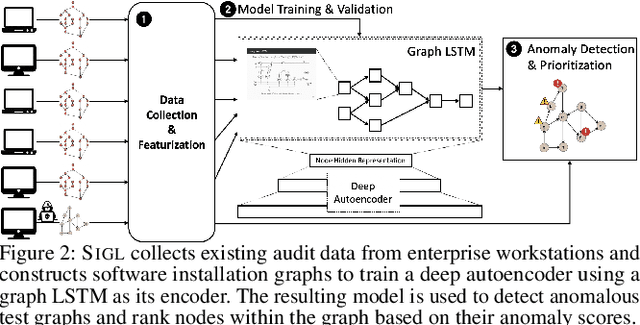

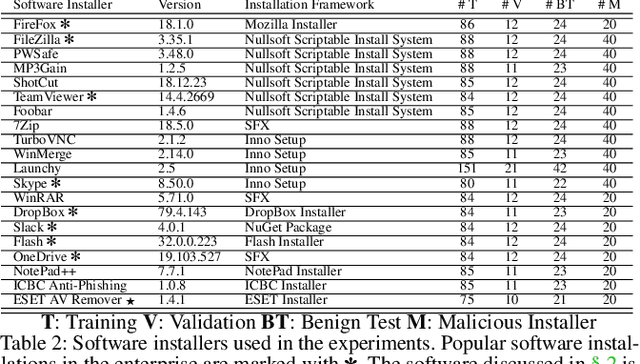

SIGL: Securing Software Installations Through Deep Graph Learning

Aug 26, 2020

Abstract:Many users implicitly assume that software can only be exploited after it is installed. However, recent supply-chain attacks demonstrate that application integrity must be ensured during installation itself. We introduce SIGL, a new tool for detecting malicious behavior during software installation. SIGL collects traces of system call activity, building a data provenance graph that it analyzes using a novel autoencoder architecture with a graph long short-term memory network (graph LSTM) for the encoder and a standard multilayer perceptron for the decoder. SIGL flags suspicious installations as well as the specific installation-time processes that are likely to be malicious. Using a test corpus of 625 malicious installers containing real-world malware, we demonstrate that SIGL has a detection accuracy of 96%, outperforming similar systems from industry and academia by up to 87% in precision and recall and 45% in accuracy. We also demonstrate that SIGL can pinpoint the processes most likely to have triggered malicious behavior, works on different audit platforms and operating systems, and is robust to training data contamination and adversarial attack. It can be used with application-specific models, even in the presence of new software versions, as well as application-agnostic meta-models that encompass a wide range of applications and installers.
