Jaime Arguello

Overview of the TREC 2025 Tip-of-the-Tongue track

Jan 28, 2026

Abstract: Tip-of-the-tongue (ToT) known-item retrieval involves re-finding an item for which the searcher does not reliably recall an identifier. ToT information requests (or queries) are verbose and tend to include several complex phenomena, making them especially difficult for existing information retrieval systems. The TREC 2025 ToT track focused on a single ad-hoc retrieval task. This year, we extended the track to the general domain and incorporated different sets of test queries from diverse sources, namely the MS-ToT dataset, manual topic development, and LLM-based synthetic query generation. In total, 9 groups (including the track coordinators) submitted 32 runs.

Tip of the Tongue Query Elicitation for Simulated Evaluation

Feb 25, 2025

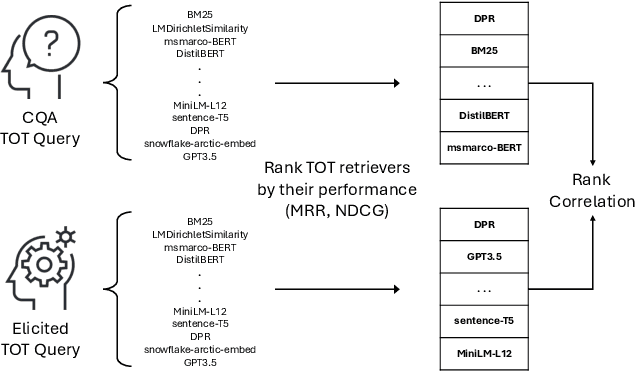

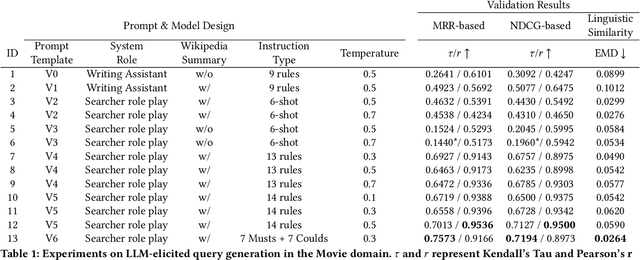

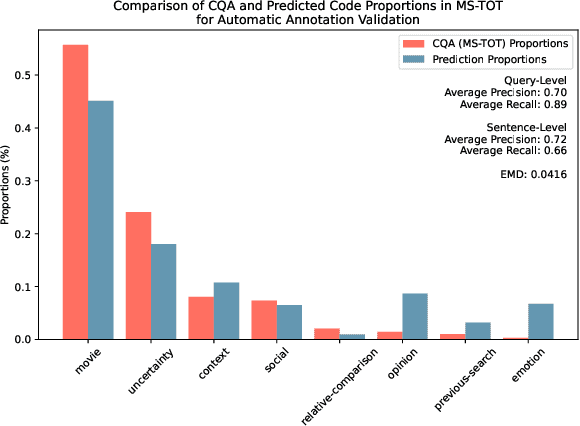

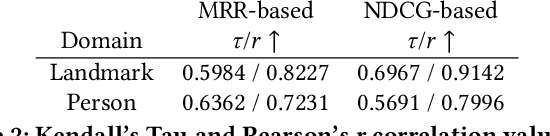

Abstract: Tip-of-the-tongue (TOT) search occurs when a user struggles to recall a specific identifier, such as a document title. While common, existing search systems often fail to effectively support TOT scenarios. Research on TOT retrieval is further constrained by the challenge of collecting queries, as current approaches rely heavily on community question-answering (CQA) websites, leading to labor-intensive evaluation and domain bias. To overcome these limitations, we introduce two methods for eliciting TOT queries - leveraging large language models (LLMs) and human participants - to facilitate simulated evaluations of TOT retrieval systems. Our LLM-based TOT user simulator generates synthetic TOT queries at scale, achieving high correlations with how CQA-based TOT queries rank TOT retrieval systems when tested in the Movie domain. Additionally, these synthetic queries exhibit high linguistic similarity to CQA-derived queries. For human-elicited queries, we developed an interface that uses visual stimuli to place participants in a TOT state, enabling the collection of natural queries. In the Movie domain, system rank correlation and linguistic similarity analyses confirm that human-elicited queries are both effective and closely resemble CQA-based queries. These approaches reduce reliance on CQA-based data collection while expanding coverage to underrepresented domains, such as Landmark and Person. LLM-elicited queries for the Movie, Landmark, and Person domains have been released as test queries in the TREC 2024 TOT track, with human-elicited queries scheduled for inclusion in the TREC 2025 TOT track. Additionally, we provide source code for synthetic query generation and the human query collection interface, along with curated visual stimuli used for eliciting TOT queries.

Why is "Problems" Predictive of Positive Sentiment? A Case Study of Explaining Unintuitive Features in Sentiment Classification

Jun 05, 2024

Abstract: Explainable AI (XAI) algorithms aim to help users understand how a machine learning model makes predictions. To this end, many approaches explain which input features are most predictive of a target label. However, such explanations can still be puzzling to users (e.g., in product reviews, the word "problems" is predictive of positive sentiment). If left unexplained, puzzling explanations can have negative impacts. Explaining unintuitive associations between an input feature and a target label is an underexplored area in XAI research. We take an initial effort in this direction using unintuitive associations learned by sentiment classifiers as a case study. We propose approaches for (1) automatically detecting associations that can appear unintuitive to users and (2) generating explanations to help users understand why an unintuitive feature is predictive. Results from a crowdsourced study (N=300) found that our proposed approaches can effectively detect and explain predictive but unintuitive features in sentiment classification.

How does AI chat change search behaviors?

Jul 07, 2023

Abstract: Generative AI tools such as ChatGPT are poised to change the way people engage with online information. Recently, Microsoft announced their "new Bing" search system which incorporates chat and generative AI technology from OpenAI. Google has announced plans to deploy search interfaces that incorporate similar types of technology. These new technologies will transform how people can search for information. The research presented here is an early investigation into how people make use of a generative AI chat system (referred to simply as chat from here on) as part of a search process, and how the incorporation of chat systems with existing search tools may affect users' search behaviors and strategies. We report on an exploratory user study with 10 participants who used a combined Chat+Search system that utilized the OpenAI GPT-3.5 API and the Bing Web Search v5 API. Participants completed three search tasks. In this pre-print paper of preliminary results, we report on ways that users integrated AI chat into their search process, things they liked and disliked about the chat system, their trust in the chat responses, and their mental models of how the chat system generated responses.

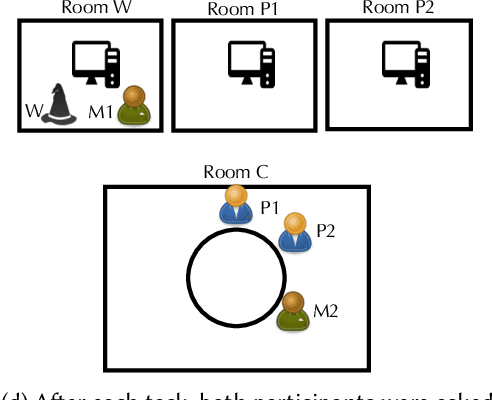

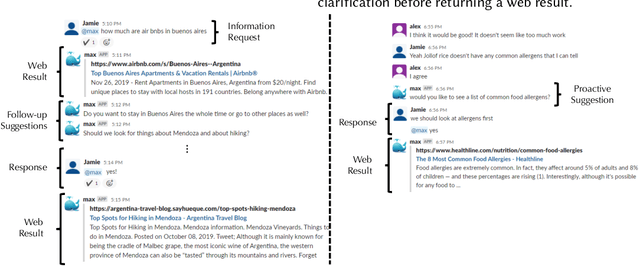

Why and When: Understanding System Initiative during Conversational Collaborative Search

Mar 23, 2023

Abstract: In the last decade, conversational search has attracted considerable attention. However, most research has focused on systems that can support a single searcher. In this paper, we explore how systems can support multiple searchers collaborating over an instant messaging platform (i.e., Slack). We present a "Wizard of Oz" study in which 27 participant pairs collaborated on three information-seeking tasks over Slack. Participants were unable to search on their own and had to gather information by interacting with a searchbot directly from the Slack channel. The role of the searchbot was played by a reference librarian. Conversational search systems must be capable of engaging in mixed-initiative interaction by taking and relinquishing control of the conversation to fulfill different objectives. Discourse analysis research suggests that conversational agents can take two levels of initiative: dialog- and task-level initiative. Agents take dialog-level initiative to establish mutual belief between agents and task-level initiative to influence the goals of the other agents. During the study, participants were exposed to three experimental conditions in which the searchbot could take different levels of initiative: (1) no initiative, (2) only dialog-level initiative, and (3) both dialog- and task-level initiative. In this paper, we focus on understanding the Wizard's actions. Specifically, we focus on understanding the Wizard's motivations for taking initiative and their rationale for the timing of each intervention. To gain insights about the Wizard's actions, we conducted a stimulated recall interview with the Wizard. We present findings from a qualitative analysis of this interview data and discuss implications for designing conversational search systems to support collaborative search.

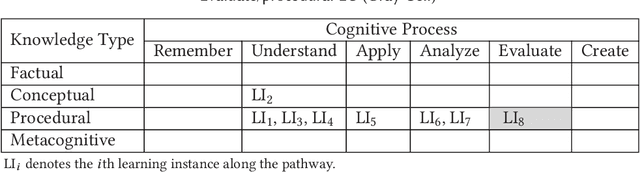

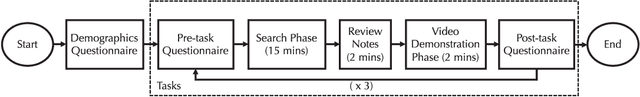

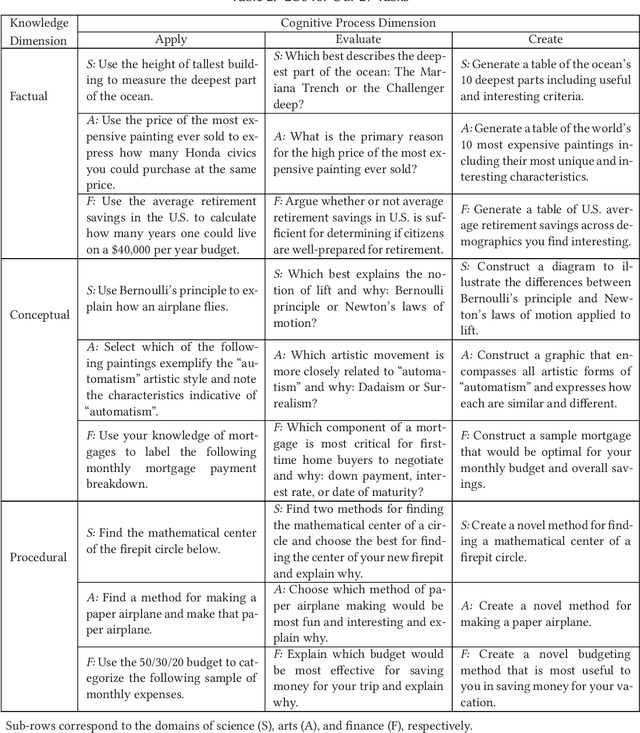

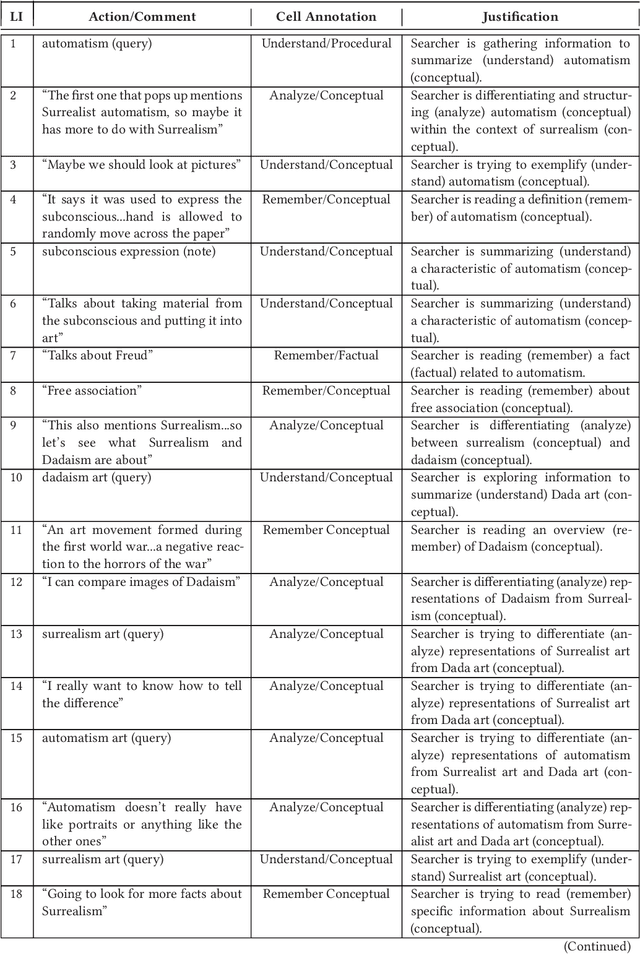

Understanding the "Pathway" Towards a Searcher's Learning Objective

Aug 15, 2022

Abstract: Search systems are often used to support learning-oriented goals. This trend has given rise to the "search-as-learning" movement, which proposes that search systems should be designed to support learning. To this end, an important research question is: How does a searcher's type of learning objective influence their trajectory (or pathway) towards that objective? We report on a lab study (N=36) in which participants gathered information to meet a specific type of learning objective. To characterize learning objectives and pathways, we leveraged Anderson and Krathwohl's (A&K's) taxonomy. Participants completed learning-oriented search tasks that varied along three cognitive processes (apply, evaluate, create) and three knowledge types (factual, conceptual, procedural knowledge). A pathway is defined as a sequence of learning instances (e.g., subgoals) that were also each classified into cells from A&K's taxonomy. Our study used a think-aloud protocol, and pathways were generated through a qualitative analysis of participants' think-aloud comments and recorded screen activities. We investigate three research questions. First, in RQ1, we study the impact of the learning objective on pathway characteristics (e.g., pathway length). Second, in RQ2, we study the impact of the learning objective on the types of A&K cells traversed along the pathway. Third, in RQ3, we study common and uncommon transitions between A&K cells along pathways conditioned on the knowledge type of the objective. We discuss implications of our results for designing search systems to support learning.

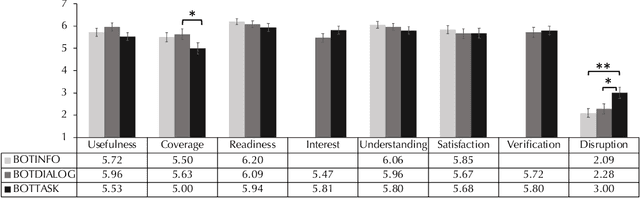

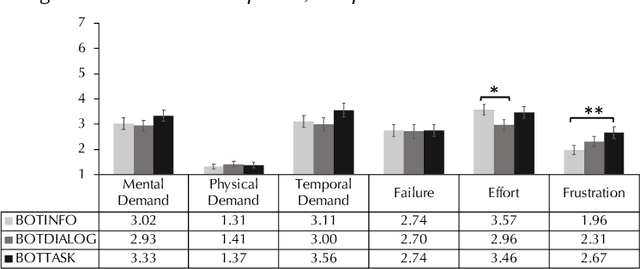

The Effects of System Initiative during Conversational Collaborative Search

Feb 20, 2022

Abstract: Our research in this paper lies at the intersection of collaborative and conversational search. We report on a Wizard of Oz lab study in which 27 pairs of participants collaborated on search tasks over the Slack messaging platform. To complete tasks, pairs of collaborators interacted with a so-called searchbot with conversational capabilities. The role of the searchbot was played by a reference librarian. It is widely accepted that conversational search systems should be able to engage in mixed-initiative interaction, that is, to take and relinquish control of a multi-agent conversation as appropriate. Research in discourse analysis differentiates between dialog- and task-level initiative. Taking dialog-level initiative involves leading a conversation for the sole purpose of establishing mutual belief between agents. Conversely, taking task-level initiative involves leading a conversation with the intent to influence the goals of the other agent(s). Participants in our study experienced three searchbot conditions, which varied based on the level of initiative the human searchbot was able to take: (1) no initiative, (2) only dialog-level initiative, and (3) both dialog- and task-level initiative. We investigate the effects of the searchbot condition on six different types of outcomes: (RQ1) perceptions of the searchbot's utility, (RQ2) perceptions of workload, (RQ3) perceptions of the collaboration, (RQ4) patterns of communication and collaboration, and perceived (RQ5) benefits and (RQ6) challenges from engaging with the searchbot.

Tip of the Tongue Known-Item Retrieval: A Case Study in Movie Identification

Jan 18, 2021

Abstract: While current information retrieval systems are effective for known-item retrieval where the searcher provides a precise name or identifier for the item being sought, systems tend to be much less effective for cases where the searcher is unable to express a precise name or identifier. We refer to this as tip of the tongue (TOT) known-item retrieval, named after the cognitive state of not being able to retrieve an item from memory. Using movie search as a case study, we explore the characteristics of questions posed by searchers in TOT states on a community question answering website. We analyze how searchers express their information needs during TOT states in the movie domain. Specifically, what information do searchers remember about the item being sought and how do they convey this information? Our results suggest that searchers use a combination of information about: (1) the content of the item sought, (2) the context in which they previously engaged with the item, and (3) previous attempts to find the item using other resources (e.g., search engines). Additionally, searchers convey information by sometimes expressing uncertainty (i.e., hedging), opinions, emotions, and by performing relative (vs. absolute) comparisons with attributes of the item. As a result of our analysis, we believe that searchers in TOT states may require specialized query understanding methods or document representations. Finally, our preliminary retrieval experiments show the impact of each information type presented in information requests on retrieval performance.
