Jaideep Srivastava

The Psychology of Falsehood: A Human-Centric Survey of Misinformation Detection

Sep 19, 2025

Abstract:Misinformation remains one of the most significant issues in the digital age. While automated fact-checking has emerged as a viable solution, most current systems are limited to evaluating factual accuracy. However, the detrimental effect of misinformation transcends simple falsehoods; it takes advantage of how individuals perceive, interpret, and emotionally react to information. This underscores the need to move beyond factuality and adopt more human-centered detection frameworks. In this survey, we explore the evolving interplay between traditional fact-checking approaches and psychological concepts such as cognitive biases, social dynamics, and emotional responses. By analyzing state-of-the-art misinformation detection systems through the lens of human psychology and behavior, we reveal critical limitations of current methods and identify opportunities for improvement. Additionally, we outline future research directions aimed at creating more robust and adaptive frameworks, such as neuro-behavioural models that integrate technological factors with the complexities of human cognition and social influence. These approaches offer promising pathways to more effectively detect and mitigate the societal harms of misinformation.

Enhancing In-Hospital Mortality Prediction Using Multi-Representational Learning with LLM-Generated Expert Summaries

Nov 25, 2024

Abstract:In-hospital mortality (IHM) prediction for ICU patients is critical for timely interventions and efficient resource allocation. While structured physiological data provides quantitative insights, clinical notes offer unstructured, context-rich narratives. This study integrates these modalities with Large Language Model (LLM)-generated expert summaries to improve IHM prediction accuracy. Using the MIMIC-III database, we analyzed time-series physiological data and clinical notes from the first 48 hours of ICU admission. Clinical notes were concatenated chronologically for each patient and transformed into expert summaries using Med42-v2 70B. A multi-representational learning framework was developed to integrate these data sources, leveraging LLMs to enhance textual data while mitigating direct reliance on LLM predictions, which can introduce challenges in uncertainty quantification and interpretability. The proposed model achieved an AUPRC of 0.6156 (+36.41%) and an AUROC of 0.8955 (+7.64%) compared to a time-series-only baseline. Expert summaries outperformed clinical notes or time-series data alone, demonstrating the value of LLM-generated knowledge. Performance gains were consistent across demographic groups, with notable improvements in underrepresented populations, underscoring the framework's equitable application potential. By integrating LLM-generated summaries with structured and unstructured data, the framework captures complementary patient information, significantly improving predictive performance. This approach showcases the potential of LLMs to augment critical care prediction models, emphasizing the need for domain-specific validation and advanced integration strategies for broader clinical adoption.

LoDIP: Low light phase retrieval with deep image prior

Feb 27, 2024

Abstract:Phase retrieval (PR) is a fundamental challenge in scientific imaging, enabling nanoscale techniques like coherent diffractive imaging (CDI). Imaging at low radiation doses becomes important in applications where samples are susceptible to radiation damage. However, most PR methods struggle in low-dose scenarios because of very high shot noise. Advancements in the optical data acquisition setup, exemplified by in-situ CDI, have shown potential for low-dose imaging, but these depend on a time series of measurements, rendering them unsuitable for single-image applications. Similarly, on the computational front, data-driven phase retrieval techniques are not readily adaptable to the single-image context. Deep-learning-based single-image methods, such as deep image prior, have been effective for various imaging tasks but have exhibited limited success when applied to PR. In this work, we propose LoDIP, which combines the in-situ CDI setup with the power of implicit neural priors to tackle the problem of single-image low-dose phase retrieval. Quantitative evaluations demonstrate the superior performance of LoDIP on this task as well as its applicability to real experimental scenarios.

Explain Variance of Prediction in Variational Time Series Models for Clinical Deterioration Prediction

Feb 15, 2024

Abstract:Missingness and measurement frequency are two sides of the same coin. How frequently should we measure clinical variables and conduct laboratory tests? It depends on many factors, such as the stability of the patient's condition, the diagnostic process, the treatment plan, and measurement costs. The utility of measurements varies disease by disease and patient by patient. In this study, we propose a novel view of clinical variable measurement frequency from a predictive modeling perspective, namely that measurements of clinical variables reduce uncertainty in model predictions. To this end, we propose variance SHAP with variational time series models, an application of the SHapley Additive exPlanations (SHAP) algorithm to attribute epistemic prediction uncertainty. The prediction variance is estimated by sampling the conditional hidden space in variational models and can be approximated deterministically by the delta method. This approach works with variational time series models such as variational recurrent neural networks and variational transformers. Since SHAP values are additive, the variance SHAP of binary data imputation masks can be directly interpreted as the contribution of measurements to prediction variance. We tested our ideas on a public ICU dataset with a deterioration prediction task and studied the relation between variance SHAP and measurement time intervals.
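
For readers unfamiliar with the two ingredients named in this abstract, the following is a minimal sketch of the standard first-order delta method and of SHAP additivity applied to a variance function. The symbols are assumptions introduced here for illustration and do not come from the paper: $f$ is the map from the hidden variable $z$ to the prediction, $\mu$ and $\Sigma$ are the mean and covariance of the conditional hidden distribution, $v(x)$ is the prediction variance treated as the function being explained, and $\phi_i$ is the attribution to input feature $i$ (for example, one imputation-mask entry). The paper's exact formulation may differ.

```latex
% First-order delta method: propagate the hidden-state covariance through f
\operatorname{Var}\big[f(z)\big] \;\approx\; \nabla f(\mu)^{\top}\, \Sigma\, \nabla f(\mu),
\qquad z \sim \mathcal{N}(\mu, \Sigma)

% SHAP additivity (local accuracy) applied to the variance function v
v(x) \;=\; \phi_0 + \sum_{i=1}^{M} \phi_i
```

Under this reading, a negative $\phi_i$ for a mask feature would mean that taking the corresponding measurement lowers the predicted variance relative to the baseline $\phi_0$.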

A Kalman Filter Based Framework for Monitoring the Performance of In-Hospital Mortality Prediction Models Over Time

Feb 09, 2024

Abstract:Unlike in a clinical trial, where researchers can determine the minimum number of positive and negative samples required, or in a machine learning study, where the size and class distribution of the validation set are static and known, in a real-world scenario there is little control over the size and distribution of incoming patients. As a result, when measured during different time periods, evaluation metrics like the Area Under the Receiver Operating Characteristic Curve (AUCROC) and the Area Under the Precision-Recall Curve (AUCPR) may not be directly comparable. Therefore, in this study, for binary classifiers running over a long time period, we propose to adjust these performance metrics for sample size and class distribution, so that a fair comparison can be made between two time periods. Note that the number of samples and the class distribution, namely the ratio of positive samples, are two robustness factors that affect the variance of AUCROC. To better estimate the mean of performance metrics and understand the change of performance over time, we propose a Kalman filter based framework with extrapolated variance adjusted for the total number of samples and the number of positive samples during different time periods. The efficacy of this method is demonstrated first on a synthetic dataset and then retrospectively applied to a 2-day-ahead in-hospital mortality prediction model for COVID-19 patients during 2021 and 2022. Further, we conclude that our prediction model is not significantly affected by the evolution of the disease, improved treatments, and changes in hospital operational plans.
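
The idea of weighting each period's metric by how reliable it is can be sketched with a scalar Kalman filter. This is an illustrative sketch only, not the authors' framework: the abstract does not say how the variance is extrapolated, so the Hanley-McNeil (1982) approximation for the variance of an AUC is swapped in as an assumption, and the function names, the random-walk state model, and the `process_var` value are all hypothetical.

```python
def auc_variance(auc, n_pos, n_neg):
    """Hanley-McNeil approximation to the variance of an AUC estimate.

    Assumption: used here as a stand-in for the paper's variance adjustment.
    """
    q1 = auc / (2.0 - auc)
    q2 = 2.0 * auc ** 2 / (1.0 + auc)
    numerator = (
        auc * (1.0 - auc)
        + (n_pos - 1) * (q1 - auc ** 2)
        + (n_neg - 1) * (q2 - auc ** 2)
    )
    return numerator / (n_pos * n_neg)


def kalman_track(aucs, n_pos, n_neg, process_var=1e-4):
    """Filter a sequence of per-period AUCs with a random-walk state model.

    Periods with few samples or few positives get a larger measurement
    variance and therefore move the filtered mean less.
    """
    mean = aucs[0]
    var = auc_variance(aucs[0], n_pos[0], n_neg[0])
    means, variances = [mean], [var]
    for a, p, n in zip(aucs[1:], n_pos[1:], n_neg[1:]):
        var_pred = var + process_var          # predict step (random walk)
        meas_var = auc_variance(a, p, n)      # period-specific noise
        gain = var_pred / (var_pred + meas_var)
        mean = mean + gain * (a - mean)       # update step
        var = (1.0 - gain) * var_pred
        means.append(mean)
        variances.append(var)
    return means, variances


# Hypothetical monthly AUCs with very different cohort sizes.
aucs = [0.85, 0.78, 0.86, 0.84]
n_pos = [120, 8, 150, 90]
n_neg = [1400, 95, 1600, 1100]
means, variances = kalman_track(aucs, n_pos, n_neg)
```

In this toy run the second period has only 8 positive samples, so its low AUC is treated as a noisy observation and pulls the filtered mean down only slightly.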

Which Modality should I use -- Text, Motif, or Image? : Understanding Graphs with Large Language Models

Nov 16, 2023

Abstract:Large language models (LLMs) are revolutionizing various fields by leveraging large text corpora for context-aware intelligence. Due to the context size, however, encoding an entire graph with LLMs is fundamentally limited. This paper explores how to better integrate graph data with LLMs and presents a novel approach using various encoding modalities (e.g., text, image, and motif) and approximation of global connectivity of a graph using different prompting methods to enhance LLMs' effectiveness in handling complex graph structures. The study also introduces GraphTMI, a new benchmark for evaluating LLMs in graph structure analysis, focusing on factors such as homophily, motif presence, and graph difficulty. Key findings reveal that image modality, supported by advanced vision-language models like GPT-4V, is more effective than text in managing token limits while retaining critical information. The research also examines the influence of different factors on each encoding modality's performance. This study highlights the current limitations and charts future directions for LLMs in graph understanding and reasoning tasks.

Filling out the missing gaps: Time Series Imputation with Semi-Supervised Learning

Apr 09, 2023

Abstract:Missing data in time series is a challenging issue affecting time series analysis. Missing data occurs due to problems like data drops or sensor malfunctions. Imputation methods are used to fill in these values, and the quality of imputation has a significant impact on downstream tasks like classification. In this work, we propose a semi-supervised imputation method, ST-Impute, that uses both unlabeled data and the downstream task's labeled data. ST-Impute is based on sparse self-attention and trains on tasks that mimic the imputation process. Our results indicate that the proposed method outperforms existing supervised and unsupervised time series imputation methods, as measured both by imputation quality and by performance on downstream tasks that ingest the imputed time series.

Embarrassingly Simple MixUp for Time-series

Apr 09, 2023

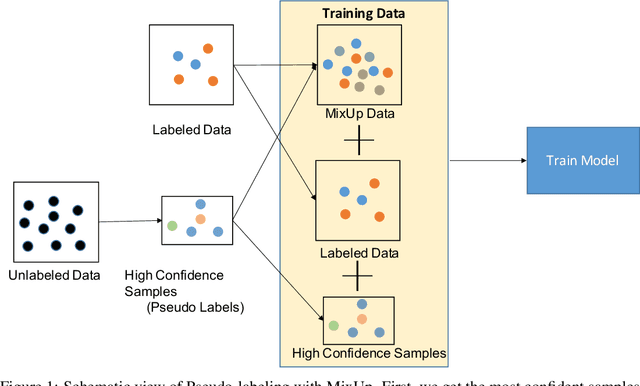

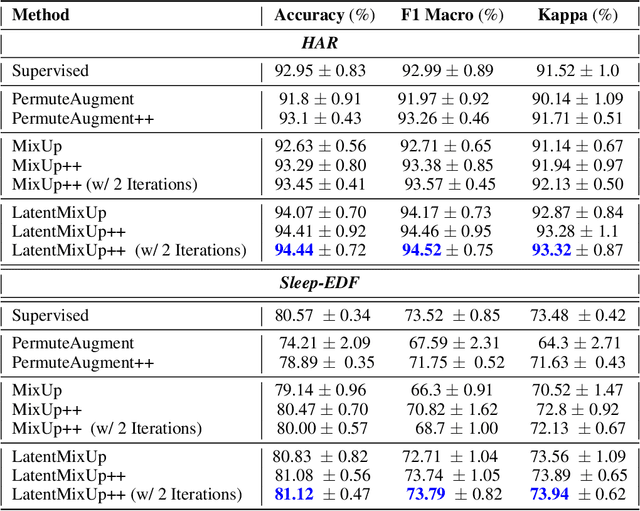

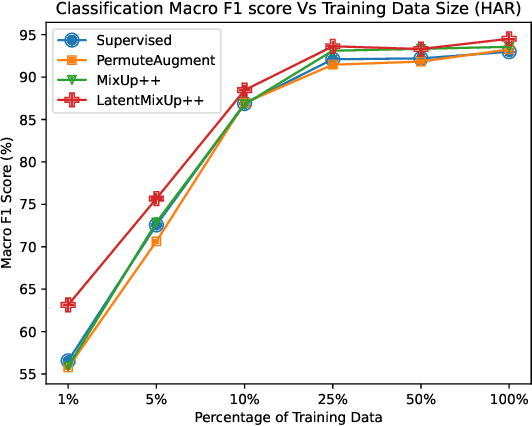

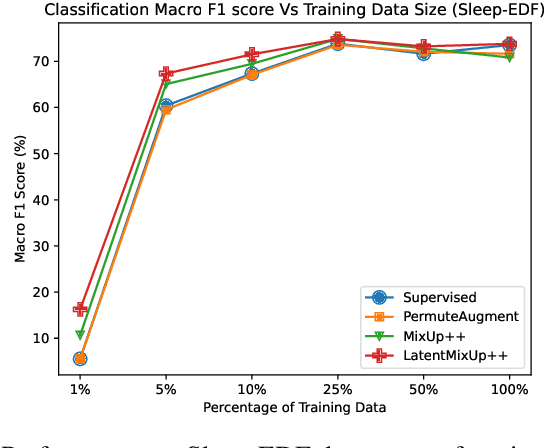

Abstract:Labeling time series data is an expensive task because of the domain expertise required and the dynamic nature of the data. Hence, we often have to deal with limited labeled data settings. Data augmentation techniques have been successfully deployed in domains like computer vision to make better use of existing labeled data. We adapt one of the most commonly used techniques, MixUp, to the time series domain. Our proposed methods, MixUp++ and LatentMixUp++, use simple modifications to perform interpolation in the raw time series and in the classification model's latent space, respectively. We also extend these methods with semi-supervised learning to exploit unlabeled data. With LatentMixUp++, we observe significant improvements of 1% to 15% in time series classification on two public datasets, in both low and high labeled data regimes.
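
As background for the interpolation this abstract refers to, here is a minimal sketch of standard MixUp applied to a batch of raw time series. It is not MixUp++ or LatentMixUp++, whose modifications the abstract does not spell out; the function name, array shapes, and `alpha` value are assumptions chosen for illustration.

```python
import numpy as np


def mixup_batch(x, y, alpha=0.2, rng=None):
    """Standard MixUp: convex combination of shuffled pairs in a batch.

    x: array of shape (batch, time, channels) holding raw time series.
    y: array of shape (batch, classes) holding one-hot labels.
    Returns the mixed inputs, the mixed (soft) labels, and the mixing weight.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)        # mixing weight in [0, 1]
    perm = rng.permutation(len(x))      # partner index for each sample
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y + (1.0 - lam) * y[perm]
    return x_mix, y_mix, lam


# Hypothetical batch: 4 series, 10 time steps, 2 channels, 3 classes.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 10, 2))
y = np.eye(3)[[0, 1, 2, 1]]
x_mix, y_mix, lam = mixup_batch(x, y, rng=rng)
```

The same interpolation could in principle be applied to hidden representations instead of raw inputs, which is the general idea behind mixing in a model's latent space.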

Hierarchical clustering by aggregating representatives in sub-minimum-spanning-trees

Nov 11, 2021

Abstract:One of the main challenges for hierarchical clustering is how to appropriately identify the representative points in the lower level of the cluster tree, which are then used as the roots in the higher level of the cluster tree for further aggregation. However, conventional hierarchical clustering approaches have adopted simple tricks to select the "representative" points, which might not be representative enough. As a result, the constructed cluster tree suffers from poor robustness and weak reliability. To address this issue, we propose a novel hierarchical clustering algorithm in which, while building the clustering dendrogram, we can effectively detect the representative point by scoring the reciprocal nearest data points in each sub-minimum-spanning-tree. Extensive experiments on UCI datasets show that the proposed algorithm is more accurate than other benchmarks. Meanwhile, under our analysis, the proposed algorithm has O(n log n) time complexity and O(log n) space complexity, indicating that it can scale to massive data with less time and storage consumption.
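
The notion of reciprocal nearest data points used above can be illustrated with a short sketch: two points are reciprocal nearest neighbors when each is the other's closest point. This is only the building block, not the paper's scoring or sub-minimum-spanning-tree construction, and the function name is hypothetical. The brute-force distance matrix below costs O(n^2) time and memory, so it does not reflect the complexity claimed in the abstract.

```python
import numpy as np


def reciprocal_nearest_pairs(points):
    """Return index pairs (i, j), i < j, that are each other's nearest neighbor."""
    pts = np.asarray(points, dtype=float)
    # Pairwise Euclidean distances; the diagonal is masked so a point
    # is never its own nearest neighbor.
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)
    nearest = dist.argmin(axis=1)
    return [
        (i, int(j))
        for i, j in enumerate(nearest)
        if nearest[j] == i and i < j
    ]


# Two tight pairs plus one point whose nearest neighbor does not reciprocate.
points = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.2, 5.0], [2.0, 2.0]]
pairs = reciprocal_nearest_pairs(points)
```

Here the fifth point is closest to the second point, but the second point is closer to the first, so the fifth point belongs to no reciprocal pair.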

PARIS: Personalized Activity Recommendation for Improving Sleep Quality

Oct 26, 2021

Abstract:The quality of sleep has a deep impact on people's physical and mental health. People with insufficient sleep are more likely to report physical and mental distress, activity limitation, anxiety, and pain. Moreover, in the past few years, there has been an explosion of applications and devices for activity monitoring and health tracking. Signals collected from these wearable devices can be used to study and improve sleep quality. In this paper, we use the relationship between physical activity and sleep quality to find ways of helping people improve their sleep using machine learning techniques. People usually have several behavior modes into which their bio-functions can be divided. By performing time series clustering on activity data, we find cluster centers that correspond to the most evident behavior modes for a specific subject. Activity recipes for good sleep quality are then generated for each behavior mode within each cluster. These activity recipes are supplied to an activity recommendation engine that suggests a mix of relaxed to intense activities to subjects during their daily routines. The recommendations are further personalized based on the subjects' lifestyle constraints, i.e., their age, gender, body mass index (BMI), resting heart rate, etc., with the objective of improving that night's quality of sleep. This would in turn serve a longer-term health objective, such as lowering heart rate or improving the overall quality of sleep.
