Jack Tumblin

Can Deep Learning Assist Automatic Identification of Layered Pigments From XRF Data?

Jul 26, 2022

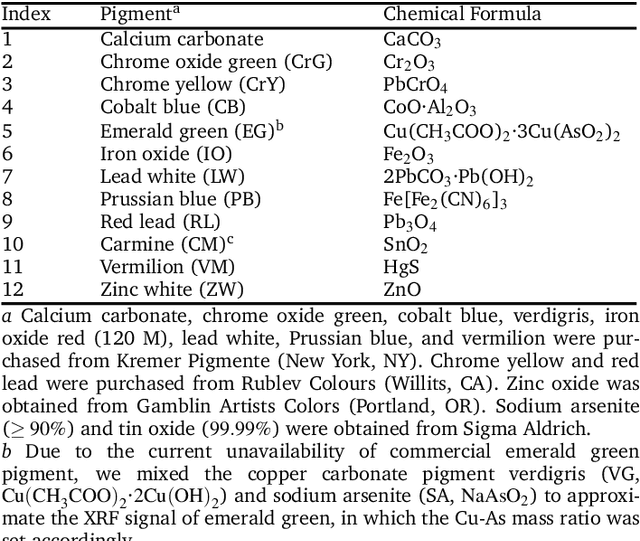

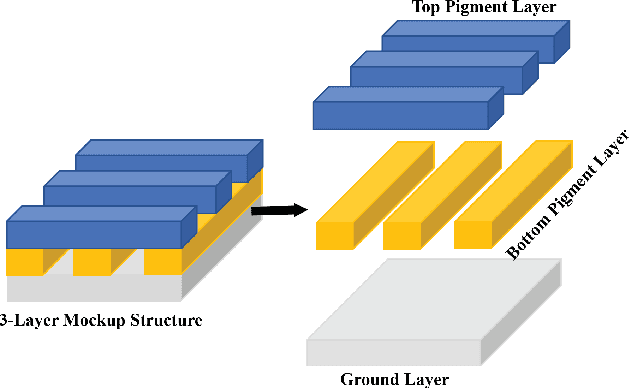

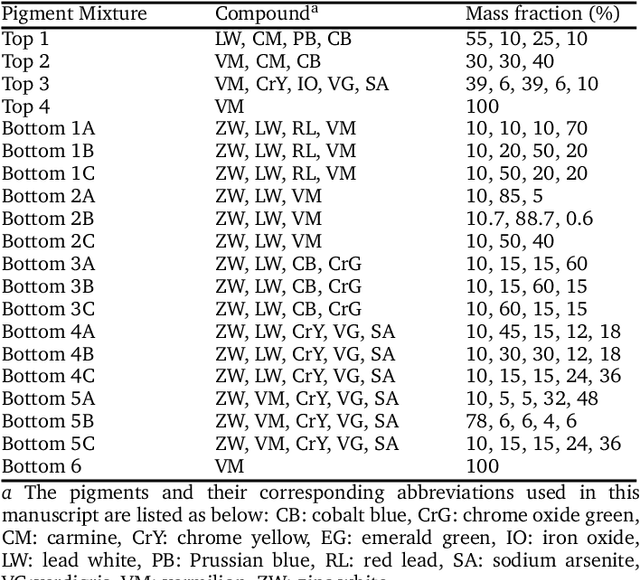

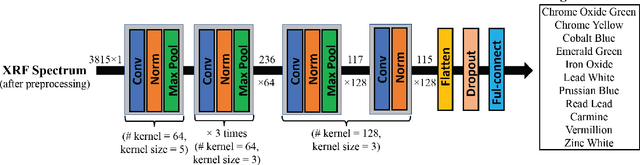

Abstract: X-ray fluorescence spectroscopy (XRF) plays an important role in elemental analysis across a wide range of scientific fields, especially in cultural heritage. XRF imaging, which uses a raster scan to acquire spectra across artworks, enables spatial analysis of pigment distributions based on their elemental composition. However, conventional XRF-based pigment identification relies on time-consuming elemental mapping based on expert interpretation of the measured spectra. To reduce the reliance on manual work, recent studies have applied machine learning techniques to cluster similar XRF spectra and to identify the most likely pigments. Nevertheless, it is still challenging for automatic pigment identification strategies to directly handle the complex structure of real paintings, e.g. pigment mixtures and layered pigments. In addition, pixel-wise pigment identification from XRF imaging remains difficult because single-pixel spectra have a high noise level compared with averaged spectra. Therefore, we developed a deep-learning-based end-to-end framework that fully automates the pigment identification process. In particular, it offers high sensitivity to underlying pigments and to pigments at low concentration, enabling satisfactory pigment mapping from single-pixel XRF spectra. As case studies, we applied our framework to lab-prepared mock-up paintings and two 19th-century paintings: Paul Gauguin's Poèmes Barbares (1896), which contains layered pigments over an underlying painting, and Paul Cezanne's The Bathers (1899-1904). The pigment identification results were comparable to those obtained by elemental mapping, suggesting the generalizability and stability of our model.

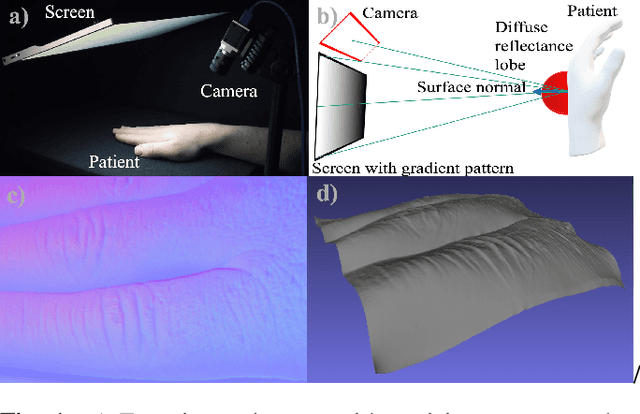

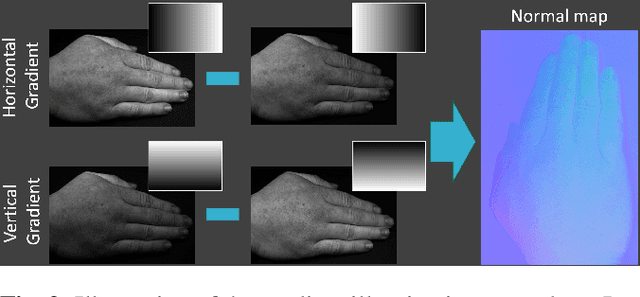

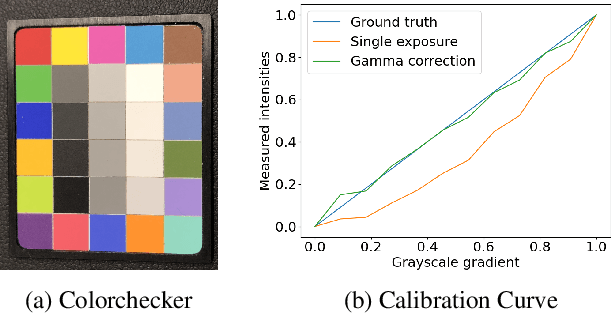

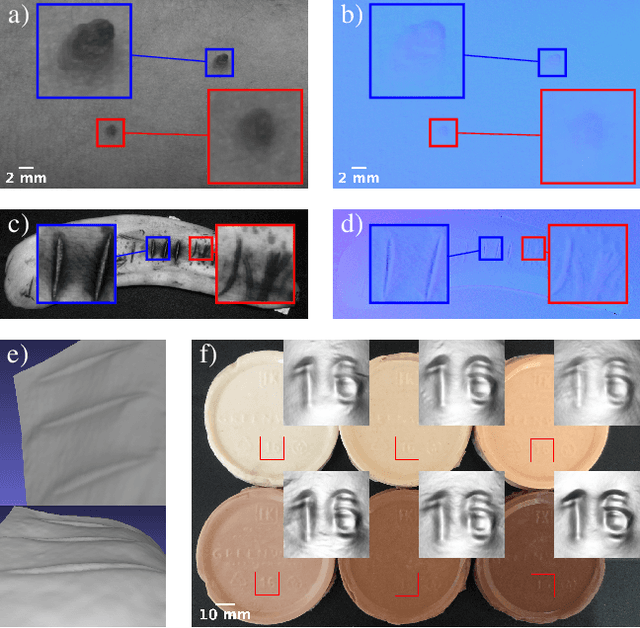

SkinScan: Low-Cost 3D-Scanning for Dermatologic Diagnosis and Documentation

Jan 31, 2021

Abstract: Computational photography is becoming increasingly essential in the medical field. Today, imaging techniques for dermatology range from two-dimensional (2D) color imagery captured with a mobile device to professional clinical imaging systems that additionally measure detailed three-dimensional (3D) data. The latter are commonly expensive and not accessible to a broad audience. In this work, we propose a novel system and software framework that relies only on low-cost (and even mobile) commodity devices present in every household to measure detailed 3D information of the human skin with a 3D-gradient-illumination-based method. We believe that our system has great potential for early-stage diagnosis and monitoring of skin diseases, especially in densely populated or underdeveloped areas.
