Ivan Zakazov

Cmprsr: Abstractive Token-Level Question-Agnostic Prompt Compressor

Nov 15, 2025

Abstract:Motivated by the high costs of using black-box Large Language Models (LLMs), we introduce a novel prompt compression paradigm, under which we use smaller LLMs to compress inputs for the larger ones. We present the first comprehensive LLM-as-a-compressor benchmark spanning 25 open- and closed-source models, which reveals significant disparity in models' compression ability in terms of (i) preserving semantically important information and (ii) following the user-provided compression rate (CR). We further improve the performance of gpt-4.1-mini, the best overall vanilla compressor, with Textgrad-based compression meta-prompt optimization. We also identify the most promising open-source vanilla LLM - Qwen3-4B - and post-train it with a combination of supervised fine-tuning (SFT) and Group Relative Policy Optimization (GRPO), pursuing the dual objective of CR adherence and maximizing the downstream task performance. We call the resulting model Cmprsr and demonstrate its superiority over both extractive and vanilla abstractive compression across the entire range of compression rates on lengthy inputs from MeetingBank and LongBench as well as short prompts from GSM8k. The latter highlights Cmprsr's generalizability across varying input lengths and domains. Moreover, Cmprsr closely follows the requested compression rate, offering fine control over the cost-quality trade-off.

TRPrompt: Bootstrapping Query-Aware Prompt Optimization from Textual Rewards

Jul 24, 2025

Abstract:Prompt optimization improves the reasoning abilities of large language models (LLMs) without requiring parameter updates to the target model. Following heuristic-based "Think step by step" approaches, the field has evolved in two main directions: while one group of methods uses textual feedback to elicit improved prompts from general-purpose LLMs in a training-free way, a concurrent line of research relies on numerical rewards to train a special prompt model, tailored for providing optimal prompts to the target model. In this paper, we introduce the Textual Reward Prompt framework (TRPrompt), which unifies these approaches by directly incorporating textual feedback into the training of the prompt model. Our framework does not require prior dataset collection and is iteratively improved using feedback on the generated prompts. When coupled with the capacity of an LLM to internalize the notion of what a "good" prompt is, the high-resolution signal provided by the textual rewards allows us to train a prompt model yielding state-of-the-art query-specific prompts for the problems from the challenging math datasets GSMHard and MATH.

Assessing Social Alignment: Do Personality-Prompted Large Language Models Behave Like Humans?

Dec 21, 2024

Abstract:The ongoing revolution in language modelling has led to various novel applications, some of which rely on the emerging "social abilities" of large language models (LLMs). Already, many turn to the new "cyber friends" for advice during pivotal moments of their lives and trust them with their deepest secrets, implying that accurate shaping of LLMs' "personalities" is paramount. Leveraging the vast diversity of data on which LLMs are pretrained, state-of-the-art approaches prompt them to adopt a particular personality. We ask (i) if personality-prompted models behave (i.e. "make" decisions when presented with a social situation) in line with the ascribed personality, and (ii) if their behavior can be finely controlled. We use classic psychological experiments - the Milgram Experiment and the Ultimatum Game - as social interaction testbeds and apply personality prompting to GPT-3.5/4/4o-mini/4o. Our experiments reveal failure modes of the prompt-based modulation of the models' "behavior", thus challenging the feasibility of personality prompting with today's LLMs.

Feather-Light Fourier Domain Adaptation in Magnetic Resonance Imaging

Jul 31, 2022

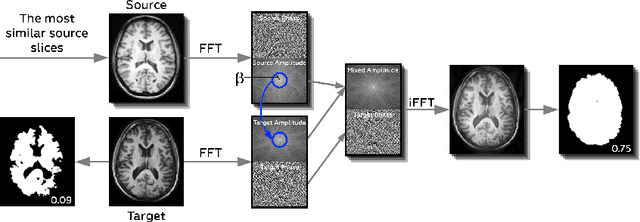

Abstract:Generalizability of deep learning models may be severely affected by the difference in the distributions of the train (source domain) and the test (target domain) sets, e.g., when the sets are produced by different hardware. As a consequence of this domain shift, a certain model might perform well on data from one clinic, and then fail when deployed in another. We propose a very light and transparent approach to perform test-time domain adaptation. The idea is to substitute the target low-frequency Fourier space components that are deemed to reflect the style of an image. To maximize the performance, we implement the "optimal style donor" selection technique, and use a number of source data points for altering a single target scan appearance (Multi-Source Transferring). We study the effect of severity of domain shift on the performance of the method, and show that our training-free approach reaches the state-of-the-art level of complicated deep domain adaptation models. The code for our experiments is released.
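The low-frequency substitution described in the abstract above can be illustrated with a minimal sketch. It is not the authors' released code: the function name `swap_low_frequencies`, the square window, the `radius` parameter, and the choice to swap full complex coefficients (rather than, say, amplitudes only) are all assumptions made for illustration, and the "optimal style donor" selection and Multi-Source Transferring steps are not modeled.

```python
import numpy as np


def swap_low_frequencies(target, donor, radius=1):
    """Return `target` with its low-frequency Fourier coefficients
    replaced by those of `donor` (hypothetical helper, illustration only).

    `radius` is the half-width, in frequency bins, of a square window
    centered on the zero frequency; this windowing is an assumption.
    """
    target = np.asarray(target, dtype=float)
    donor = np.asarray(donor, dtype=float)
    if target.ndim != 2 or target.shape != donor.shape:
        raise ValueError("expected two 2D arrays of the same shape")

    # Centered spectra: after fftshift the zero frequency sits at (h//2, w//2).
    f_target = np.fft.fftshift(np.fft.fft2(target))
    f_donor = np.fft.fftshift(np.fft.fft2(donor))

    ch, cw = target.shape[0] // 2, target.shape[1] // 2
    rows = slice(max(ch - radius, 0), ch + radius + 1)
    cols = slice(max(cw - radius, 0), cw + radius + 1)

    # Keep the target's high frequencies, take the donor's low frequencies.
    mixed = f_target.copy()
    mixed[rows, cols] = f_donor[rows, cols]

    # The window is symmetric around the zero frequency, so the mixed
    # spectrum stays conjugate-symmetric and the inverse transform is real
    # up to rounding error.
    return np.real(np.fft.ifft2(np.fft.ifftshift(mixed)))


# Synthetic stand-ins for a target scan and a source-domain "style donor".
rng = np.random.default_rng(0)
target_scan = rng.random((16, 16))
donor_scan = 2.0 * rng.random((16, 16)) + 1.0
adapted = swap_low_frequencies(target_scan, donor_scan, radius=1)
```

Because the zero-frequency coefficient lies inside the swapped window, the adapted image takes on the donor's mean intensity while the target's high-frequency content is left untouched, which is the sense in which low frequencies are treated as "style" here.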

Anatomy of Domain Shift Impact on U-Net Layers in MRI Segmentation

Jul 10, 2021

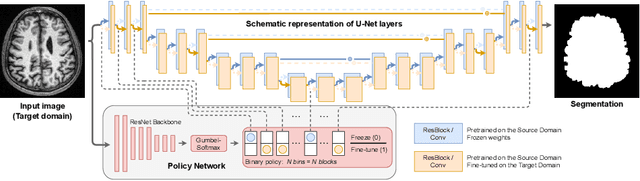

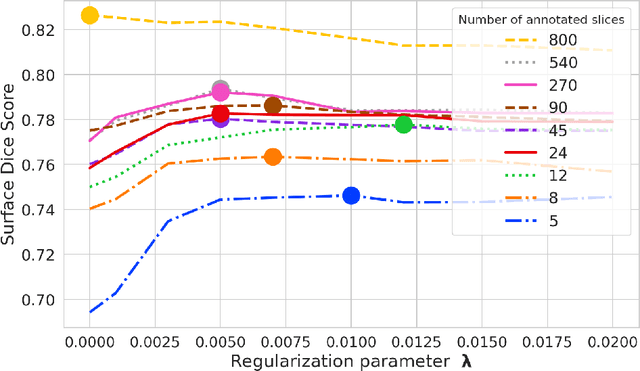

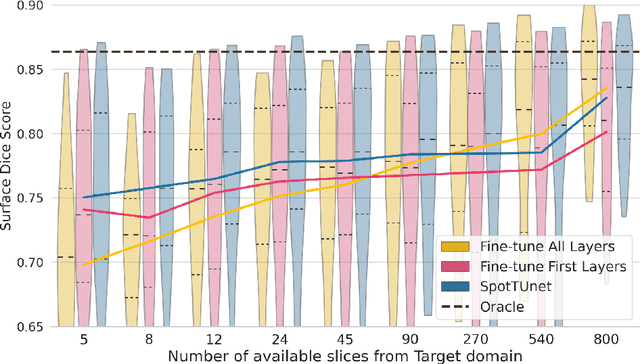

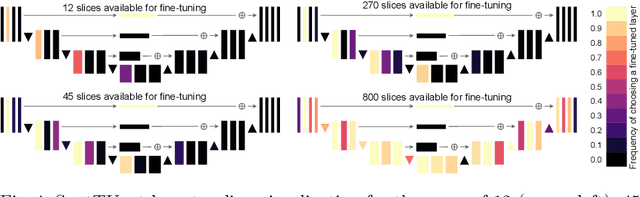

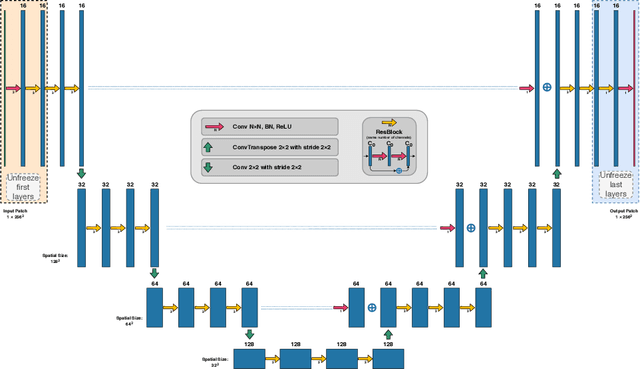

Abstract:Domain Adaptation (DA) methods are widely used in medical image segmentation tasks to tackle the problem of differently distributed train (source) and test (target) data. We consider the supervised DA task with a limited number of annotated samples from the target domain. It corresponds to one of the most relevant clinical setups: building a sufficiently accurate model on the minimum possible amount of annotated data. Existing methods mostly fine-tune specific layers of the pretrained Convolutional Neural Network (CNN). However, there is no consensus on which layers are better to fine-tune, e.g. the first layers for images with low-level domain shift or the deeper layers for images with high-level domain shift. To this end, we propose SpotTUnet - a CNN architecture that automatically chooses the layers which should be optimally fine-tuned. More specifically, on the target domain, our method additionally learns the policy that indicates whether a specific layer should be fine-tuned or reused from the pretrained network. First, we show that our method performs at the same level as the best of the nonflexible fine-tuning methods even under the extreme scarcity of annotated data. Second, we show that the SpotTUnet policy provides a layer-wise visualization of the domain shift impact on the network, which could be further used to develop robust domain generalization methods. To extensively evaluate SpotTUnet performance, we use a publicly available dataset of brain MR images (CC359), characterized by explicit domain shift. We release a reproducible experimental pipeline.

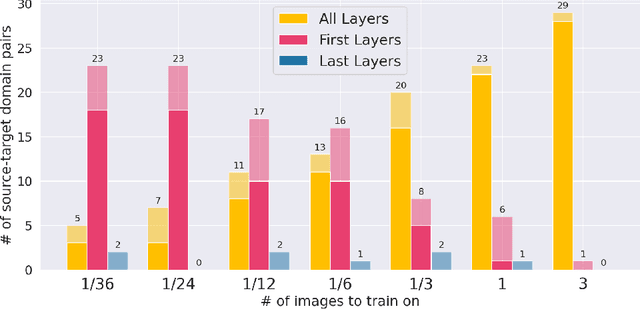

First U-Net Layers Contain More Domain Specific Information Than The Last Ones

Aug 17, 2020

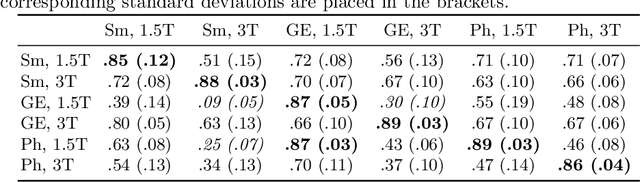

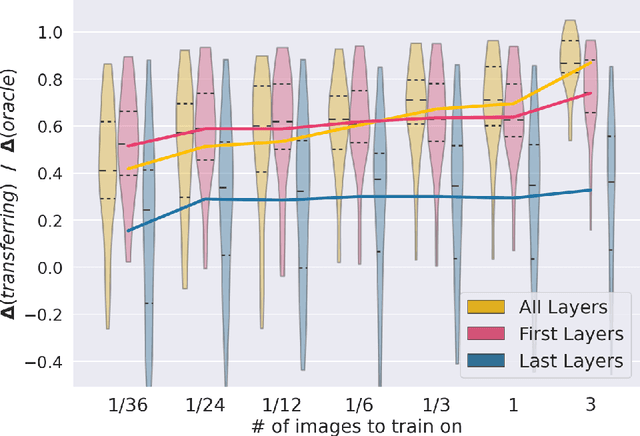

Abstract:The appearance of MRI scans depends significantly on scanning protocols and, consequently, on the data-collection institution. These variations between clinical sites result in dramatic drops of CNN segmentation quality on unseen domains. Many of the recently proposed MRI domain adaptation methods operate with the last CNN layers to suppress domain shift. At the same time, the core manifestation of MRI variability is a considerable diversity of image intensities. We hypothesize that these differences can be eliminated by modifying the first layers rather than the last ones. To validate this simple idea, we conducted a set of experiments with brain MRI scans from six domains. Our results demonstrate that 1) domain shift may deteriorate the quality even for a simple brain extraction segmentation task (surface Dice Score drops from 0.85-0.89 to as low as 0.09); 2) fine-tuning of the first layers significantly outperforms fine-tuning of the last layers in almost all supervised domain adaptation setups. Moreover, fine-tuning of the first layers is a better strategy than fine-tuning of the whole network if the amount of annotated data from the new domain is strictly limited.
