Ismini Psychoula

ExMo: Explainable AI Model using Inverse Frequency Decision Rules

May 20, 2022

Abstract: In this paper, we present a novel method to compute decision rules to build a more accurate interpretable machine learning model, denoted as ExMo. The ExMo interpretable machine learning model consists of a list of IF...THEN... statements with a decision rule in the condition. This way, ExMo naturally provides an explanation for a prediction using the decision rule that was triggered. ExMo uses a new approach to extract decision rules from the training data using term frequency-inverse document frequency (TF-IDF) features. With TF-IDF, decision rules with feature values that are more relevant to each class are extracted. Hence, the decision rules obtained by ExMo can distinguish the positive and negative classes better than the decision rules used in the existing Bayesian Rule List (BRL) algorithm, which are obtained using the frequent pattern mining approach. The paper also shows that ExMo learns a qualitatively better model than BRL. Furthermore, ExMo demonstrates that the textual explanation can be provided in a human-friendly way so that it can be easily understood by non-expert users. We validate ExMo on several datasets of different sizes to evaluate its efficacy. Experimental validation on a real-world fraud detection application shows that ExMo is 20% more accurate than BRL and that it achieves accuracy similar to that of deep learning models.
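
The idea of scoring feature values by TF-IDF and reading them off as IF...THEN rules can be illustrated with a small sketch. This is not the ExMo algorithm, whose rule extraction and list learning are not specified in the abstract. It is a toy under stated assumptions: each class is treated as one "document", each feature=value pair as a "term", a smoothed IDF is used, and only single-condition rules are built. The function names and the toy transaction data are hypothetical.

```python
import math
from collections import Counter


def tfidf_by_class(rows, labels):
    """Score feature=value pairs per class (assumption: one class = one 'document')."""
    counts = {}
    for row, label in zip(rows, labels):
        counts.setdefault(label, Counter()).update(row.items())
    n_classes = len(counts)
    # Document frequency: in how many classes does each pair occur?
    df = Counter(term for c in counts.values() for term in c)
    scores = {}
    for label, c in counts.items():
        total = sum(c.values())
        scores[label] = {
            # Term frequency times a smoothed inverse document frequency.
            term: (n / total) * (math.log((1 + n_classes) / (1 + df[term])) + 1)
            for term, n in c.items()
        }
    return scores


def build_rule_list(scores, top_k=1):
    """Keep the top_k highest-scoring pairs per class as an ordered rule list."""
    rules = []
    for label, term_scores in scores.items():
        best = sorted(term_scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
        rules.extend((score, term, label) for term, score in best)
    rules.sort(key=lambda r: r[0], reverse=True)
    return [(term, label) for _, term, label in rules]


def predict(rule_list, row, default):
    """Return the label of the first rule that fires, plus a textual explanation."""
    for (feature, value), label in rule_list:
        if row.get(feature) == value:
            return label, f"IF {feature} = {value} THEN {label}"
    return default, f"ELSE {default}"


# Hypothetical toy data: categorical transaction features.
rows = [
    {"country": "abroad", "amount": "high"},
    {"country": "abroad", "amount": "high"},
    {"country": "home", "amount": "high"},
    {"country": "home", "amount": "low"},
    {"country": "home", "amount": "low"},
]
labels = ["fraud", "fraud", "legit", "legit", "legit"]

rule_list = build_rule_list(tfidf_by_class(rows, labels))
label, explanation = predict(rule_list, {"country": "abroad", "amount": "low"}, default="legit")
print(label, "|", explanation)
```

In this toy data, `amount = high` occurs in both classes, so its IDF factor is lower than that of `country = abroad`, which occurs only in the fraud class. That is the sense in which TF-IDF favours feature values that are more relevant to one class.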

Explainable Machine Learning for Fraud Detection

May 13, 2021

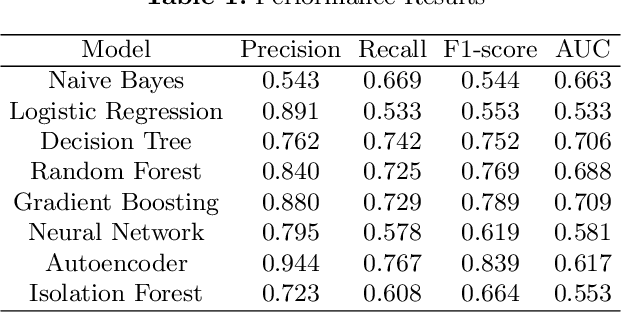

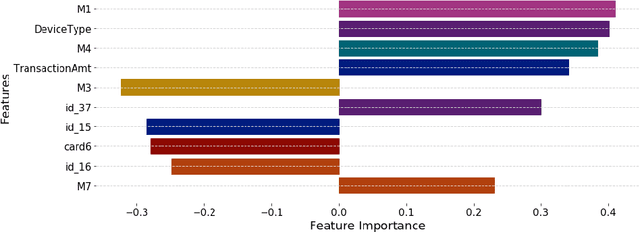

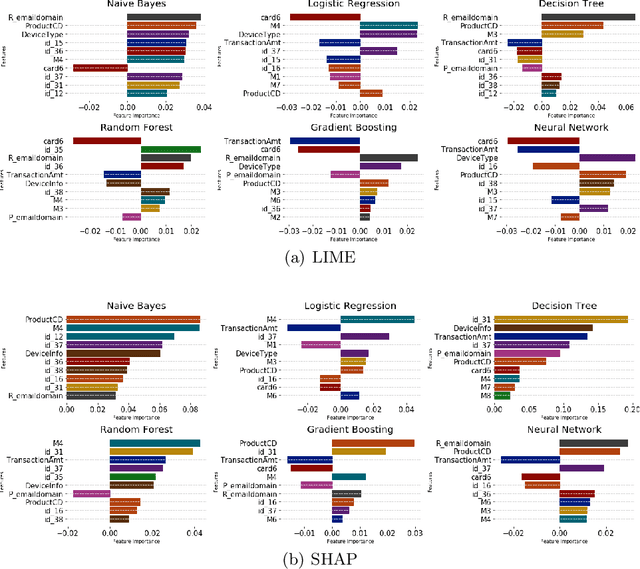

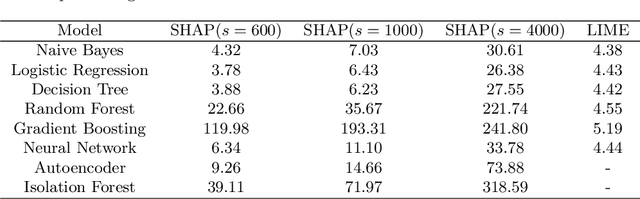

Abstract: The application of machine learning to support the processing of large datasets holds promise in many industries, including financial services. However, practical obstacles to the full adoption of machine learning remain, chiefly the need to understand and explain the decisions and predictions made by complex models. In this paper, we explore explainability methods in the domain of real-time fraud detection by investigating the selection of appropriate background datasets and runtime trade-offs on both supervised and unsupervised models.

Human Activity Recognition using Recurrent Neural Networks

Apr 19, 2018

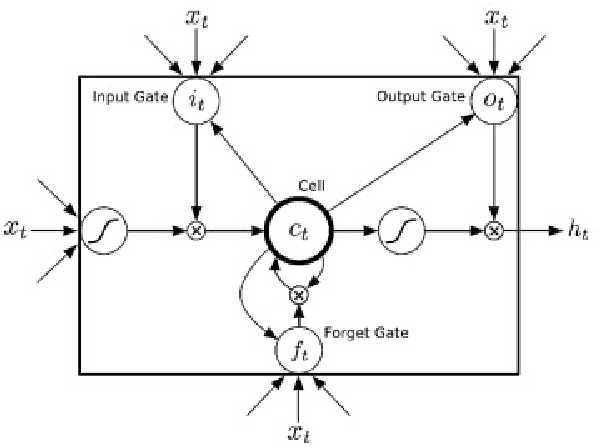

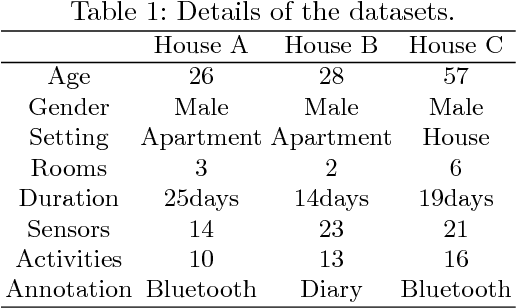

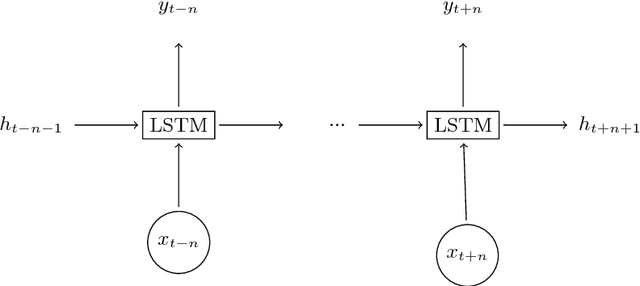

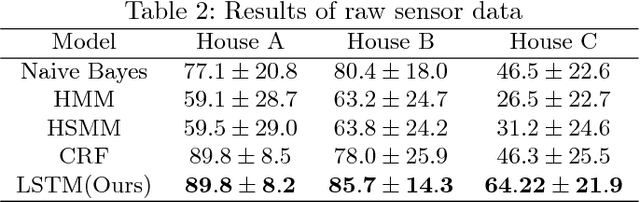

Abstract: Human activity recognition using smart home sensors is one of the bases of ubiquitous computing in smart environments and a topic undergoing intense research in the field of ambient assisted living. The increasingly large number of datasets calls for machine learning methods. In this paper, we introduce a deep learning model that learns to classify human activities without using any prior knowledge. For this purpose, a Long Short Term Memory (LSTM) Recurrent Neural Network was applied to three real-world smart home datasets. The results of these experiments show that the proposed approach outperforms the existing ones in terms of accuracy and performance.

A Deep Learning Approach for Privacy Preservation in Assisted Living

Feb 22, 2018

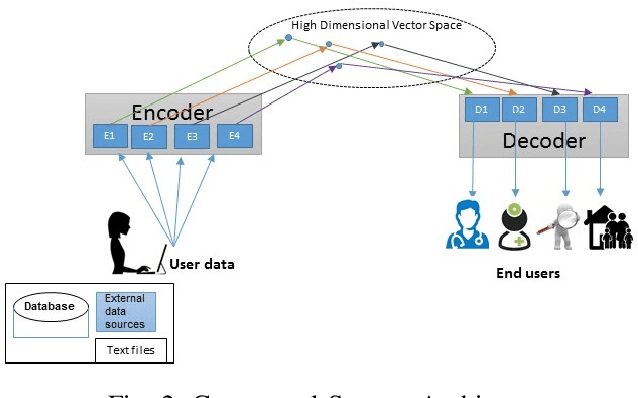

Abstract: In the era of Internet of Things (IoT) technologies, the potential for privacy invasion is becoming a major concern, especially with regard to healthcare data and Ambient Assisted Living (AAL) environments. Systems that offer AAL technologies make extensive use of personal data in order to provide services that are context-aware and personalized. This makes privacy preservation a very important issue, especially since users are not always aware of the privacy risks they could face. A lot of progress has been made in the deep learning field; however, there has been a lack of research on privacy preservation of sensitive personal data with the use of deep learning. In this paper we focus on a Long Short Term Memory (LSTM) Encoder-Decoder, which is a principal component of deep learning, and propose a new encoding technique that allows the creation of different AAL data views, depending on the access level of the end user and the information they require access to. The efficiency and effectiveness of the proposed method are demonstrated with experiments on a simulated AAL dataset. Qualitatively, we show that the proposed model learns privacy operations such as disclosure, deletion and generalization and can perform encoding and decoding of the data with almost perfect recovery.
