Ilias Diakonikolas

Learning Intersections of Two Margin Halfspaces under Factorizable Distributions

Nov 16, 2025

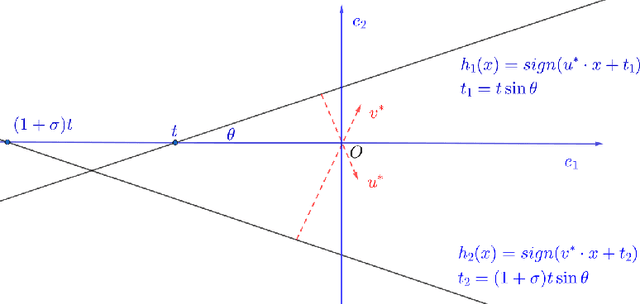

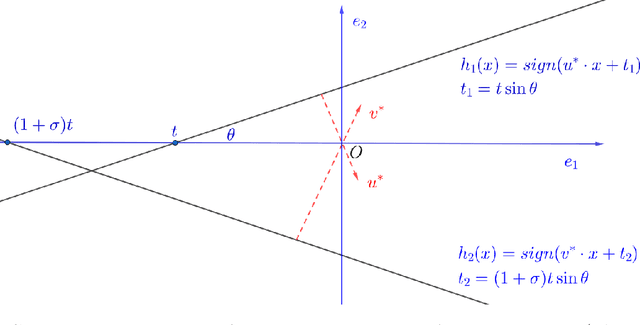

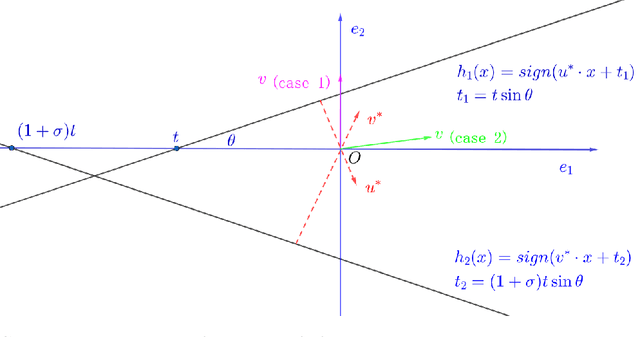

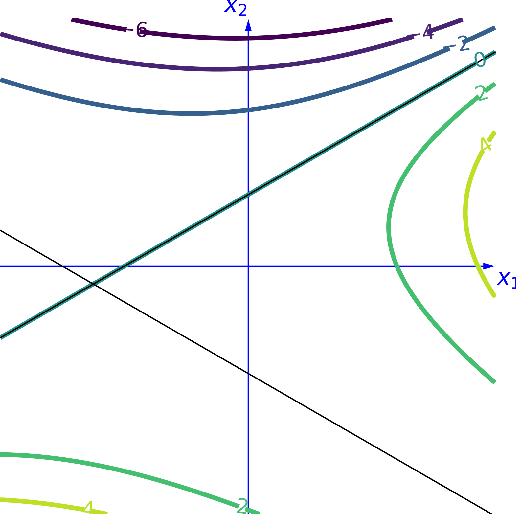

Abstract: Learning intersections of halfspaces is a central problem in Computational Learning Theory. Even for just two halfspaces, it remains a major open question whether learning is possible in polynomial time with respect to the margin $\gamma$ of the data points and their dimensionality $d$. The best-known algorithms run in quasi-polynomial time $d^{O(\log(1/\gamma))}$, and it has been shown that this complexity is unavoidable for any algorithm relying solely on correlational statistical queries (CSQ). In this work, we introduce a novel algorithm that provably circumvents the CSQ hardness barrier. Our approach applies to a broad class of distributions satisfying a natural, previously studied, factorizability assumption. Factorizable distributions lie between distribution-specific and distribution-free settings, and significantly extend previously known tractable cases. Under these distributions, we show that CSQ-based methods still require quasi-polynomial time even for weakly learning, whereas our algorithm achieves $\mathrm{poly}(d,1/\gamma)$ time by leveraging more general statistical queries (SQ), establishing a strong separation between CSQ and SQ for this simple realizable PAC learning problem. Our result is grounded in a rigorous analysis utilizing a novel duality framework that characterizes the moment tensor structure induced by the marginal distributions. Building on these structural insights, we propose new, efficient learning algorithms. These algorithms combine a refined variant of Jennrich's Algorithm with PCA over random projections of the moment tensor, along with a gradient-descent-based non-convex optimization framework.

Robustly Learning Monotone Single-Index Models

Aug 06, 2025

Abstract: We consider the basic problem of learning Single-Index Models with respect to the square loss under the Gaussian distribution in the presence of adversarial label noise. Our main contribution is the first computationally efficient algorithm for this learning task, achieving a constant factor approximation, that succeeds for the class of all monotone activations with bounded moment of order $2 + \zeta$, for $\zeta > 0$. This class in particular includes all monotone Lipschitz functions and even discontinuous functions like (possibly biased) halfspaces. Prior work for the case of unknown activation either does not attain constant factor approximation or succeeds for a substantially smaller family of activations. The main conceptual novelty of our approach lies in developing an optimization framework that steps outside the boundaries of usual gradient methods and instead identifies a useful vector field to guide the algorithm updates by directly leveraging the problem structure, properties of Gaussian spaces, and regularity of monotone functions.

Replicable Distribution Testing

Jul 03, 2025

Abstract: We initiate a systematic investigation of distribution testing in the framework of algorithmic replicability. Specifically, given independent samples from a collection of probability distributions, the goal is to characterize the sample complexity of replicably testing natural properties of the underlying distributions. On the algorithmic front, we develop new replicable algorithms for testing closeness and independence of discrete distributions. On the lower bound front, we develop a new methodology for proving sample complexity lower bounds for replicable testing that may be of broader interest. As an application of our technique, we establish near-optimal sample complexity lower bounds for replicable uniformity testing -- answering an open question from prior work -- and closeness testing.

Algorithms and SQ Lower Bounds for Robustly Learning Real-valued Multi-index Models

May 27, 2025

Abstract: We study the complexity of learning real-valued Multi-Index Models (MIMs) under the Gaussian distribution. A $K$-MIM is a function $f:\mathbb{R}^d\to \mathbb{R}$ that depends only on the projection of its input onto a $K$-dimensional subspace. We give a general algorithm for PAC learning a broad class of MIMs with respect to the square loss, even in the presence of adversarial label noise. Moreover, we establish a nearly matching Statistical Query (SQ) lower bound, providing evidence that the complexity of our algorithm is qualitatively optimal as a function of the dimension. Specifically, we consider the class of bounded variation MIMs with the property that degree at most $m$ distinguishing moments exist with respect to projections onto any subspace. In the presence of adversarial label noise, the complexity of our learning algorithm is $d^{O(m)}2^{\mathrm{poly}(K/\epsilon)}$. For the realizable and independent noise settings, our algorithm incurs complexity $d^{O(m)}2^{\mathrm{poly}(K)}(1/\epsilon)^{O(K)}$. To complement our upper bound, we show that if for some subspace degree-$m$ distinguishing moments do not exist, then any SQ learner for the corresponding class of MIMs requires complexity $d^{\Omega(m)}$. As an application, we give the first efficient learner for the class of positive-homogeneous $L$-Lipschitz $K$-MIMs. The resulting algorithm has complexity $\mathrm{poly}(d) 2^{\mathrm{poly}(KL/\epsilon)}$. This gives a new PAC learning algorithm for Lipschitz homogeneous ReLU networks with complexity independent of the network size, removing the exponential dependence incurred in prior work.

Faster Algorithms for Agnostically Learning Disjunctions and their Implications

Apr 21, 2025

Abstract: We study the algorithmic task of learning Boolean disjunctions in the distribution-free agnostic PAC model. The best known agnostic learner for the class of disjunctions over $\{0, 1\}^n$ is the $L_1$-polynomial regression algorithm, achieving complexity $2^{\tilde{O}(n^{1/2})}$. This complexity bound is known to be nearly best possible within the class of Correlational Statistical Query (CSQ) algorithms. In this work, we develop an agnostic learner for this concept class with complexity $2^{\tilde{O}(n^{1/3})}$. Our algorithm can be implemented in the Statistical Query (SQ) model, providing the first separation between the SQ and CSQ models in distribution-free agnostic learning.

On Learning Parallel Pancakes with Mostly Uniform Weights

Apr 21, 2025

Abstract: We study the complexity of learning $k$-mixtures of Gaussians ($k$-GMMs) on $\mathbb{R}^d$. This task is known to have complexity $d^{\Omega(k)}$ in full generality. To circumvent this exponential lower bound on the number of components, research has focused on learning families of GMMs satisfying additional structural properties. A natural assumption posits that the component weights are not exponentially small and that the components have the same unknown covariance. Recent work gave a $d^{O(\log(1/w_{\min}))}$-time algorithm for this class of GMMs, where $w_{\min}$ is the minimum weight. Our first main result is a Statistical Query (SQ) lower bound showing that this quasi-polynomial upper bound is essentially best possible, even for the special case of uniform weights. Specifically, we show that it is SQ-hard to distinguish between such a mixture and the standard Gaussian. We further explore how the distribution of weights affects the complexity of this task. Our second main result is a quasi-polynomial upper bound for the aforementioned testing task when most of the weights are uniform while a small fraction of the weights are potentially arbitrary.

Batch List-Decodable Linear Regression via Higher Moments

Mar 12, 2025

Abstract: We study the task of list-decodable linear regression using batches. A batch is called clean if it consists of i.i.d. samples from an unknown linear regression distribution. For a parameter $\alpha \in (0, 1/2)$, an unknown $\alpha$-fraction of the batches are clean and no assumptions are made on the remaining ones. The goal is to output a small list of vectors at least one of which is close to the true regressor vector in $\ell_2$-norm. [DJKS23] gave an efficient algorithm, under natural distributional assumptions, with the following guarantee. Assuming that the batch size $n$ satisfies $n \geq \tilde{\Omega}(\alpha^{-1})$ and the number of batches is $m = \mathrm{poly}(d, n, 1/\alpha)$, their algorithm runs in polynomial time and outputs a list of $O(1/\alpha^2)$ vectors at least one of which is $\tilde{O}(\alpha^{-1/2}/\sqrt{n})$ close to the target regressor. Here we design a new polynomial-time algorithm with significantly stronger guarantees under the assumption that the low-degree moments of the covariates distribution are Sum-of-Squares (SoS) certifiably bounded. Specifically, for any constant $\delta>0$, as long as the batch size is $n \geq \Omega_{\delta}(\alpha^{-\delta})$ and the degree-$\Theta(1/\delta)$ moments of the covariates are SoS certifiably bounded, our algorithm uses $m = \mathrm{poly}((dn)^{1/\delta}, 1/\alpha)$ batches, runs in polynomial time, and outputs an $O(1/\alpha)$-sized list of vectors one of which is $O(\alpha^{-\delta/2}/\sqrt{n})$ close to the target. That is, our algorithm achieves substantially smaller minimum batch size and final error, while achieving the optimal list size. Our approach uses higher-order moment information by carefully combining the SoS paradigm interleaved with an iterative method and a novel list pruning procedure. In the process, we give an SoS proof of the Marcinkiewicz-Zygmund inequality that may be of broader applicability.

Efficient Multivariate Robust Mean Estimation Under Mean-Shift Contamination

Feb 20, 2025

Abstract: We study the algorithmic problem of robust mean estimation of an identity covariance Gaussian in the presence of mean-shift contamination. In this contamination model, we are given a set of points in $\mathbb{R}^d$ generated i.i.d. via the following process. For a parameter $\alpha<1/2$, the $i$-th sample $x_i$ is obtained as follows: with probability $1-\alpha$, $x_i$ is drawn from $\mathcal{N}(\mu, I)$, where $\mu \in \mathbb{R}^d$ is the target mean; and with probability $\alpha$, $x_i$ is drawn from $\mathcal{N}(z_i, I)$, where $z_i$ is unknown and potentially arbitrary. Prior work characterized the information-theoretic limits of this task. Specifically, it was shown that, in contrast to Huber contamination, in the presence of mean-shift contamination consistent estimation is possible. On the other hand, all known robust estimators in the mean-shift model have running times exponential in the dimension. Here we give the first computationally efficient algorithm for high-dimensional robust mean estimation with mean-shift contamination that can tolerate a constant fraction of outliers. In particular, our algorithm has near-optimal sample complexity, runs in sample-polynomial time, and approximates the target mean to any desired accuracy. Conceptually, our result contributes to a growing body of work that studies inference with respect to natural noise models lying in between fully adversarial and random settings.
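
The sampling process described in this abstract can be illustrated with a small simulation. The sketch below is not the paper's estimator; it only generates data from the mean-shift contamination model and shows that the naive empirical mean is biased by the shifted points. The names `sample_mean_shift`, `shift_fn`, and `empirical_mean` are hypothetical helpers introduced here for illustration, and the fixed outlier center in the usage note is an arbitrary choice.

```python
import random


def sample_mean_shift(n, mu, alpha, shift_fn, seed=0):
    """Draw n points from the mean-shift contamination model.

    mu:       target mean, a list of d floats.
    alpha:    contamination rate (the abstract assumes alpha < 1/2).
    shift_fn: hypothetical callback returning the arbitrary center z_i
              (a list of d floats) used when sample i is contaminated.
    """
    rng = random.Random(seed)
    points, is_outlier = [], []
    for i in range(n):
        # With probability alpha the sample is centered at z_i instead of mu.
        corrupted = rng.random() < alpha
        center = shift_fn(i) if corrupted else mu
        # Identity covariance: add independent N(0, 1) noise per coordinate.
        points.append([c + rng.gauss(0.0, 1.0) for c in center])
        is_outlier.append(corrupted)
    return points, is_outlier


def empirical_mean(points):
    """Coordinate-wise average; a non-robust baseline, not a robust estimator."""
    n, d = len(points), len(points[0])
    return [sum(p[j] for p in points) / n for j in range(d)]
```

For instance, calling `sample_mean_shift(2000, [0.0, 0.0], 0.3, lambda i: [10.0, 10.0])` and passing the points to `empirical_mean` yields an estimate pulled well away from the target mean, which is the failure mode a robust estimator has to avoid.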

Statistical Query Hardness of Multiclass Linear Classification with Random Classification Noise

Feb 17, 2025

Abstract: We study the task of Multiclass Linear Classification (MLC) in the distribution-free PAC model with Random Classification Noise (RCN). Specifically, the learner is given a set of labeled examples $(x, y)$, where $x$ is drawn from an unknown distribution on $\mathbb{R}^d$ and the labels are generated by a multiclass linear classifier corrupted with RCN. That is, the label $y$ is flipped from $i$ to $j$ with probability $H_{ij}$ according to a known noise matrix $H$ with non-negative separation $\sigma := \min_{i \neq j} (H_{ii}-H_{ij})$. The goal is to compute a hypothesis with small 0-1 error. For the special case of two labels, prior work has given polynomial-time algorithms achieving the optimal error. Surprisingly, little is known about the complexity of this task even for three labels. As our main contribution, we show that the complexity of MLC with RCN becomes drastically different in the presence of three or more labels. Specifically, we prove super-polynomial Statistical Query (SQ) lower bounds for this problem. In more detail, even for three labels and constant separation, we give a super-polynomial lower bound on the complexity of any SQ algorithm achieving optimal error. For a larger number of labels and smaller separation, we show a super-polynomial SQ lower bound even for the weaker goal of achieving any constant factor approximation to the optimal loss or even beating the trivial hypothesis.
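
To make the noise model in this abstract concrete, here is a minimal sketch of how the separation of a noise matrix could be computed and how a noisy label could be drawn. It assumes that row $i$ of $H$ is a probability distribution over observed labels (so $H_{ii}$ is the probability that label $i$ is kept), which is an interpretation made here and not stated in the abstract. The function names `separation` and `noisy_label` and the example matrix are hypothetical.

```python
import random


def separation(H):
    """Return min over i != j of H[i][i] - H[i][j] for a square noise matrix H."""
    k = len(H)
    return min(H[i][i] - H[i][j] for i in range(k) for j in range(k) if i != j)


def noisy_label(clean_label, H, rng):
    """Sample the observed label given the clean one.

    Assumption: row H[clean_label] sums to 1 and gives the probability of
    observing each label j when the clean label is clean_label.
    """
    return rng.choices(range(len(H)), weights=H[clean_label], k=1)[0]


# Illustrative three-label noise matrix (made-up values, rows sum to 1).
H_example = [
    [0.6, 0.2, 0.2],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
]
sigma = separation(H_example)  # smallest diagonal-minus-off-diagonal gap
```

In this example the third row determines the separation, since its diagonal entry exceeds the off-diagonal entries by the smallest margin.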

Robust Learning of Multi-index Models via Iterative Subspace Approximation

Feb 13, 2025

Abstract: We study the task of learning Multi-Index Models (MIMs) with label noise under the Gaussian distribution. A $K$-MIM is any function $f$ that only depends on a $K$-dimensional subspace. We focus on well-behaved MIMs with finite ranges that satisfy certain regularity properties. Our main contribution is a general robust learner that is qualitatively optimal in the Statistical Query (SQ) model. Our algorithm iteratively constructs better approximations to the defining subspace by computing low-degree moments conditional on the projection to the subspace computed thus far, and adding directions with relatively large empirical moments. This procedure efficiently finds a subspace $V$ so that $f(\mathbf{x})$ is close to a function of the projection of $\mathbf{x}$ onto $V$. Conversely, for functions for which these conditional moments do not help, we prove an SQ lower bound suggesting that no efficient learner exists. As applications, we provide faster robust learners for the following concept classes:

* **Multiclass Linear Classifiers:** We give a constant-factor approximate agnostic learner with sample complexity $N = O(d) 2^{\mathrm{poly}(K/\epsilon)}$ and computational complexity $\mathrm{poly}(N ,d)$. This is the first constant-factor agnostic learner for this class whose complexity is a fixed-degree polynomial in $d$.
* **Intersections of Halfspaces:** We give an approximate agnostic learner for this class achieving 0-1 error $K \tilde{O}(\mathrm{OPT}) + \epsilon$ with sample complexity $N=O(d^2) 2^{\mathrm{poly}(K/\epsilon)}$ and computational complexity $\mathrm{poly}(N ,d)$. This is the first agnostic learner for this class with near-linear error dependence and complexity a fixed-degree polynomial in $d$. Furthermore, we show that in the presence of random classification noise, the complexity of our algorithm scales polynomially with $1/\epsilon$.
