Igor Mozetič

Affective Polarization across European Parliaments

Aug 26, 2025

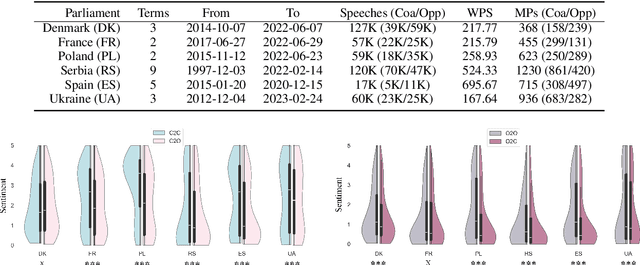

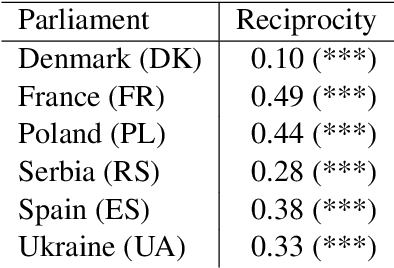

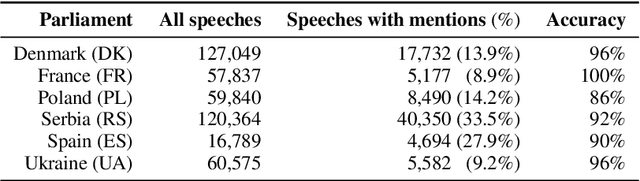

Abstract: Affective polarization, characterized by increased negativity and hostility towards opposing groups, has become a prominent feature of political discourse worldwide. Our study examines the presence of this type of polarization in a selection of European parliaments in a fully automated manner. Utilizing a comprehensive corpus of parliamentary speeches from the parliaments of six European countries, we employ natural language processing techniques to estimate parliamentarian sentiment. By comparing the levels of negativity conveyed in references to individuals from opposing groups versus one's own, we discover patterns of affectively polarized interactions. The findings demonstrate the existence of consistent affective polarization across all six European parliaments. Although activity correlates with negativity, there is no observed difference in affective polarization between less active and more active members of parliament. Finally, we show that reciprocity is a contributing mechanism in affective polarization between parliamentarians across all six parliaments.
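As a minimal sketch of the comparison described in the abstract, the snippet below measures the gap between mean negativity in references to other groups and mean negativity in references to one's own group. The function name `polarization_gap`, the tuple layout, and the negativity scores in [0, 1] are assumptions for illustration, not the authors' pipeline.

```python
from statistics import mean


def polarization_gap(mentions):
    """Mean out-group negativity minus mean in-group negativity.

    `mentions` is an iterable of (speaker_group, target_group, negativity)
    tuples. The layout and the [0, 1] negativity scale are hypothetical.
    """
    mentions = list(mentions)
    in_group = [neg for spk, tgt, neg in mentions if spk == tgt]
    out_group = [neg for spk, tgt, neg in mentions if spk != tgt]
    if not in_group or not out_group:
        raise ValueError("need at least one in-group and one out-group mention")
    return mean(out_group) - mean(in_group)


# Toy data: two groups, four references with made-up negativity scores.
mentions = [
    ("A", "A", 0.25),
    ("A", "B", 0.75),
    ("B", "A", 0.5),
    ("B", "B", 0.0),
]
gap = polarization_gap(mentions)
print(gap)
```

A positive gap means that references to opposing groups are, on average, more negative than references to one's own group.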

ChatGPT: Beginning of an End of Manual Linguistic Data Annotation? Use Case of Automatic Genre Identification

Mar 08, 2023

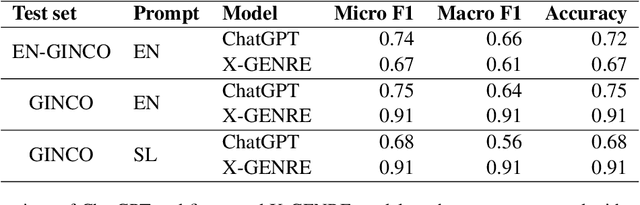

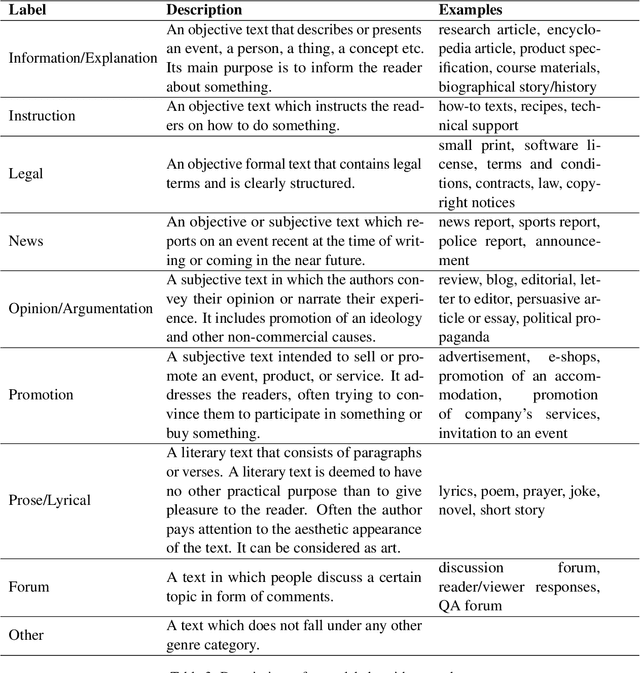

Abstract: ChatGPT has shown strong capabilities in natural language generation tasks, which naturally leads researchers to explore where its abilities end. In this paper, we examine whether ChatGPT can be used for zero-shot text classification, more specifically, automatic genre identification. We compare ChatGPT with a multilingual XLM-RoBERTa language model that was fine-tuned on datasets manually annotated with genres. The models are compared on test sets in two languages: English and Slovenian. Results show that ChatGPT outperforms the fine-tuned model when applied to a dataset that neither model had seen before. Even when applied to Slovenian, an under-resourced language, ChatGPT's performance is no worse than when applied to English. However, if the model is fully prompted in Slovenian, the performance drops significantly, showing the current limitations of ChatGPT usage on smaller languages. The presented results lead us to question whether this is the beginning of the end of laborious manual annotation campaigns, even for smaller languages such as Slovenian.

Online Hate: Behavioural Dynamics and Relationship with Misinformation

May 28, 2021

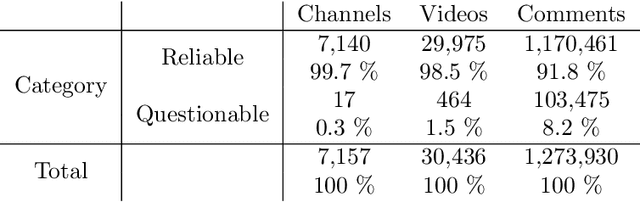

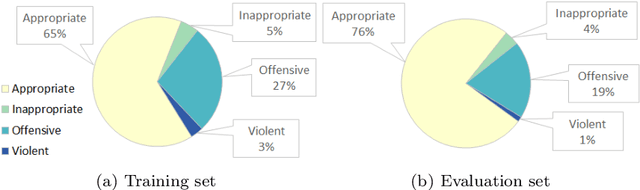

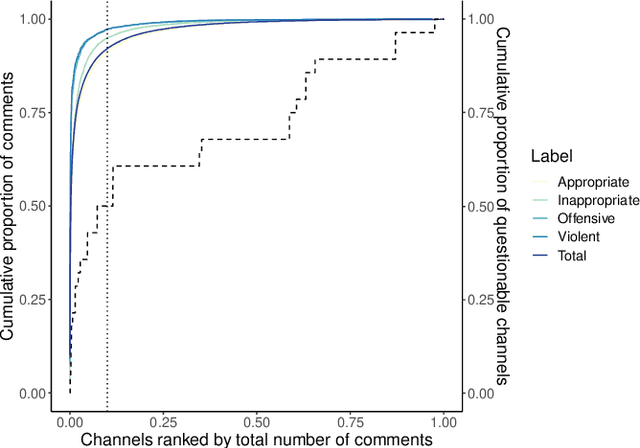

Abstract: Online debates are often characterised by extreme polarisation and heated discussions among users. The presence of hate speech online is becoming increasingly problematic, making the development of appropriate countermeasures necessary. In this work, we perform hate speech detection on a corpus of more than one million comments on YouTube videos through a machine learning model fine-tuned on a large set of hand-annotated data. Our analysis shows that there is no evidence of the presence of "serial haters", understood as active users posting exclusively hateful comments. Moreover, consistent with the echo chamber hypothesis, we find that users skewed towards one of the two categories of video channels (questionable, reliable) are more prone to use inappropriate, violent, or hateful language within their opponents' community. Interestingly, users loyal to reliable sources use, on average, more toxic language than their counterparts. Finally, we find that the overall toxicity of the discussion increases with its length, measured both in terms of number of comments and time. Our results show that, consistent with Godwin's law, online debates tend to degenerate towards increasingly toxic exchanges of views.

Forex trading and Twitter: Spam, bots, and reputation manipulation

Apr 16, 2018

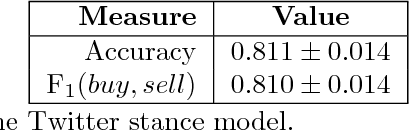

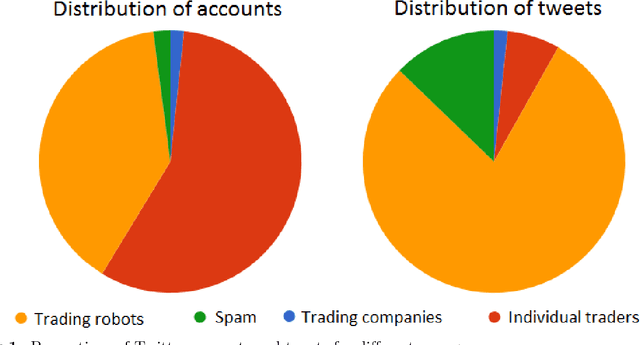

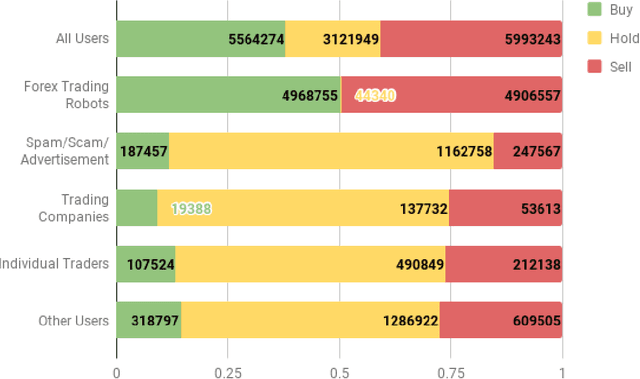

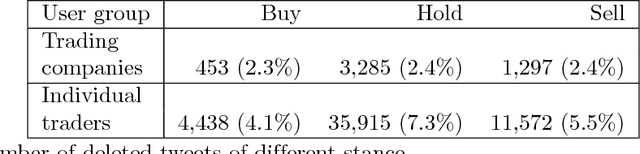

Abstract: Currency trading (Forex) is the largest world market in terms of volume. We analyze trading and tweeting about the EUR-USD currency pair over a period of three years. First, a large number of tweets were manually labeled, and a Twitter stance classification model was constructed. The model then classifies all the tweets by the trading stance signal: buy, hold, or sell (EUR vs. USD). The Twitter stance is compared to the actual currency rates by applying the event study methodology, well known in financial economics. It turns out that there are large differences in Twitter stance distribution and potential trading returns between the four groups of Twitter users: trading robots, spammers, trading companies, and individual traders. Additionally, we observe attempts at reputation manipulation by post festum removal of tweets with poor predictions, and by deleting and reposting identical tweets to increase visibility without tainting one's Twitter timeline.

How to evaluate sentiment classifiers for Twitter time-ordered data?

Mar 14, 2018

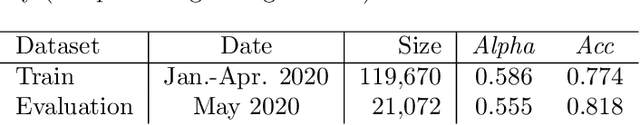

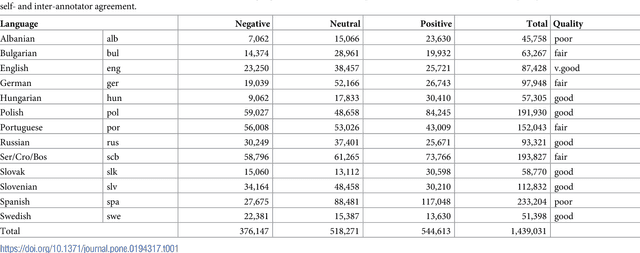

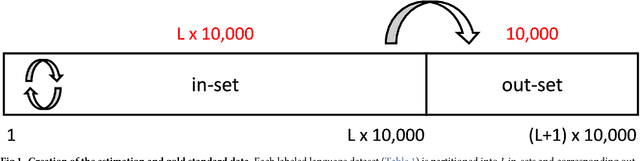

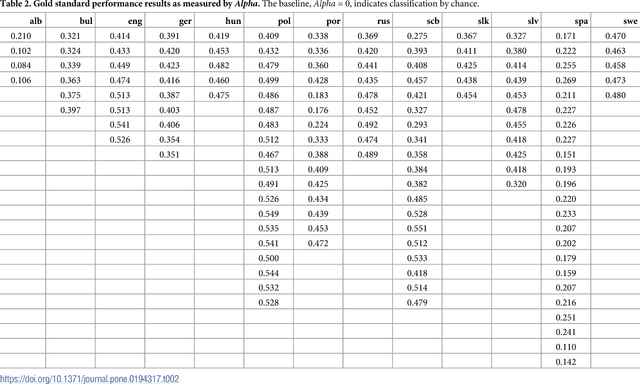

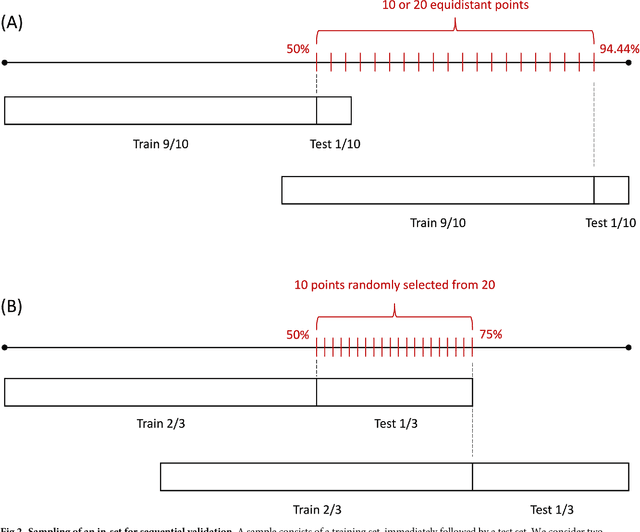

Abstract: Social media are becoming an increasingly important source of information about the public mood regarding issues such as elections, Brexit, stock market, etc. In this paper we focus on sentiment classification of Twitter data. Construction of sentiment classifiers is a standard text mining task, but here we address the question of how to properly evaluate them as there is no settled way to do so. Sentiment classes are ordered and unbalanced, and Twitter produces a stream of time-ordered data. The problem we address concerns the procedures used to obtain reliable estimates of performance measures, and whether the temporal ordering of the training and test data matters. We collected a large set of 1.5 million tweets in 13 European languages. We created 138 sentiment models and out-of-sample datasets, which are used as a gold standard for evaluations. The corresponding 138 in-sample datasets are used to empirically compare six different estimation procedures: three variants of cross-validation, and three variants of sequential validation (where test set always follows the training set). We find no significant difference between the best cross-validation and sequential validation. However, we observe that all cross-validation variants tend to overestimate the performance, while the sequential methods tend to underestimate it. Standard cross-validation with random selection of examples is significantly worse than the blocked cross-validation, and should not be used to evaluate classifiers in time-ordered data scenarios.
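To make the contrast between blocked cross-validation and sequential validation concrete, here is a rough sketch of how the two kinds of index splits could be generated for time-ordered examples. The helper names `blocked_cv_splits` and `sequential_splits` and the equal-sized block layout are assumptions; the paper compares three variants of each procedure, and their exact definitions are not reproduced here.

```python
def blocked_cv_splits(n, k):
    """Yield (train_idx, test_idx) pairs where each test fold is one
    contiguous block of the time-ordered data (no random shuffling)."""
    bounds = [i * n // k for i in range(k + 1)]
    for i in range(k):
        test = list(range(bounds[i], bounds[i + 1]))
        # Training data may lie both before and after the test block.
        train = list(range(0, bounds[i])) + list(range(bounds[i + 1], n))
        yield train, test


def sequential_splits(n, k):
    """Yield (train_idx, test_idx) pairs where the test block always
    follows the training data in time (growing training window)."""
    bounds = [i * n // (k + 1) for i in range(k + 2)]
    for i in range(1, k + 1):
        train = list(range(0, bounds[i]))
        test = list(range(bounds[i], bounds[i + 1]))
        yield train, test


for train, test in sequential_splits(12, 3):
    print(len(train), test)
```

In the blocked variant every example is tested exactly once, but training data can come from the future relative to the test block. In the sequential variant the classifier is only ever tested on data later than anything it was trained on.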

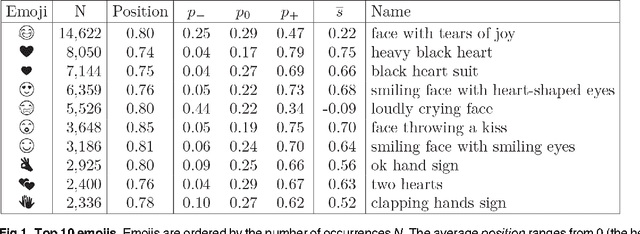

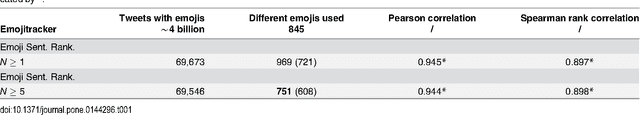

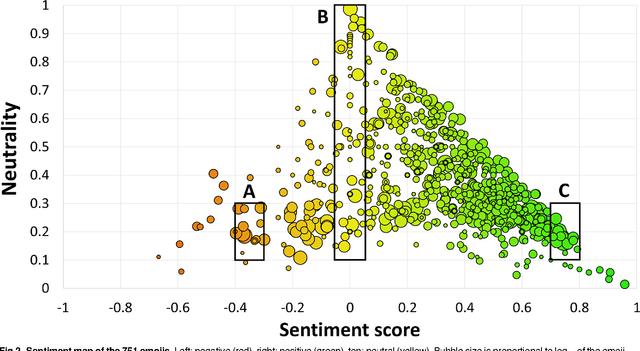

Sentiment of Emojis

Dec 08, 2015

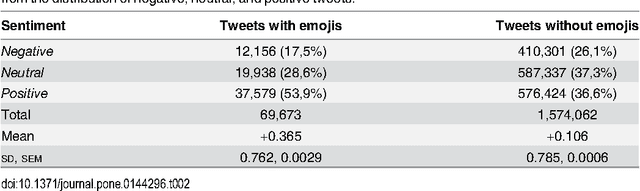

Abstract: There is a new generation of emoticons, called emojis, that is increasingly being used in mobile communications and social media. In the past two years, over ten billion emojis were used on Twitter. Emojis are Unicode graphic symbols, used as a shorthand to express concepts and ideas. In contrast to the small number of well-known emoticons that carry clear emotional contents, there are hundreds of emojis. But what are their emotional contents? We provide the first emoji sentiment lexicon, called the Emoji Sentiment Ranking, and draw a sentiment map of the 751 most frequently used emojis. The sentiment of the emojis is computed from the sentiment of the tweets in which they occur. We engaged 83 human annotators to label over 1.6 million tweets in 13 European languages by the sentiment polarity (negative, neutral, or positive). About 4% of the annotated tweets contain emojis. The sentiment analysis of the emojis allows us to draw several interesting conclusions. It turns out that most of the emojis are positive, especially the most popular ones. The sentiment distribution of the tweets with and without emojis is significantly different. The inter-annotator agreement on the tweets with emojis is higher. Emojis tend to occur at the end of the tweets, and their sentiment polarity increases with the distance. We observe no significant differences in the emoji rankings between the 13 languages and the Emoji Sentiment Ranking. Consequently, we propose our Emoji Sentiment Ranking as a European language-independent resource for automated sentiment analysis. Finally, the paper provides a formalization of sentiment and a novel visualization in the form of a sentiment bar.
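The idea that an emoji's sentiment is derived from the labels of the tweets in which it occurs can be illustrated with a small sketch. It assumes a simple mean over the label values -1, 0, +1 for each occurrence; the function `emoji_sentiment`, the `tracked` set, and the toy tweets are hypothetical, and the paper's own formalization of sentiment may differ from this plain average.

```python
from collections import defaultdict

# Numeric values for the three polarity labels named in the abstract.
LABEL_VALUE = {"negative": -1, "neutral": 0, "positive": 1}


def emoji_sentiment(tweets, tracked):
    """Return {emoji: mean label value over all of its occurrences}.

    `tweets` is an iterable of (text, label) pairs and `tracked` is the
    set of single-code-point emojis to score. Multi-code-point emoji
    sequences are not handled in this sketch.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for text, label in tweets:
        for ch in text:
            if ch in tracked:
                totals[ch] += LABEL_VALUE[label]
                counts[ch] += 1
    return {e: totals[e] / counts[e] for e in counts}


# Made-up example tweets with human-style polarity labels.
tweets = [
    ("great day 😀", "positive"),
    ("😀 ok", "neutral"),
    ("awful 😡😡", "negative"),
]
scores = emoji_sentiment(tweets, tracked={"😀", "😡"})
print(scores)
```

Scores close to +1 indicate emojis that mostly appear in positive tweets, and scores close to -1 indicate emojis that mostly appear in negative ones.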
