Jasmina Smailović

How to evaluate sentiment classifiers for Twitter time-ordered data?

Mar 14, 2018

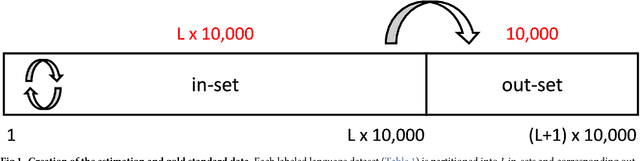

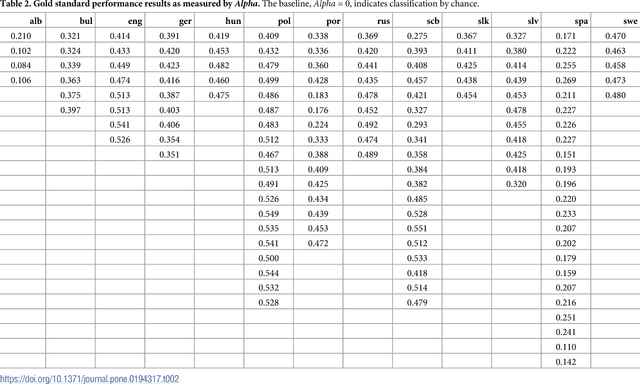

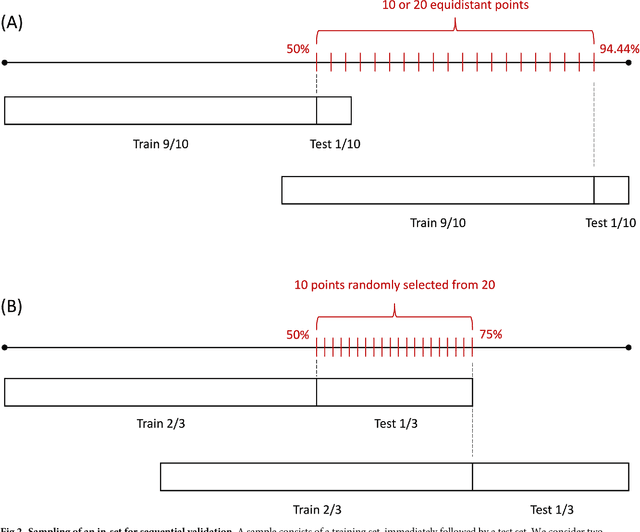

Abstract: Social media are becoming an increasingly important source of information about the public mood regarding issues such as elections, Brexit, the stock market, etc. In this paper we focus on sentiment classification of Twitter data. Construction of sentiment classifiers is a standard text mining task, but here we address the question of how to properly evaluate them, as there is no settled way to do so. Sentiment classes are ordered and unbalanced, and Twitter produces a stream of time-ordered data. The problem we address concerns the procedures used to obtain reliable estimates of performance measures, and whether the temporal ordering of the training and test data matters. We collected a large set of 1.5 million tweets in 13 European languages. We created 138 sentiment models and out-of-sample datasets, which are used as a gold standard for evaluations. The corresponding 138 in-sample datasets are used to empirically compare six different estimation procedures: three variants of cross-validation, and three variants of sequential validation (where the test set always follows the training set). We find no significant difference between the best cross-validation and sequential validation. However, we observe that all cross-validation variants tend to overestimate the performance, while the sequential methods tend to underestimate it. Standard cross-validation with random selection of examples is significantly worse than blocked cross-validation, and should not be used to evaluate classifiers in time-ordered data scenarios.
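To make the distinction between the two families of procedures concrete, the following is a minimal sketch of how blocked cross-validation and sequential validation might split a time-ordered dataset. It is an illustration only, not the paper's implementation: the function names `blocked_cv_splits` and `sequential_splits`, the equal-sized blocks, and the growing training window are all assumptions, since the abstract does not specify the exact variants used.

```python
from typing import Iterator, List, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, test indices)


def blocked_cv_splits(n: int, k: int) -> Iterator[Split]:
    """Blocked k-fold cross-validation over n time-ordered items.

    Each fold uses one contiguous block as the test set and all other
    items (both earlier and later ones) as the training set.
    """
    bounds = [i * n // k for i in range(k + 1)]
    for i in range(k):
        test = list(range(bounds[i], bounds[i + 1]))
        train = list(range(0, bounds[i])) + list(range(bounds[i + 1], n))
        yield train, test


def sequential_splits(n: int, k: int) -> Iterator[Split]:
    """Sequential validation with k test blocks (assumed growing window).

    The data is cut into k + 1 contiguous blocks; the model is trained on
    everything before a block and tested on that block, so the test set
    always follows the training set in time.
    """
    bounds = [i * n // (k + 1) for i in range(k + 2)]
    for i in range(1, k + 1):
        train = list(range(0, bounds[i]))
        test = list(range(bounds[i], bounds[i + 1]))
        yield train, test
```

Standard cross-validation with random selection would instead shuffle the indices before forming folds, which mixes tweets from the same time period across training and test sets.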

Sentiment of Emojis

Dec 08, 2015

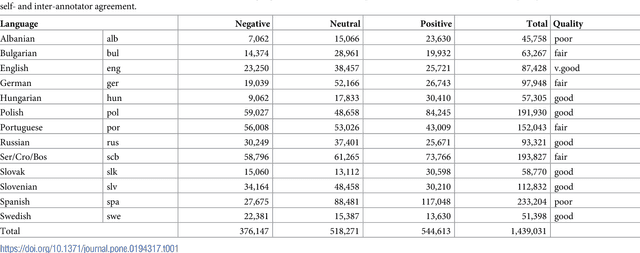

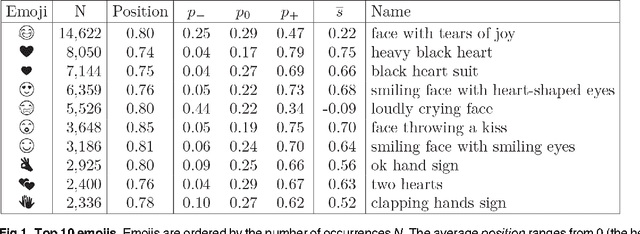

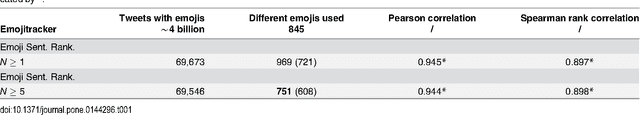

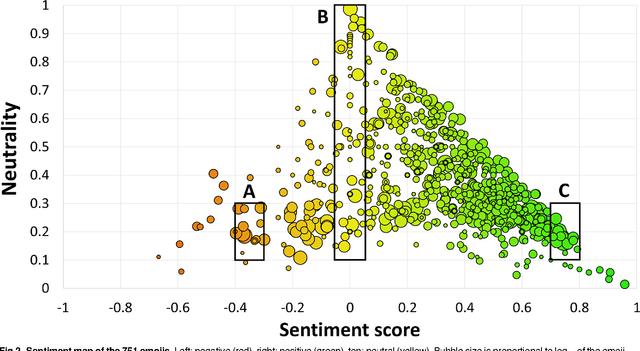

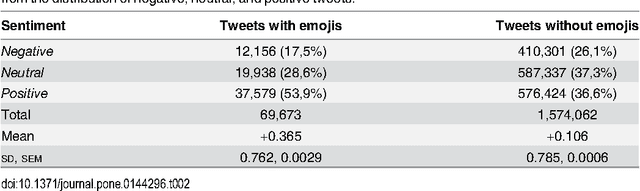

Abstract: There is a new generation of emoticons, called emojis, that is increasingly being used in mobile communications and social media. In the past two years, over ten billion emojis were used on Twitter. Emojis are Unicode graphic symbols, used as a shorthand to express concepts and ideas. In contrast to the small number of well-known emoticons that carry clear emotional contents, there are hundreds of emojis. But what are their emotional contents? We provide the first emoji sentiment lexicon, called the Emoji Sentiment Ranking, and draw a sentiment map of the 751 most frequently used emojis. The sentiment of the emojis is computed from the sentiment of the tweets in which they occur. We engaged 83 human annotators to label over 1.6 million tweets in 13 European languages by sentiment polarity (negative, neutral, or positive). About 4% of the annotated tweets contain emojis. The sentiment analysis of the emojis allows us to draw several interesting conclusions. It turns out that most of the emojis are positive, especially the most popular ones. The sentiment distribution of the tweets with and without emojis is significantly different. The inter-annotator agreement on the tweets with emojis is higher. Emojis tend to occur at the end of the tweets, and their sentiment polarity increases with the distance. We observe no significant differences in the emoji rankings between the 13 languages and the Emoji Sentiment Ranking. Consequently, we propose our Emoji Sentiment Ranking as a European language-independent resource for automated sentiment analysis. Finally, the paper provides a formalization of sentiment and a novel visualization in the form of a sentiment bar.
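As a rough illustration of computing an emoji's sentiment from the tweets in which it occurs, the sketch below averages the tweet labels per emoji. The abstract does not give the exact formula, so the scoring rule here (labels encoded as -1, 0, +1 and the score taken as the share of positive tweets minus the share of negative tweets), the function name `emoji_sentiment`, and the input format are all assumptions made for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

# Assumed label encoding: -1 = negative, 0 = neutral, +1 = positive.
LabeledTweet = Tuple[Set[str], int]  # (emojis found in the tweet, label)


def emoji_sentiment(tweets: Iterable[LabeledTweet]) -> Dict[str, float]:
    """Score each emoji by the labels of the tweets that contain it.

    The score is (positive count - negative count) / total count, which
    lies in [-1, 1]. This is an illustrative rule, not the paper's own.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for emojis, label in tweets:
        for emoji in emojis:
            counts[emoji][label] += 1

    scores: Dict[str, float] = {}
    for emoji, by_label in counts.items():
        total = sum(by_label.values())
        scores[emoji] = (by_label[1] - by_label[-1]) / total
    return scores
```

Sorting the resulting dictionary by score would give a simple ranking in the spirit of a sentiment lexicon; a real lexicon would also need to handle rarely occurring emojis, whose scores are unreliable.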
