Ida Häggström

Explainable vertebral fracture analysis with uncertainty estimation using differentiable rule-based classification

Jul 03, 2024

Abstract: We present a novel method for explainable vertebral fracture assessment (XVFA) in low-dose radiographs using deep neural networks, incorporating vertebra detection and keypoint localization with uncertainty estimates. We incorporate Genant's semi-quantitative criteria as a differentiable rule-based means of classifying both vertebral fracture grade and morphology. Unlike previous work, XVFA provides explainable classifications relatable to current clinical methodology, as well as uncertainty estimates, while at the same time surpassing state-of-the-art methods with a vertebra-level sensitivity of 93% and end-to-end AUC of 97% in a challenging setting. Moreover, we compare intra-reader agreement with model uncertainty estimates, with model reliability on par with human annotators.
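
The idea of a differentiable rule-based grade can be sketched as follows. This is only an illustration, not the authors' implementation: it assumes the commonly cited Genant height-loss thresholds (20%, 25%, 40%), replaces the hard cut-offs with sigmoids, and takes the tallest of the three heights within the same vertebra as the reference height. The function name `soft_genant_grade` and the `sharpness` parameter are hypothetical.

```python
import numpy as np

# Commonly cited Genant thresholds on relative height loss:
# grade 1 (mild) >= 20%, grade 2 (moderate) >= 25%, grade 3 (severe) >= 40%.
GENANT_THRESHOLDS = np.array([0.20, 0.25, 0.40])


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def soft_genant_grade(h_ant, h_mid, h_post, sharpness=100.0):
    """Return (height loss, soft probabilities over grades 0..3).

    Assumption: the reference height is the largest of the anterior, middle
    and posterior heights of the same vertebra. `sharpness` controls how
    closely the sigmoids approximate the hard clinical cut-offs.
    """
    heights = np.array([h_ant, h_mid, h_post], dtype=float)
    loss = 1.0 - heights.min() / heights.max()
    # Smooth probability that the loss exceeds each threshold.
    exceed = sigmoid(sharpness * (loss - GENANT_THRESHOLDS))
    # Turn cumulative exceedance probabilities into per-grade probabilities.
    cumulative = np.concatenate(([1.0], exceed, [0.0]))
    probs = cumulative[:-1] - cumulative[1:]
    return loss, probs


loss, probs = soft_genant_grade(14.0, 20.0, 20.0)
print(f"height loss {loss:.2f}, most likely grade {int(np.argmax(probs))}")
```

Because the thresholds enter through smooth functions, gradients can flow from the grade probabilities back to the predicted heights, which is what makes a rule of this kind trainable end to end.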

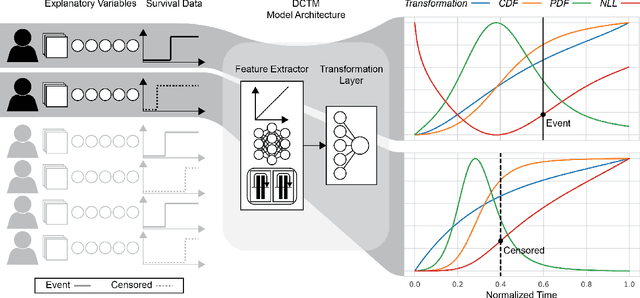

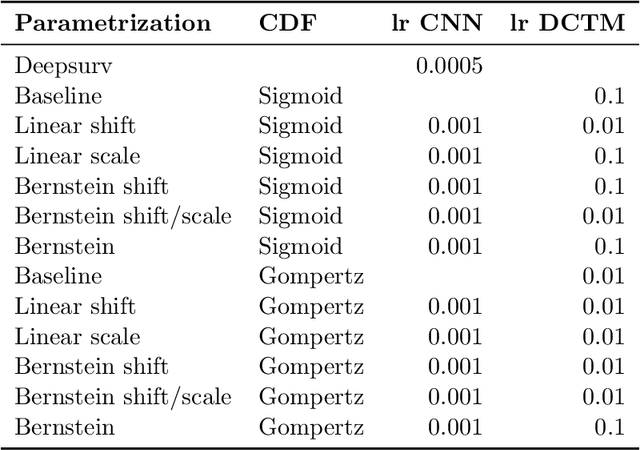

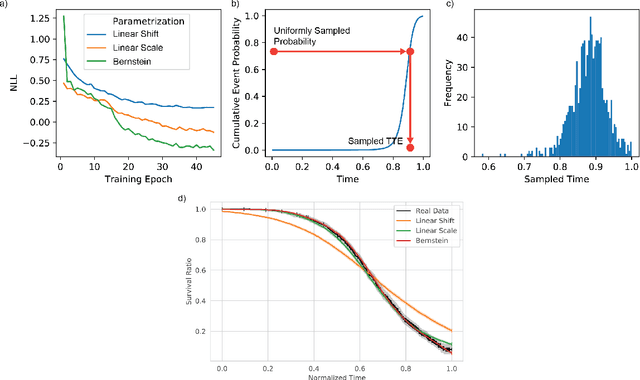

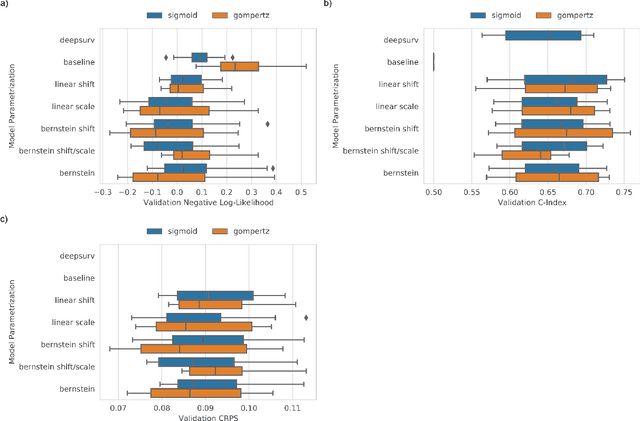

Deep conditional transformation models for survival analysis

Oct 20, 2022

Abstract: An ever-increasing number of clinical trials feature a time-to-event outcome and record non-tabular patient data, such as magnetic resonance imaging or text data in the form of electronic health records. Recently, several neural-network-based solutions have been proposed, some of which are binary classifiers. Parametric, distribution-free approaches which make full use of survival time and censoring status have not received much attention. We present deep conditional transformation models (DCTMs) for survival outcomes as a unifying approach to parametric and semiparametric survival analysis. DCTMs allow the specification of non-linear and non-proportional hazards for both tabular and non-tabular data and extend to all types of censoring and truncation. On real and semi-synthetic data, we show that DCTMs compete with state-of-the-art DL approaches to survival analysis.
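
To make "full use of survival time and censoring status" concrete, here is a minimal sketch of a right-censored transformation-model log-likelihood. It is not the DCTM implementation: it assumes a very simple transformation h(t) = a + b log t with a minimum extreme value reference distribution (which reduces to a Weibull model), and the function name and parameters are hypothetical. In a deep model, h would instead be parameterized by a neural network.

```python
import numpy as np


def censored_loglik(times, events, a, b):
    """Log-likelihood of right-censored data under F(t) = F_Z(h(t)).

    Assumptions: h(t) = a + b * log(t) with b > 0, and F_Z is the minimum
    extreme value distribution, F_Z(z) = 1 - exp(-exp(z)).
    `events` is 1 for an observed event and 0 for a right-censored time.
    """
    times = np.asarray(times, dtype=float)
    events = np.asarray(events, dtype=float)
    h = a + b * np.log(times)
    # Observed event: log f_Z(h(t)) + log h'(t), with h'(t) = b / t.
    log_density = h - np.exp(h) + np.log(b) - np.log(times)
    # Censored observation: log S(t) = log(1 - F_Z(h(t))) = -exp(h(t)).
    log_survival = -np.exp(h)
    return float(np.sum(events * log_density + (1.0 - events) * log_survival))


# With a = 0 and b = 1 the model is a unit-rate exponential distribution.
print(censored_loglik([1.0, 2.0], [1, 0], a=0.0, b=1.0))
```

Events contribute the density and censored observations contribute the survival function, so no observation is discarded or reduced to a binary label.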

H&E-based Computational Biomarker Enables Universal EGFR Screening for Lung Adenocarcinoma

Jun 21, 2022

Abstract: Lung cancer is the leading cause of cancer death worldwide, with lung adenocarcinoma being the most prevalent form of lung cancer. EGFR-positive lung adenocarcinomas have been shown to have high response rates to TKI therapy, underscoring the essential nature of molecular testing for lung cancers. Although current guidelines consider testing necessary, a large portion of patients are not routinely profiled, resulting in millions of people not receiving the optimal treatment for their lung cancer. Sequencing is the gold standard for molecular testing of EGFR mutations, but it can take several weeks for results to come back, which is not ideal in a time-constrained scenario. The development of alternative screening tools capable of detecting EGFR mutations quickly and cheaply while preserving tissue for sequencing could help reduce the number of sub-optimally treated patients. We propose a multi-modal approach which integrates pathology images and clinical variables to predict EGFR mutational status, achieving an AUC of 84% on the largest clinical cohort to date. Such a computational model could be deployed at scale at little additional cost. Its clinical application could reduce the number of patients who receive sub-optimal treatments by 53.1% in China, and up to 96.6% in the US.

Accelerating Prostate Diffusion Weighted MRI using Guided Denoising Convolutional Neural Network: Retrospective Feasibility Study

Jun 30, 2020

Abstract: Purpose: To investigate the feasibility of accelerating prostate diffusion-weighted imaging (DWI) by reducing the number of acquired averages and denoising the resulting image using a proposed guided denoising convolutional neural network (DnCNN). Materials and Methods: Raw data from prostate DWI scans were retrospectively gathered (between July 2018 and July 2019) from six single-vendor MRI scanners. 118 data sets were used for training and validation (age: 64.3 ± 8 years) and 37 for testing (age: 65.1 ± 7.3 years). High b-value diffusion-weighted (hb-DW) data were reconstructed into noisy images using two averages and reference images using all sixteen averages. A conventional DnCNN was modified into a guided DnCNN, which uses the low b-value DWI image as a guidance input. Quantitative and qualitative reader evaluations were performed on the denoised hb-DW images. A cumulative link mixed regression model was used to compare the readers' scores. The agreement between the apparent diffusion coefficient (ADC) maps (denoised vs reference) was analyzed using Bland-Altman analysis. Results: Compared to the DnCNN, the guided DnCNN produced denoised hb-DW images with higher peak signal-to-noise ratio and structural similarity index and lower normalized mean square error (p < 0.001). Compared to the reference images, the denoised images received higher image quality scores (p < 0.0001). The ADC values based on the denoised hb-DW images were in good agreement with the reference ADC values. Conclusion: Accelerating prostate DWI by reducing the number of acquired averages and denoising the resulting image using the proposed guided DnCNN is technically feasible.
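
The quantitative measures named in this abstract can be sketched in a few lines. The definitions below are the standard textbook ones and are assumptions about what the study computed: the exact normalization of the mean square error and the data range used for the peak signal-to-noise ratio are not stated in the abstract, and the function names are hypothetical.

```python
import numpy as np


def psnr(image, reference, data_range):
    """Peak signal-to-noise ratio in dB for a given intensity range."""
    mse = np.mean((np.asarray(image, float) - np.asarray(reference, float)) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)


def nmse(image, reference):
    """Squared error normalized by the energy of the reference image."""
    image = np.asarray(image, float)
    reference = np.asarray(reference, float)
    return np.sum((image - reference) ** 2) / np.sum(reference ** 2)


def bland_altman(a, b):
    """Return (bias, lower limit, upper limit) of agreement between a and b.

    Limits of agreement are bias +/- 1.96 sample standard deviations of the
    paired differences.
    """
    diff = np.asarray(a, float) - np.asarray(b, float)
    bias = diff.mean()
    spread = 1.96 * diff.std(ddof=1)
    return bias, bias - spread, bias + spread


reference = np.array([1.0, 2.0, 3.0, 4.0])
denoised = reference + 0.1
print(psnr(denoised, reference, data_range=4.0), nmse(denoised, reference))
print(bland_altman([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8]))
```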

Dynamic PET cardiac and parametric image reconstruction: a fixed-point proximity gradient approach using patch-based DCT and tensor SVD regularization

Jun 13, 2019

Abstract: Our aim was to enhance the visual quality and quantitative accuracy of dynamic positron emission tomography (PET) uptake images by improved image reconstruction, using sophisticated sparse penalty models that incorporate both 2D spatial + 1D temporal (3DT) information. We developed two new 3DT PET reconstruction algorithms, incorporating different temporal and spatial penalties based on the discrete cosine transform (DCT) with patches and the tensor nuclear norm (TNN) with patches, and compared them to frame-by-frame methods: conventional 2D ordered subsets expectation maximization (OSEM) with post-filtering, 2D-DCT, and 2D-TNN. A 3DT brain phantom with kinetic uptake (2-tissue model) and a moving 3DT cardiac/lung phantom were simulated and reconstructed. For the cardiac/lung phantom, an additional cardiac-gated 2D-OSEM set was reconstructed. The structural similarity index (SSIM) and relative root mean squared error (rRMSE) relative to ground truth were investigated. The image-derived left ventricular (LV) volume for the cardiac/lung images was found by region growing, and parametric images of the brain phantom were calculated. For the cardiac/lung phantom, 3DT-TNN yielded optimal images, and 3DT-DCT was best for the brain phantom. The optimal LV volume from the 3DT-TNN images was on average 11 and 55 percentage points closer to the true value compared to cardiac-gated 2D-OSEM and 2D-OSEM, respectively. Compared to 2D-OSEM, parametric images based on 3DT-DCT images generally had smaller bias and higher SSIM. Our novel methods that incorporate both 2D spatial and 1D temporal penalties produced dynamic PET images of higher quality than conventional 2D methods, without the need for post-filtering. Breathing and cardiac motion were simultaneously captured without the need for respiratory or cardiac gating. LV volumes were better recovered, and subsequently fitted parametric images were generally less biased and of higher quality.
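
One building block of proximal-gradient methods with a DCT sparsity penalty is soft-thresholding in the transform domain. The sketch below shows that step for a 1D temporal signal only. It is an illustration under stated assumptions, not the reconstruction algorithm of the paper: the patch extraction, the PET forward model, and the tensor nuclear norm penalty are omitted, and the function names are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)  # rescale the DC row so that the rows are orthonormal
    return c


def soft_threshold(x, lam):
    """Shrink every entry of x toward zero by lam."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)


def prox_dct_l1(signal, lam):
    """Proximal operator of lam * ||C s||_1 along the last axis.

    Because C is orthonormal, the operator is: transform, shrink, invert.
    """
    c = dct_matrix(signal.shape[-1])
    coeffs = signal @ c.T                   # forward DCT along the last axis
    return soft_threshold(coeffs, lam) @ c  # inverse DCT of shrunk coefficients


# Small noisy coefficients are removed while the smooth trend is kept.
rng = np.random.default_rng(0)
curve = np.linspace(1.0, 2.0, 16) + 0.05 * rng.standard_normal(16)
print(prox_dct_l1(curve, lam=0.1))
```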

DeepPET: A deep encoder-decoder network for directly solving the PET reconstruction inverse problem

Sep 25, 2018

Abstract: Positron emission tomography (PET) is a cornerstone of modern radiology. The ability to detect cancer and metastases in whole-body scans fundamentally changed cancer diagnosis and treatment. One of the main bottlenecks in the clinical application is the time it takes to reconstruct the anatomical image from the deluge of data in PET imaging. State-of-the-art methods based on expectation maximization can take hours for a single patient and depend on manual fine-tuning. This results not only in a financial burden for hospitals but, more importantly, leads to less efficient patient handling, evaluation, and ultimately diagnosis and treatment for patients. To overcome this problem we present a novel PET image reconstruction technique based on a deep convolutional encoder-decoder network that takes PET sinogram data as input and directly outputs full PET images. Using realistic simulated data, we demonstrate that our network is able to reconstruct images >100 times faster, and with comparable image quality (in terms of root mean squared error) relative to conventional iterative reconstruction techniques.
