Hwanwoo Kim

Implicit Q-Learning and SARSA: Liberating Policy Control from Step-Size Calibration

Jan 26, 2026

Abstract: Q-learning and SARSA are foundational reinforcement learning algorithms whose practical success depends critically on step-size calibration. Step-sizes that are too large can cause numerical instability, while step-sizes that are too small can lead to slow progress. We propose implicit variants of Q-learning and SARSA that reformulate their iterative updates as fixed-point equations. This yields an adaptive step-size adjustment that scales inversely with feature norms, providing automatic regularization without manual tuning. Our non-asymptotic analyses demonstrate that implicit methods maintain stability over significantly broader step-size ranges. Under favorable conditions, they permit arbitrarily large step-sizes while achieving comparable convergence rates. Empirical validation across benchmark environments spanning discrete and continuous state spaces shows that implicit Q-learning and SARSA exhibit substantially reduced sensitivity to step-size selection, achieving stable performance with step-sizes that would cause standard methods to fail.
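To make the "step-size that scales inversely with feature norms" concrete, here is a minimal sketch of one way an implicit Q-learning step could look with linear function approximation. It is an illustration under stated assumptions, not the paper's exact algorithm: the function names, the linear feature model, and the choice to make only the current-state prediction implicit (keeping the bootstrap target at the old weights) are all assumptions made here. Under those assumptions the fixed-point equation has a closed form, and the effective step size becomes `alpha / (1 + alpha * ||phi||^2)`.

```python
import numpy as np


def td_error(theta, phi_sa, reward, phi_next_all, gamma):
    """Q-learning TD error for linear features (hypothetical helper)."""
    # Bootstrap target uses the greedy action value at the next state.
    target = reward + gamma * np.max(phi_next_all @ theta)
    return target - phi_sa @ theta


def standard_q_step(theta, phi_sa, reward, phi_next_all, alpha, gamma=0.99):
    """Usual explicit update: theta + alpha * delta * phi."""
    delta = td_error(theta, phi_sa, reward, phi_next_all, gamma)
    return theta + alpha * delta * phi_sa


def implicit_q_step(theta, phi_sa, reward, phi_next_all, alpha, gamma=0.99):
    """Implicit-style update (assumed form): the prediction phi^T theta is
    evaluated at the NEW weights, which shrinks the step by 1 + alpha*||phi||^2."""
    delta = td_error(theta, phi_sa, reward, phi_next_all, gamma)
    effective_alpha = alpha / (1.0 + alpha * (phi_sa @ phi_sa))
    return theta + effective_alpha * delta * phi_sa


if __name__ == "__main__":
    theta = np.zeros(2)
    phi = np.array([2.0, 0.0])        # features of the visited (s, a)
    phi_next = np.zeros((2, 2))       # next state treated as terminal-like
    for alpha in (0.1, 10.0):
        explicit = standard_q_step(theta, phi, 1.0, phi_next, alpha)
        implicit = implicit_q_step(theta, phi, 1.0, phi_next, alpha)
        print(alpha, phi @ explicit, phi @ implicit)
```

With a fixed target, the implicit step moves the prediction only a fraction `alpha*||phi||^2 / (1 + alpha*||phi||^2)` of the way toward it, so it cannot overshoot however large `alpha` is, whereas the explicit step overshoots as soon as `alpha * ||phi||^2` exceeds 1.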

Bayesian Optimization with Inexact Acquisition: Is Random Grid Search Sufficient?

Jun 13, 2025

Abstract: Bayesian optimization (BO) is a widely used iterative algorithm for optimizing black-box functions. Each iteration requires maximizing an acquisition function, such as the upper confidence bound (UCB) or a sample path from the Gaussian process (GP) posterior, as in Thompson sampling (TS). However, finding an exact solution to these maximization problems is often intractable or computationally expensive. To reflect this practical reality, we study the effect of inexact maximizers of the acquisition function. Defining a measure of inaccuracy in acquisition solutions, we establish cumulative regret bounds for both GP-UCB and GP-TS without requiring exact solutions of acquisition function maximization. Our results show that under appropriate conditions on accumulated inaccuracy, inexact BO algorithms can still achieve sublinear cumulative regret. Motivated by these findings, we provide both theoretical justification and numerical validation for random grid search as an effective and computationally efficient acquisition function solver.
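As a rough illustration of random grid search used as an inexact acquisition solver, the sketch below draws uniform random candidates in a box and returns the one with the largest UCB value. The names `random_grid_argmax` and `make_ucb`, the box-shaped domain, and the toy posterior mean and standard deviation are assumptions for this example; a real BO loop would plug in the GP posterior instead, and the paper's candidate schedule and exploration weights may differ.

```python
import numpy as np


def random_grid_argmax(acquisition, bounds, num_candidates, rng):
    """Approximately maximize `acquisition` over a box by random search.

    acquisition: maps an (n, d) array of points to an (n,) array of values.
    bounds: sequence of (low, high) pairs, one per dimension.
    """
    bounds = np.asarray(bounds, dtype=float)
    low, high = bounds[:, 0], bounds[:, 1]
    # Uniform random "grid" over the box; no gradients or restarts needed.
    candidates = rng.uniform(low, high, size=(num_candidates, len(low)))
    values = acquisition(candidates)
    best = int(np.argmax(values))
    return candidates[best], values[best]


def make_ucb(mean_fn, std_fn, beta):
    """UCB acquisition: posterior mean plus sqrt(beta) times posterior std."""
    return lambda x: mean_fn(x) + np.sqrt(beta) * std_fn(x)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy stand-ins for a GP posterior (hypothetical, for illustration only).
    mean_fn = lambda x: -np.sum((x - 0.3) ** 2, axis=1)
    std_fn = lambda x: 0.1 * np.ones(len(x))
    ucb = make_ucb(mean_fn, std_fn, beta=4.0)
    x_best, v_best = random_grid_argmax(ucb, [(0, 1), (0, 1)], 2000, rng)
    print(x_best, v_best)
```

The returned point is generally not the exact maximizer; the gap between its acquisition value and the true maximum is the kind of per-iteration inaccuracy the abstract refers to.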

Stabilizing Temporal Difference Learning via Implicit Stochastic Approximation

May 02, 2025

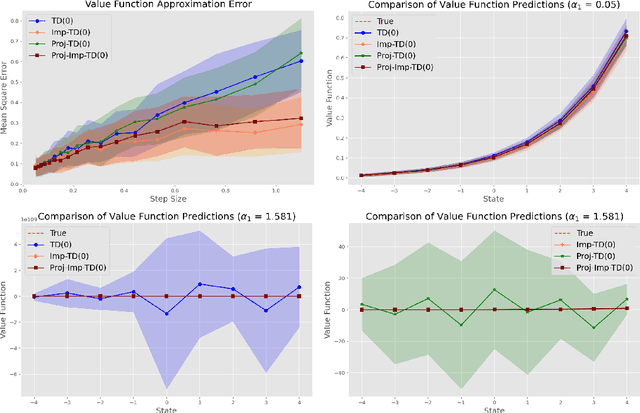

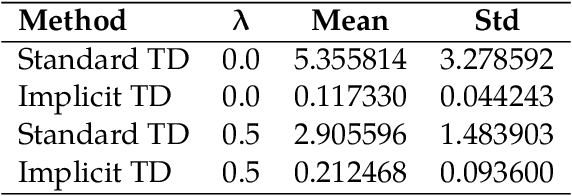

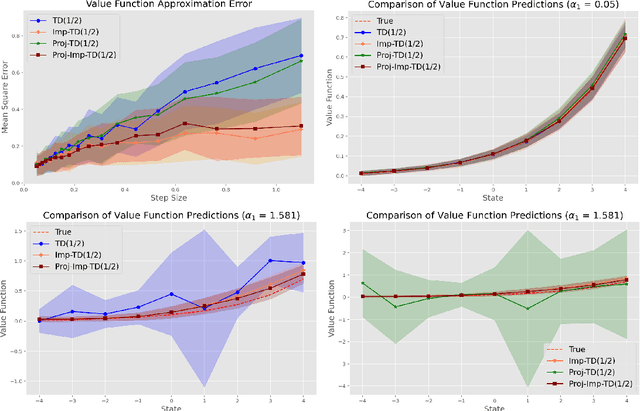

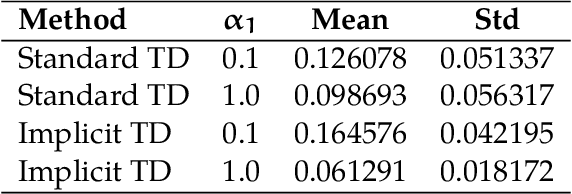

Abstract: Temporal Difference (TD) learning is a foundational algorithm in reinforcement learning (RL). For nearly forty years, TD learning has served as a workhorse for applied RL as well as a building block for more complex and specialized algorithms. However, despite its widespread use, it is not without drawbacks, the most prominent being its sensitivity to step size. A poor choice of step size can dramatically inflate the error of value estimates and slow convergence. Consequently, in practice, researchers must use trial and error to identify a suitable step size, a process that can be tedious and time-consuming. As an alternative, we propose implicit TD algorithms that reformulate TD updates into fixed-point equations. These updates are more stable and less sensitive to step size without sacrificing computational efficiency. Moreover, our theoretical analysis establishes asymptotic convergence guarantees and finite-time error bounds. Our results demonstrate the robustness and practicality of implicit TD algorithms for modern RL tasks, establishing implicit TD as a versatile tool for policy evaluation and value approximation.
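The claim that a fixed-point reformulation costs no extra computation can be illustrated with a short derivation. The setting below is an assumption made for illustration (linear value approximation $V_\theta(s) = \phi(s)^\top \theta$, constant step size $\alpha$, discount $\gamma$, and only the current-state prediction made implicit); the paper's exact formulation may differ.

$$
\begin{aligned}
\text{standard TD(0):}\quad & \theta_{n+1} = \theta_n + \alpha\,\bigl(r_n + \gamma\,\phi_{n+1}^\top\theta_n - \phi_n^\top\theta_n\bigr)\,\phi_n = \theta_n + \alpha\,\delta_n\,\phi_n, \\
\text{implicit form:}\quad & \theta_{n+1} = \theta_n + \alpha\,\bigl(r_n + \gamma\,\phi_{n+1}^\top\theta_n - \phi_n^\top\theta_{n+1}\bigr)\,\phi_n, \\
\text{take } \phi_n^\top \text{ of both sides:}\quad & \phi_n^\top\theta_{n+1} = \frac{\phi_n^\top\theta_n + \alpha\,\|\phi_n\|^2\,\bigl(r_n + \gamma\,\phi_{n+1}^\top\theta_n\bigr)}{1 + \alpha\,\|\phi_n\|^2}, \\
\text{substitute back:}\quad & \theta_{n+1} = \theta_n + \frac{\alpha}{1 + \alpha\,\|\phi_n\|^2}\,\delta_n\,\phi_n .
\end{aligned}
$$

Under these assumptions the implicit update is the standard update with the step size divided by $1 + \alpha\,\|\phi_n\|^2$, so it needs one extra inner product per step and the effective step size stays below $1/\|\phi_n\|^2$ for any $\alpha > 0$.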

Bayesian Optimization with Noise-Free Observations: Improved Regret Bounds via Random Exploration

Jan 30, 2024

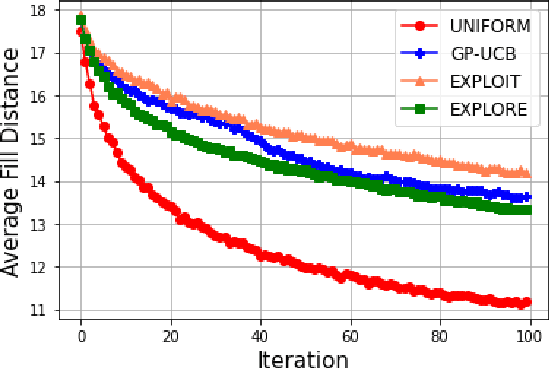

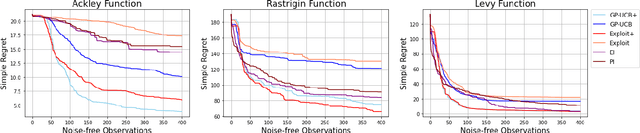

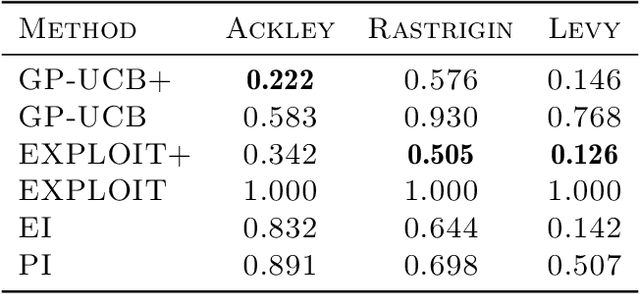

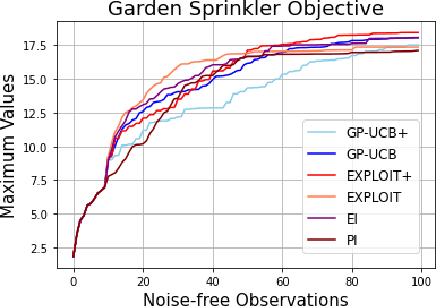

Abstract: This paper studies Bayesian optimization with noise-free observations. We introduce new algorithms rooted in scattered data approximation that rely on a random exploration step to ensure that the fill-distance of query points decays at a near-optimal rate. Our algorithms retain the ease of implementation of the classical GP-UCB algorithm and satisfy cumulative regret bounds that nearly match those conjectured in arXiv:2002.05096, hence solving a COLT open problem. Furthermore, the new algorithms outperform GP-UCB and other popular Bayesian optimization strategies in several examples.

ReTaSA: A Nonparametric Functional Estimation Approach for Addressing Continuous Target Shift

Jan 29, 2024

Abstract: The presence of distribution shifts poses a significant challenge for deploying modern machine learning models in real-world applications. This work focuses on the target shift problem in a regression setting (Zhang et al., 2013; Nguyen et al., 2016). More specifically, the target variable y (also known as the response variable), which is continuous, has different marginal distributions in the training (source) and testing domains, while the conditional distribution of features x given y remains the same. While most literature focuses on classification tasks with a finite target space, the regression problem has an infinite-dimensional target space, which makes many of the existing methods inapplicable. In this work, we show that the continuous target shift problem can be addressed by estimating the importance weight function from an ill-posed integral equation. We propose a nonparametric regularized approach named ReTaSA to solve the ill-posed integral equation and provide theoretical justification for the estimated importance weight function. The effectiveness of the proposed method is demonstrated with extensive numerical studies on synthetic and real-world datasets.
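One way to see where an integral equation for the importance weight comes from is the short derivation below. The notation is an assumption made here ($p_s$ and $p_t$ for source and target densities, $\omega(y) = p_t(y)/p_s(y)$ for the importance weight), and the paper's own formulation and regularization may differ. It uses only the target shift assumption that $p(x \mid y)$ is the same in both domains.

$$
\begin{aligned}
p_t(x) &= \int p(x \mid y)\,p_t(y)\,dy = \int p(x \mid y)\,\omega(y)\,p_s(y)\,dy = \int p_s(x, y)\,\omega(y)\,dy, \\
\frac{p_t(x)}{p_s(x)} &= \int p_s(y \mid x)\,\omega(y)\,dy = \mathbb{E}_s\bigl[\omega(Y) \mid X = x\bigr].
\end{aligned}
$$

The unknown $\omega$ appears only inside the integral, so recovering it from the feature density ratio on the left is an integral equation of the first kind, the type of problem that is typically ill-posed and calls for regularization.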

A Variational Inference Approach to Inverse Problems with Gamma Hyperpriors

Nov 29, 2021

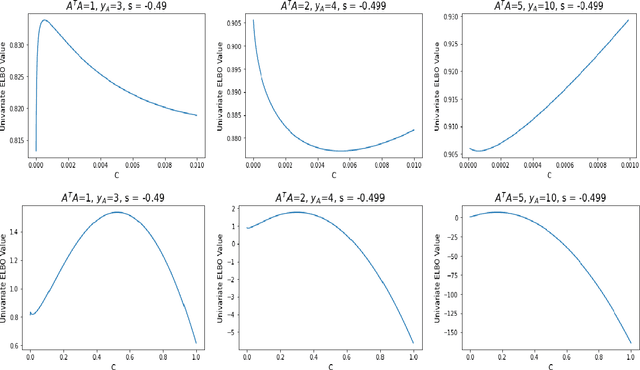

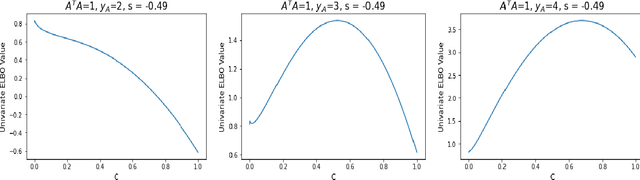

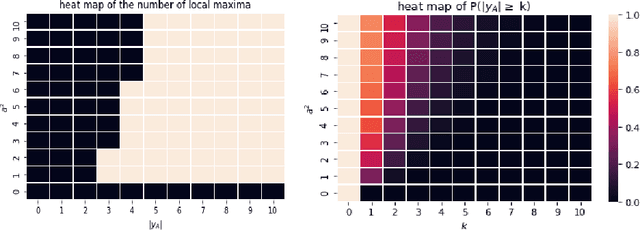

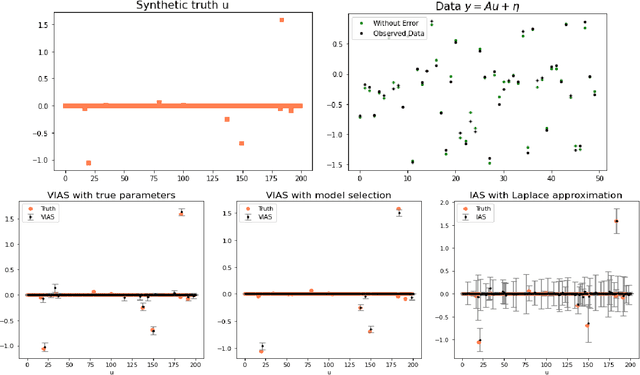

Abstract: Hierarchical models with gamma hyperpriors provide a flexible, sparsity-promoting framework to bridge $L^1$ and $L^2$ regularizations in Bayesian formulations of inverse problems. Despite the Bayesian motivation for these models, existing methodologies are limited to maximum a posteriori estimation. The potential to perform uncertainty quantification has not yet been realized. This paper introduces a variational iterative alternating scheme for hierarchical inverse problems with gamma hyperpriors. The proposed variational inference approach yields accurate reconstruction, provides meaningful uncertainty quantification, and is easy to implement. In addition, it lends itself naturally to model selection for the choice of hyperparameters. We illustrate the performance of our methodology in several computed examples, including a deconvolution problem and sparse identification of dynamical systems from time series data.
