Hunter Goforth

Joint Pose and Shape Estimation of Vehicles from LiDAR Data

Sep 08, 2020

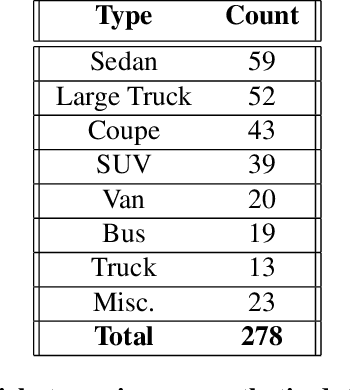

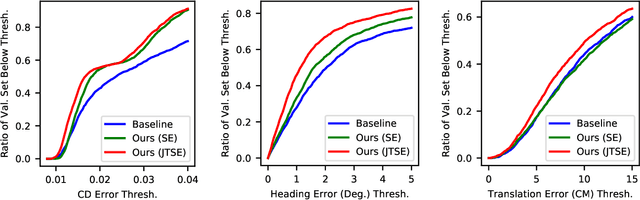

Abstract: We address the problem of estimating the pose and shape of vehicles from LiDAR scans, a common problem faced by the autonomous vehicle community. Recent work has tended to address pose and shape estimation in isolation, despite the inherent connection between the two. We investigate a method of jointly estimating shape and pose where a single encoding is learned from which shape and pose may be decoded in an efficient yet effective manner. We additionally introduce a novel joint pose and shape loss, and show that this joint training method produces better results than independently trained pose and shape estimators. We evaluate our method on both synthetic data and real-world data, and show superior performance against a state-of-the-art baseline.

One Framework to Register Them All: PointNet Encoding for Point Cloud Alignment

Dec 12, 2019

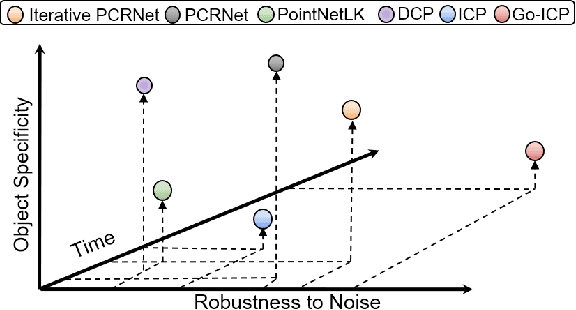

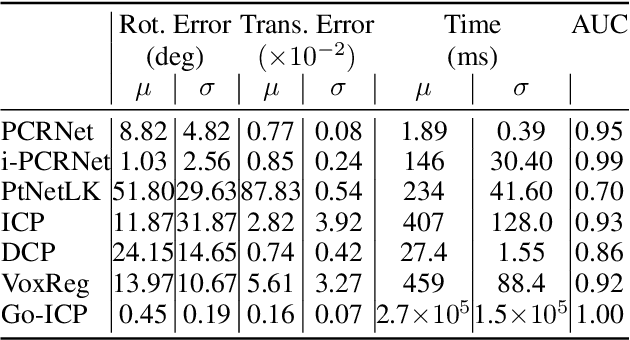

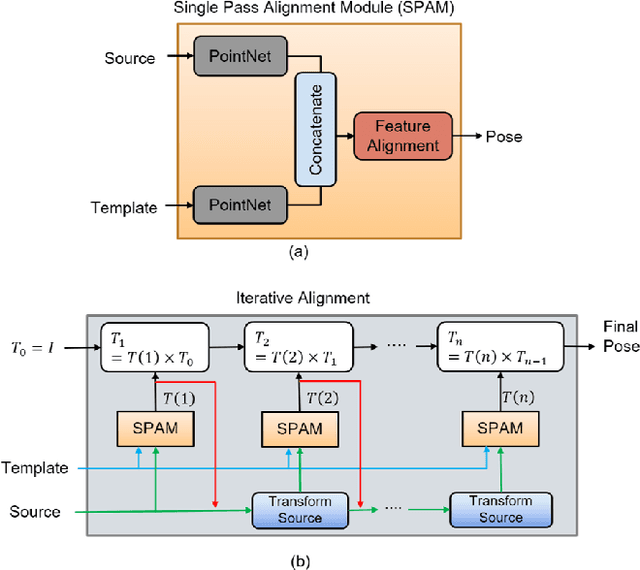

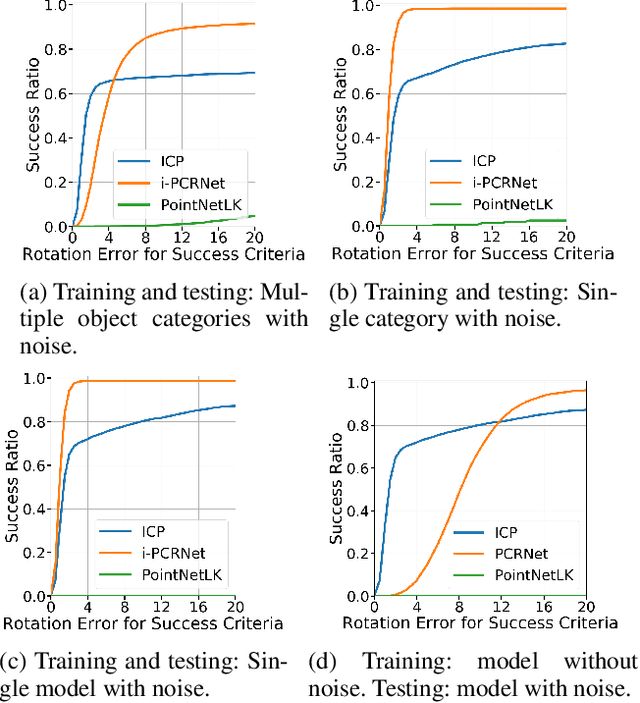

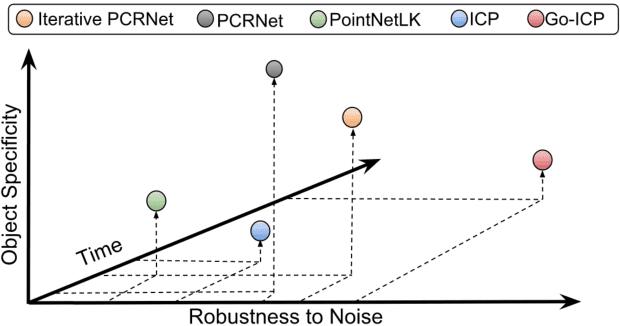

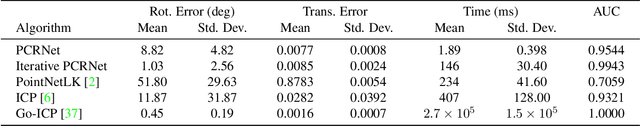

Abstract: PointNet has recently emerged as a popular representation for unstructured point cloud data, allowing application of deep learning to tasks such as object detection, segmentation and shape completion. However, recent work in the literature has shown the sensitivity of the PointNet representation to pose misalignment. This paper presents a novel framework that uses PointNet encoding to align point clouds and perform registration for applications such as 3D reconstruction, tracking and pose estimation. We develop a framework that compares PointNet features of template and source point clouds to find the transformation that aligns them accurately. In doing so, we avoid the computationally expensive correspondence-finding steps that are central to popular registration methods such as ICP and its variants. Depending on the prior information about the shape of the object formed by the point clouds, our framework can produce approaches that are shape-specific or general to unseen shapes. Our framework produces approaches that are robust to noise and initial misalignment in data and work well with sparse as well as partial point clouds. We perform extensive simulation and real-world experiments to validate the efficacy of our approach and compare the performance with state-of-the-art approaches. Code is available at https://github.com/vinits5/pointnet-registrationframework.
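The idea of comparing PointNet features of a template and a source cloud can be illustrated with a toy sketch. It is not the authors' network: the weights below are fixed random vectors standing in for a trained per-point MLP, and every function name (`make_weights`, `global_feature`, `rotate_z`, `feature_distance`) is hypothetical. It only shows that a max-pooled global feature ignores point ordering but changes under a pose offset, which is the discrepancy a registration method of this kind could try to drive to zero without point correspondences.

```python
import math
import random


def make_weights(num_features, seed=0):
    """Fixed random 3-D weight vectors (assumption: stand-in for a trained per-point MLP)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(num_features)]


def global_feature(points, weights):
    """PointNet-style encoding: shared per-point map, then max-pool over all points."""
    feature = []
    for w in weights:
        # ReLU(w . p) for every point, followed by a symmetric max over the set.
        responses = [max(0.0, w[0] * x + w[1] * y + w[2] * z) for x, y, z in points]
        feature.append(max(responses))
    return feature


def rotate_z(points, angle_rad):
    """Rotate every point about the z-axis by angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


def feature_distance(f_a, f_b):
    """Euclidean distance between two global feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f_a, f_b)))


rng = random.Random(1)
# Anisotropic random cloud so that a rotation about z visibly changes it.
template = [(rng.uniform(-1, 1), rng.uniform(-2, 2), rng.uniform(-3, 3)) for _ in range(200)]
weights = make_weights(num_features=16)

shuffled = template[:]
rng.shuffle(shuffled)                                # same points, different order
source = rotate_z(template, math.radians(30.0))      # same shape, misaligned pose

f_template = global_feature(template, weights)
d_shuffled = feature_distance(f_template, global_feature(shuffled, weights))
d_rotated = feature_distance(f_template, global_feature(source, weights))
print("distance after shuffling:", d_shuffled)
print("distance after 30 degree rotation:", d_rotated)
```

Reordering the points leaves the feature unchanged, while the rotated copy yields a nonzero feature distance. A learned registration model would take the two feature vectors as input and predict the aligning transformation; that regression step is left out here.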

PCRNet: Point Cloud Registration Network using PointNet Encoding

Aug 21, 2019

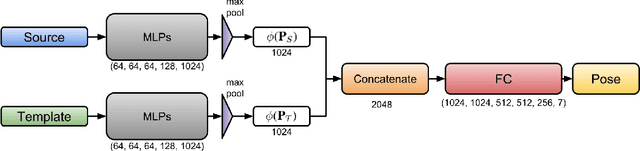

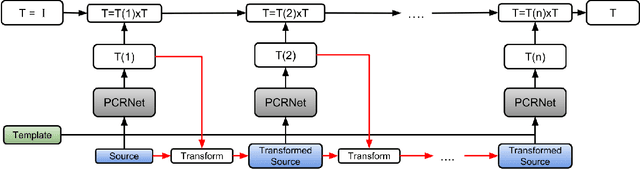

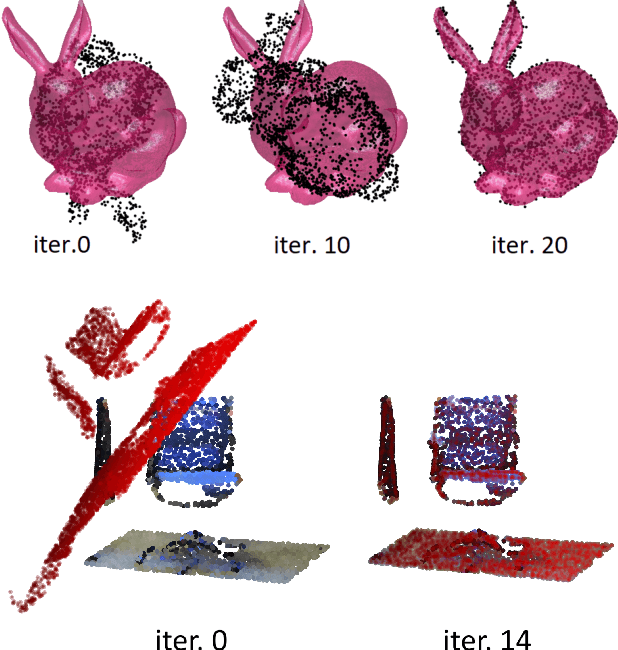

Abstract: PointNet has recently emerged as a popular representation for unstructured point cloud data, allowing application of deep learning to tasks such as object detection, segmentation and shape completion. However, recent work in the literature has shown the sensitivity of the PointNet representation to pose misalignment. This paper presents a novel framework that uses the PointNet representation to align point clouds and perform registration for applications such as tracking, 3D reconstruction and pose estimation. We develop a framework that compares PointNet features of template and source point clouds to find the transformation that aligns them accurately. Depending on the prior information about the shape of the object formed by the point clouds, our framework can produce approaches that are shape-specific or general to unseen shapes. The shape-specific approach uses a Siamese architecture with fully connected (FC) layers and is robust to noise and initial misalignment in data. We perform extensive simulation and real-world experiments to validate the efficacy of our approach and compare the performance with state-of-the-art approaches.

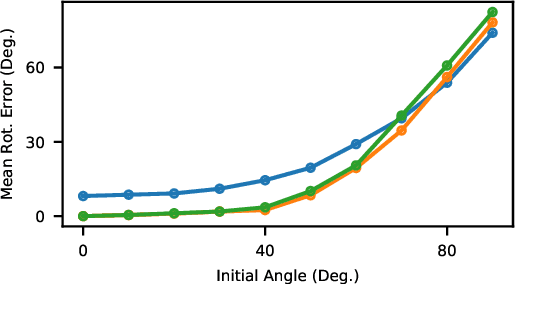

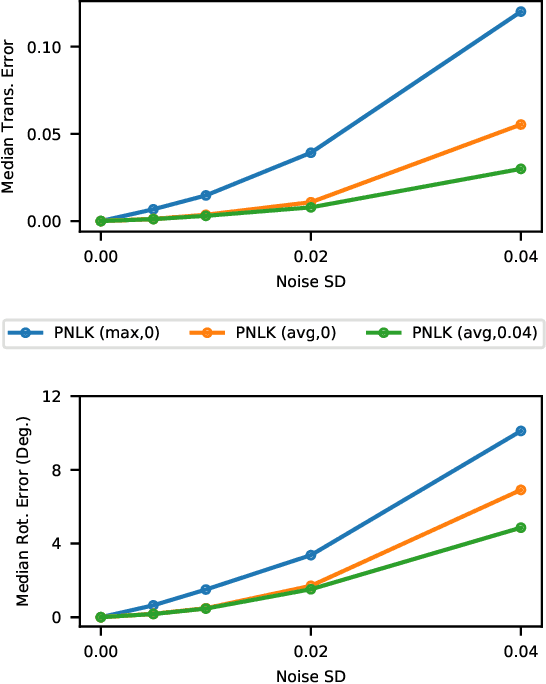

PointNetLK: Robust & Efficient Point Cloud Registration using PointNet

Apr 04, 2019

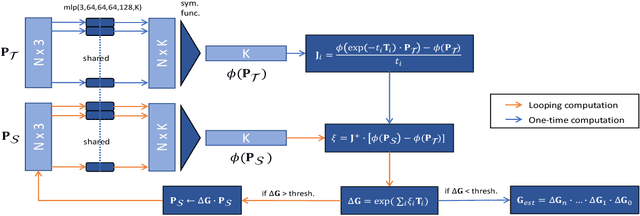

Abstract: PointNet has revolutionized how we think about representing point clouds. For classification and segmentation tasks, the approach and its subsequent extensions are state-of-the-art. To date, the successful application of PointNet to point cloud registration has remained elusive. In this paper we argue that PointNet itself can be thought of as a learnable "imaging" function. As a consequence, classical vision algorithms for image alignment can be applied to the problem, namely the Lucas & Kanade (LK) algorithm. Our central innovations stem from: (i) how to modify the LK algorithm to accommodate the PointNet imaging function, and (ii) unrolling PointNet and the LK algorithm into a single trainable recurrent deep neural network. We describe the architecture, and compare its performance against the state of the art in common registration scenarios. The architecture offers some remarkable properties, including generalization across shape categories and computational efficiency, opening up new paths of exploration for the application of deep learning to point cloud registration. Code and videos are available at https://github.com/hmgoforth/PointNetLK.
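Treating PointNet as an "imaging" function suggests an LK-style update of roughly the following form. This is a sketch in assumed notation, not a formulation quoted from the abstract: $\phi$ denotes the PointNet global feature, $P_T$ and $P_S$ the template and source clouds, $\xi \in \mathbb{R}^6$ the twist parameters with generators $T_i$, and $J^{+}$ the pseudo-inverse of the feature Jacobian. The inverse-compositional arrangement, where the Jacobian is computed once on the template, is also an assumption here.

```latex
\begin{aligned}
\phi(P_S) &= \phi\big(G^{-1} \cdot P_T\big),
  \qquad G = \exp\Big(\sum_{i=1}^{6} \xi_i T_i\Big) \\
\phi(P_S) &\approx \phi(P_T) + J\,\xi,
  \qquad J = \frac{\partial}{\partial \xi}\,
  \phi\big(G^{-1} \cdot P_T\big)\Big|_{\xi = 0} \\
\xi &= J^{+}\,\big[\phi(P_S) - \phi(P_T)\big] \\
P_S &\leftarrow \exp\Big(\sum_{i=1}^{6} \xi_i T_i\Big) \cdot P_S
\end{aligned}
```

The last two lines would be repeated until the update $\xi$ becomes small, and the estimated transformation is the composition of the incremental updates. Unrolling these iterations is what would make the whole procedure trainable as a recurrent network.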

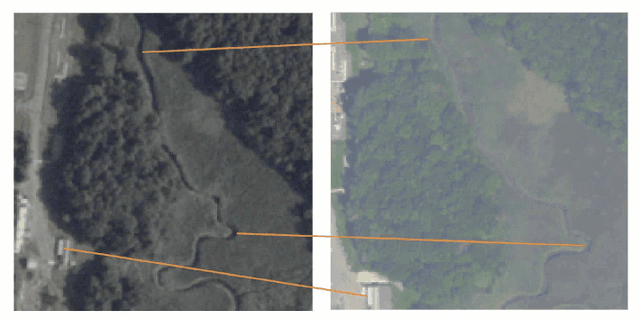

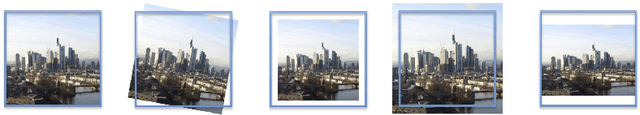

Aligning Across Large Gaps in Time

Mar 22, 2018

Abstract: We present a method of temporally-invariant image registration for outdoor scenes, with invariance across time of day, across seasonal variations, and across decade-long periods, for low- and high-texture scenes. Our method can be useful for applications in remote sensing, GPS-denied UAV localization, 3D reconstruction, and many others. Our method leverages a recently proposed approach to image registration, where fully-convolutional neural networks are used to create feature maps which can be registered using the Inverse Compositional Lucas-Kanade (ICLK) algorithm. We show that invariance learned from satellite imagery can transfer to time-lapse data captured by webcams mounted on buildings near ground level.
