Hung-Yu Kao

From Persona to Person: Enhancing the Naturalness with Multiple Discourse Relations Graph Learning in Personalized Dialogue Generation

Jun 13, 2025

Abstract:In dialogue generation, the naturalness of responses is crucial for effective human-machine interaction. Personalized response generation poses even greater challenges, as the responses must remain coherent and consistent with the user's personal traits or persona descriptions. We propose MUDI ($\textbf{Mu}$ltiple $\textbf{Di}$scourse Relations Graph Learning) for personalized dialogue generation. We utilize a Large Language Model to assist in annotating discourse relations and to transform dialogue data into structured dialogue graphs. Our graph encoder, the proposed DialogueGAT model, then captures implicit discourse relations within this structure, along with persona descriptions. During the personalized response generation phase, novel coherence-aware attention strategies are implemented to enhance the decoder's consideration of discourse relations. Our experiments demonstrate significant improvements in the quality of personalized responses, thus resembling human-like dialogue exchanges.

MAPLE: Enhancing Review Generation with Multi-Aspect Prompt LEarning in Explainable Recommendation

Aug 19, 2024

Abstract:The explainable recommendation task takes a user-item pair as input and outputs explanations that justify why the item is recommended to the user. Many models treat review generation as a proxy for explainable recommendation. Although they are able to generate fluent and grammatical sentences, they suffer from generality and hallucination issues. We propose a personalized, aspect-controlled model called Multi-Aspect Prompt LEarner (MAPLE), which integrates aspect category as another input dimension to facilitate the memorization of fine-grained aspect terms. Experiments on two real-world review datasets in the restaurant domain show that MAPLE outperforms the baseline review-generation models in terms of text and feature diversity while maintaining excellent coherence and factual relevance. We further treat MAPLE as a retriever component in the retriever-reader framework and employ a Large Language Model (LLM) as the reader, showing that MAPLE's explanation combined with the LLM's comprehension ability leads to enriched and personalized explanations. We will release the code and data in this http upon acceptance.

CFEVER: A Chinese Fact Extraction and VERification Dataset

Feb 20, 2024

Abstract:We present CFEVER, a Chinese dataset designed for Fact Extraction and VERification. CFEVER comprises 30,012 manually created claims based on content in Chinese Wikipedia. Each claim in CFEVER is labeled as "Supports", "Refutes", or "Not Enough Info" to depict its degree of factualness. Similar to the FEVER dataset, claims in the "Supports" and "Refutes" categories are also annotated with corresponding evidence sentences sourced from single or multiple pages in Chinese Wikipedia. Our labeled dataset holds a Fleiss' kappa value of 0.7934 for five-way inter-annotator agreement. In addition, through the experiments with the state-of-the-art approaches developed on the FEVER dataset and a simple baseline for CFEVER, we demonstrate that our dataset is a new rigorous benchmark for factual extraction and verification, which can be further used for developing automated systems to alleviate human fact-checking efforts. CFEVER is available at https://ikmlab.github.io/CFEVER.
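For readers unfamiliar with the agreement statistic reported above, the following is a minimal sketch of how Fleiss' kappa is computed from a table of per-item label counts. It is not the CFEVER authors' code; the function name `fleiss_kappa` and the input layout are assumptions made for illustration, and it should only be run on toy data like that in the usage note.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a table where counts[i][j] is the number of
    raters who assigned item i to category j.

    Every item must be rated by the same number of raters (at least 2).
    """
    n_items = len(counts)
    if n_items == 0:
        raise ValueError("need at least one item")
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two raters per item")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must have the same number of ratings")

    n_cats = len(counts[0])
    total = n_items * n_raters

    # Share of all ratings that fell into each category.
    p_j = [sum(row[j] for row in counts) / total for j in range(n_cats)]

    # Per-item agreement: fraction of rater pairs that agree on the item.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]

    p_bar = sum(p_i) / n_items          # mean observed agreement
    p_e = sum(p * p for p in p_j)       # agreement expected by chance
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_bar - p_e) / (1.0 - p_e)
```

As a usage example with toy data, three claims each labeled by five annotators who agree completely, `fleiss_kappa([[5, 0, 0], [0, 5, 0], [0, 0, 5]])`, yields a kappa of 1.0. Partial disagreement lowers the value toward 0.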

ELECTRA is a Zero-Shot Learner, Too

Jul 20, 2022

Abstract:Recently, for few-shot or even zero-shot learning, the new paradigm "pre-train, prompt, and predict" has achieved remarkable results compared with the "pre-train, fine-tune" paradigm. After the success of prompt-based GPT-3, a series of masked language model (MLM)-based (e.g., BERT, RoBERTa) prompt learning methods became popular and widely used. However, another efficient pre-trained discriminative model, ELECTRA, has probably been neglected. In this paper, we attempt to accomplish several NLP tasks in the zero-shot scenario using our novel replaced token detection (RTD)-based prompt learning method. Experimental results show that the ELECTRA model based on RTD-prompt learning achieves surprisingly strong, state-of-the-art zero-shot performance. Numerically, compared to MLM-RoBERTa-large and MLM-BERT-large, our RTD-ELECTRA-large has an average of about 8.4% and 13.7% improvement on all 15 tasks. Especially on the SST-2 task, our RTD-ELECTRA-large achieves an astonishing 90.1% accuracy without any training data. Overall, compared to the pre-trained masked language models, the pre-trained replaced token detection model performs better in zero-shot learning. The source code is available at: https://github.com/nishiwen1214/RTD-ELECTRA.

True or False: Does the Deep Learning Model Learn to Detect Rumors?

Dec 01, 2021

Abstract:It is difficult for humans to distinguish true rumors from false ones, but current deep learning models can surpass humans and achieve excellent accuracy on many rumor datasets. In this paper, we investigate whether deep learning models that seem to perform well actually learn to detect rumors. We evaluate models on their generalization ability to out-of-domain examples by fine-tuning BERT-based models on five real-world datasets and evaluating against all test sets. The experimental results indicate that the generalization ability of the models on other unseen datasets is unsatisfactory; even common-sense rumors cannot be detected. Moreover, we found through experiments that models take shortcuts and learn absurd knowledge when the rumor datasets have serious data pitfalls. This means that simple modifications to the rumor text based on specific rules will lead to inconsistent model predictions. To more realistically evaluate rumor detection models, we proposed a new evaluation method called paired test (PairT), which requires models to correctly predict a pair of test samples at the same time. Furthermore, we make recommendations on how to better create rumor datasets and evaluate rumor detection models at the end of this paper.
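The idea behind a paired test, as the abstract describes it, is that a model gets credit only when both samples in a pair are predicted correctly. The sketch below illustrates that scoring rule next to ordinary per-sample accuracy. It is an illustration under assumptions, not the authors' PairT implementation: the function names, the `((pred, gold), (pred, gold))` input layout, and how pairs are constructed are all hypothetical.

```python
from typing import Hashable, Sequence, Tuple

# One scored sample: (predicted label, gold label).
Scored = Tuple[Hashable, Hashable]


def sample_accuracy(pairs: Sequence[Tuple[Scored, Scored]]) -> float:
    """Ordinary accuracy: every sample counts on its own."""
    if not pairs:
        raise ValueError("need at least one pair")
    hits = sum((p == g) for pair in pairs for (p, g) in pair)
    return hits / (2 * len(pairs))


def paired_accuracy(pairs: Sequence[Tuple[Scored, Scored]]) -> float:
    """Paired accuracy: a pair counts only if BOTH samples are correct."""
    if not pairs:
        raise ValueError("need at least one pair")
    hits = sum(
        1 for (pa, ga), (pb, gb) in pairs if pa == ga and pb == gb
    )
    return hits / len(pairs)


# Toy data (made up for illustration): 1 = rumor, 0 = non-rumor.
toy_pairs = [
    ((1, 1), (0, 0)),  # both correct
    ((1, 1), (1, 0)),  # second sample wrong
    ((0, 1), (0, 0)),  # first sample wrong
    ((0, 0), (1, 1)),  # both correct
]
```

On `toy_pairs`, per-sample accuracy is 0.75 while paired accuracy is 0.5, which shows how a model that always answers one side of a pair correctly can look better under per-sample scoring than under the paired rule.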

DropAttack: A Masked Weight Adversarial Training Method to Improve Generalization of Neural Networks

Aug 29, 2021

Abstract:Adversarial training has been proven to be a powerful regularization method to improve the generalization of models. However, current adversarial training methods only attack the original input sample or the embedding vectors, and their attacks lack coverage and diversity. To further enhance the breadth and depth of attack, we propose a novel masked weight adversarial training method called DropAttack, which enhances the generalization of a model by adding intentionally worst-case adversarial perturbations to both the input and hidden layers in different dimensions and minimizing the adversarial risks generated by each layer. DropAttack is a general technique and can be adopted in a wide variety of neural networks with different architectures. To validate the effectiveness of the proposed method, we used five public datasets in the fields of natural language processing (NLP) and computer vision (CV) for experimental evaluation. We compare the proposed method with other adversarial training methods and regularization methods, and our method achieves state-of-the-art results on all datasets. In addition, DropAttack can achieve the same performance when it uses only half of the training data compared to other standard training methods. Theoretical analysis reveals that DropAttack can perform gradient regularization at random on some of the input and weight parameters of the model. Further visualization experiments show that DropAttack can push the minimum risk of the model to a lower and flatter loss landscape. Our source code is publicly available at https://github.com/nishiwen1214/DropAttack.

Rumor Detection on Twitter Using Multiloss Hierarchical BiLSTM with an Attenuation Factor

Oct 31, 2020

Abstract:Social media platforms such as Twitter have become a breeding ground for unverified information or rumors. These rumors can threaten people's health, endanger the economy, and affect the stability of a country. Many researchers have developed models to classify rumors using traditional machine learning or vanilla deep learning models. However, previous studies on rumor detection have achieved low precision and are time consuming. Inspired by the hierarchical model and multitask learning, a multiloss hierarchical BiLSTM model with an attenuation factor is proposed in this paper. The model is divided into two BiLSTM modules: post level and event level. By means of this hierarchical structure, the model can extract deep information from limited quantities of text. Each module has a loss function that helps to learn bilateral features and reduce the training time. An attenuation factor is added at the post level to increase the accuracy. The results on two rumor datasets demonstrate that our model achieves better performance than that of state-of-the-art machine learning and vanilla deep learning models.

Probing Neural Network Comprehension of Natural Language Arguments

Jul 17, 2019

Abstract:We are surprised to find that BERT's peak performance of 77% on the Argument Reasoning Comprehension Task reaches just three points below the average untrained human baseline. However, we show that this result is entirely accounted for by exploitation of spurious statistical cues in the dataset. We analyze the nature of these cues and demonstrate that a range of models all exploit them. This analysis informs the construction of an adversarial dataset on which all models achieve random accuracy. Our adversarial dataset provides a more robust assessment of argument comprehension and should be adopted as the standard in future work.

Fake News Detection as Natural Language Inference

Jul 17, 2019

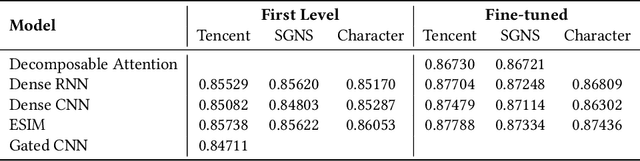

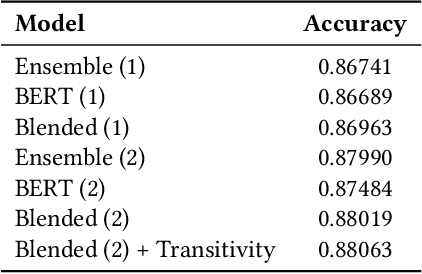

Abstract:This report describes the entry by the Intelligent Knowledge Management (IKM) Lab in the WSDM 2019 Fake News Classification challenge. We treat the task as natural language inference (NLI). We individually train a number of the strongest NLI models as well as BERT. We ensemble these results and retrain with noisy labels in two stages. We analyze transitivity relations in the train and test sets and determine a set of test cases that can be reliably classified on this basis. The remainder of test cases are classified by our ensemble. Our entry achieves test set accuracy of 88.063% for 3rd place in the competition.

SOC: hunting the underground inside story of the ethereum Social-network Opinion and Comment

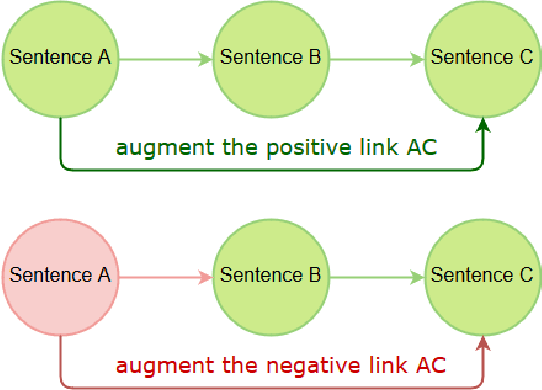

Nov 27, 2018

Abstract:Cryptocurrency is attracting more and more attention because of blockchain technology. Ethereum is gaining significant popularity in the blockchain community, mainly because it is designed in a way that enables developers to write smart contracts and decentralized applications (Dapps). There are many kinds of cryptocurrency information on social networks. The risks and fraud problems behind it have pushed many countries, including the United States, South Korea, and China, to issue warnings and set up corresponding regulations. However, the security of Ethereum smart contracts has not gained much attention. Using a deep learning approach, we propose a method of sentiment analysis for Ethereum's community comments. In this research, we first collected users' cryptocurrency comments from the social network and then fed them to our LSTM + CNN model for training. Then we made predictions through sentiment analysis. With our research results, we have demonstrated that both the precision and the recall of sentiment analysis can achieve 0.80+. More importantly, we deploy our sentiment analysis on RatingToken and Coin Master (mobile application of Cheetah Mobile Blockchain Security Center). We can effectively provide detailed information to help mitigate the risks of fake information and fraud.
