Huihui Pan

Minimalist and High-Performance Semantic Segmentation with Plain Vision Transformers

Oct 19, 2023

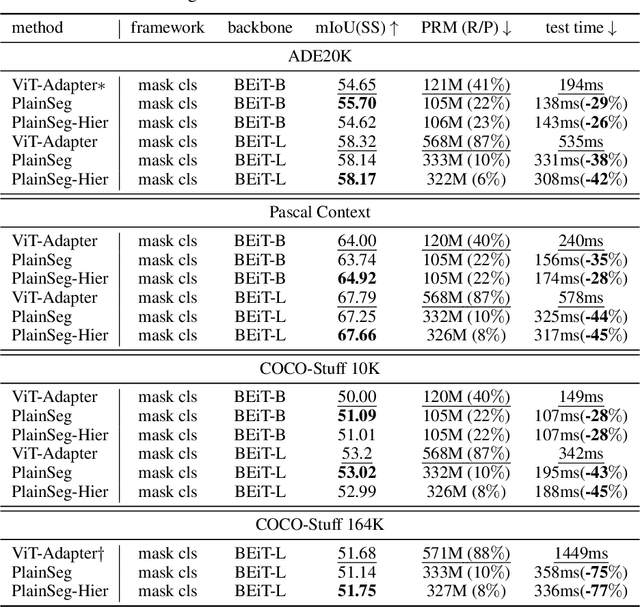

Abstract: In the wake of Masked Image Modeling (MIM), a diverse range of plain, non-hierarchical Vision Transformer (ViT) models have been pre-trained with extensive datasets, offering new paradigms and significant potential for semantic segmentation. Current state-of-the-art systems incorporate numerous inductive biases and employ cumbersome decoders. Building upon the original motivations of plain ViTs, which are simplicity and generality, we explore high-performance "minimalist" systems to this end. Our primary purpose is to provide simple and efficient baselines for practical semantic segmentation with plain ViTs. Specifically, we first explore the feasibility and methodology for achieving high-performance semantic segmentation using the last feature map. As a result, we introduce PlainSeg, a model comprising only three 3$\times$3 convolutions in addition to the transformer layers (either encoder or decoder). In this process, we offer insights into two underlying principles: (i) high-resolution features are crucial to high performance even when simple up-sampling techniques are employed, and (ii) the slim transformer decoder requires a much larger learning rate than the wide transformer decoder. On this basis, we further present PlainSeg-Hier, which allows for the utilization of hierarchical features. Extensive experiments on four popular benchmarks demonstrate the high performance and efficiency of our methods. They can also serve as powerful tools for assessing the transfer ability of base models in semantic segmentation. Code is available at https://github.com/ydhongHIT/PlainSeg.

Representation Separation for Semantic Segmentation with Vision Transformers

Dec 28, 2022

Abstract: Vision transformers (ViTs) encoding an image as a sequence of patches bring new paradigms for semantic segmentation. We present an efficient framework of representation separation at the local-patch level and the global-region level for semantic segmentation with ViTs. It targets the peculiar over-smoothness of ViTs in semantic segmentation, and therefore differs from current popular paradigms of context modeling and from most existing related methods, which reinforce the advantage of attention. We first deliver the decoupled two-pathway network, in which another pathway enhances and passes down local-patch discrepancy complementary to the global representations of transformers. We then propose the spatially adaptive separation module to obtain more separate deep representations, and the discriminative cross-attention, which yields more discriminative region representations through novel auxiliary supervisions. The proposed methods achieve some impressive results: 1) incorporated with large-scale plain ViTs, our methods achieve new state-of-the-art performances on five widely used benchmarks; 2) using masked pre-trained plain ViTs, we achieve 68.9% mIoU on Pascal Context, setting a new record; 3) pyramid ViTs integrated with the decoupled two-pathway network even surpass the well-designed high-resolution ViTs on Cityscapes; 4) the improved representations by our framework have favorable transferability in images with natural corruptions. The code will be released publicly.

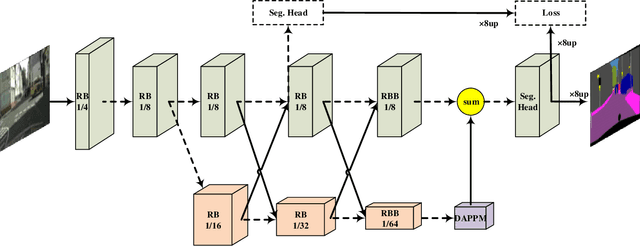

Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes

Jan 15, 2021

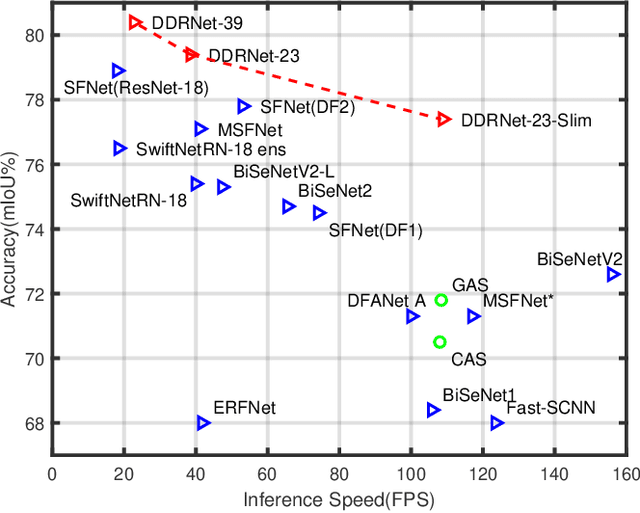

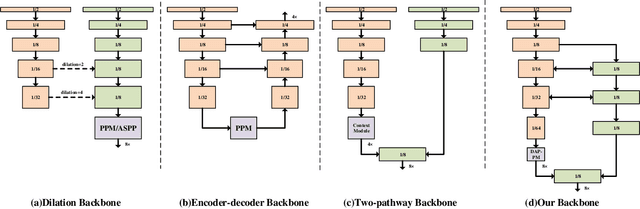

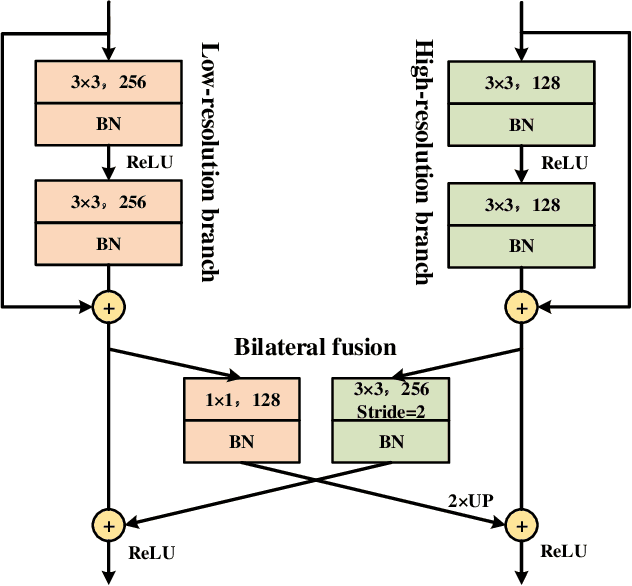

Abstract: Semantic segmentation is a critical technology for autonomous vehicles to understand surrounding scenes. For practical autonomous vehicles, it is undesirable to spend a considerable amount of inference time to achieve high-accuracy segmentation results. Using light-weight architectures (encoder-decoder or two-pathway) or reasoning on low-resolution images, recent methods realize very fast scene parsing that even runs at more than 100 FPS on a single 1080Ti GPU. However, there are still evident gaps in performance between these real-time methods and models based on dilation backbones. To tackle this problem, we propose novel deep dual-resolution networks (DDRNets) for real-time semantic segmentation of road scenes. In addition, we design a new contextual information extractor named Deep Aggregation Pyramid Pooling Module (DAPPM) to enlarge effective receptive fields and fuse multi-scale context. Our method achieves a new state-of-the-art trade-off between accuracy and speed on both the Cityscapes and CamVid datasets. Specifically, on a single 2080Ti GPU, DDRNet-23-slim yields 77.4% mIoU at 109 FPS on the Cityscapes test set and 74.4% mIoU at 230 FPS on the CamVid test set. Without utilizing attention mechanisms, pre-training on a larger semantic segmentation dataset, or inference acceleration, DDRNet-39 attains 80.4% test mIoU at 23 FPS on Cityscapes. With widely used test augmentation, our method is still superior to most state-of-the-art models while requiring much less computation. Code and trained models will be made publicly available.

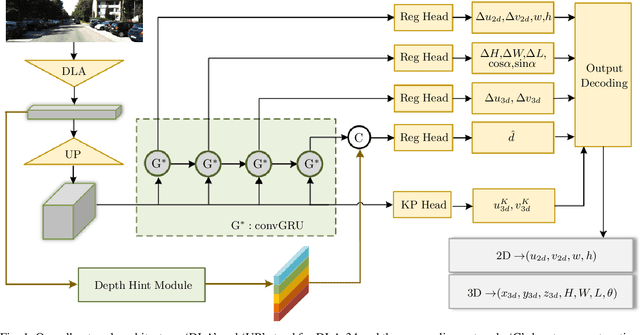

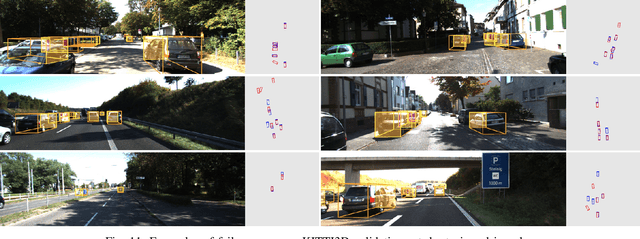

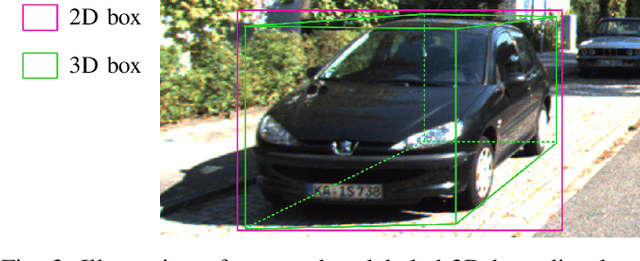

Monocular 3D Object Detection with Sequential Feature Association and Depth Hint Augmentation

Dec 02, 2020

Abstract: Monocular 3D object detection is a promising research topic for the intelligent perception systems of autonomous driving. In this work, a single-stage keypoint-based network, named FADNet, is presented to address the task of monocular 3D object detection. In contrast to previous keypoint-based methods, which adopt identical layouts for output branches, we propose to divide the output modalities into different groups according to their estimation difficulty, whereby different groups are treated differently by sequential feature association. Another contribution of this work is the strategy of depth hint augmentation. To provide characterized depth patterns as hints for depth estimation, a dedicated depth hint module is designed to generate row-wise features, named depth hints, which are explicitly supervised in a bin-wise manner. In the training stage, the regression outputs are uniformly encoded to enable loss disentanglement. The 2D loss term is further adapted to be depth-aware to improve the detection accuracy of small objects. The contributions of this work are validated by experiments and an ablation study on the KITTI benchmark. Without utilizing depth priors, post optimization, or other refinement modules, our network performs competitively against state-of-the-art methods while maintaining a decent running speed.

A CRF-based Framework for Tracklet Inactivation in Online Multi-Object Tracking

Nov 30, 2020

Abstract: Online multi-object tracking (MOT) is an active research topic in the domain of computer vision. In this paper, a CRF-based framework is put forward to tackle the tracklet inactivation issues in online MOT problems. We apply the proposed framework to one of the state-of-the-art online MOT trackers, Tracktor++. The baseline algorithm for online MOT has the drawback of a simplistic tracklet inactivation strategy, which relies merely on thresholding the classification scores of tracking hypotheses with a fixed threshold. To overcome this drawback, a discrete conditional random field (CRF) is developed to exploit the intra-frame relationship between tracking hypotheses. Separate sets of feature functions are designed for the unary and binary terms in the CRF so as to cope with various challenges in practical situations. The hypothesis filtering and dummy nodes techniques are employed to handle the problem of varying CRF nodes in the MOT context. Inference in the CRF is performed with the loopy belief propagation algorithm, and the parameters of the CRF are determined by maximum likelihood estimation. Experimental results demonstrate that the developed tracker with our CRF-based framework outperforms the baseline on the MOT16 and MOT17 datasets. The extensibility of the proposed method is further validated by an extensive experiment.
