Huihan Yao

Refining Neural Networks with Compositional Explanations

Mar 18, 2021

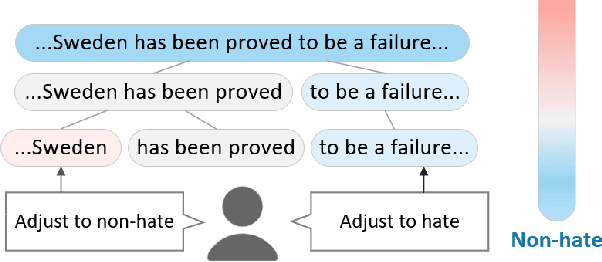

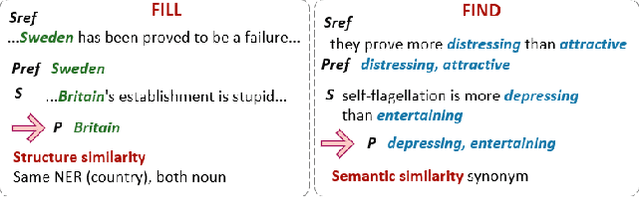

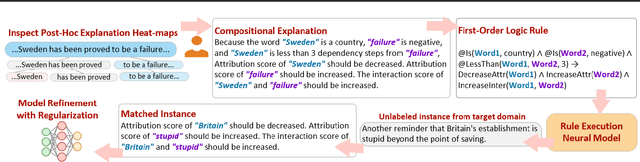

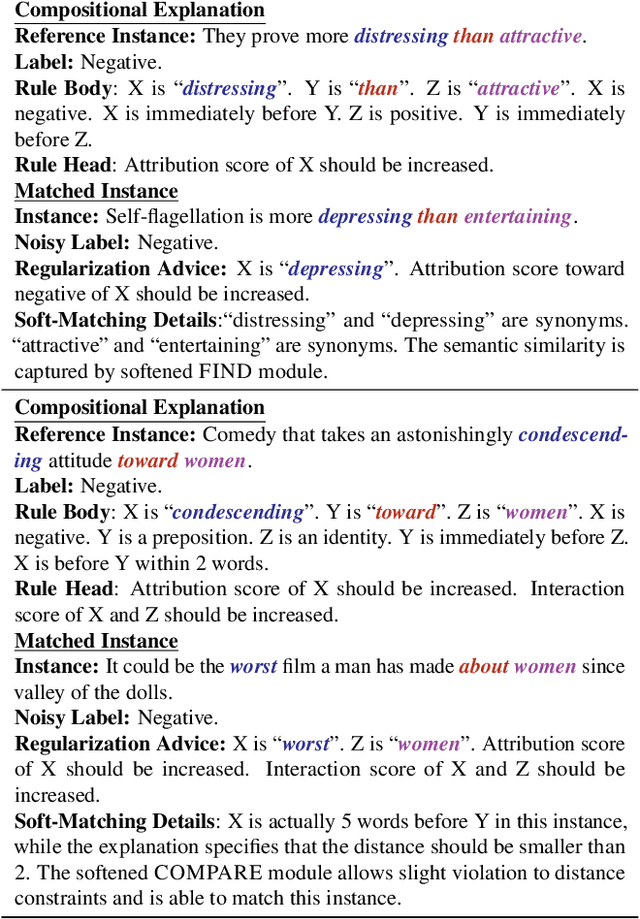

Abstract:Neural networks are prone to learning spurious correlations from biased datasets, and are thus vulnerable when making inferences in a new target domain. Prior work reveals spurious patterns via post-hoc model explanations which compute the importance of input features, and further eliminates the unintended model behaviors by regularizing importance scores with human knowledge. However, such regularization technique lacks flexibility and coverage, since only importance scores towards a pre-defined list of features are adjusted, while more complex human knowledge such as feature interaction and pattern generalization can hardly be incorporated. In this work, we propose to refine a learned model by collecting human-provided compositional explanations on the models' failure cases. By describing generalizable rules about spurious patterns in the explanation, more training examples can be matched and regularized, tackling the challenge of regularization coverage. We additionally introduce a regularization term for feature interaction to support more complex human rationale in refining the model. We demonstrate the effectiveness of the proposed approach on two text classification tasks by showing improved performance in target domain after refinement.

An Empirical Study on Deployment Faults of Deep Learning Based Mobile Applications

Feb 10, 2021

Abstract:Deep Learning (DL) is finding its way into a growing number of mobile software applications. These software applications, named as DL based mobile applications (abbreviated as mobile DL apps) integrate DL models trained using large-scale data with DL programs. A DL program encodes the structure of a desirable DL model and the process by which the model is trained using training data. Due to the increasing dependency of current mobile apps on DL, software engineering (SE) for mobile DL apps has become important. However, existing efforts in SE research community mainly focus on the development of DL models and extensively analyze faults in DL programs. In contrast, faults related to the deployment of DL models on mobile devices (named as deployment faults of mobile DL apps) have not been well studied. Since mobile DL apps have been used by billions of end users daily for various purposes including for safety-critical scenarios, characterizing their deployment faults is of enormous importance. To fill the knowledge gap, this paper presents the first comprehensive study on the deployment faults of mobile DL apps. We identify 304 real deployment faults from Stack Overflow and GitHub, two commonly used data sources for studying software faults. Based on the identified faults, we construct a fine-granularity taxonomy consisting of 23 categories regarding to fault symptoms and distill common fix strategies for different fault types. Furthermore, we suggest actionable implications and research avenues that could further facilitate the deployment of DL models on mobile devices.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge