Huatian Wang

Profiling Visual Dynamic Complexity Using a Bio-Robotic Approach

May 20, 2021

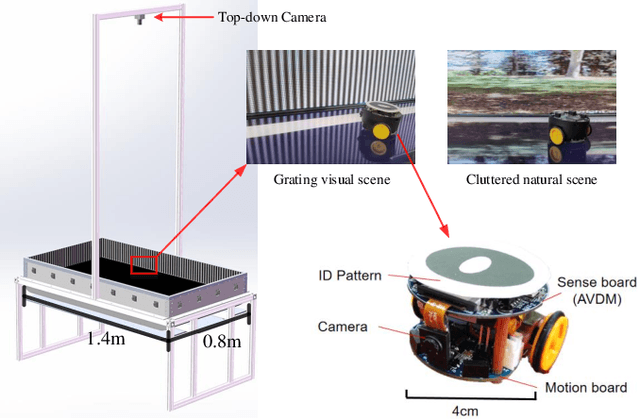

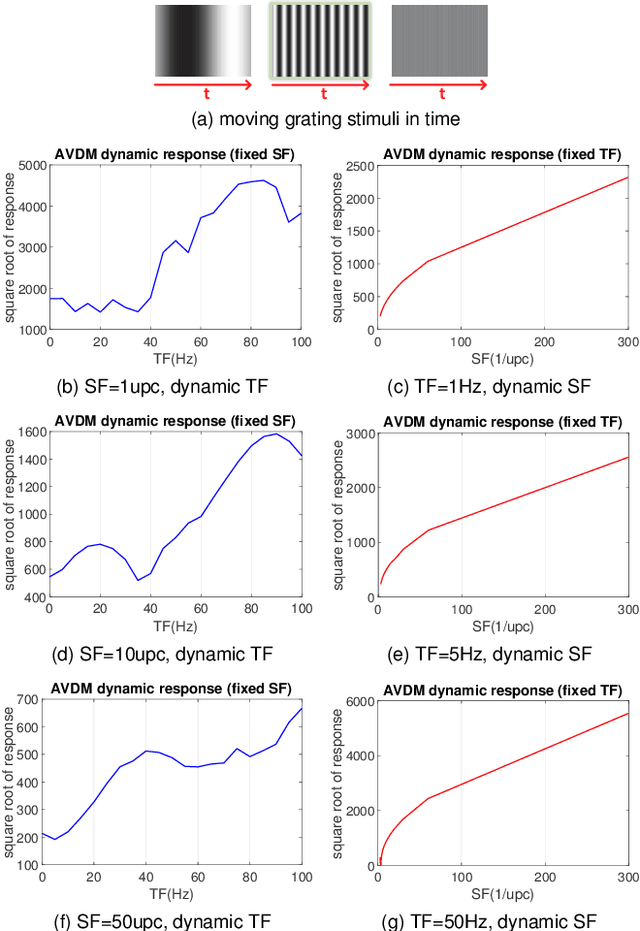

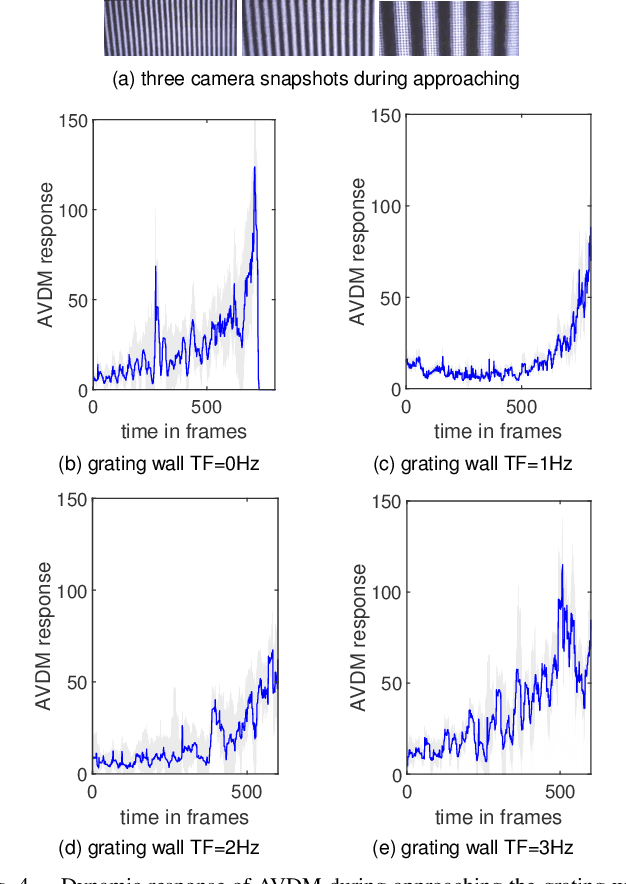

Abstract:Visual dynamic complexity is a ubiquitous, hidden attribute of the visual world that every dynamic vision system is faced with. However, it is implicit and intractable which has never been quantitatively described due to the difficulty in defending temporal features correlated to spatial image complexity. To fill this vacancy, we propose a novel bio-robotic approach to profile visual dynamic complexity which can be used as a new metric. Here we apply a state-of-the-art brain-inspired motion detection neural network model to explicitly profile such complexity associated with spatial-temporal frequency (SF-TF) of visual scene. This model is for the first time implemented in an autonomous micro-mobile robot which navigates freely in an arena with visual walls displaying moving sine-wave grating or cluttered natural scene. The neural dynamic response can make reasonable prediction on surrounding complexity since it can be mapped monotonically to varying SF-TF of visual scene. The experiments show this approach is flexible to different visual scenes for profiling the dynamic complexity. We also use this metric as a predictor to investigate the boundary of another collision detection visual system performing in changing environment with increasing dynamic complexity. This research demonstrates a new paradigm of using biologically plausible visual processing scheme to estimate dynamic complexity of visual scene from both spatial and temporal perspectives, which could be beneficial to predicting input complexity when evaluating dynamic vision systems.

Attention and Prediction Guided Motion Detection for Low-Contrast Small Moving Targets

May 08, 2021

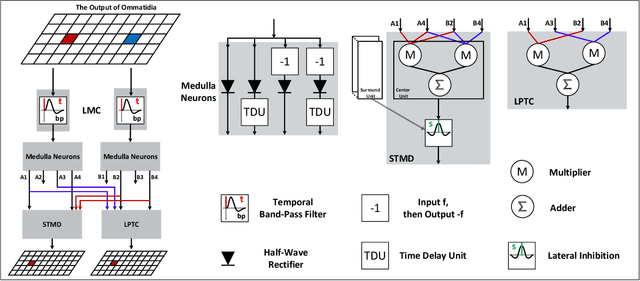

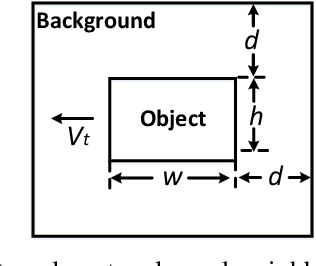

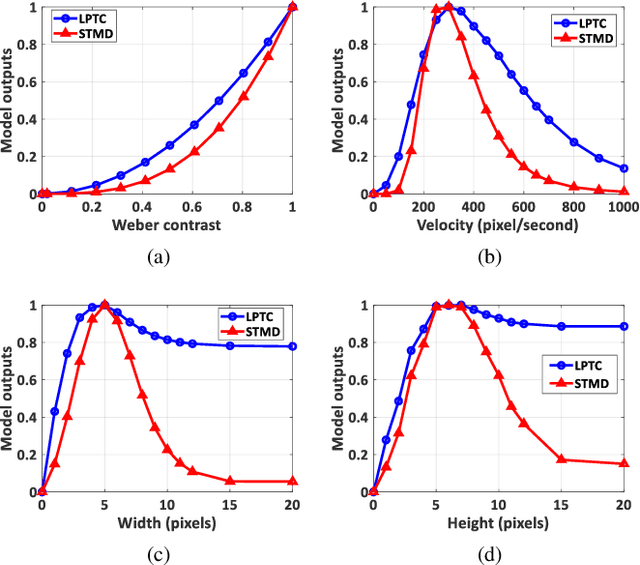

Abstract:Small target motion detection within complex natural environments is an extremely challenging task for autonomous robots. Surprisingly, the visual systems of insects have evolved to be highly efficient in detecting mates and tracking prey, even though targets are as small as a few pixels in their visual fields. The excellent sensitivity to small target motion relies on a class of specialized neurons called small target motion detectors (STMDs). However, existing STMD-based models are heavily dependent on visual contrast and perform poorly in complex natural environments where small targets generally exhibit extremely low contrast against neighbouring backgrounds. In this paper, we develop an attention and prediction guided visual system to overcome this limitation. The developed visual system comprises three main subsystems, namely, an attention module, an STMD-based neural network, and a prediction module. The attention module searches for potential small targets in the predicted areas of the input image and enhances their contrast against complex background. The STMD-based neural network receives the contrast-enhanced image and discriminates small moving targets from background false positives. The prediction module foresees future positions of the detected targets and generates a prediction map for the attention module. The three subsystems are connected in a recurrent architecture allowing information to be processed sequentially to activate specific areas for small target detection. Extensive experiments on synthetic and real-world datasets demonstrate the effectiveness and superiority of the proposed visual system for detecting small, low-contrast moving targets against complex natural environments.

Does Time-Delay Feedback Matter to Small Target Motion Detection Against Complex Dynamic Environments?

Dec 29, 2019

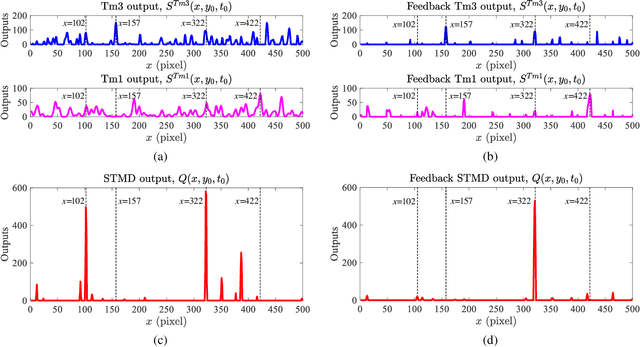

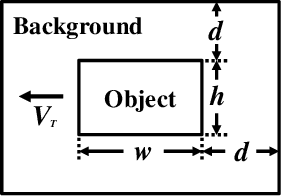

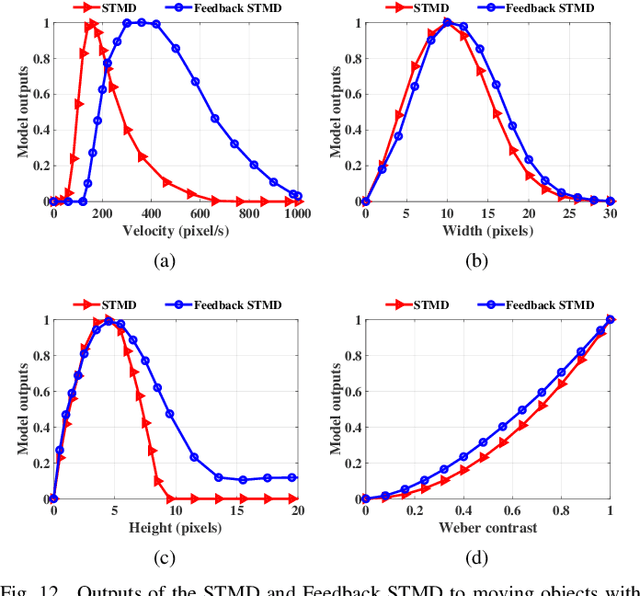

Abstract:Discriminating small moving objects in complex visual environments is a significant challenge for autonomous micro robots that are generally limited in computational power. Relying on well-evolved visual systems, flying insects can effortlessly detect mates and track prey in rapid pursuits, despite target sizes as small as a few pixels in the visual field. Such exquisite sensitivity for small target motion is known to be supported by a class of specialized neurons named as small target motion detectors (STMDs). The existing STMD-based models normally consist of four sequentially arranged neural layers interconnected through feedforward loops to extract motion information about small targets from raw visual inputs. However, feedback loop, another important regulatory circuit for motion perception, has not been investigated in the STMD pathway and its functional roles for small target motion detection are not clear. In this paper, we assume the existence of the feedback and propose a STMD-based visual system with feedback connection (Feedback STMD), where the system output is temporally delayed, then fed back to lower layers to mediate neural responses. We compare the properties of the visual system with and without the time-delay feedback loop, and discuss its effect on small target motion detection. The experimental results suggest that the Feedback STMD prefers fast-moving small targets, while significantly suppresses those background features moving at lower velocities.

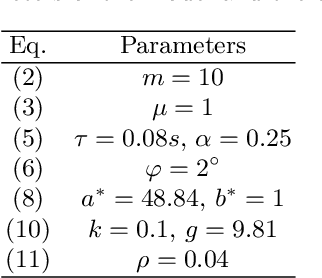

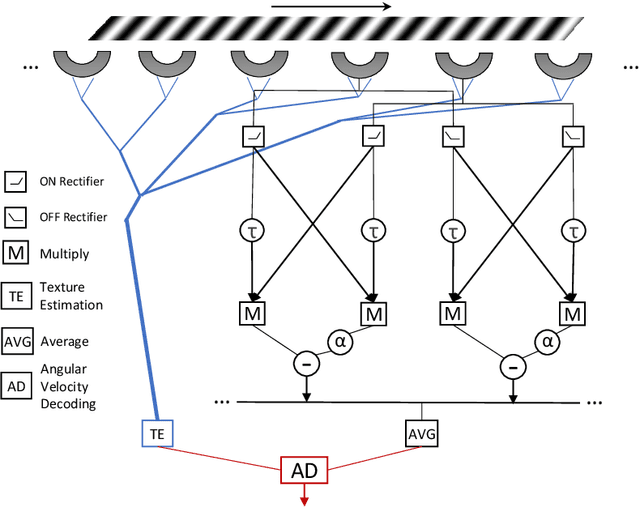

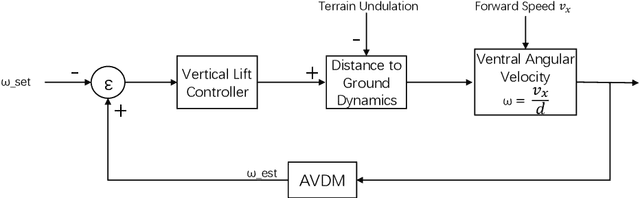

Constant Angular Velocity Regulation for Visually Guided Terrain Following

Apr 04, 2019

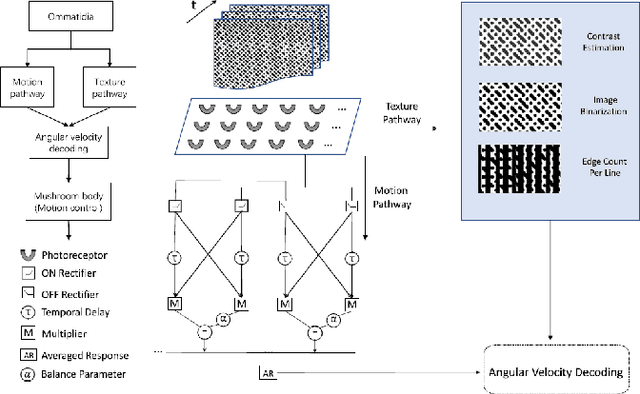

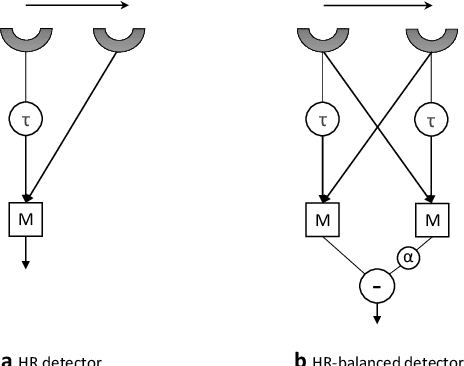

Abstract:Insects use visual cues to control their flight behaviours. By estimating the angular velocity of the visual stimuli and regulating it to a constant value, honeybees can perform a terrain following task which keeps the certain height above the undulated ground. For mimicking this behaviour in a bio-plausible computation structure, this paper presents a new angular velocity decoding model based on the honeybee's behavioural experiments. The model consists of three parts, the texture estimation layer for spatial information extraction, the motion detection layer for temporal information extraction and the decoding layer combining information from pervious layers to estimate the angular velocity. Compared to previous methods on this field, the proposed model produces responses largely independent of the spatial frequency and contrast in grating experiments. The angular velocity based control scheme is proposed to implement the model into a bee simulated by the game engine Unity. The perfect terrain following above patterned ground and successfully flying over irregular textured terrain show its potential for micro unmanned aerial vehicles' terrain following.

A Visual Neural Network for Robust Collision Perception in Vehicle Driving Scenarios

Apr 03, 2019

Abstract:This research addresses the challenging problem of visual collision detection in very complex and dynamic real physical scenes, specifically, the vehicle driving scenarios. This research takes inspiration from a large-field looming sensitive neuron, i.e., the lobula giant movement detector (LGMD) in the locust's visual pathways, which represents high spike frequency to rapid approaching objects. Building upon our previous models, in this paper we propose a novel inhibition mechanism that is capable of adapting to different levels of background complexity. This adaptive mechanism works effectively to mediate the local inhibition strength and tune the temporal latency of local excitation reaching the LGMD neuron. As a result, the proposed model is effective to extract colliding cues from complex dynamic visual scenes. We tested the proposed method using a range of stimuli including simulated movements in grating backgrounds and shifting of a natural panoramic scene, as well as vehicle crash video sequences. The experimental results demonstrate the proposed method is feasible for fast collision perception in real-world situations with potential applications in future autonomous vehicles.

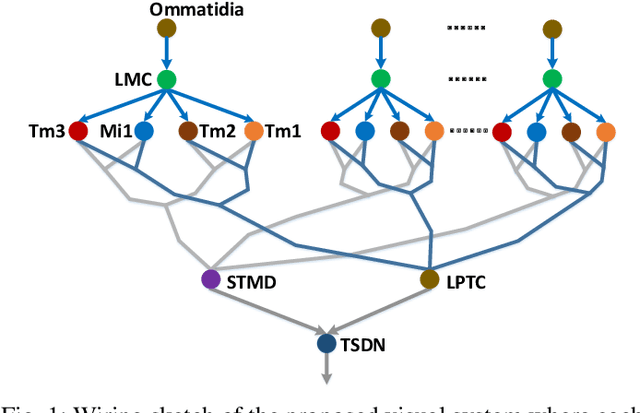

Visual Cue Integration for Small Target Motion Detection in Natural Cluttered Backgrounds

Mar 18, 2019

Abstract:The robust detection of small targets against cluttered background is important for future artificial visual systems in searching and tracking applications. The insects' visual systems have demonstrated excellent ability to avoid predators, find prey or identify conspecifics - which always appear as small dim speckles in the visual field. Build a computational model of the insects' visual pathways could provide effective solutions to detect small moving targets. Although a few visual system models have been proposed, they only make use of small-field visual features for motion detection and their detection results often contain a number of false positives. To address this issue, we develop a new visual system model for small target motion detection against cluttered moving backgrounds. Compared to the existing models, the small-field and wide-field visual features are separately extracted by two motion-sensitive neurons to detect small target motion and background motion. These two types of motion information are further integrated to filter out false positives. Extensive experiments showed that the proposed model can outperform the existing models in terms of detection rates.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge