Huanyu Jiang

PlantStereo: A Stereo Matching Benchmark for Plant Surface Dense Reconstruction

Nov 30, 2021

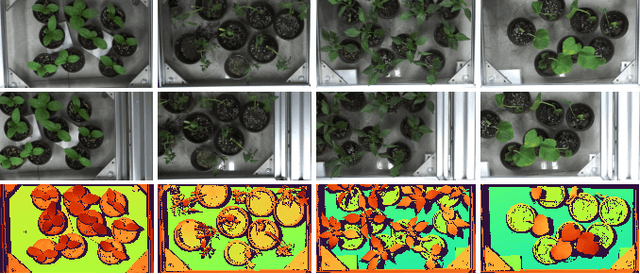

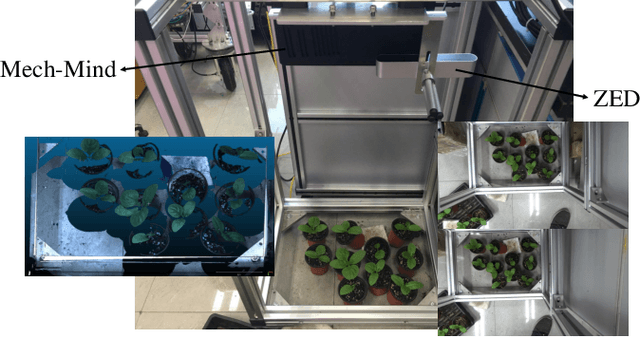

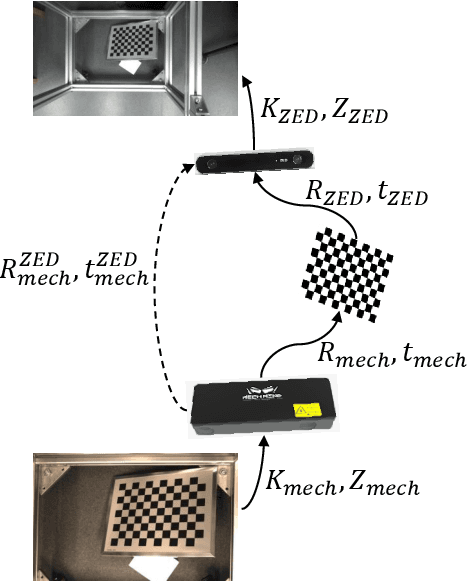

Abstract: Stereo matching is an important task in computer vision that has drawn tremendous research attention for decades. However, in terms of disparity accuracy, density, and data size, public stereo datasets struggle to meet the requirements of models. In this paper, we aim to close this gap between datasets and models and propose a large-scale stereo dataset with high-accuracy disparity ground truth, named PlantStereo. We used a semi-automatic approach to construct the dataset: after camera calibration and image registration, high-accuracy disparity images are obtained from the depth images. In total, PlantStereo contains 812 image pairs covering a diverse set of plants: spinach, tomato, pepper, and pumpkin. We first evaluated the PlantStereo dataset on four different stereo matching methods. Extensive experiments on different models and plants show that, compared with integer-accuracy ground truth, the high-accuracy disparity images provided by PlantStereo remarkably improve the training of deep learning models. This paper provides a feasible and reliable method for dense reconstruction of plant surfaces. The PlantStereo dataset and related code are available at: https://www.github.com/wangqingyu985/PlantStereo
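The abstract does not spell out how disparity is derived from depth. For a calibrated, rectified stereo pair, the standard relation is d = f · B / Z (focal length in pixels, baseline, depth), and the result is generally non-integer. The snippet below is a minimal sketch of that relation only, not the PlantStereo pipeline; the function name, the focal length, the baseline, and the depth values are all hypothetical.

```python
def depth_to_disparity(depth_mm, focal_px, baseline_mm):
    """Convert a depth value to disparity (in pixels) for a rectified stereo pair.

    Uses the standard pinhole relation d = f * B / Z.
    Non-positive depth is treated as invalid and mapped to 0.0.
    """
    if depth_mm <= 0:
        return 0.0
    return focal_px * baseline_mm / depth_mm


# Hypothetical calibration values, chosen only for illustration.
focal_px = 1000.0     # focal length in pixels (assumed)
baseline_mm = 50.0    # distance between the two cameras in mm (assumed)

# One row of a hypothetical registered depth image, in mm (0.0 = missing).
depth_row = [500.0, 800.0, 0.0]

disparity_row = [depth_to_disparity(z, focal_px, baseline_mm) for z in depth_row]
print(disparity_row)
```

With these assumed values, a depth of 800 mm maps to a disparity of 62.5 px, which rounding to integer accuracy would discard; this is the kind of sub-pixel information the abstract refers to as high-accuracy ground truth.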

Motion Planning for Heterogeneous Unmanned Systems under Partial Observation from UAV

Jul 28, 2020

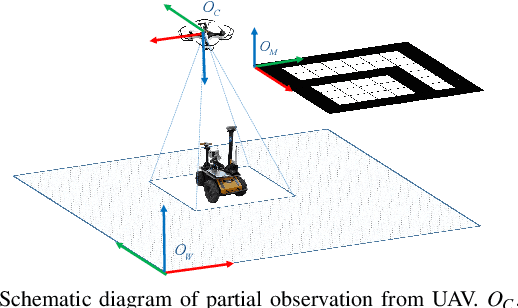

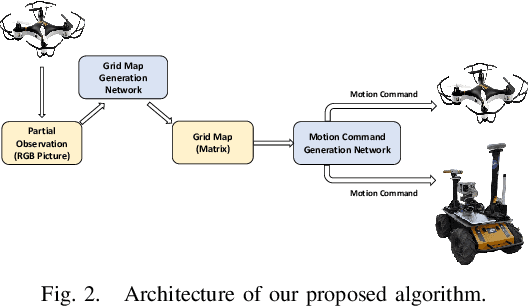

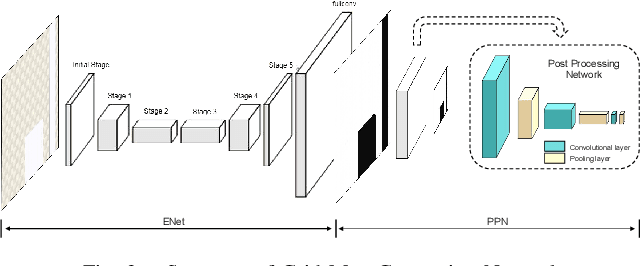

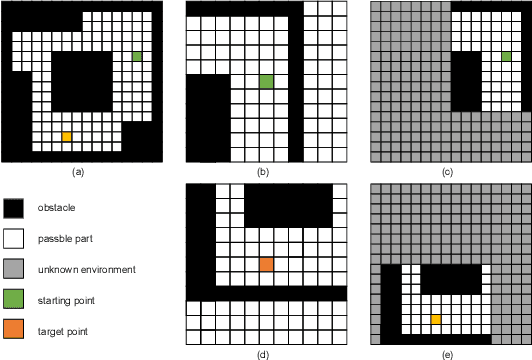

Abstract: For heterogeneous unmanned systems composed of unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs), using UAVs as eyes to assist UGVs in motion planning is a promising research direction because of the UAVs' wide field of view. However, because of the UAVs' flight altitude limitations, it may be impossible to observe the global map, and motion planning in the local map is a Partially Observable Markov Decision Process (POMDP) problem. This paper proposes a motion planning algorithm for heterogeneous unmanned systems under partial observation from a UAV, without reconstruction of global maps. The algorithm consists of two parts, designed for perception and decision-making, respectively. For the perception part, we propose the Grid Map Generation Network (GMGN), which perceives scenes from the UAV's perspective and classifies pathways and obstacles. For the decision-making part, we propose the Motion Command Generation Network (MCGN). Thanks to an added memory mechanism, MCGN has planning and reasoning abilities under partial observation from UAVs. We evaluate the proposed algorithm by comparing it with baseline algorithms. The results show that our method effectively plans the motion of heterogeneous unmanned systems and achieves a relatively high success rate.
