Hua Qi

ArchRepair: Block-Level Architecture-Oriented Repairing for Deep Neural Networks

Dec 11, 2021

Abstract: Over the past few years, deep neural networks (DNNs) have achieved tremendous success and have been applied in many application domains. However, in practical industrial deployment, DNNs are found to be error-prone for various reasons, such as overfitting and a lack of robustness to real-world corruptions. To address these challenges, many recent attempts have been made to repair DNNs for version updates under practical operational contexts by updating weights (i.e., network parameters) through retraining, fine-tuning, or direct weight fixing at a neural level. In this work, as a first attempt, we propose to repair DNNs by jointly optimizing the architecture and weights at a higher (i.e., block) level. We first perform empirical studies to investigate the limitations of whole network-level and layer-level repairing, which motivates us to explore a novel direction for DNN repair at the block level. To this end, we first propose adversarial-aware spectrum analysis for vulnerable block localization, which considers the neurons' status and weights' gradients in blocks during the forward and backward passes and enables more accurate localization of candidate blocks for repairing, even with only a few examples. We then propose architecture-oriented search-based repairing, which relaxes the targeted block to a continuous repairing search space at higher deep feature levels. By jointly optimizing the architecture and weights in that space, we can identify a much better block architecture. We implement our proposed repairing techniques as a tool, named ArchRepair, and conduct extensive experiments to validate the proposed method. The results show that our method can not only repair but also enhance accuracy and robustness, outperforming state-of-the-art DNN repair techniques.

DeepRepair: Style-Guided Repairing for DNNs in the Real-world Operational Environment

Nov 19, 2020

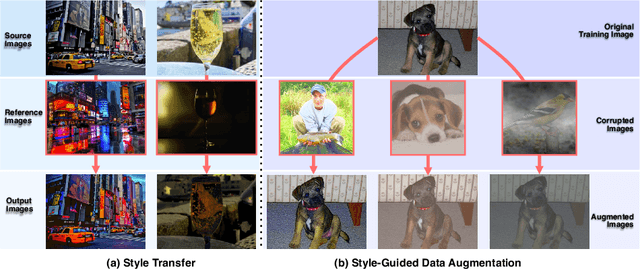

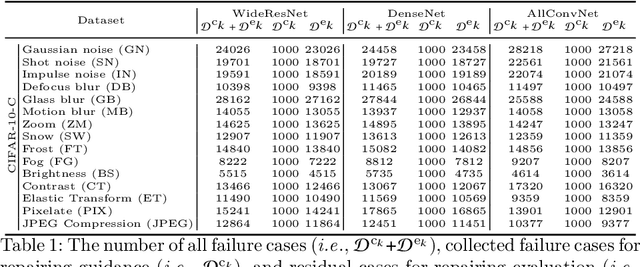

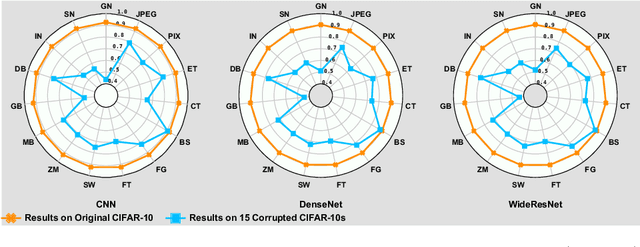

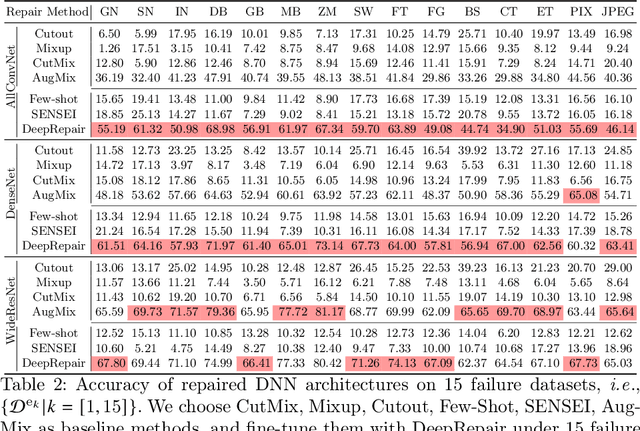

Abstract: Deep neural networks (DNNs) are widely applied in real-world applications across domains due to their high performance (e.g., high accuracy on image classification). Nevertheless, a well-trained DNN can often raise errors after deployment because of the mismatch between the distribution of the training dataset and potential unknown noise factors in the operational environment, e.g., weather, blur, and noise. This poses an important problem for the real-world application of DNNs: how to repair a deployed DNN so that it corrects failure samples (i.e., incorrect predictions) in the deployed operational environment without harming its ability to handle normal or clean data. The number of failure samples caused by noise factors in the operational environment that we can collect in practice is often limited. It is therefore challenging to repair more similar failures based on the limited failure samples we can collect. In this paper, we propose style-guided data augmentation for repairing DNNs in the operational environment. We propose a style transfer method to learn the unknown failure patterns within the failure data and introduce them into the training data via data augmentation. Moreover, we propose clustering-based failure data generation for much more effective style-guided data augmentation. We conduct a large-scale evaluation with fifteen degradation factors that may occur in the real world and compare with four state-of-the-art data augmentation methods and two DNN repairing methods, demonstrating that our method can significantly enhance the deployed DNNs on corrupted data in the operational environment, with even better accuracy on clean datasets.

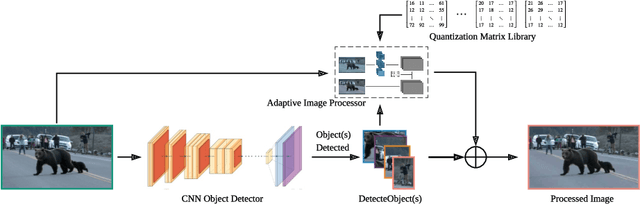

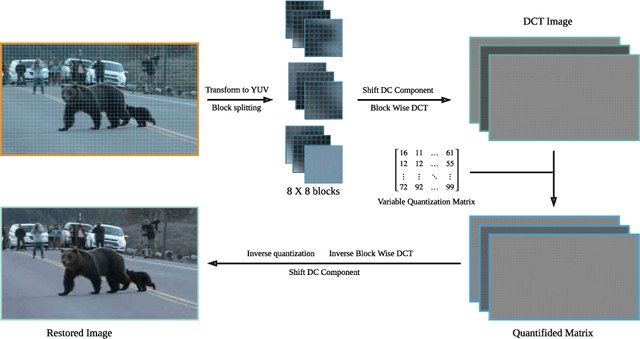

Object Detection-Based Variable Quantization Processing

Sep 01, 2020

Abstract: In this paper, we propose a preprocessing method for conventional image and video encoders that makes these existing encoders content-aware. With our process, a higher quality parameter can be set on a traditional encoder without increasing the output size. A still frame or an image first goes through an object detector. Either the properties of the detection result decide the parameters of the following procedures, or the system is bypassed if no object is detected in the given frame. The processing method uses an adaptive quantization process to determine the portion of data to be dropped. This method is primarily based on JPEG compression theory and is best suited to JPEG-based encoders such as JPEG and Motion JPEG encoders. However, other DCT-based encoders such as MPEG part 2, H.264, etc. can also benefit from this method. In the experiments, we compare MS-SSIM at the same bitrate, as well as bitrate at similar MS-SSIM. As this method is based on human perception, even with similar MS-SSIM, the overall viewing experience will be better than that of directly encoded output.
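The abstract does not spell out how detection results are mapped to quantization parameters, so the following is only a minimal sketch of the general idea, not the paper's pipeline. It assumes 8x8 blocks, the standard JPEG luminance table from ITU-T T.81 Annex K, the libjpeg-style quality scaling rule, and hypothetical names and defaults (`quality_map`, `q_object`, `q_background`): blocks that overlap a detected box receive a finer quantization table, the rest a coarser one, and the frame is bypassed when nothing is detected.

```python
# Standard JPEG luminance quantization table (ITU-T T.81, Annex K).
BASE_LUMA_TABLE = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def scaled_table(quality):
    """Scale the base table with the libjpeg-style quality rule (1..100)."""
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [[max(1, min(255, (q * scale + 50) // 100)) for q in row]
            for row in BASE_LUMA_TABLE]


def block_overlaps(bx, by, box, block=8):
    """True if block (bx, by) intersects box = (x0, y0, x1, y1) in pixels."""
    x0, y0, x1, y1 = box
    return (bx * block < x1 and (bx + 1) * block > x0 and
            by * block < y1 and (by + 1) * block > y0)


def quality_map(width, height, boxes, q_object=90, q_background=40):
    """Per-block quality values; None means 'bypass' (no object detected).

    The two quality levels are illustrative assumptions, not values
    taken from the paper.
    """
    if not boxes:
        return None
    cols, rows = (width + 7) // 8, (height + 7) // 8
    return [[q_object if any(block_overlaps(bx, by, b) for b in boxes)
             else q_background
             for bx in range(cols)]
            for by in range(rows)]


def quantize(coeffs, table):
    """Quantize DCT coefficients: divide by the step size and round."""
    return [[round(c / q) for c, q in zip(crow, qrow)]
            for crow, qrow in zip(coeffs, table)]
```

A caller would compute the DCT of each block, look up that block's quality in the map, and call `quantize(coeffs, scaled_table(quality))`. A larger step size in background blocks zeroes more coefficients, which is where the data is dropped.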

DeepRhythm: Exposing DeepFakes with Attentional Visual Heartbeat Rhythms

Jun 13, 2020

Abstract: As GAN-based face image and video generation techniques, widely known as DeepFakes, have become more mature and realistic, the need for an effective DeepFakes detector has become imperative. Motivated by the fact that remote visual photoplethysmography (PPG) is made possible by monitoring the minuscule periodic changes of skin color due to blood pumping through the face, we conjecture that the normal heartbeat rhythms found in real face videos will be diminished or even disrupted entirely in a DeepFake video, making them a powerful indicator for detecting DeepFakes. In this work, we show that our conjecture holds true and that the proposed method, termed DeepRhythm, can very effectively expose DeepFakes by monitoring the heartbeat rhythms. DeepRhythm utilizes dual-spatial-temporal attention to adapt to dynamically changing faces and fake types. Extensive experiments on the FaceForensics++ and DFDC-preview datasets demonstrate not only the effectiveness of our proposed method, but also how it generalizes over different datasets with various DeepFakes generation techniques and multifarious challenging degradations.
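To make the remote PPG idea concrete, here is a minimal sketch of how a heartbeat frequency can be read from periodic skin-color changes. It is not DeepRhythm, which relies on learned dual-spatial-temporal attention. It assumes the input is a list of per-frame mean color values over a face region (for example the green channel), and the function name `estimate_bpm` and the 0.7 to 4.0 Hz search band are illustrative choices.

```python
import cmath
import math


def estimate_bpm(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the dominant frequency of `signal` within the band, in beats
    per minute, or None if no DFT bin falls inside the band.

    signal: per-frame mean skin-color values (assumed input format).
    fps:    video frame rate in frames per second.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the constant skin tone

    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k in Hz
        if not (low_hz <= freq <= high_hz):
            continue
        # Naive DFT coefficient for bin k (O(n) per bin, fine for a sketch).
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    return None if best_freq is None else best_freq * 60.0


# Synthetic check: a faint 1.2 Hz oscillation sampled at 30 fps for 10 s.
fps = 30
frames = [0.5 + 0.01 * math.sin(2 * math.pi * 1.2 * t / fps)
          for t in range(300)]
bpm = estimate_bpm(frames, fps)
```

In a real video the signal is far noisier than this synthetic sine, and a spectrum with no clear peak in the band is the kind of disrupted rhythm the abstract conjectures for DeepFake videos.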
