Harnaik Dhami

Planning and Perception for Unmanned Aerial Vehicles in Object and Environmental Monitoring

Jul 17, 2024

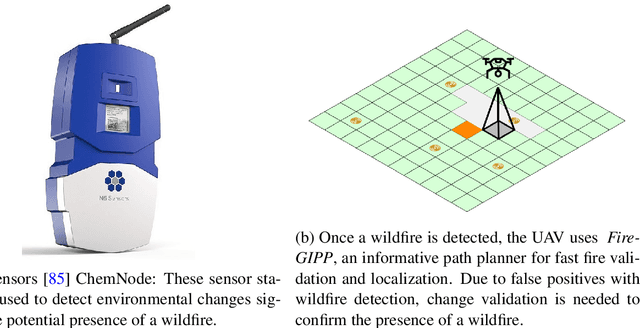

Abstract: Unmanned Aerial Vehicles (UAVs) equipped with high-resolution sensors enable extensive data collection from previously inaccessible areas at a remarkable spatio-temporal scale, promising to revolutionize fields such as precision agriculture and infrastructure inspection. To fully exploit their potential, developing autonomy algorithms for planning and perception is crucial. This dissertation focuses on developing planning and perception algorithms tailored to UAVs used in monitoring applications. In the first part, we address object monitoring and its associated planning challenges. Object monitoring involves continuous observation, tracking, and analysis of specific objects. We tackle the problem of visual reconstruction where the goal is to maximize visual coverage of an object in an unknown environment efficiently. Leveraging shape prediction deep learning models, we optimize planning for quick information gathering. Extending this to multi-UAV systems, we create efficient paths around objects based on reconstructed 3D models, crucial for close-up inspections aimed at detecting changes. Next, we explore inspection scenarios where an object has changed or no prior information is available, focusing on infrastructure inspection. We validate our planning algorithms through real-world experiments and high-fidelity simulations, integrating defect detection seamlessly into the process. In the second part, we shift focus to monitoring entire environments, distinct from object-specific monitoring. Here, the goal is to maximize coverage to understand spatio-temporal changes. We investigate slow-changing environments like vegetative growth estimation and fast-changing environments such as wildfire management. For wildfires, we employ informative path planning to validate and localize fires early, utilizing LSTM networks for enhanced early detection.

MAP-NBV: Multi-agent Prediction-guided Next-Best-View Planning for Active 3D Object Reconstruction

Jul 08, 2023

Abstract: We propose MAP-NBV, a prediction-guided active algorithm for 3D reconstruction with multi-agent systems. Prediction-based approaches have shown great improvement in active perception tasks by learning cues about structures in the environment from data. However, these methods primarily focus on single-agent systems. We design a next-best-view approach that utilizes geometric measures over the predictions and jointly optimizes the information gain and control effort for efficient collaborative 3D reconstruction of the object. Our method achieves a 22.75% improvement over the prediction-based single-agent approach and a 15.63% improvement over the non-predictive multi-agent approach. We make our code publicly available through our project website: http://raaslab.org/projects/MAPNBV/

Pred-NBV: Prediction-guided Next-Best-View for 3D Object Reconstruction

Apr 22, 2023

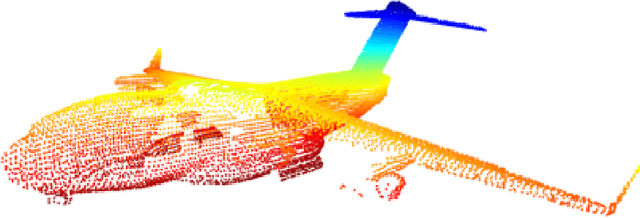

Abstract: Prediction-based active perception has shown the potential to improve the navigation efficiency and safety of the robot by anticipating the uncertainty in the unknown environment. Existing works on 3D shape prediction make an implicit assumption about the partial observations and therefore cannot be used for real-world planning; they also do not consider the control effort for next-best-view planning. We present Pred-NBV, a realistic object shape reconstruction method consisting of PoinTr-C, an enhanced 3D prediction model trained on the ShapeNet dataset, and an information and control effort-based next-best-view method to address these issues. Pred-NBV shows an improvement of 25.46% in object coverage over the traditional method in the AirSim simulator, and performs better shape completion than PoinTr, the state-of-the-art shape completion model, even on real data obtained from a Velodyne 3D LiDAR mounted on a DJI M600 Pro.
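The trade-off between information gain and control effort mentioned in the abstract can be illustrated with a small sketch. This is not Pred-NBV's actual formulation: the function names, the range-based visibility model, and the weight `lam` are all assumptions made for illustration. Each candidate viewpoint is scored by the number of predicted but not yet observed points it would cover, minus a penalty proportional to the travel distance.

```python
import math

def visible_points(view, points, max_range):
    """Indices of points within max_range of the view.

    Assumption: a crude range-only visibility model (no occlusion, no field of view).
    """
    return {i for i, p in enumerate(points) if math.dist(view, p) <= max_range}

def next_best_view(current, candidates, predicted_points, observed,
                   max_range=2.0, lam=0.1):
    """Pick the candidate maximizing (new points seen) - lam * (travel distance).

    `observed` is the set of indices of predicted points already covered.
    `lam` is a hypothetical weight on control effort.
    """
    best, best_score = None, -math.inf
    for view in candidates:
        gain = len(visible_points(view, predicted_points, max_range) - observed)
        effort = math.dist(current, view)
        score = gain - lam * effort
        if score > best_score:
            best, best_score = view, score
    return best, best_score

# Toy example: two points already observed near the origin, two unseen farther away.
points = [(0, 0, 0), (1, 0, 0), (5, 0, 0), (6, 0, 0)]
view, score = next_best_view((0, 0, 0), [(0.5, 0, 0), (5.5, 0, 0)], points, {0, 1})
print(view, round(score, 2))
```

In this toy setup the farther candidate wins because the two newly covered points outweigh the travel penalty; raising `lam` shifts the choice toward nearby views.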

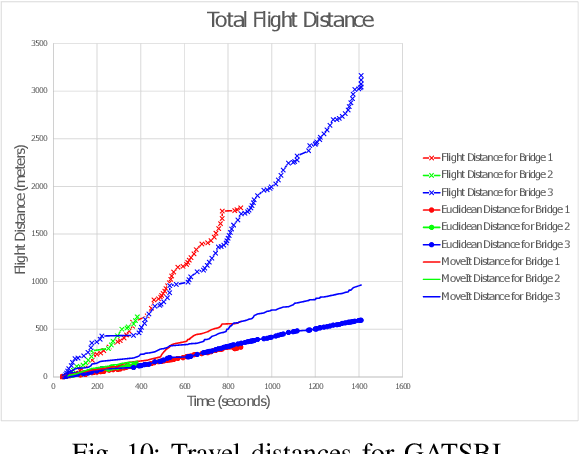

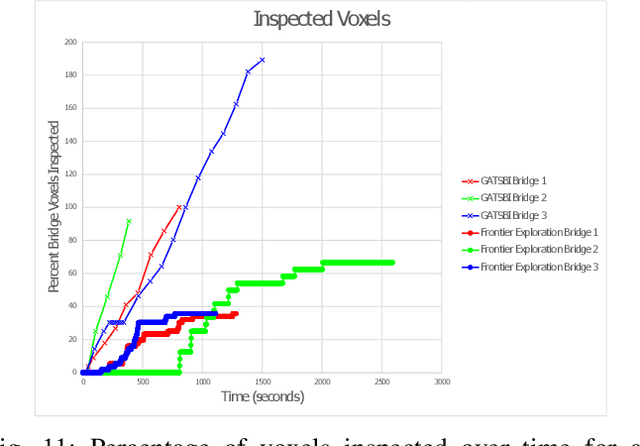

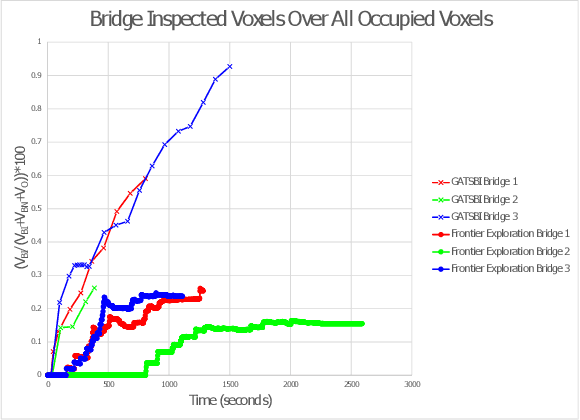

GATSBI: An Online GTSP-Based Algorithm for Targeted Surface Bridge Inspection

Dec 09, 2020

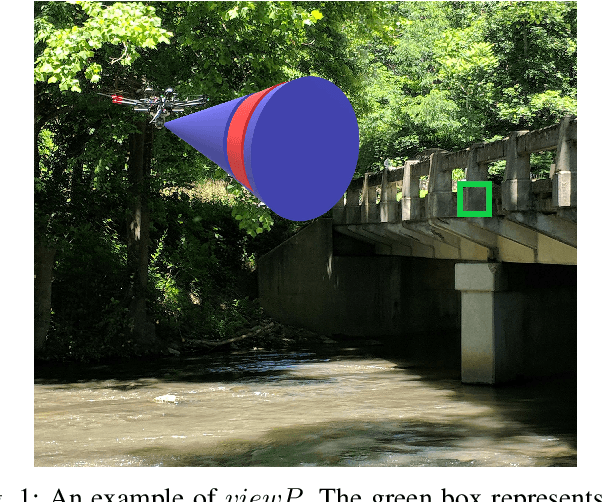

Abstract: We study the problem of visually inspecting the surface of a bridge for defects using an Unmanned Aerial Vehicle (UAV). We do not assume that the geometric model of the bridge is known. The UAV is equipped with a LiDAR and an RGB sensor that are used to build a 3D semantic map of the environment. Our planner, termed GATSBI, plans in an online fashion a path that is targeted towards inspecting all points on the surface of the bridge. The input to GATSBI consists of a 3D occupancy grid map of the part of the environment seen by the UAV so far. We use semantic segmentation to separate the voxels that are part of the bridge from those that belong to the surroundings. Inspecting a bridge voxel requires the UAV to take images from a desired viewing angle and distance. We then create a Generalized Traveling Salesperson Problem (GTSP) instance to cluster candidate viewpoints for inspecting the bridge voxels and use an off-the-shelf GTSP solver to find the optimal path for the given instance. As more parts of the environment are seen, we replan the path. We evaluate the performance of our algorithm through high-fidelity simulations conducted in Gazebo. We compare the performance of this algorithm with a frontier exploration algorithm. Our evaluation reveals that targeting the inspection to only the segmented bridge voxels and planning carefully using a GTSP solver leads to more efficient inspection than the baseline algorithms.
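To make the GTSP formulation concrete, here is a minimal sketch of the problem structure: each cluster holds the candidate viewpoints that can inspect one bridge voxel, and a solution visits exactly one viewpoint per cluster. The brute-force search below is an assumption chosen for clarity and only works on tiny instances; it is not the off-the-shelf solver the abstract refers to, and it computes an open path from the start rather than a closed tour.

```python
import itertools
import math

def solve_gtsp_bruteforce(start, clusters):
    """Shortest open path from `start` visiting one viewpoint from each cluster.

    `clusters` is a list of lists of coordinate tuples. Exhaustive search:
    tries every cluster order and every choice of viewpoint per cluster,
    so it is only usable on toy instances.
    """
    best_len, best_path = math.inf, None
    for order in itertools.permutations(range(len(clusters))):
        for choice in itertools.product(*(clusters[i] for i in order)):
            path = [start, *choice]
            length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
            if length < best_len:
                best_len, best_path = length, path
    return best_len, best_path

# Two voxels, each with two candidate viewpoints (hypothetical coordinates).
clusters = [[(1, 0), (10, 0)], [(2, 0), (0, 10)]]
length, path = solve_gtsp_bruteforce((0, 0), clusters)
print(length, path)
```

In an online setting like the one described, such an instance would be rebuilt and re-solved as new voxels are observed, starting from the UAV's current position.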

Crop Height and Plot Estimation from Unmanned Aerial Vehicles using 3D LiDAR

Oct 30, 2019

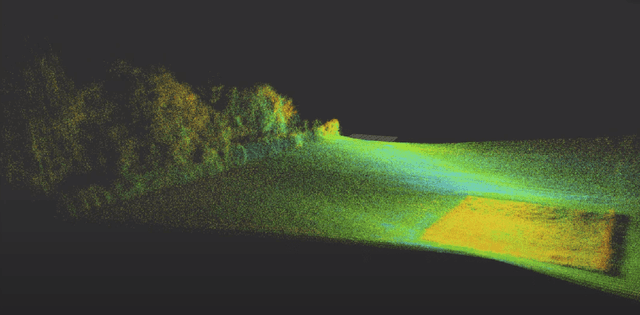

Abstract: In this paper, we present techniques to measure crop heights using a 3D LiDAR mounted on an Unmanned Aerial Vehicle (UAV). Knowing the height of plants is crucial to monitor their overall health and growth cycles, especially for high-throughput plant phenotyping. We present a methodology for extracting plant heights from 3D LiDAR point clouds, specifically focusing on row-crop environments. The key steps in our algorithm are clustering of LiDAR points to semi-automatically detect plots, local ground plane estimation, and height estimation. The plot detection uses a k-means clustering algorithm followed by a voting scheme to find the bounding boxes of individual plots. We conducted a series of experiments in controlled and natural settings. Our algorithm was able to estimate the plant heights in a field with 112 plots within ±5.36%. This is the first such dataset for 3D LiDAR from an airborne robot over a wheat field. The developed code can be found on the GitHub repository located at https://github.com/hsd1121/PointCloudProcessing.
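The last two steps named in the abstract, local ground estimation and height estimation, can be sketched for a single plot as follows. This is an illustrative simplification and not the code from the linked repository: it assumes the plot's bounding box is already known, approximates the local ground by a constant level taken from a low percentile of the returns in a margin just outside the box, and takes the canopy from a high percentile inside it. The function names, percentiles, and margin are hypothetical.

```python
def percentile(values, q):
    """Nearest-rank style percentile of a non-empty sequence, q in [0, 100]."""
    ordered = sorted(values)
    idx = round(q / 100 * (len(ordered) - 1))
    return ordered[idx]

def plot_height(points, box, ground_q=5, canopy_q=95, margin=0.5):
    """Estimate crop height for one plot.

    points: iterable of (x, y, z) LiDAR returns.
    box: (xmin, ymin, xmax, ymax) bounding box of the plot.
    Ground level comes from returns in a `margin`-wide band around the box
    (falling back to the plot itself if the band is empty).
    """
    xmin, ymin, xmax, ymax = box
    inside, border = [], []
    for x, y, z in points:
        if xmin <= x <= xmax and ymin <= y <= ymax:
            inside.append(z)
        elif (xmin - margin <= x <= xmax + margin
              and ymin - margin <= y <= ymax + margin):
            border.append(z)
    ground = percentile(border or inside, ground_q)
    canopy = percentile(inside, canopy_q)
    return canopy - ground

# Toy plot: bare ground at z = 0 around the box, canopy returns at z = 0.8 inside it.
ground_pts = [(-0.2, 0.5, 0.0), (1.2, 0.5, 0.0), (0.5, -0.2, 0.0), (0.5, 1.2, 0.0)]
canopy_pts = [(0.5, 0.5, 0.8), (0.4, 0.4, 0.8), (0.6, 0.6, 0.8)]
print(plot_height(ground_pts + canopy_pts, (0, 0, 1, 1)))
```

Using percentiles instead of the minimum and maximum makes the estimate less sensitive to isolated noisy returns; fitting a plane to the border points would be a closer match to a ground plane estimate on sloped fields.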
