Planning and Perception for Unmanned Aerial Vehicles in Object and Environmental Monitoring

Jul 17, 2024

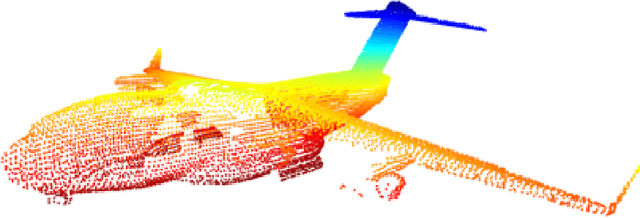

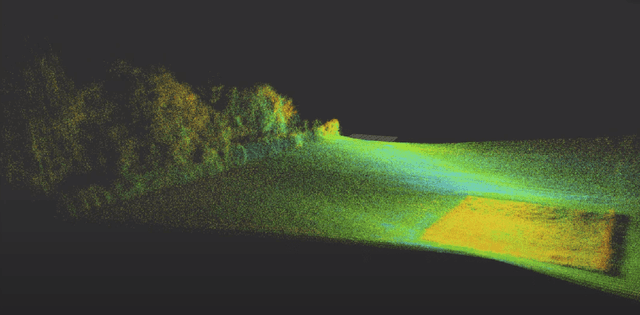

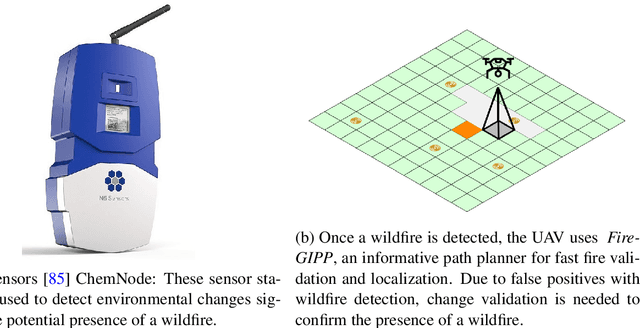

Unmanned Aerial Vehicles (UAVs) equipped with high-resolution sensors enable extensive data collection from previously inaccessible areas at a remarkable spatio-temporal scale, promising to revolutionize fields such as precision agriculture and infrastructure inspection. To fully exploit their potential, developing autonomy algorithms for planning and perception is crucial. This dissertation focuses on developing planning and perception algorithms tailored to UAVs used in monitoring applications.

In the first part, we address object monitoring and its associated planning challenges. Object monitoring involves continuous observation, tracking, and analysis of specific objects. We tackle the problem of visual reconstruction where the goal is to maximize visual coverage of an object in an unknown environment efficiently. Leveraging shape prediction deep learning models, we optimize planning for quick information gathering. Extending this to multi-UAV systems, we create efficient paths around objects based on reconstructed 3D models, crucial for close-up inspections aimed at detecting changes. Next, we explore inspection scenarios where an object has changed or no prior information is available, focusing on infrastructure inspection. We validate our planning algorithms through real-world experiments and high-fidelity simulations, integrating defect detection seamlessly into the process.

In the second part, we shift focus to monitoring entire environments, distinct from object-specific monitoring. Here, the goal is to maximize coverage to understand spatio-temporal changes. We investigate slow-changing environments like vegetative growth estimation and fast-changing environments such as wildfire management. For wildfires, we employ informative path planning to validate and localize fires early, utilizing LSTM networks for enhanced early detection.
