Harini Kesavamoorthy

TRScore: A Novel GPT-based Readability Scorer for ASR Segmentation and Punctuation model evaluation and selection

Oct 27, 2022

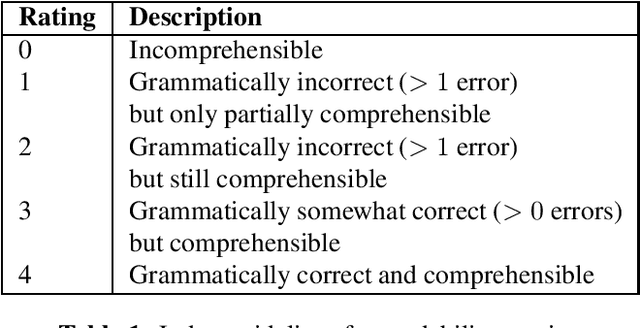

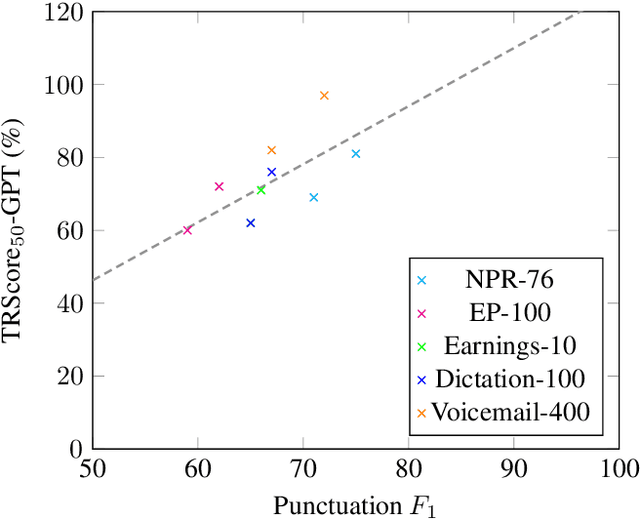

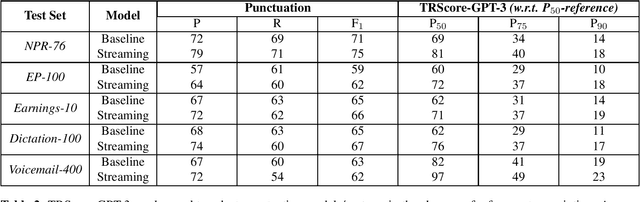

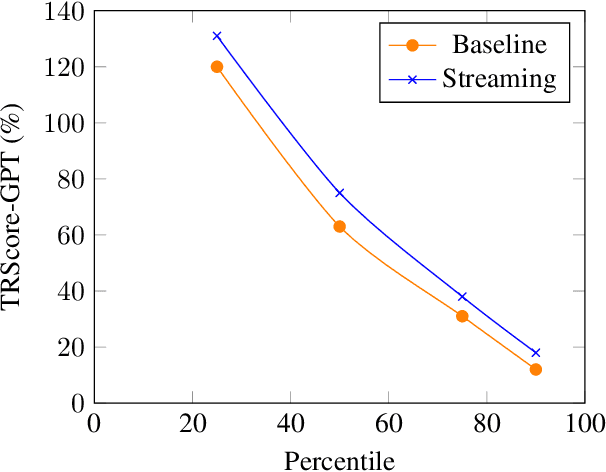

Abstract: Punctuation and segmentation are key to readability in Automatic Speech Recognition (ASR). They are often evaluated using F1 scores, which require high-quality human transcripts and do not reflect readability well. Human evaluation is expensive, time-consuming, and suffers from large inter-observer variability, especially in conversational speech devoid of strict grammatical structures. Large pre-trained models capture a notion of grammatical structure. We present TRScore, a novel readability measure that uses the GPT model to evaluate different segmentation and punctuation systems. We validate our approach with human experts. Additionally, our approach enables quantitative assessment of the effect of text post-processing techniques such as capitalization, inverse text normalization (ITN), and disfluency on overall readability, which traditional word error rate (WER) and slot error rate (SER) metrics fail to capture. TRScore is strongly correlated with traditional F1 and human readability scores, with Pearson's correlation coefficients of 0.67 and 0.98, respectively. It also eliminates the need for human transcriptions for model selection.
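The abstract reports agreement between metrics as Pearson's correlation coefficient. As a minimal sketch of that statistic only (not the authors' evaluation code), the function below computes Pearson's r for two equal-length lists of scores. The name `pearson_r` and the toy score lists are hypothetical and are not taken from the paper.

```python
import math


def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical toy values, purely illustrative: one automatic score and one
# human rating per system under comparison.
automatic_scores = [1.0, 2.0, 3.0, 4.0]
human_scores = [2.0, 4.0, 6.0, 8.0]
r = pearson_r(automatic_scores, human_scores)
```

A value of r near 1 means the two scores rank and space the systems almost identically; a value near 0 means there is no linear relationship.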

Activity Recognition on a Large Scale in Short Videos - Moments in Time Dataset

Sep 13, 2018

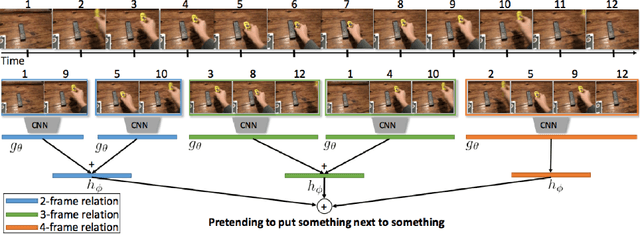

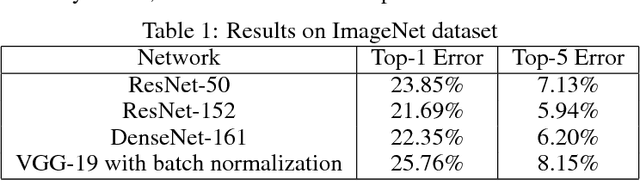

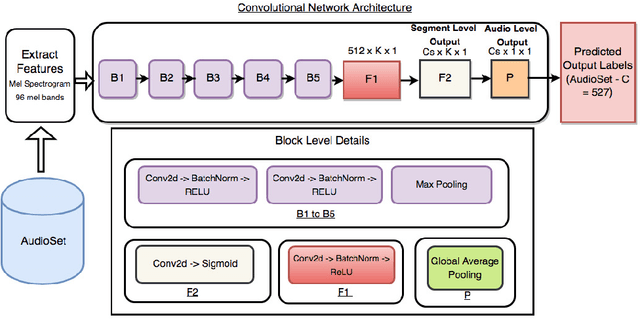

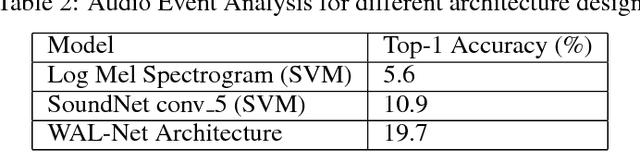

Abstract: Moments capture a huge part of our lives. Accurate recognition of these moments is challenging due to the diverse and complex interpretation of the moments. Action recognition refers to the act of classifying the desired action/activity present in a given video. In this work, we perform experiments on the Moments in Time dataset to accurately recognize activities occurring in 3-second clips. We use state-of-the-art techniques for visual, auditory, and spatio-temporal localization and develop a method to accurately classify the activity in the Moments in Time dataset. Our novel approach of using Visual Based Textual features and fusion techniques performs well, providing an overall 89.23% Top-5 accuracy on the 20 classes, a significant improvement over the baseline TRN model.
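The headline result is a Top-5 accuracy: a clip counts as correct when its true class is among the five highest-scoring predictions. The sketch below illustrates that metric only, under the assumption that a classifier yields one score per class for each clip. The function name `top_k_accuracy` and the toy scores are hypothetical and do not come from the paper.

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: one list of per-class scores per sample.
    labels: the true class index for each sample.
    """
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and equal in length")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Hypothetical toy scores for two clips over three classes.
toy_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]
toy_labels = [2, 1]
top1 = top_k_accuracy(toy_scores, toy_labels, k=1)
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)
```

In this toy case neither clip's true class is ranked first, but both are ranked second, so the score rises from 0.0 at k=1 to 1.0 at k=2.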
