Hardik Chauhan

Indian Institute of Technology Roorkee, India

Reinforced Multi-task Approach for Multi-hop Question Generation

Apr 07, 2020

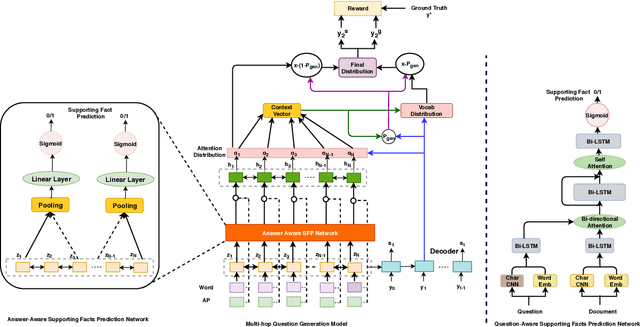

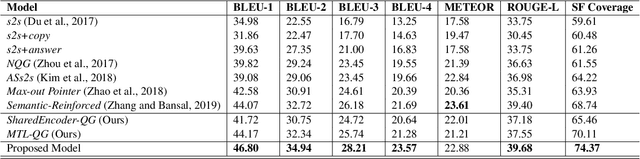

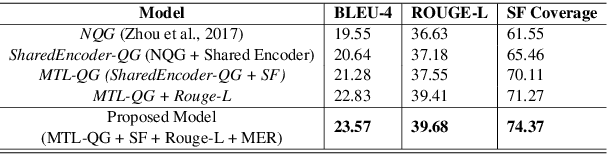

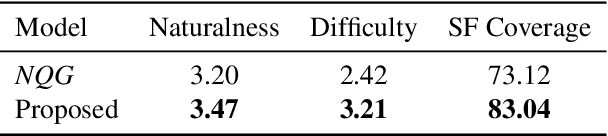

Abstract: Question generation (QG) attempts to solve the inverse of the question answering (QA) problem by generating a natural language question given a document and an answer. While sequence-to-sequence neural models surpass rule-based systems for QG, they are limited in their capacity to focus on more than one supporting fact. For QG, we often require multiple supporting facts to generate high-quality questions. Inspired by recent work on multi-hop reasoning in QA, we take up multi-hop question generation, which aims at generating relevant questions based on supporting facts in the context. We employ multitask learning with the auxiliary task of answer-aware supporting fact prediction to guide the question generator. In addition, we propose a question-aware reward function in a Reinforcement Learning (RL) framework to maximize the utilization of the supporting facts. We demonstrate the effectiveness of our approach through experiments on the multi-hop question answering dataset, HotPotQA. Empirical evaluation shows that our model outperforms single-hop neural question generation models on both automatic evaluation metrics, such as BLEU, METEOR, and ROUGE, and human evaluation metrics for the quality and coverage of the generated questions.
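The abstract does not give the training objective, so the following is only a minimal sketch of how a multitask loss and a reward-weighted RL term are commonly combined. The function names, the `aux_weight` parameter, the binary cross-entropy choice for supporting fact prediction, and the baseline-subtracted policy-gradient form are all assumptions for illustration, not the authors' formulation.

```python
import math

def binary_cross_entropy(probs, labels):
    """Mean binary cross-entropy over per-sentence supporting-fact predictions."""
    eps = 1e-12  # guards against log(0)
    terms = [
        -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
        for p, y in zip(probs, labels)
    ]
    return sum(terms) / len(terms)

def multitask_loss(qg_nll, sf_probs, sf_labels, aux_weight=0.5):
    """Question-generation loss plus a weighted auxiliary loss.

    qg_nll: negative log-likelihood of the reference question (main task).
    sf_probs / sf_labels: predicted probability and 0/1 label that each
    sentence is a supporting fact (auxiliary task).
    aux_weight: hypothetical mixing weight, not taken from the paper.
    """
    return qg_nll + aux_weight * binary_cross_entropy(sf_probs, sf_labels)

def policy_gradient_loss(sample_log_prob, sample_reward, baseline_reward):
    """Baseline-subtracted policy-gradient loss (an assumed RL formulation).

    A sampled question whose reward beats the baseline has its
    log-probability pushed up when this loss is minimized.
    """
    return -(sample_reward - baseline_reward) * sample_log_prob

# Toy numbers only, chosen to make the arithmetic easy to follow.
mt = multitask_loss(qg_nll=1.0, sf_probs=[0.5, 0.5], sf_labels=[1, 0])
rl = policy_gradient_loss(sample_log_prob=-2.0, sample_reward=0.8, baseline_reward=0.5)
```

In this sketch the reward would come from whatever question-aware scoring function is used; here it is passed in as a plain number.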

An Attention Model for group-level emotion recognition

Jul 09, 2018

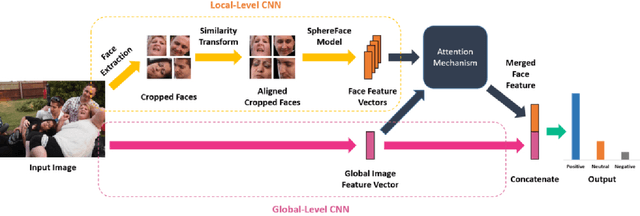

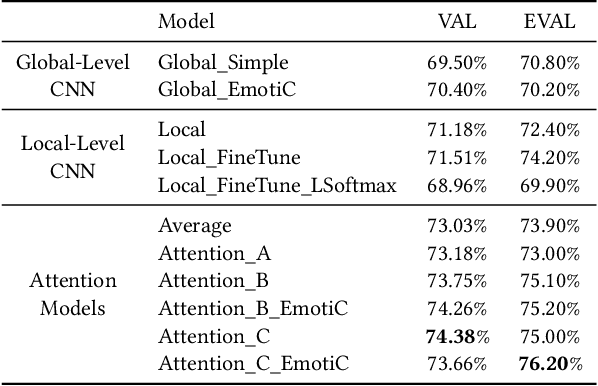

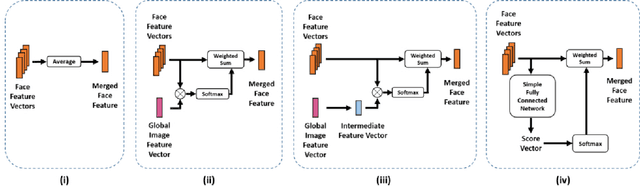

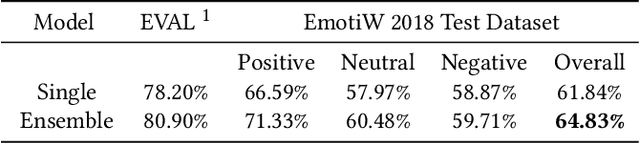

Abstract: In this paper, we propose a new approach for classifying the global emotion of images containing groups of people. To achieve this task, we consider two different and complementary sources of information: (i) a global representation of the entire image and (ii) a local representation where only faces are considered. While the global representation of the image is learned with a convolutional neural network (CNN), the local representation is obtained by merging face features through an attention mechanism. The two representations are first learned independently with two separate CNN branches and then fused through concatenation in order to obtain the final group-emotion classifier. For our submission to the EmotiW 2018 group-level emotion recognition challenge, we combine several variations of the proposed model into an ensemble, obtaining a final accuracy of 64.83% on the test set and ranking 4th among all challenge participants.
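The merge-then-concatenate idea described above can be sketched as follows. This is an illustrative example, not the authors' model: the dot-product scoring against a `query` vector, the function names, and the toy feature sizes are assumptions, since the abstract does not specify how attention scores are computed.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(face_features, query):
    """Merge a variable number of face vectors into a single vector.

    Each face is scored by its dot product with `query` (a hypothetical
    learned vector); the scores are normalized with softmax and used as
    weights in a weighted sum of the face vectors.
    """
    scores = [sum(f * q for f, q in zip(face, query)) for face in face_features]
    weights = softmax(scores)
    dim = len(face_features[0])
    pooled = [
        sum(w * face[d] for w, face in zip(weights, face_features))
        for d in range(dim)
    ]
    return pooled, weights

def fuse(global_feature, face_features, query):
    """Concatenate the global image feature with the pooled face feature."""
    pooled, _ = attention_pool(face_features, query)
    return list(global_feature) + pooled

# Toy example: two 2-d face vectors and a 3-d global image vector.
faces = [[1.0, 0.0], [0.0, 1.0]]
pooled, weights = attention_pool(faces, query=[0.0, 0.0])
fused = fuse([2.0, 3.0, 4.0], faces, query=[0.0, 0.0])
```

With an all-zero query every face receives the same weight, so the pooled vector is simply the mean of the face vectors. A classifier would then take the fused vector as input.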
