Haowen Lai

Building Audio-Visual Digital Twins with Smartphones

Dec 11, 2025

Abstract:Digital twins today are almost entirely visual, overlooking acoustics, a core component of spatial realism and interaction. We introduce AV-Twin, the first practical system that constructs editable audio-visual digital twins using only commodity smartphones. AV-Twin combines mobile RIR capture and a visual-assisted acoustic field model to efficiently reconstruct room acoustics. It further recovers per-surface material properties through differentiable acoustic rendering, enabling users to modify materials, geometry, and layout while automatically updating both audio and visuals. Together, these capabilities establish a practical path toward fully modifiable audio-visual digital twins for real-world environments.

Enabling Visual Recognition at Radio Frequency

May 29, 2024

Abstract:This paper introduces PanoRadar, a novel RF imaging system that brings RF resolution close to that of LiDAR, while providing resilience against conditions challenging for optical signals. Our LiDAR-comparable 3D imaging results enable, for the first time, a variety of visual recognition tasks at radio frequency, including surface normal estimation, semantic segmentation, and object detection. PanoRadar utilizes a rotating single-chip mmWave radar, along with a combination of novel signal processing and machine learning algorithms, to create high-resolution 3D images of the surroundings. Our system accurately estimates robot motion, allowing for coherent imaging through a dense grid of synthetic antennas. It also exploits the high azimuth resolution to enhance elevation resolution using learning-based methods. Furthermore, PanoRadar tackles 3D learning via 2D convolutions and addresses challenges due to the unique characteristics of RF signals. Our results demonstrate PanoRadar's robust performance across 12 buildings.

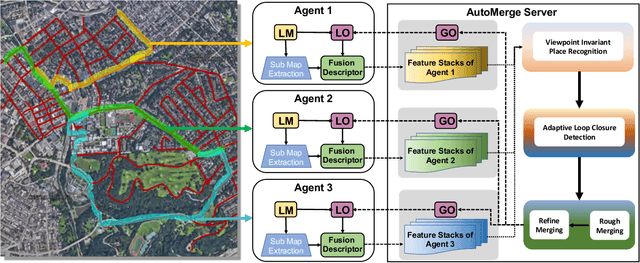

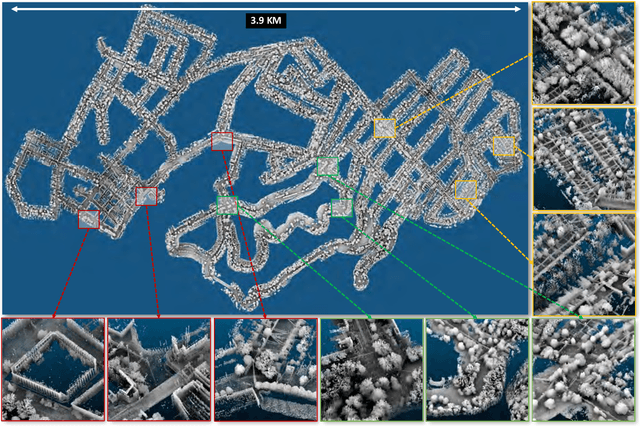

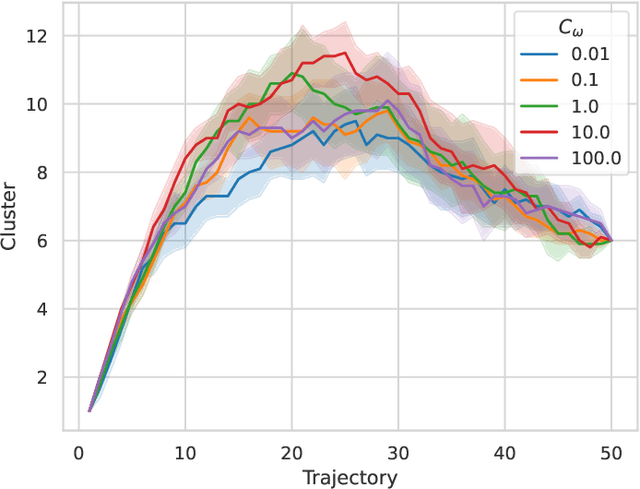

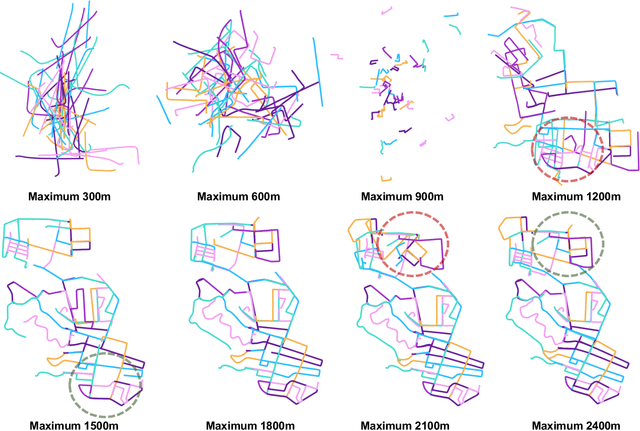

AutoMerge: A Framework for Map Assembling and Smoothing in City-scale Environments

Jul 14, 2022

Abstract:We present AutoMerge, a LiDAR data processing framework for assembling a large number of map segments into a complete map. Traditional large-scale map merging methods are fragile to incorrect data associations, and are primarily limited to working only offline. AutoMerge utilizes multi-perspective fusion and adaptive loop closure detection for accurate data associations, and it uses incremental merging to assemble large maps from individual trajectory segments given in random order and with no initial estimations. Furthermore, after assembling the segments, AutoMerge performs fine matching and pose-graph optimization to globally smooth the merged map. We demonstrate AutoMerge on both city-scale merging (120 km) and campus-scale repeated merging (4.5 km × 8). The experiments show that AutoMerge (i) surpasses the second- and third-best methods by 14% and 24% recall in segment retrieval, (ii) achieves comparable 3D mapping accuracy for 120 km large-scale map assembly, and (iii) is robust to temporally-spaced revisits. To the best of our knowledge, AutoMerge is the first mapping approach that can merge hundreds of kilometers of individual segments without the aid of GPS.

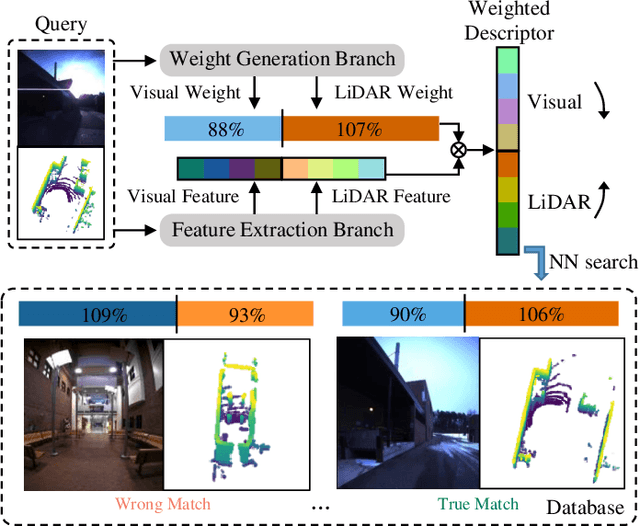

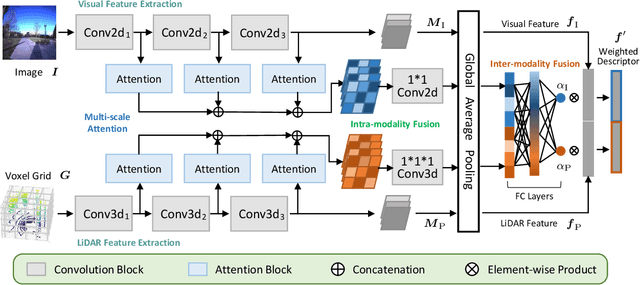

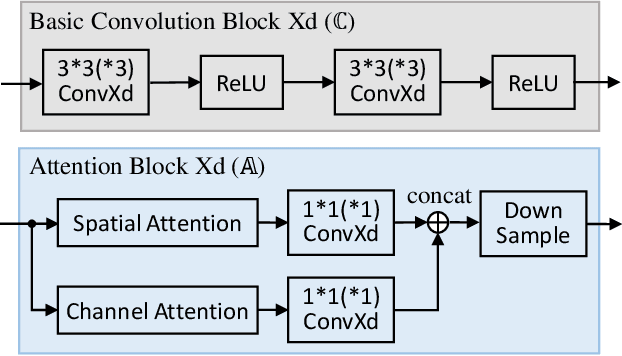

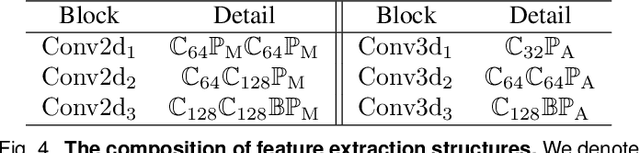

AdaFusion: Visual-LiDAR Fusion with Adaptive Weights for Place Recognition

Nov 23, 2021

Abstract:Recent years have witnessed the increasing application of place recognition in various environments, such as city roads, large buildings, and a mix of indoor and outdoor places. This task, however, remains challenging due to the limitations of different sensors and the changing appearance of environments. Current works only consider the use of individual sensors, or simply combine different sensors, ignoring the fact that the importance of different sensors varies as the environment changes. In this paper, an adaptive weighting visual-LiDAR fusion method, named AdaFusion, is proposed to learn the weights for both image and point cloud features. Features of these two modalities thus contribute differently according to the current environmental situation. The learning of weights is achieved by the attention branch of the network, which is then fused with the multi-modality feature extraction branch. Furthermore, to better utilize the potential relationship between images and point clouds, we design a two-stage fusion approach to combine the 2D and 3D attention. Our work is tested on two public datasets, and experiments show that the adaptive weights help improve recognition accuracy and system robustness to varying environments.
