Hao-Zhe Feng

Prior-Enhanced Few-Shot Segmentation with Meta-Prototypes

Jun 01, 2021

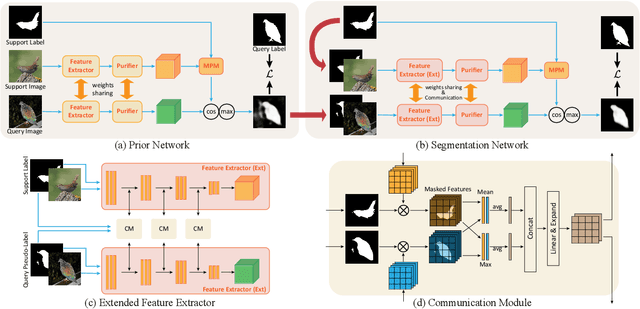

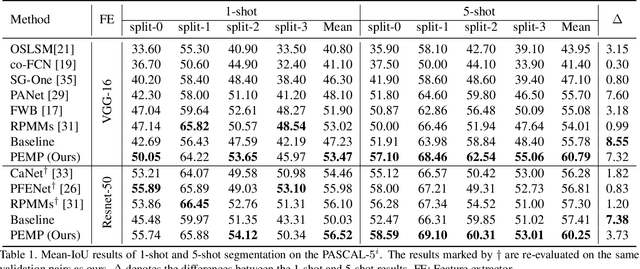

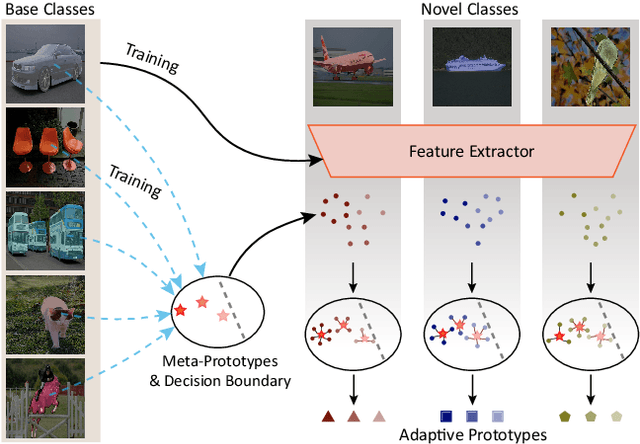

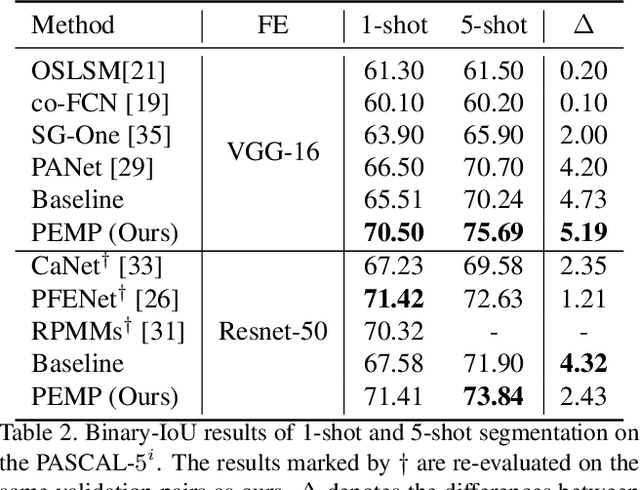

Abstract: Few-shot segmentation (FSS) performance has been substantially improved by episodic training and class-wise prototypes. However, the FSS problem remains challenging due to three limitations: (1) models are distracted by task-unrelated information; (2) the representation ability of a single prototype is limited; (3) class-related prototypes ignore the prior knowledge of base classes. We propose the Prior-Enhanced network with Meta-Prototypes to tackle these limitations. The prior-enhanced network leverages the support and query (pseudo-) labels in feature extraction, which guides the model to focus on the task-related features of the foreground objects and suppresses much of the noise caused by the lack of supervised knowledge. Moreover, we introduce multiple meta-prototypes to encode hierarchical features and learn class-agnostic structural information. The hierarchical features help the model highlight the decision boundary and focus on hard pixels, and the structural information learned from base classes is treated as prior knowledge for novel classes. Experiments show that our method achieves mean-IoU scores of 60.79% and 41.16% on PASCAL-$5^i$ and COCO-$20^i$, outperforming the state-of-the-art method by 3.49% and 5.64% in the 5-shot setting. Moreover, compared with the 1-shot results, our method improves 5-shot accuracy by 3.73% and 10.32% on these two benchmarks. The source code of our method is available at https://github.com/Jarvis73/PEMP.
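For readers unfamiliar with the class-wise prototypes mentioned in the abstract, the sketch below shows one common baseline: a single prototype built by masked average pooling over support features, then matched to query pixels by cosine similarity. It is an illustration of that generic idea only, not the paper's meta-prototype method; the function names, the flat per-pixel feature layout, and the `threshold` value are assumptions made for this example.

```python
import math


def masked_average_prototype(features, mask):
    """Average the feature vectors at foreground positions (mask == 1).

    features: list of D-dimensional feature vectors, one per pixel.
    mask:     list of 0/1 foreground labels, one per pixel.
    """
    foreground = [f for f, m in zip(features, mask) if m == 1]
    if not foreground:
        raise ValueError("mask contains no foreground pixels")
    dim = len(foreground[0])
    return [sum(f[d] for f in foreground) / len(foreground) for d in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def segment_query(query_features, prototype, threshold=0.5):
    """Label a query pixel as foreground when it is similar to the prototype.

    The threshold is a hypothetical value chosen for this toy example.
    """
    return [1 if cosine_similarity(q, prototype) > threshold else 0
            for q in query_features]


# Toy episode: four support pixels with 2-D features, two of them foreground.
support_features = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9]]
support_mask = [1, 1, 0, 0]
prototype = masked_average_prototype(support_features, support_mask)

query_features = [[0.9, 0.1], [0.0, 1.0]]
prediction = segment_query(query_features, prototype)
print(prototype, prediction)
```

A single averaged vector like this discards spatial and boundary detail, which is the kind of limited representation ability the abstract lists as limitation (2).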

KD3A: Unsupervised Multi-Source Decentralized Domain Adaptation via Knowledge Distillation

Dec 08, 2020

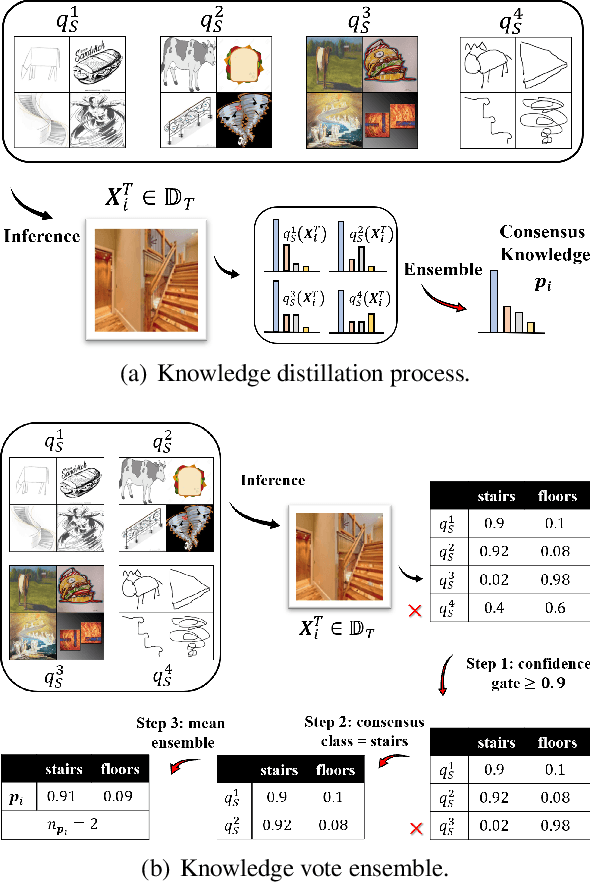

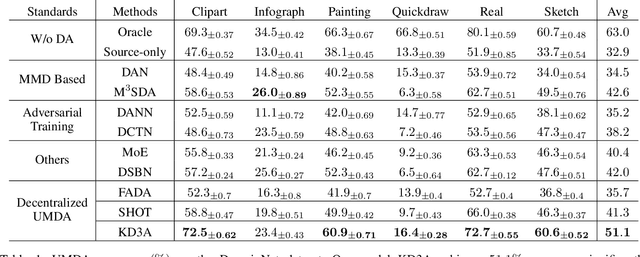

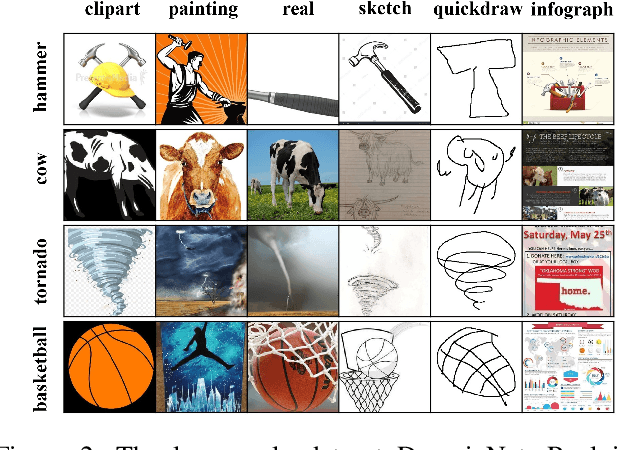

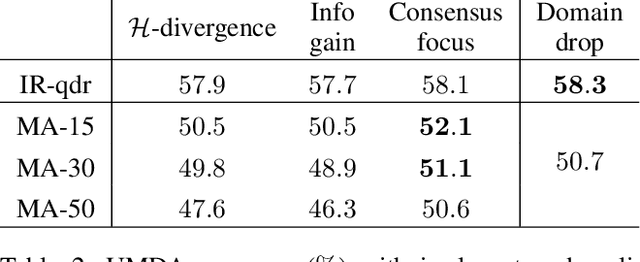

Abstract: Conventional unsupervised multi-source domain adaptation (UMDA) methods assume all source domains can be accessed directly. This neglects privacy-preserving policies, under which all data and computations must be kept decentralized. Three problems arise in this scenario: (1) Minimizing the domain distance requires pairwise calculations on data from the source and target domains, which are not accessible. (2) The communication cost and privacy security limit the application of UMDA methods (e.g., domain adversarial training). (3) Since users have no authority to check the data quality, irrelevant or malicious source domains are more likely to appear, which causes negative transfer. In this study, we propose a privacy-preserving UMDA paradigm named Knowledge Distillation based Decentralized Domain Adaptation (KD3A), which performs domain adaptation through knowledge distillation on models from different source domains. KD3A solves the above problems with three components: (1) A multi-source knowledge distillation method named Knowledge Vote to learn high-quality domain consensus knowledge. (2) A dynamic weighting strategy named Consensus Focus to identify both malicious and irrelevant domains. (3) A decentralized optimization strategy for domain distance named BatchNorm MMD. Extensive experiments on DomainNet demonstrate that KD3A is robust to negative transfer and brings a 100x reduction in communication cost compared with other decentralized UMDA methods. Moreover, KD3A significantly outperforms state-of-the-art UMDA approaches.
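The abstract does not spell out how Knowledge Vote forms consensus knowledge, so the following is only a rough sketch of one plausible reading: source models that are confident about a target sample vote on its class, and the predictions of the winning side are averaged into a soft label. The function name, the `confidence_gate` parameter, and the fallback behavior are assumptions for illustration and should not be taken as the paper's algorithm.

```python
def consensus_vote(source_probs, confidence_gate=0.9):
    """Combine per-source class probabilities for ONE target sample.

    source_probs:    list of probability vectors, one per source model.
    confidence_gate: hypothetical cutoff; models whose top probability is
                     below it do not take part in the vote.
    Returns (consensus_distribution, number_of_supporting_models).
    """
    num_classes = len(source_probs[0])

    def mean(vectors):
        return [sum(v[c] for v in vectors) / len(vectors)
                for c in range(num_classes)]

    # Step 1: keep only the models that are confident about this sample.
    confident = [p for p in source_probs if max(p) >= confidence_gate]
    if not confident:
        # Assumed fallback: average every model and report zero supporters.
        return mean(source_probs), 0

    # Step 2: each confident model votes for its top class.
    votes = [0] * num_classes
    for p in confident:
        votes[p.index(max(p))] += 1
    winner = votes.index(max(votes))

    # Step 3: average only the models that agree with the winning class.
    supporters = [p for p in confident if p.index(max(p)) == winner]
    return mean(supporters), len(supporters)


# Four source models, two classes. The last model is not confident enough,
# and the third one disagrees with the majority.
probs = [[0.95, 0.05], [0.92, 0.08], [0.10, 0.90], [0.60, 0.40]]
soft_label, support = consensus_vote(probs)
print(soft_label, support)
```

Because only model outputs are combined, a scheme of this shape never needs the raw source data, which matches the decentralization constraint described in the abstract. The supporter count could also serve as a per-sample quality signal, though how KD3A weights samples or domains is not stated here.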

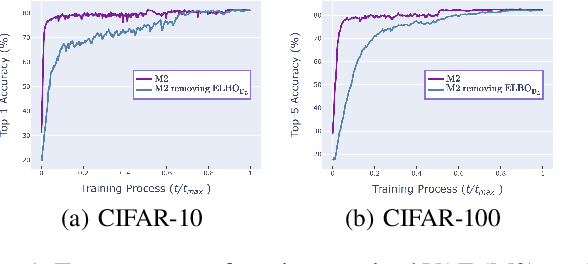

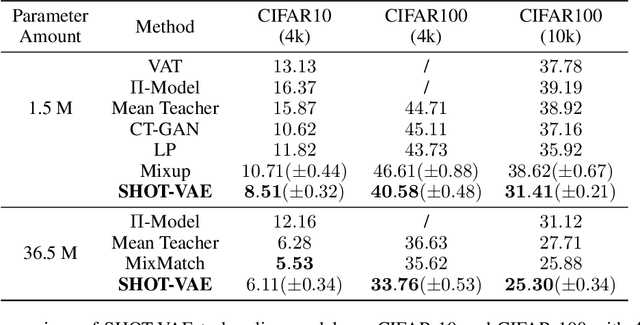

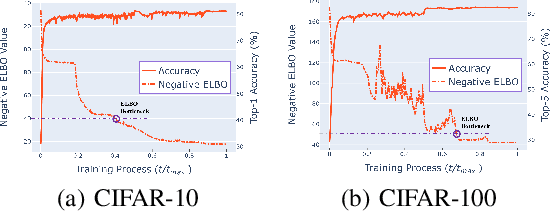

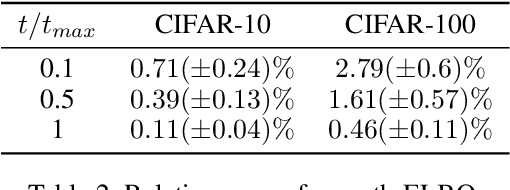

SHOT-VAE: Semi-supervised Deep Generative Models With Label-aware ELBO Approximations

Dec 08, 2020

Abstract: Semi-supervised variational autoencoders (VAEs) have obtained strong results, but have also encountered the challenge that good ELBO values do not always imply accurate inference results. In this paper, we investigate this problem and propose two causes: (1) The ELBO objective cannot utilize the label information directly. (2) A bottleneck value exists, and continuing to optimize the ELBO beyond this value does not improve inference accuracy. On the basis of the experimental results, we propose SHOT-VAE to address these problems without introducing additional prior knowledge. SHOT-VAE offers two contributions: (1) A new ELBO approximation named smooth-ELBO that integrates the label predictive loss into the ELBO. (2) An approximation based on optimal interpolation that breaks the ELBO value bottleneck by reducing the margin between the ELBO and the data likelihood. SHOT-VAE achieves good performance with a 25.30% error rate on CIFAR-100 with 10k labels and reduces the error rate to 6.11% on CIFAR-10 with 4k labels.
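As background for the "margin between the ELBO and the data likelihood" mentioned above, the standard identity for an ordinary (unlabeled) VAE is given below. It is the textbook decomposition, not the paper's smooth-ELBO or its interpolation-based approximation; the symbols $q_\phi$ (encoder), $p_\theta$ (decoder), and $z$ (latent variable) are the usual notation and are assumed here rather than taken from the abstract.

$$
\log p_\theta(x)
= \underbrace{\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
- \mathrm{KL}\big(q_\phi(z \mid x)\,\|\,p(z)\big)}_{\mathrm{ELBO}(x)}
+ \mathrm{KL}\big(q_\phi(z \mid x)\,\|\,p_\theta(z \mid x)\big)
$$

Since the last KL term is non-negative, $\mathrm{ELBO}(x) \le \log p_\theta(x)$, and the margin between the two is exactly that KL term. Note that no label $y$ appears anywhere in this objective, which is consistent with cause (1) in the abstract.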
