Hao Wan

The Master-Slave Encoder Model for Improving Patent Text Summarization: A New Approach to Combining Specifications and Claims

Nov 21, 2024

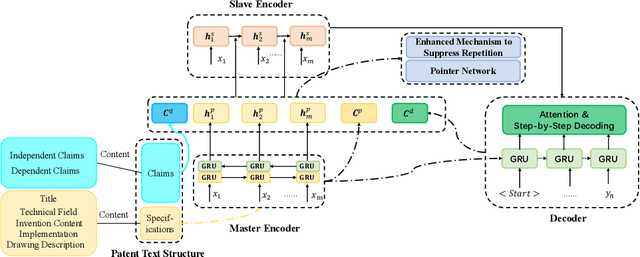

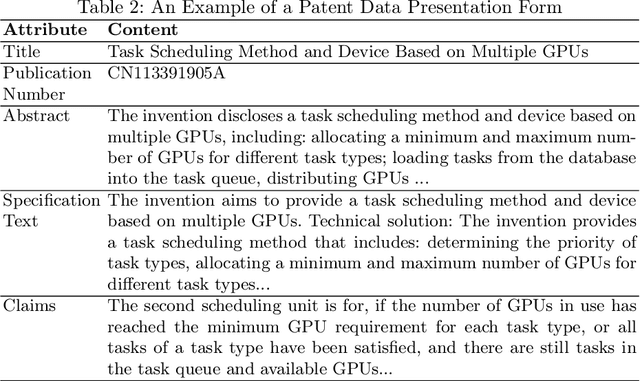

Abstract:In order to solve the problem of insufficient generation quality caused by traditional patent text abstract generation models only originating from patent specifications, the problem of new terminology OOV caused by rapid patent updates, and the problem of information redundancy caused by insufficient consideration of the high professionalism, accuracy, and uniqueness of patent texts, we proposes a patent text abstract generation model (MSEA) based on a master-slave encoder architecture; Firstly, the MSEA model designs a master-slave encoder, which combines the instructions in the patent text with the claims as input, and fully explores the characteristics and details between the two through the master-slave encoder; Then, the model enhances the consideration of new technical terms in the input sequence based on the pointer network, and further enhances the correlation with the input text by re weighing the "remembered" and "for-gotten" parts of the input sequence from the encoder; Finally, an enhanced repetition suppression mechanism for patent text was introduced to ensure accurate and non redundant abstracts generated. On a publicly available patent text dataset, compared to the state-of-the-art model, Improved Multi-Head Attention Mechanism (IMHAM), the MSEA model achieves an improvement of 0.006, 0.005, and 0.005 in Rouge-1, Rouge-2, and Rouge-L scores, respectively. MSEA leverages the characteristics of patent texts to effectively enhance the quality of patent text generation, demonstrating its advancement and effectiveness in the experiments.

Reward Shaping for User Satisfaction in a REINFORCE Recommender

Sep 30, 2022

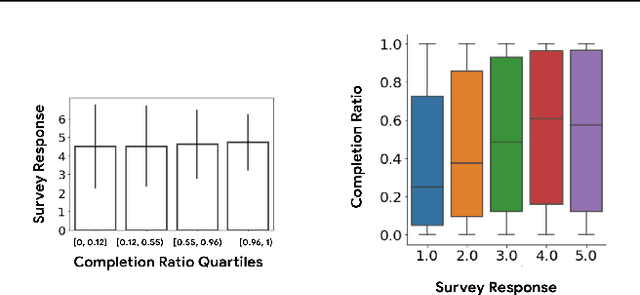

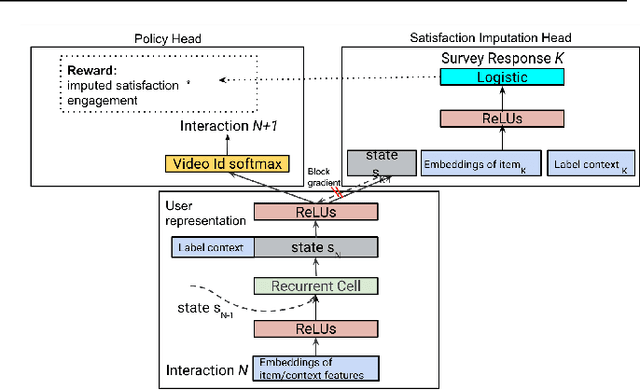

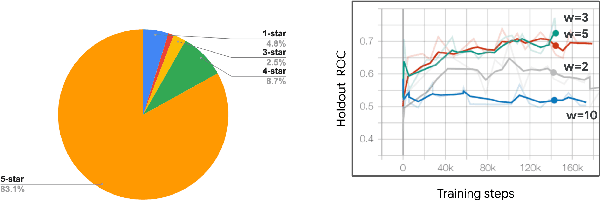

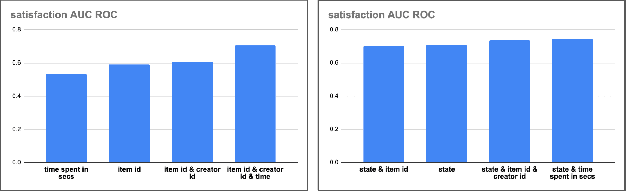

Abstract:How might we design Reinforcement Learning (RL)-based recommenders that encourage aligning user trajectories with the underlying user satisfaction? Three research questions are key: (1) measuring user satisfaction, (2) combatting sparsity of satisfaction signals, and (3) adapting the training of the recommender agent to maximize satisfaction. For measurement, it has been found that surveys explicitly asking users to rate their experience with consumed items can provide valuable orthogonal information to the engagement/interaction data, acting as a proxy to the underlying user satisfaction. For sparsity, i.e, only being able to observe how satisfied users are with a tiny fraction of user-item interactions, imputation models can be useful in predicting satisfaction level for all items users have consumed. For learning satisfying recommender policies, we postulate that reward shaping in RL recommender agents is powerful for driving satisfying user experiences. Putting everything together, we propose to jointly learn a policy network and a satisfaction imputation network: The role of the imputation network is to learn which actions are satisfying to the user; while the policy network, built on top of REINFORCE, decides which items to recommend, with the reward utilizing the imputed satisfaction. We use both offline analysis and live experiments in an industrial large-scale recommendation platform to demonstrate the promise of our approach for satisfying user experiences.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge