Hanxiao Tan

Flow AM: Generating Point Cloud Global Explanations by Latent Alignment

Apr 29, 2024

Abstract:Although point cloud models have gained significant improvements in prediction accuracy over recent years, their trustworthiness is still not sufficiently investigated. In terms of global explainability, Activation Maximization (AM) techniques in the image domain are not directly transplantable due to the special structure of the point cloud models. Existing studies exploit generative models to yield global explanations that can be perceived by humans. However, the opacity of the generative models themselves and the introduction of additional priors call into question the plausibility and fidelity of the explanations. In this work, we demonstrate that when the classifier predicts different types of instances, the intermediate layers are activated differently, a property known as activation flows. Based on this property, we propose an activation flow-based AM method that generates perceptible global explanations without incorporating any generative model. Furthermore, we reveal that AM based on generative models fails the sanity checks and thus lacks fidelity. Extensive experiments show that our approach dramatically enhances the perceptibility of explanations compared to other AM methods that are not based on generative models. Our code is available at: https://github.com/Explain3D/FlowAM

DAM: Diffusion Activation Maximization for 3D Global Explanations

Jan 26, 2024

Abstract:In recent years, the performance of point cloud models has been rapidly improved. However, due to the limited amount of relevant explainability studies, the unreliability and opacity of these black-box models may lead to potential risks in applications where human lives are at stake, e.g. autonomous driving or healthcare. This work proposes a DDPM-based point cloud global explainability method (DAM) that leverages Point Diffusion Transformer (PDT), a novel point-wise symmetric model, with dual-classifier guidance to generate high-quality global explanations. In addition, an adapted path gradient integration method for DAM is proposed, which not only provides a global overview of the saliency maps for point cloud categories, but also sheds light on how the attributions of the explanations vary during the generation process. Extensive experiments indicate that our method outperforms existing ones in terms of perceptibility, representativeness, and diversity, with a significant reduction in generation time. Our code is available at: https://github.com/Explain3D/DAM

The Generalizability of Explanations

Feb 23, 2023

Abstract:Due to the absence of ground truth, objective evaluation of explainability methods is an essential research direction. So far, the vast majority of evaluations can be summarized into three categories, namely human evaluation, sensitivity testing, and sanity checks. This work proposes a novel evaluation methodology from the perspective of generalizability. We employ an Autoencoder to learn the distributions of the generated explanations and observe their learnability as well as the plausibility of the learned distributional features. We first briefly demonstrate the evaluation idea of the proposed approach on LIME, and then quantitatively evaluate multiple popular explainability methods. We also find that smoothing the explanations with SmoothGrad can significantly enhance the generalizability of explanations.

Maximum Entropy Baseline for Integrated Gradients

Apr 12, 2022

Abstract:Integrated Gradients (IG), one of the most popular explainability methods available, remains ambiguous in the selection of a baseline, which may seriously impair the credibility of the explanations. This study proposes a new uniform baseline, i.e., the Maximum Entropy Baseline, which is consistent with the "uninformative" property of baselines defined in IG. In addition, we propose an improved ablation-based evaluation approach incorporating the new baseline, where the information conservativeness is maintained. We explain the linear transformation invariance of IG baselines from an information perspective. Finally, we assess the reliability of the explanations generated by different explainability methods and different IG baselines through extensive evaluation experiments.
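To make the role of the baseline concrete, the following is a minimal sketch of generic Integrated Gradients, approximated with a midpoint Riemann sum. It does not implement the Maximum Entropy Baseline from the paper. The toy model `f`, its gradient `grad_f`, the helper name `integrated_gradients`, and the all-zeros baseline are assumptions chosen purely for illustration.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=64):
    """Midpoint Riemann-sum approximation of Integrated Gradients.

    grad_fn is a hypothetical callable returning the model gradient at a point.
    """
    # Midpoints of `steps` equal sub-intervals of [0, 1].
    alphas = (np.arange(steps) + 0.5) / steps
    # Average the gradient along the straight path from baseline to x.
    path_grads = [grad_fn(baseline + a * (x - baseline)) for a in alphas]
    avg_grad = np.mean(path_grads, axis=0)
    # Scale by the input difference to obtain per-feature attributions.
    return (x - baseline) * avg_grad

# Hypothetical toy model: f(x) = sum(x_i ** 2), with its analytic gradient.
def f(x):
    return float(np.sum(x ** 2))

def grad_f(x):
    return 2.0 * x

x = np.array([1.0, -2.0, 3.0])
baseline = np.zeros_like(x)  # placeholder baseline, not the paper's choice
attributions = integrated_gradients(grad_f, x, baseline)
```

Changing `baseline` changes the attributions, while their sum still equals `f(x) - f(baseline)` up to the approximation error of the sum. That dependence on the baseline is the ambiguity the abstract refers to.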

Visualizing Global Explanations of Point Cloud DNNs

Mar 28, 2022

Abstract:In the field of autonomous driving and robotics, point clouds are showing their excellent real-time performance as raw data from most of the mainstream 3D sensors. Therefore, point cloud neural networks have become a popular research direction in recent years. So far, however, there has been little discussion about the explainability of deep neural networks for point clouds. In this paper, we propose a point cloud-applicable explainability approach based on a local surrogate model-based method to show which components contribute to the classification. Moreover, we propose quantitative fidelity validations for generated explanations that enhance the persuasive power of explainability and compare the plausibility of different existing point cloud-applicable explainability methods. Our new explainability approach provides a fairly accurate, more semantically coherent and widely applicable explanation for point cloud classification tasks. Our code is available at https://github.com/Explain3D/LIME-3D

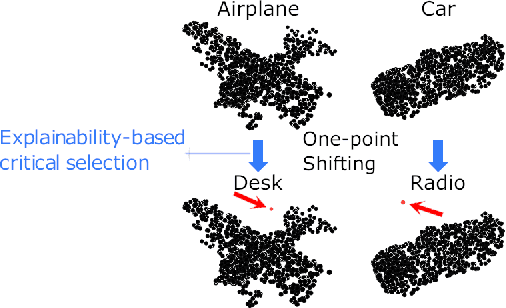

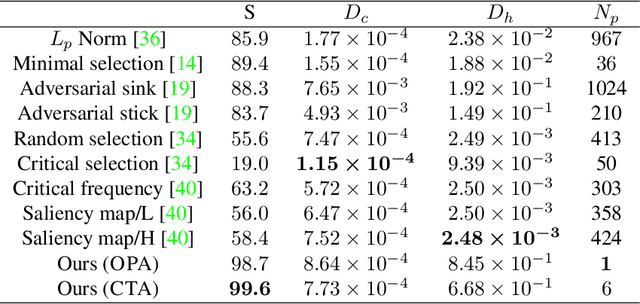

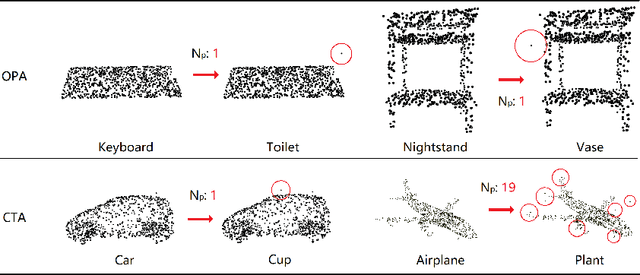

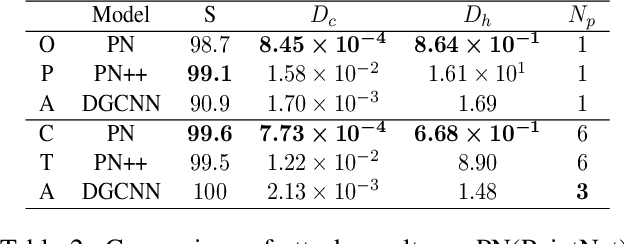

Explainability-Aware One Point Attack for Point Cloud Neural Networks

Oct 08, 2021

Abstract:With the introduction of neural networks for point clouds, deep learning has started to shine in the field of 3D object recognition, while researchers have shown an increased interest in investigating the reliability of point cloud networks by fooling them with perturbed instances. However, most studies focus on imperceptibility or surface consistency, with humans perceiving no perturbations on the adversarial examples. This work proposes two new attack methods, opa and cta, which go in the opposite direction: we restrict the perturbation dimensions to a human-cognizable range with the help of explainability methods, which enables the working principle or decision boundary of the models to be comprehensible through the observable perturbation magnitude. Our results show that popular point cloud networks can be deceived with an almost 100% success rate by shifting only one point of the input instance. In addition, we attempt to provide a more persuasive viewpoint for comparing the robustness of point cloud models against adversarial attacks. We also show the interesting impact of different point attribution distributions on the adversarial robustness of point cloud networks. Finally, we discuss how our approaches facilitate the explainability study for point cloud networks. To the best of our knowledge, this is the first point-cloud-based adversarial approach concerning explainability. Our code is available at https://github.com/Explain3D/Exp-One-Point-Atk-PC.

Surrogate Model-Based Explainability Methods for Point Cloud NNs

Aug 18, 2021

Abstract:In the field of autonomous driving and robotics, point clouds are showing their excellent real-time performance as raw data from most of the mainstream 3D sensors. Therefore, point cloud neural networks have become a popular research direction in recent years. So far, however, there has been little discussion about the explainability of deep neural networks for point clouds. In this paper, we propose a point cloud-applicable explainability approach based on a local surrogate model-based method to show which components contribute to the classification. Moreover, we propose quantitative fidelity validations for generated explanations that enhance the persuasive power of explainability and compare the plausibility of different existing point cloud-applicable explainability methods. Our new explainability approach provides a fairly accurate, more semantically coherent and widely applicable explanation for point cloud classification tasks. Our code is available at https://github.com/Explain3D/LIME-3D

Explainable Machine Learning with Prior Knowledge: An Overview

May 21, 2021

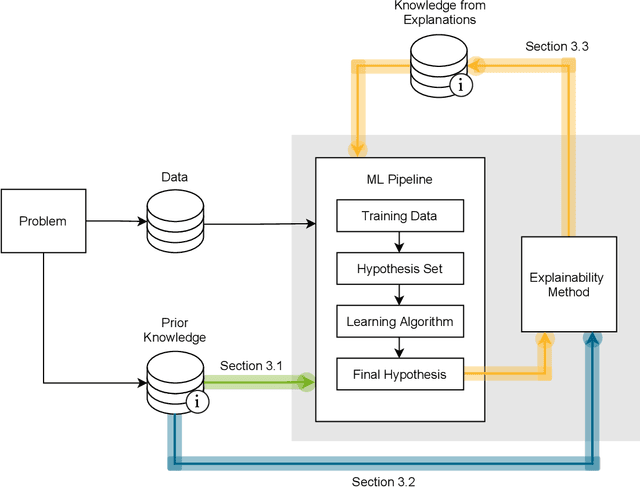

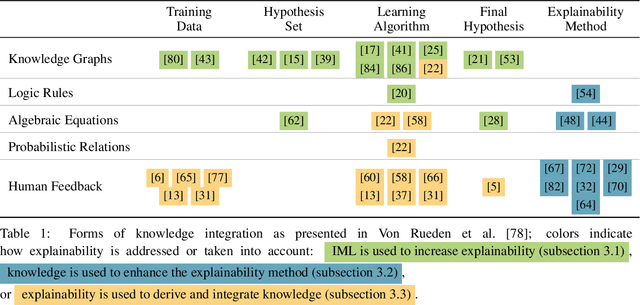

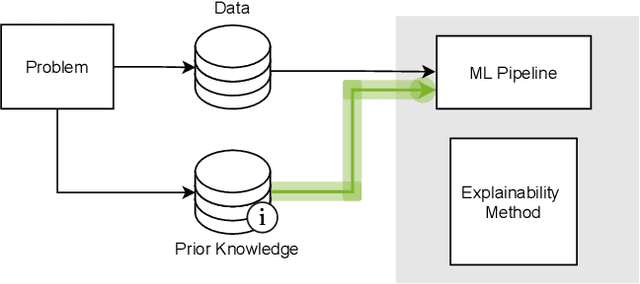

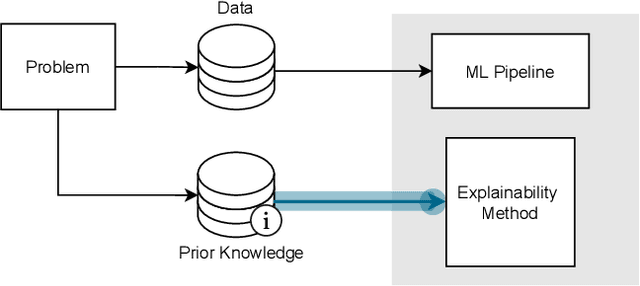

Abstract:This survey presents an overview of integrating prior knowledge into machine learning systems in order to improve explainability. The complexity of machine learning models has elicited research to make them more explainable. However, most explainability methods cannot provide insight beyond the given data, requiring additional information about the context. We propose to harness prior knowledge to improve upon the explanation capabilities of machine learning models. In this paper, we present a categorization of current research into three main categories which either integrate knowledge into the machine learning pipeline, into the explainability method or derive knowledge from explanations. To classify the papers, we build upon the existing taxonomy of informed machine learning and extend it from the perspective of explainability. We conclude with open challenges and research directions.
