Hans-Joachim Bungartz

Multi-fidelity Gaussian process surrogate modeling for regression problems in physics

Apr 18, 2024

Abstract: One of the main challenges in surrogate modeling is the limited availability of data due to resource constraints associated with computationally expensive simulations. Multi-fidelity methods provide a solution by chaining models in a hierarchy of increasing fidelity, where higher fidelity means lower error but higher cost. In this paper, we compare different multi-fidelity methods employed in constructing Gaussian process surrogates for regression. Non-linear autoregressive methods in the existing literature are primarily confined to two-fidelity models, and we extend these methods to handle more than two levels of fidelity. Additionally, we propose enhancements for an existing method incorporating delay terms by introducing a structured kernel. We demonstrate the performance of these methods across various academic and real-world scenarios. Our findings reveal that multi-fidelity methods generally have a smaller prediction error than the single-fidelity method at the same computational cost, although their effectiveness varies across different scenarios.

Multi-fidelity Constrained Optimization for Stochastic Black Box Simulators

Nov 25, 2023

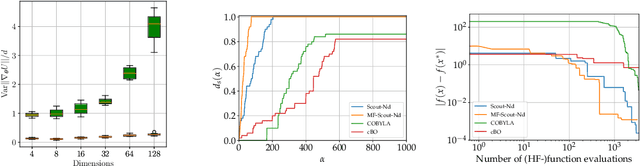

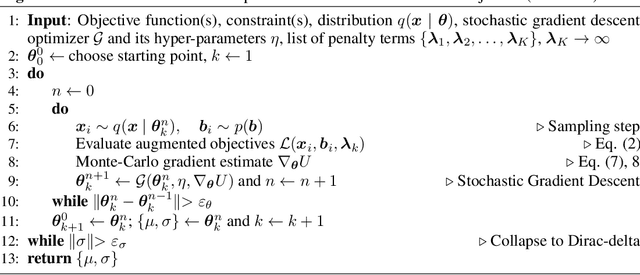

Abstract: Constrained optimization of the parameters of a simulator plays a crucial role in a design process. These problems become challenging when the simulator is stochastic, computationally expensive, and the parameter space is high-dimensional. One can efficiently perform optimization only by utilizing the gradient with respect to the parameters, but these gradients are unavailable in many legacy, black-box codes. We introduce the algorithm Scout-Nd (Stochastic Constrained Optimization for N dimensions) to address these issues by efficiently estimating the gradient, reducing the noise of the gradient estimator, and applying multi-fidelity schemes to further reduce computational effort. We validate our approach on standard benchmarks, demonstrating its effectiveness in optimizing parameters and showing better performance than existing methods.
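The abstract does not spell out how Scout-Nd estimates gradients, so the following is only a generic illustration of the underlying idea: estimating the gradient of a noisy black-box objective from function evaluations alone. It uses Gaussian smoothing with a baseline, which is a swapped-in textbook technique and not necessarily the estimator used in the paper. The names `smoothed_gradient` and `noisy_quadratic` are hypothetical.

```python
import numpy as np

def smoothed_gradient(f, x, sigma, n_samples, rng):
    """Estimate the gradient of E[f(x + sigma * e)], e ~ N(0, I),
    using only evaluations of the black-box function f."""
    e = rng.standard_normal((n_samples, x.size))   # random search directions
    f_pert = f(x[None, :] + sigma * e, rng)        # noisy values at perturbed points
    f_base = f(x[None, :], rng)[0]                 # baseline value (reduces variance)
    # Average of (f(x + sigma e) - f(x)) * e / sigma over all samples.
    return ((f_pert - f_base)[:, None] * e).mean(axis=0) / sigma

def noisy_quadratic(x, rng):
    """Hypothetical stochastic simulator: sum of squares plus small noise."""
    return np.sum(x**2, axis=1) + 0.01 * rng.standard_normal(x.shape[0])

rng = np.random.default_rng(0)
x0 = np.array([1.0, -2.0])
grad_est = smoothed_gradient(noisy_quadratic, x0, sigma=0.1, n_samples=20000, rng=rng)
print(grad_est)  # should be near the exact gradient 2 * x0
```

Subtracting the baseline does not bias the estimate, because the directions `e` have zero mean, but it lowers the variance considerably. Constraints and multi-fidelity evaluation, both central to the paper, are left out of this sketch.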

Context-aware learning of hierarchies of low-fidelity models for multi-fidelity uncertainty quantification

Nov 20, 2022

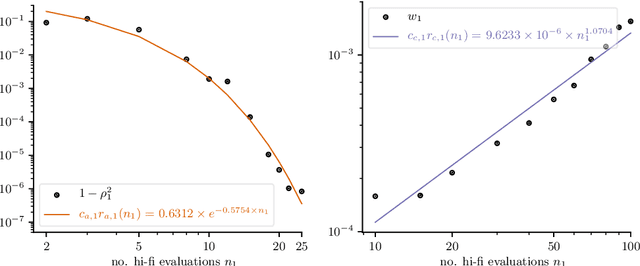

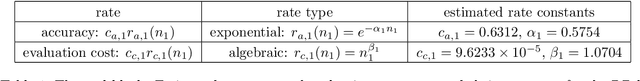

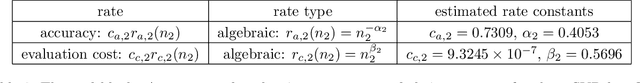

Abstract: Multi-fidelity Monte Carlo methods leverage low-fidelity and surrogate models for variance reduction to make uncertainty quantification tractable even when numerically simulating the physical systems of interest with high-fidelity models is computationally expensive. This work proposes a context-aware multi-fidelity Monte Carlo method that optimally balances the costs of training low-fidelity models with the costs of Monte Carlo sampling. It generalizes the previously developed context-aware bi-fidelity Monte Carlo method to hierarchies of multiple models and to more general types of low-fidelity models. When training low-fidelity models, the proposed approach takes into account the context in which the learned low-fidelity models will be used, namely for variance reduction in Monte Carlo estimation, which allows it to find optimal trade-offs between training and sampling to minimize upper bounds of the mean-squared errors of the estimators for given computational budgets. This is in stark contrast to traditional surrogate modeling and model reduction techniques that construct low-fidelity models with the primary goal of approximating the high-fidelity model outputs well and typically ignore the context in which the learned models will be used in upstream tasks. The proposed context-aware multi-fidelity Monte Carlo method applies to hierarchies of a wide range of types of low-fidelity models such as sparse-grid and deep-network models. Numerical experiments with the gyrokinetic simulation code GENE show speedups of up to two orders of magnitude compared to standard estimators when quantifying uncertainties in small-scale fluctuations in confined plasma in fusion reactors. This corresponds to a runtime reduction from 72 days to about four hours on one node of the Lonestar6 supercomputer at the Texas Advanced Computing Center.
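The variance-reduction idea can be illustrated with a minimal two-model control-variate estimator: a few expensive high-fidelity evaluations are corrected by many cheap low-fidelity ones. This is a sketch under simplifying assumptions, not the paper's method: it handles only two models, estimates the control-variate coefficient from the shared samples, and ignores the context-aware trade-off between training and sampling costs. The function and parameter names are hypothetical.

```python
import numpy as np

def mfmc_estimate(f_hi, f_lo, sample, n_hi, n_lo, rng):
    """Two-model control-variate estimate of E[f_hi(Z)], with n_hi <= n_lo."""
    z = sample(rng, n_lo)          # inputs shared by both models
    y_lo = f_lo(z)                 # cheap model on all n_lo inputs
    y_hi = f_hi(z[:n_hi])          # expensive model on the first n_hi inputs only
    y_lo_sub = y_lo[:n_hi]
    # Coefficient alpha = Cov(f_hi, f_lo) / Var(f_lo), estimated from shared samples.
    cov = np.cov(y_hi, y_lo_sub)
    alpha = cov[0, 1] / cov[1, 1]
    # High-fidelity mean, corrected by the difference of the two low-fidelity means.
    return y_hi.mean() + alpha * (y_lo.mean() - y_lo_sub.mean())

# Toy stand-ins for a high-fidelity model and a correlated cheap surrogate.
f_hi = lambda z: z**2 + 0.1 * z
f_lo = lambda z: z**2
sample = lambda rng, n: rng.standard_normal(n)

rng = np.random.default_rng(0)
print(mfmc_estimate(f_hi, f_lo, sample, n_hi=200, n_lo=100_000, rng=rng))
```

The better the low-fidelity model correlates with the high-fidelity one, the more of the sampling budget can be shifted to the cheap model without losing accuracy.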

Neural Nets with a Newton Conjugate Gradient Method on Multiple GPUs

Aug 03, 2022

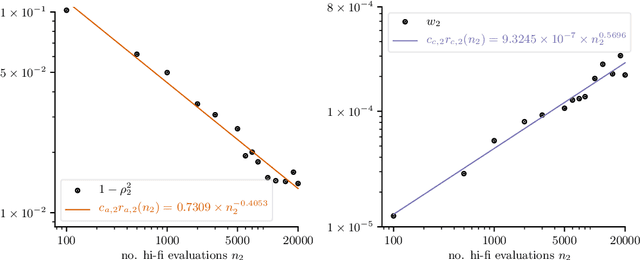

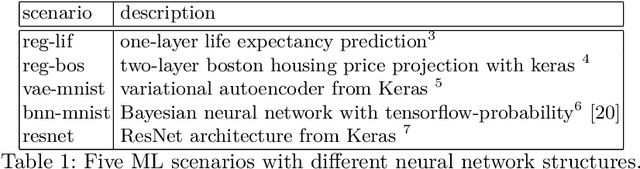

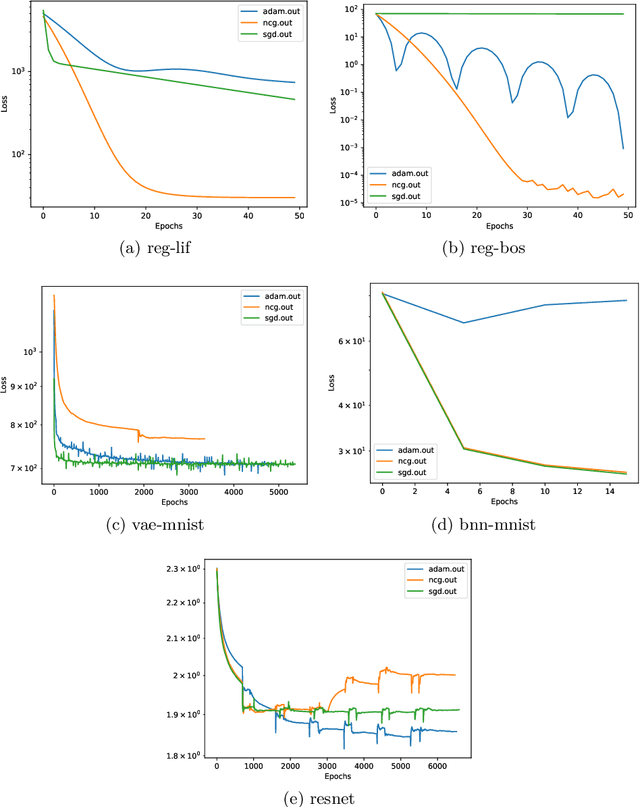

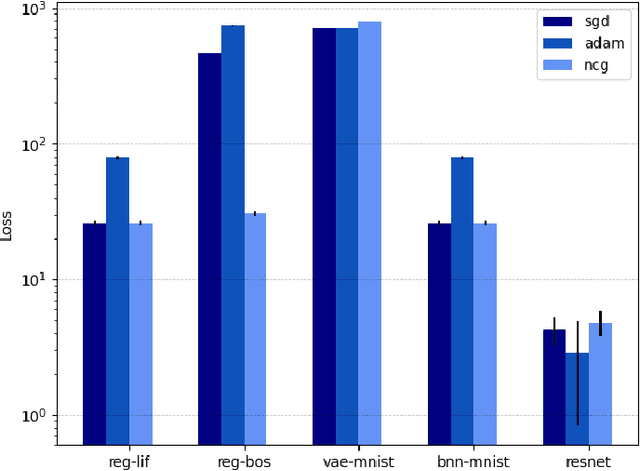

Abstract: Training deep neural networks consumes an increasing share of computational resources in many compute centers. Often, a brute-force approach to obtain hyperparameter values is employed. Our goal is (1) to improve on this by enabling second-order optimization methods with fewer hyperparameters for large-scale neural networks and (2) to survey the performance of optimizers on specific tasks in order to suggest to users the best one for their problem. We introduce a novel second-order optimization method that requires only the effect of the Hessian on a vector and avoids the huge cost of explicitly setting up the Hessian for large-scale networks. We compare the proposed second-order method with two state-of-the-art optimizers on five representative neural network problems, including regression and very deep networks from computer vision or variational autoencoders. For the largest setup, we efficiently parallelized the optimizers with Horovod and applied them on an 8-GPU NVIDIA P100 (DGX-1) machine.
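To make the phrase "the effect of the Hessian on a vector" concrete, here is a small matrix-free Newton-CG step in NumPy. It is a sketch, not the paper's implementation: the Hessian-vector product is approximated by central differences of the gradient (a stand-in; deep learning frameworks typically obtain it by automatic differentiation), and there is no damping, line search, or GPU parallelism. All function names are hypothetical.

```python
import numpy as np

def hvp(grad, w, v, eps=1e-4):
    """Approximate the Hessian-vector product H(w) @ v from two gradient calls."""
    return (grad(w + eps * v) - grad(w - eps * v)) / (2.0 * eps)

def cg_solve(matvec, b, tol=1e-8, max_iter=100):
    """Conjugate gradients for matvec(x) = b; needs only matrix-vector products."""
    x = np.zeros_like(b)
    r = b.copy()                   # residual b - A x for the start x = 0
    p = r.copy()                   # first search direction
    rs = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs) < tol:
            break
        Ap = matvec(p)
        step = rs / (p @ Ap)
        x += step * p
        r -= step * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def newton_cg_step(grad, w):
    """One Newton step: solve H p = -g with CG, never forming H explicitly."""
    g = grad(w)
    return w + cg_solve(lambda v: hvp(grad, w, v), -g)

# Toy problem: f(w) = 0.5 w^T A w - b^T w, whose gradient is A w - b.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, 1.0])
grad = lambda w: A @ w - b
w_new = newton_cg_step(grad, np.zeros(2))
print(w_new)
```

For a quadratic objective a single Newton step lands on the minimizer, which makes this toy case easy to check against a direct solve of `A w = b`.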

* Accepted to PPAM conference

Model Order Reduction based on Runge-Kutta Neural Network

Mar 25, 2021

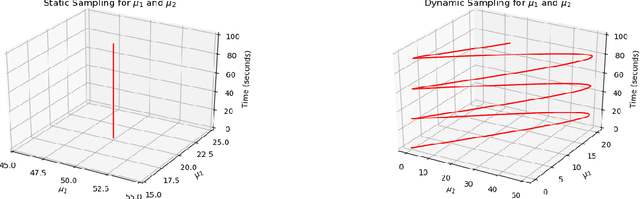

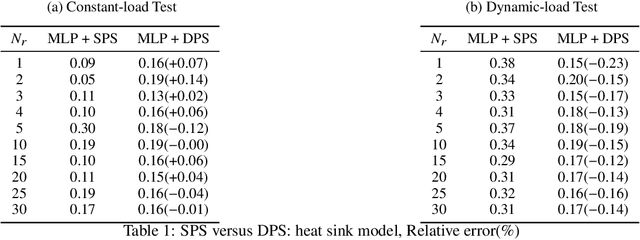

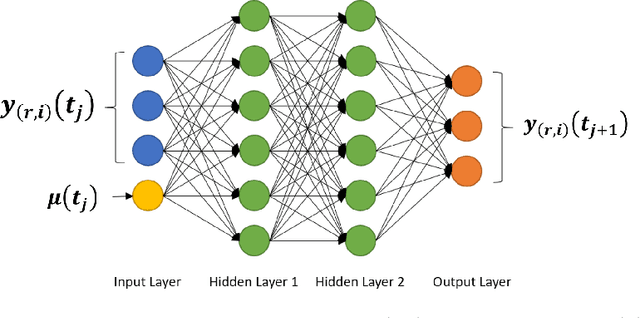

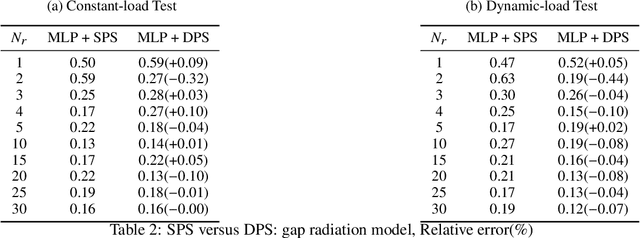

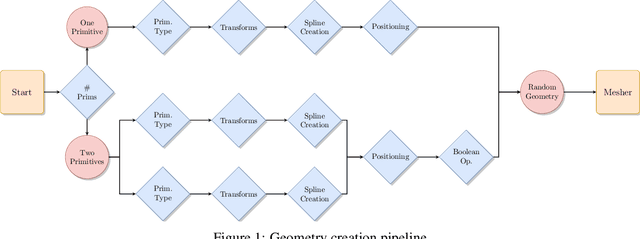

Abstract: Model Order Reduction (MOR) methods enable the generation of real-time-capable digital twins, which can enable various novel value streams in industry. While traditional projection-based methods are robust and accurate for linear problems, incorporating Machine Learning to deal with nonlinearity is becoming a new option for reducing complex problems. Such methods usually consist of two steps. The first step is dimension reduction by a projection-based method, and the second is model reconstruction by a neural network. In this work, we modify both steps and investigate the impact of these modifications by testing with three simulation models. In all cases, Proper Orthogonal Decomposition (POD) is used for dimension reduction. For this step, the effects of generating the input snapshot database with constant input parameters are compared with those of using time-dependent input parameters. For the model reconstruction step, two types of neural network architectures are compared: Multilayer Perceptron (MLP) and Runge-Kutta Neural Network (RKNN). The MLP learns the system state directly, while the RKNN learns the derivative of the system state and predicts the new state as a Runge-Kutta integrator.
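The two steps described in the abstract can be sketched in a few lines of NumPy. The POD basis is computed from a snapshot matrix via the singular value decomposition, and a Runge-Kutta step advances the state given a derivative function. Two assumptions are made here: the classical fourth-order scheme is used (the abstract does not state the order), and the derivative function `f` is a plain Python callable standing in for the trained network. The names `pod_basis` and `rk4_step` are hypothetical.

```python
import numpy as np

def pod_basis(snapshots, r):
    """Return the first r POD modes of a (n_dof, n_snapshots) snapshot matrix."""
    U, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :r]                # left singular vectors = POD modes

def rk4_step(f, x, dt):
    """Advance state x by dt with the classical RK4 scheme for dx/dt = f(x).
    In an RKNN, f would be a neural network that predicts the derivative."""
    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

# Step 1: reduce rank-2 toy snapshots to two POD coordinates and lift back.
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 2)) @ rng.standard_normal((2, 30))
V = pod_basis(X, r=2)
X_rec = V @ (V.T @ X)              # project onto the basis, then reconstruct

# Step 2: integrate a stand-in reduced model dx/dt = -x for one time step.
x_next = rk4_step(lambda x: -x, np.array([1.0]), dt=0.1)
print(np.abs(X - X_rec).max(), x_next)
```

Learning the derivative rather than the state ties the prediction to the time step `dt` through the integrator, which is the structural difference from the MLP variant described above.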

Machine Learning-Based Optimal Mesh Generation in Computational Fluid Dynamics

Feb 25, 2021

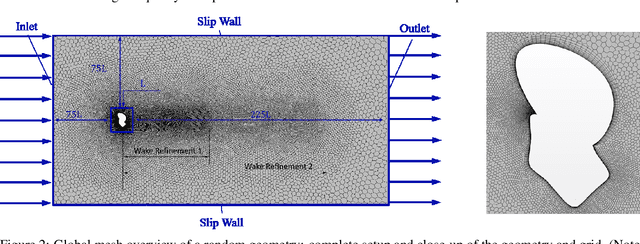

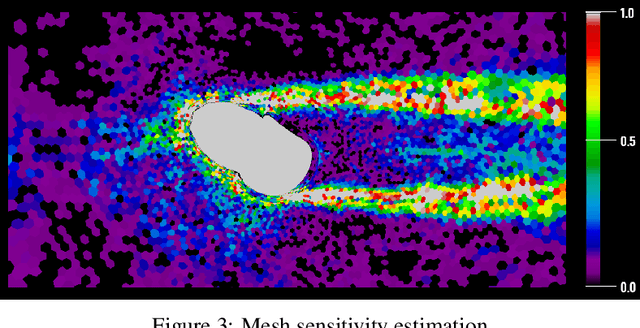

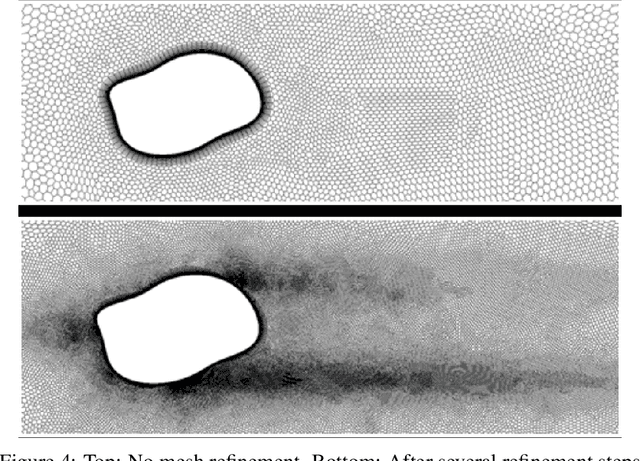

Abstract: Computational Fluid Dynamics (CFD) is a major sub-field of engineering. Corresponding flow simulations are typically characterized by heavy computational resource requirements. Often, very fine and complex meshes are required to resolve physical effects in an appropriate manner. Since all CFD algorithms scale at least linearly with the size of the underlying mesh discretization, finding an optimal mesh is key for computational efficiency. One methodology used to find optimal meshes is goal-oriented adaptive mesh refinement. However, this is typically computationally demanding and only available in a limited number of tools. Within this contribution, we adopt a machine learning approach to identify optimal mesh densities. We generate optimized meshes using classical methodologies and propose to train a convolutional network predicting optimal mesh densities given arbitrary geometries. The proposed concept is validated on 2D wind tunnel simulations with more than 60,000 simulations. Using a training set of 20,000 simulations, we achieve accuracies of more than 98.7%. Corresponding predictions of optimal meshes can be used as input for any mesh generation and CFD tool. Thus, without complex computations, any CFD engineer can start their predictions from a high-quality mesh.
