Hanlu Yang

Constrained Independent Vector Analysis with Reference for Multi-Subject fMRI Analysis

Nov 08, 2023

Abstract: Independent component analysis (ICA) is now a widely used solution for the analysis of multi-subject functional magnetic resonance imaging (fMRI) data. Independent vector analysis (IVA) generalizes ICA to multiple datasets, i.e., to multi-subject data, and in addition to the higher-order statistical information used in ICA, it leverages the statistical dependence across the datasets as an additional type of statistical diversity. As such, it preserves variability in the estimation of single-subject maps, but its performance might suffer when the number of datasets increases. Constrained IVA is an effective way to bypass computational issues and improve the quality of separation by incorporating available prior information. Existing constrained IVA approaches often rely on user-defined threshold values to define the constraints. However, an improperly selected threshold can have a negative impact on the final results. This paper proposes two novel methods for constrained IVA: one using an adaptive-reverse scheme to select variable thresholds for the constraints, and a second one based on a threshold-free formulation that leverages the unique structure of IVA. We demonstrate that our methods provide an attractive solution to multi-subject fMRI analysis, both by simulations and through analysis of resting-state fMRI data collected from 98 subjects, the highest number of subjects ever used by IVA algorithms. Our results show that both proposed approaches obtain significantly better separation quality and model match while providing computationally efficient and highly reproducible solutions.

New Interpretable Patterns and Discriminative Features from Brain Functional Network Connectivity Using Dictionary Learning

Nov 10, 2022

Abstract: Independent component analysis (ICA) of multi-subject functional magnetic resonance imaging (fMRI) data has proven useful in providing a fully multivariate summary that can be used for multiple purposes. ICA can identify patterns that discriminate between healthy controls (HC) and patients with various mental disorders such as schizophrenia (Sz). Temporal functional network connectivity (tFNC) obtained from ICA can effectively explain the interactions between brain networks. On the other hand, dictionary learning (DL) enables the discovery of hidden information in data using learnable basis signals through the use of sparsity. In this paper, we present a new method that leverages ICA and DL for the identification of directly interpretable patterns to discriminate between the HC and Sz groups. We use multi-subject resting-state fMRI data from 358 subjects and form subject-specific tFNC feature vectors from ICA results. Then, we learn sparse representations of the tFNCs and introduce a new set of sparse features as well as new interpretable patterns from the learned atoms. Our experimental results show that the new representation not only leads to effective classification between HC and Sz groups using sparse features, but can also identify new interpretable patterns from the learned atoms that can help understand the complexities of mental diseases such as schizophrenia.
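
As a rough illustration of the "subject-specific tFNC feature vector" step mentioned in the abstract, the sketch below assumes that tFNC is computed as the Pearson correlation between ICA component time courses and that the feature vector is the upper triangle of that matrix. The abstract does not state these details, so both are assumptions; the function name `tfnc_feature_vector` and the random input data are hypothetical.

```python
import numpy as np


def tfnc_feature_vector(time_courses):
    """Return the vectorized upper triangle of the component correlation matrix.

    time_courses: array of shape (T, N), with T time points and N ICA
    components for one subject (an assumed layout, not taken from the paper).
    """
    tc = np.asarray(time_courses, dtype=float)
    # Pearson correlation between every pair of component time courses.
    corr = np.corrcoef(tc, rowvar=False)  # shape (N, N)
    # Keep each unordered pair once and drop the trivial diagonal.
    rows, cols = np.triu_indices(corr.shape[0], k=1)
    return corr[rows, cols]


# Hypothetical subject: 150 time points, 5 components of random data.
rng = np.random.default_rng(0)
subject_tc = rng.standard_normal((150, 5))
features = tfnc_feature_vector(subject_tc)
print(features.shape)  # (10,) because 5 components give 5 * 4 / 2 pairs
```

With N components this gives N(N-1)/2 features per subject; stacking these vectors across subjects would give the kind of matrix on which a sparse representation could then be learned.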

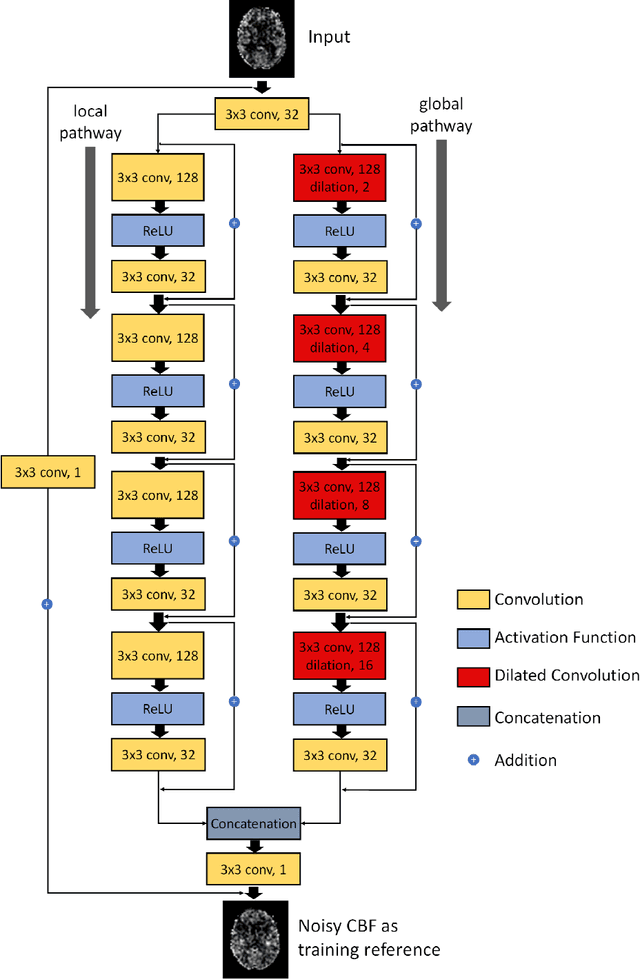

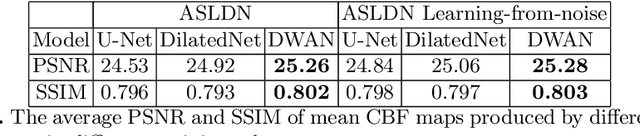

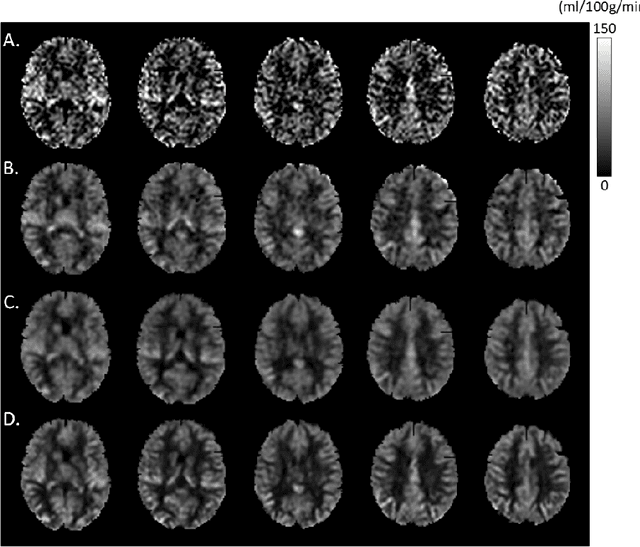

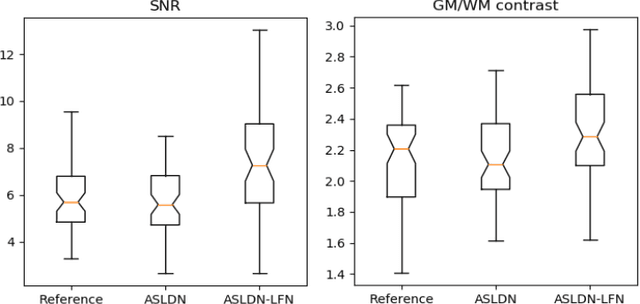

A Learning-from-noise Dilated Wide Activation Network for denoising Arterial Spin Labeling Perfusion Images

May 15, 2020

Abstract: Arterial spin labeling (ASL) perfusion MRI provides a non-invasive way to quantify cerebral blood flow (CBF), but it still suffers from a low signal-to-noise ratio (SNR). Using deep machine learning (DL), several groups have shown encouraging denoising results. Interestingly, the improvement was obtained when the deep neural network was trained using a noise-contaminated surrogate reference because of the lack of gold-standard high-quality ASL CBF images. More strikingly, the output of these DL ASL networks (ASLDN) showed even higher SNR than the surrogate reference. This phenomenon indicates a learning-from-noise capability of deep networks for ASL CBF image denoising, which can be further enhanced by network optimization. In this study, we proposed a new ASLDN to test whether similar or even better ASL CBF image quality can be achieved with a highly noisy training reference. Different experiments were performed to validate the learning-from-noise hypothesis. The results showed that the learning-from-noise strategy produced better output quality than ASLDN trained with a relatively high-SNR reference.
