Hanjoo Kim

NSML: Meet the MLaaS platform with a real-world case study

Oct 08, 2018

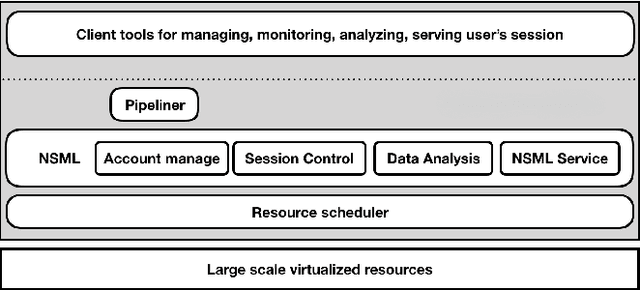

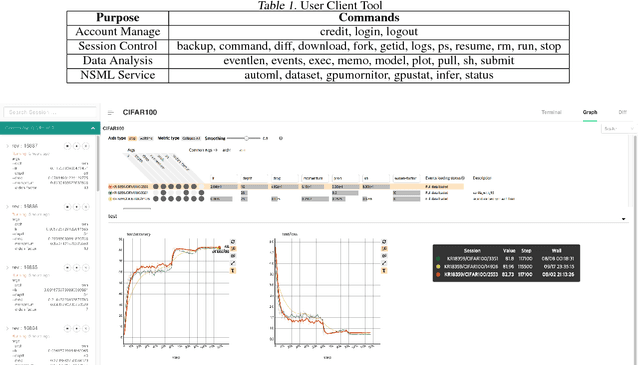

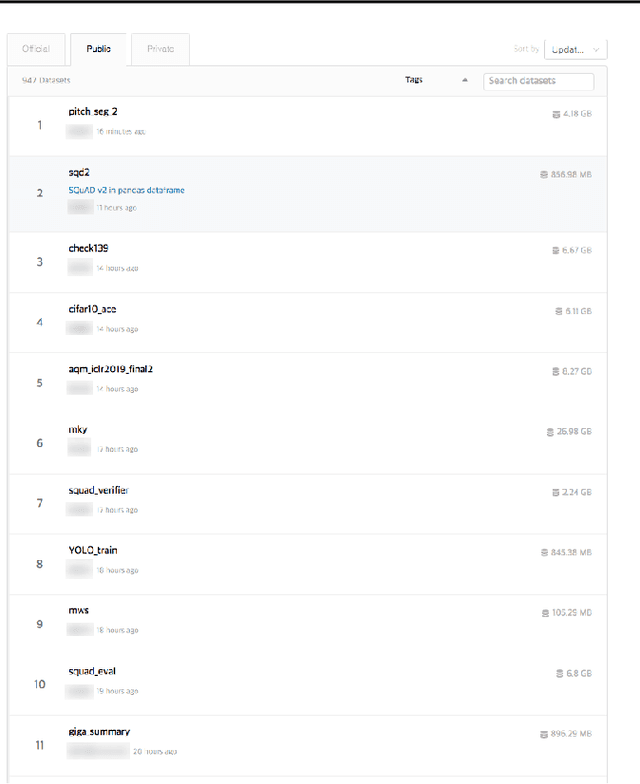

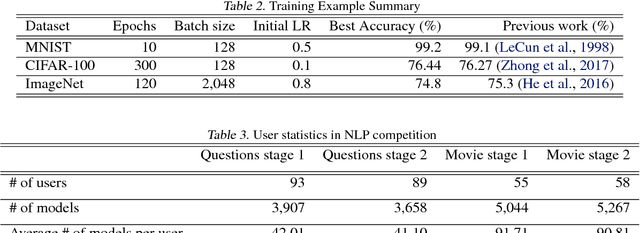

Abstract:The boom of deep learning induced many industries and academies to introduce machine learning based approaches into their concern, competitively. However, existing machine learning frameworks are limited to sufficiently fulfill the collaboration and management for both data and models. We proposed NSML, a machine learning as a service (MLaaS) platform, to meet these demands. NSML helps machine learning work be easily launched on a NSML cluster and provides a collaborative environment which can afford development at enterprise scale. Finally, NSML users can deploy their own commercial services with NSML cluster. In addition, NSML furnishes convenient visualization tools which assist the users in analyzing their work. To verify the usefulness and accessibility of NSML, we performed some experiments with common examples. Furthermore, we examined the collaborative advantages of NSML through three competitions with real-world use cases.

DeepSpark: A Spark-Based Distributed Deep Learning Framework for Commodity Clusters

Oct 01, 2016

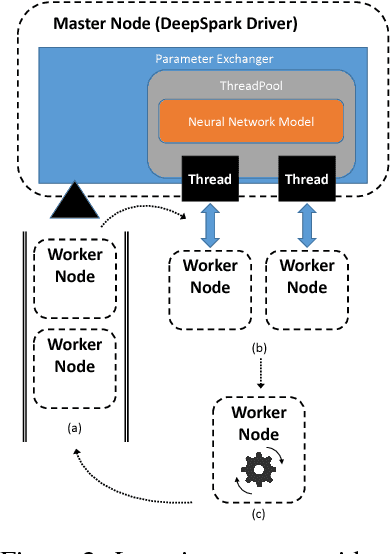

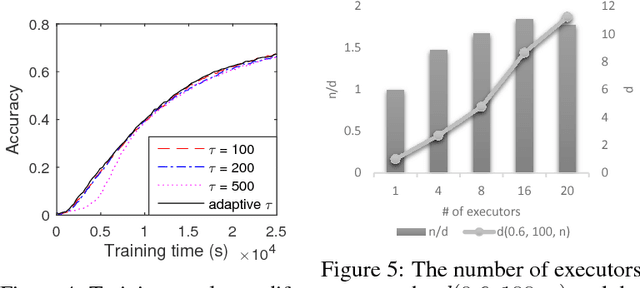

Abstract:The increasing complexity of deep neural networks (DNNs) has made it challenging to exploit existing large-scale data processing pipelines for handling massive data and parameters involved in DNN training. Distributed computing platforms and GPGPU-based acceleration provide a mainstream solution to this computational challenge. In this paper, we propose DeepSpark, a distributed and parallel deep learning framework that exploits Apache Spark on commodity clusters. To support parallel operations, DeepSpark automatically distributes workloads and parameters to Caffe/Tensorflow-running nodes using Spark, and iteratively aggregates training results by a novel lock-free asynchronous variant of the popular elastic averaging stochastic gradient descent based update scheme, effectively complementing the synchronized processing capabilities of Spark. DeepSpark is an on-going project, and the current release is available at http://deepspark.snu.ac.kr.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge