Han Ji

Transferable Graph Condensation from the Causal Perspective

Jan 29, 2026

Abstract:The increasing scale of graph datasets has significantly improved the performance of graph representation learning methods, but it has also introduced substantial training challenges. Graph dataset condensation techniques have emerged to compress large datasets into smaller yet information-rich datasets, while maintaining similar test performance. However, these methods strictly require downstream applications to match the original dataset and task, a requirement that often fails to hold in cross-task and cross-domain scenarios. To address these challenges, we propose a novel causal-invariance-based and transferable graph dataset condensation method, named TGCC, providing effective and transferable condensed datasets. Specifically, to preserve domain-invariant knowledge, we first extract domain causal-invariant features from the spatial domain of the graph using causal interventions. Then, to fully capture the structural and feature information of the original graph, we perform enhanced condensation operations. Finally, through spectral-domain enhanced contrastive learning, we inject the causal-invariant features into the condensed graph, ensuring that the compressed graph retains the causal information of the original graph. Experimental results on five public datasets and our novel FinReport dataset demonstrate that TGCC achieves up to a 13.41% improvement in cross-task and cross-domain complex scenarios compared to existing methods, and achieves state-of-the-art performance on 5 out of 6 datasets in the single dataset and task scenario.

Loss Functions for Predictor-based Neural Architecture Search

Jun 06, 2025

Abstract:Evaluation is a critical but costly procedure in neural architecture search (NAS). Performance predictors have been widely adopted to reduce evaluation costs by directly estimating architecture performance. The effectiveness of predictors is heavily influenced by the choice of loss functions. While traditional predictors employ regression loss functions to evaluate the absolute accuracy of architectures, recent approaches have explored various ranking-based loss functions, such as pairwise and listwise ranking losses, to focus on the ranking of architecture performance. Despite their success in NAS, the effectiveness and characteristics of these loss functions have not been thoroughly investigated. In this paper, we conduct the first comprehensive study on loss functions in performance predictors, categorizing them into three main types: regression, ranking, and weighted loss functions. Specifically, we assess eight loss functions using a range of NAS-relevant metrics on 13 tasks across five search spaces. Our results reveal that specific categories of loss functions can be effectively combined to enhance predictor-based NAS. Furthermore, our findings could provide practical guidance for selecting appropriate loss functions for various tasks. We hope this work provides meaningful insights to guide the development of loss functions for predictor-based methods in the NAS community.
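To make the distinction between regression and pairwise ranking losses concrete, here is a minimal Python sketch. It is not taken from the paper: the function names, the hinge formulation, the `margin` default, and the toy accuracy values are all assumptions chosen for illustration.

```python
from itertools import combinations


def mse_loss(pred, target):
    """Regression loss: penalizes error in the absolute predicted accuracy."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def pairwise_hinge_loss(pred, target, margin=0.01):
    """Pairwise ranking loss (hinge form, assumed here for illustration).

    Penalizes every pair of architectures whose predicted order is wrong,
    or correct but separated by less than `margin` (a hypothetical default).
    """
    total, count = 0.0, 0
    for i, j in combinations(range(len(pred)), 2):
        if target[i] == target[j]:
            continue  # ties carry no ordering information
        sign = 1.0 if target[i] > target[j] else -1.0
        total += max(0.0, margin - sign * (pred[i] - pred[j]))
        count += 1
    return total / count if count else 0.0


# Toy data (made up): true accuracies of three architectures.
true_acc = [0.90, 0.92, 0.94]
# Predictor A: correct order, but far from the true values.
shifted = [0.50, 0.52, 0.54]
# Predictor B: close to the true values, but in reversed order.
swapped = [0.93, 0.92, 0.91]

for name, pred in [("shifted", shifted), ("swapped", swapped)]:
    print(name, mse_loss(pred, true_acc), pairwise_hinge_loss(pred, true_acc))
```

In this toy setup the regression loss prefers the reversed predictor because its values are numerically close, while the ranking loss prefers the shifted predictor because its ordering is right. Since a search procedure mainly needs to know which architecture is better, this illustrates why ranking-based losses are attractive for predictor-based NAS.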

CARL: Causality-guided Architecture Representation Learning for an Interpretable Performance Predictor

Jun 04, 2025

Abstract:Performance predictors have emerged as a promising method to accelerate the evaluation stage of neural architecture search (NAS). These predictors estimate the performance of unseen architectures by learning from the correlation between a small set of trained architectures and their performance. However, most existing predictors ignore the inherent distribution shift between limited training samples and diverse test samples. Hence, they tend to learn spurious correlations as shortcuts to predictions, leading to poor generalization. To address this, we propose a Causality-guided Architecture Representation Learning (CARL) method aiming to separate critical (causal) and redundant (non-causal) features of architectures for generalizable architecture performance prediction. Specifically, we employ a substructure extractor to split the input architecture into critical and redundant substructures in the latent space. Then, we generate multiple interventional samples by pairing critical representations with diverse redundant representations to prioritize critical features. Extensive experiments on five NAS search spaces demonstrate the state-of-the-art accuracy and superior interpretability of CARL. For instance, CARL achieves 97.67% top-1 accuracy on CIFAR-10 using DARTS.

Learning Load Balancing with GNN in MPTCP-Enabled Heterogeneous Networks

Oct 22, 2024

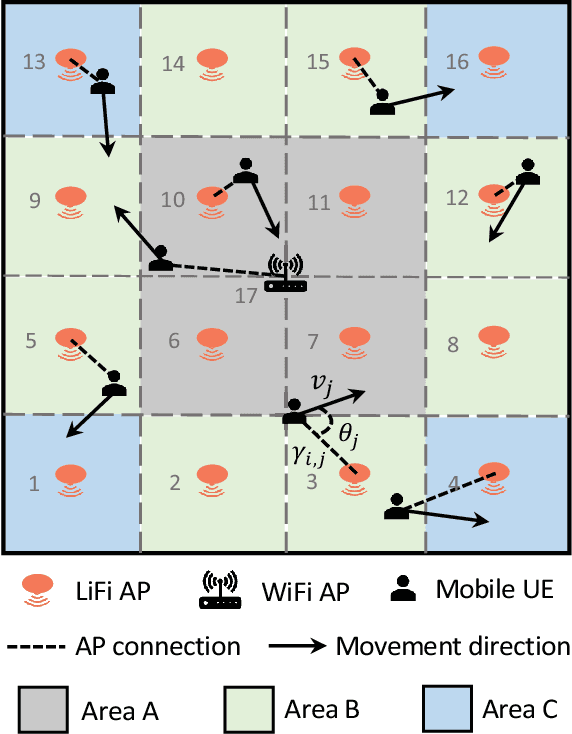

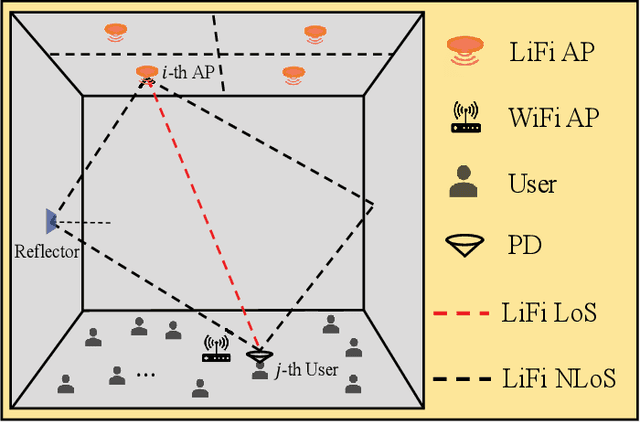

Abstract:Hybrid light fidelity (LiFi) and wireless fidelity (WiFi) networks are a promising paradigm of heterogeneous network (HetNet), attributed to the complementary physical properties of optical spectra and radio frequency. However, the current development of such HetNets is mostly bottlenecked by the existing transmission control protocol (TCP), which restricts the user equipment (UE) to connecting to one access point (AP) at a time. While the ongoing investigation on multipath TCP (MPTCP) can bring significant benefits, it complicates the network topology of HetNets, making the existing load balancing (LB) learning models less effective. Driven by this, we propose a graph neural network (GNN)-based model to tackle the LB problem for MPTCP-enabled HetNets, which results in a partial mesh topology. Such a topology can be modeled as a graph, with the channel state information and data rate requirement embedded as node features, while the LB solutions are treated as edge labels. Compared to the conventional deep neural network (DNN), the proposed GNN-based model exhibits two key strengths: i) it can better interpret a complex network topology; and ii) it can handle varying numbers of APs and UEs with a single trained model. Simulation results show that against the traditional optimisation method, the proposed learning model can achieve near-optimal throughput within a gap of 11.5%, while reducing the inference time by 4 orders of magnitude. In contrast to the DNN model, the new method can improve the network throughput by up to 21.7%, at a similar inference time level.

User-Centric Machine Learning for Resource Allocation in MPTCP-Enabled Hybrid LiFi and WiFi Networks

Aug 14, 2024

Abstract:As an emerging paradigm of heterogeneous networks (HetNets) towards 6G, the hybrid light fidelity (LiFi) and wireless fidelity (WiFi) networks (HLWNets) have the potential to explore the complementary advantages of the optical and radio spectra. Like other cooperation-native HetNets, HLWNets face a crucial load balancing (LB) problem due to the heterogeneity of access points (APs). The existing literature mostly formulates this problem as joint AP selection and resource allocation (RA), presuming that each user equipment (UE) is served by one AP at a time, under the constraint of the traditional transmission control protocol (TCP). In contrast, multipath TCP (MPTCP), which allows for the simultaneous use of multiple APs, can significantly boost the UE's throughput and enhance its network resilience. However, the existing TCP-based LB methods, particularly those aided by machine learning, are not suitable for the MPTCP scenario. In this paper, we discuss the challenges when developing learning-aided LB in MPTCP-enabled HLWNets, and propose a novel user-centric learning model to tackle this tricky problem. Unlike the conventional network-centric learning methods, the proposed method determines the LB solution for a single target UE, rendering low complexity and high flexibility in practical implementations. Results show that the proposed user-centric approach can greatly outperform the network-centric learning method. Compared with TCP-based LB methods such as game theory, the proposed method can increase the throughput of HLWNets by up to 40%.

PEER: Expertizing Domain-Specific Tasks with a Multi-Agent Framework and Tuning Methods

Jul 10, 2024

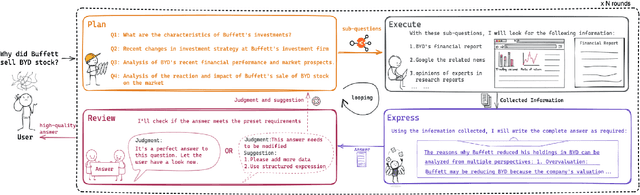

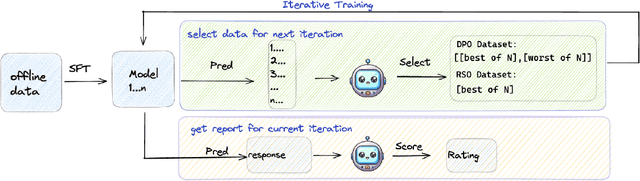

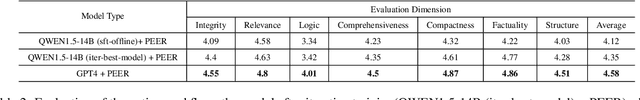

Abstract:In domain-specific applications, GPT-4, augmented with precise prompts or Retrieval-Augmented Generation (RAG), shows notable potential but faces the critical tri-lemma of performance, cost, and data privacy. High performance requires sophisticated processing techniques, yet managing multiple agents within a complex workflow often proves costly and challenging. To address this, we introduce the PEER (Plan, Execute, Express, Review) multi-agent framework. This systematizes domain-specific tasks by integrating precise question decomposition, advanced information retrieval, comprehensive summarization, and rigorous self-assessment. Given the concerns of cost and data privacy, enterprises are shifting from proprietary models like GPT-4 to custom models, striking a balance between cost, security, and performance. We developed industrial practices leveraging online data and user feedback for efficient model tuning. This study provides best practice guidelines for applying multi-agent systems in domain-specific problem-solving and implementing effective agent tuning strategies. Our empirical studies, particularly in the financial question-answering domain, demonstrate that our approach achieves 95.0% of GPT-4's performance, while effectively managing costs and ensuring data privacy.

CAP: A Context-Aware Neural Predictor for NAS

Jun 04, 2024

Abstract:Neural predictors are effective in boosting the time-consuming performance evaluation stage in neural architecture search (NAS), owing to their direct estimation of unseen architectures. Despite this effectiveness, training a powerful neural predictor with fewer annotated architectures remains a huge challenge. In this paper, we propose a context-aware neural predictor (CAP) which only needs a few annotated architectures for training based on the contextual information from the architectures. Specifically, the input architectures are encoded into graphs and the predictor infers the contextual structure around the nodes inside each graph. Then, enhanced by the proposed context-aware self-supervised task, the pre-trained predictor can obtain expressive and generalizable representations of architectures. Therefore, only a few annotated architectures are sufficient for training. Experimental results in different search spaces demonstrate the superior performance of CAP compared with state-of-the-art neural predictors. In particular, CAP can rank architectures precisely at the budget of only 172 annotated architectures in NAS-Bench-101. Moreover, CAP can help find promising architectures in both NAS-Bench-101 and DARTS search spaces on the CIFAR-10 dataset, serving as a useful navigator for NAS to explore the search space efficiently.

Resource and Mobility Management in Hybrid LiFi and WiFi Networks: A User-Centric Learning Approach

Mar 25, 2024

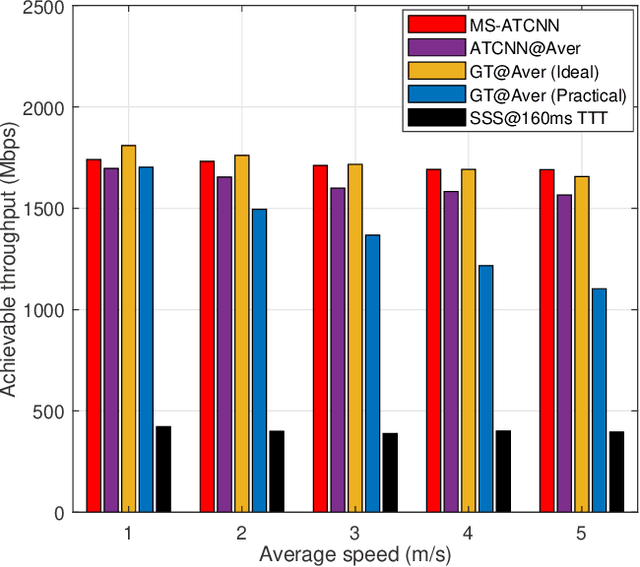

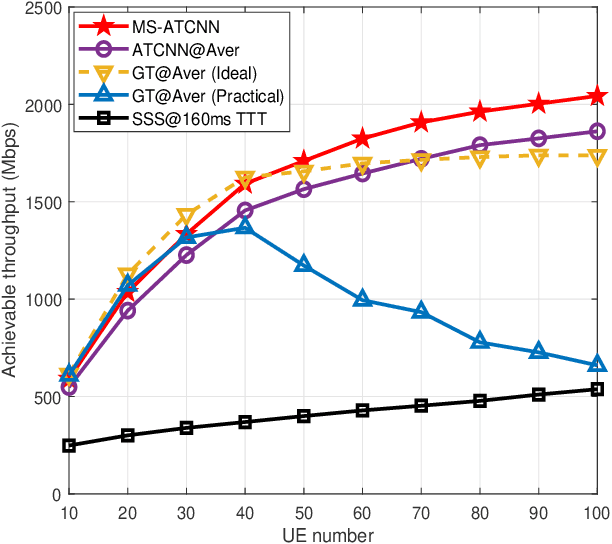

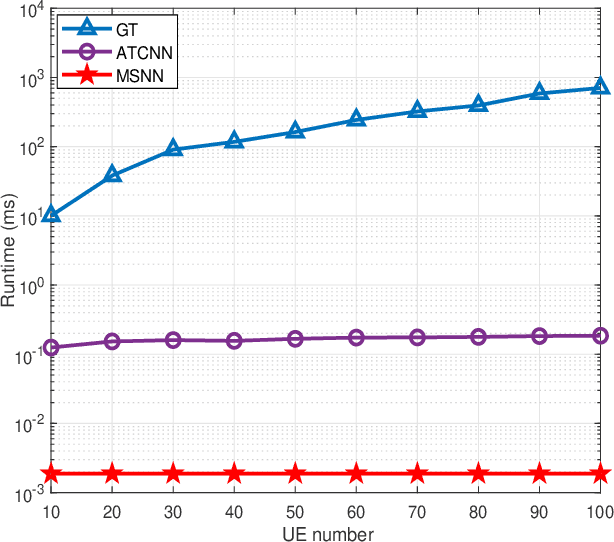

Abstract:Hybrid light fidelity (LiFi) and wireless fidelity (WiFi) networks (HLWNets) are an emerging indoor wireless communication paradigm, which combines the advantages of the capacious optical spectra of LiFi and ubiquitous coverage of WiFi. Meanwhile, load balancing (LB) becomes a key challenge in resource management for such hybrid networks. The existing LB methods are mostly network-centric, relying on a central unit to determine a solution for all users at once. Consequently, the solution needs to be updated for all users at the same pace, regardless of their moving status. This would affect the network performance in two aspects: i) when the update frequency is low, it would compromise the connectivity of fast-moving users; ii) when the update frequency is high, it would cause unnecessary handovers as well as hefty feedback costs for slow-moving users. Motivated by this, we investigate user-centric LB which allows users to update their solutions at different paces. The research is developed upon our previous work on adaptive target-condition neural network (ATCNN), which can conduct LB for individual users in quasi-static channels. In this paper, a deep neural network (DNN) model is designed to enable an adaptive update interval for each individual user. This new model is termed mobility-supporting neural network (MSNN). Associating MSNN with ATCNN, a user-centric LB framework named mobility-supporting ATCNN (MS-ATCNN) is proposed to handle resource management and mobility management simultaneously. Results show that at the same level of average update interval, MS-ATCNN can achieve a network throughput up to 215% higher than conventional LB methods such as game theory, especially for a larger number of users. In addition, MS-ATCNN has an ultra-low runtime on the order of hundreds of μs, which is two to three orders of magnitude lower than game theory.

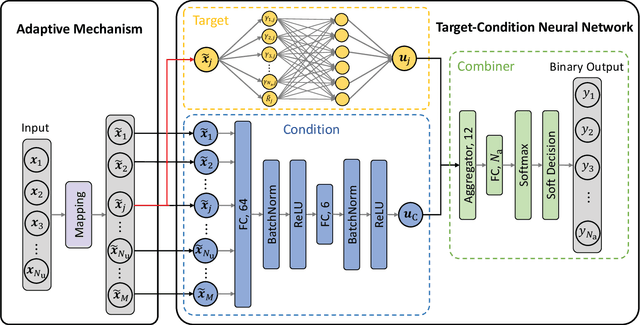

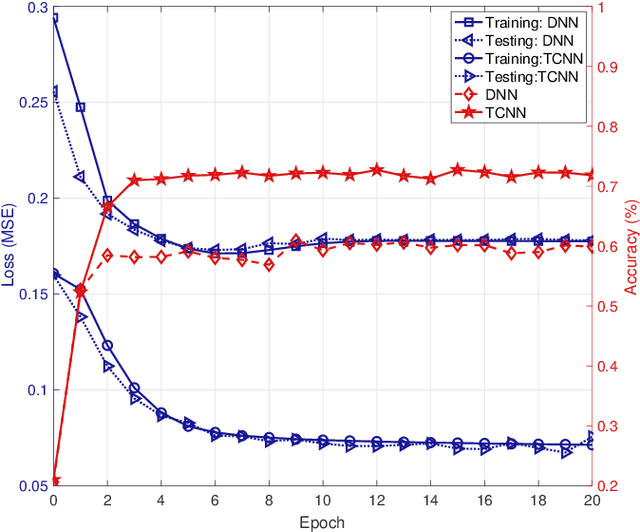

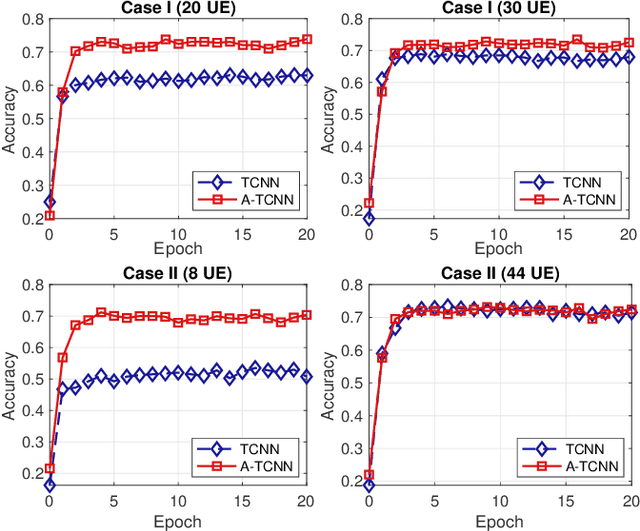

Adaptive Target-Condition Neural Network: DNN-Aided Load Balancing for Hybrid LiFi and WiFi Networks

Aug 09, 2022

Abstract:Load balancing (LB) is a challenging issue in the hybrid light fidelity (LiFi) and wireless fidelity (WiFi) networks (HLWNets), due to the nature of heterogeneous access points (APs). Machine learning has the potential to provide a complexity-friendly LB solution with near-optimal network performance, at the cost of a training process. The state-of-the-art (SOTA) learning-aided LB methods, however, need retraining when the network environment (especially the number of users) changes, significantly limiting their practicality. In this paper, a novel deep neural network (DNN) structure named adaptive target-condition neural network (A-TCNN) is proposed, which conducts AP selection for one target user upon the condition of other users. Also, an adaptive mechanism is developed to map a smaller number of users to a larger number through splitting their data rate requirements, without affecting the AP selection result for the target user. This enables the proposed method to handle different numbers of users without the need for retraining. Results show that A-TCNN achieves a network throughput very close to that of the testing dataset, with a gap of less than 3%. It is also proven that A-TCNN can obtain a network throughput comparable to two SOTA benchmarks, while reducing the runtime by up to three orders of magnitude.
