Hadi Tabatabaee

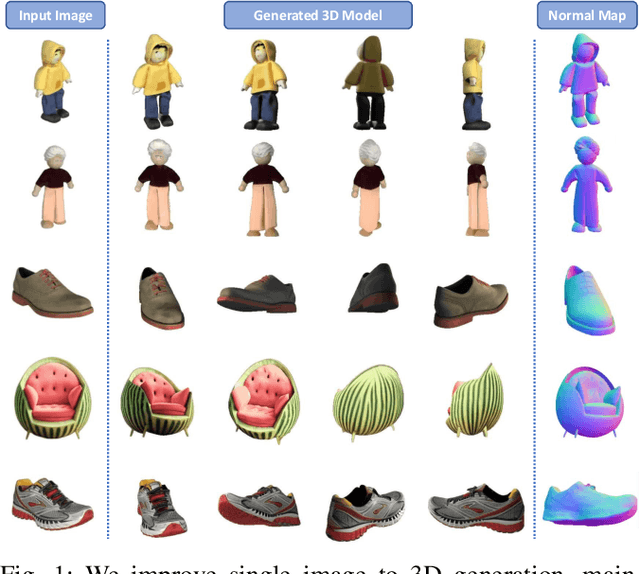

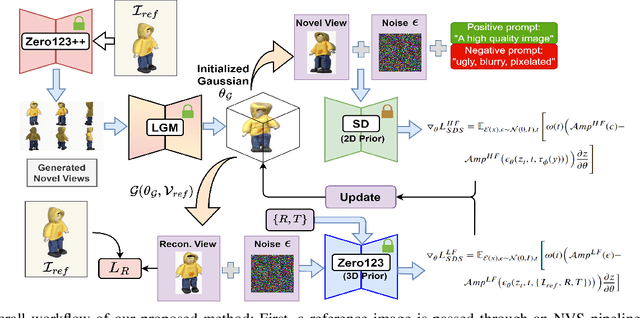

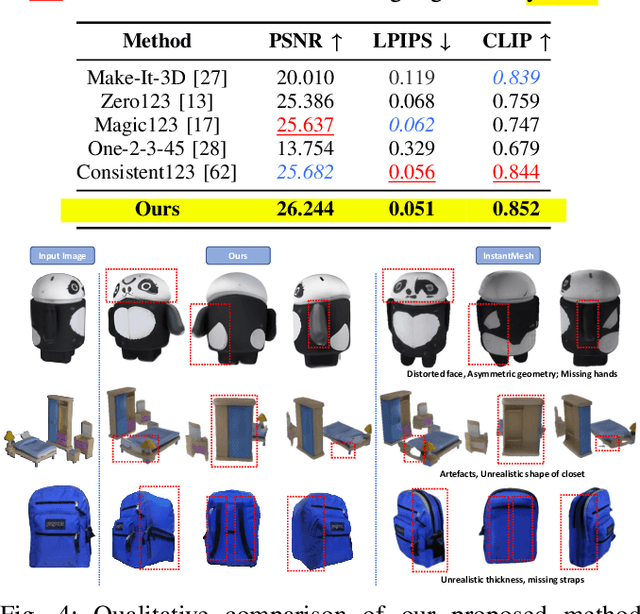

Enhancing Single Image to 3D Generation using Gaussian Splatting and Hybrid Diffusion Priors

Oct 12, 2024

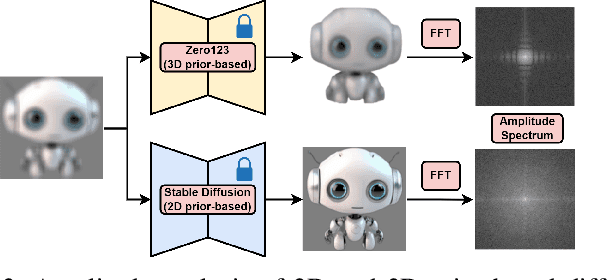

Abstract: 3D object generation from a single image involves estimating the full 3D geometry and texture of unseen views from an unposed RGB image captured in the wild. Accurately reconstructing an object's complete 3D structure and texture has numerous applications in real-world scenarios, including robotic manipulation, grasping, 3D scene understanding, and AR/VR. Recent advancements in 3D object generation have introduced techniques that reconstruct an object's 3D shape and texture by optimizing the efficient representation of Gaussian Splatting, guided by pre-trained 2D or 3D diffusion models. However, a notable disparity exists between the training datasets of these models, leading to distinct differences in their outputs. While 2D models generate highly detailed visuals, they lack cross-view consistency in geometry and texture. In contrast, 3D models ensure consistency across different views but often result in overly smooth textures. We propose bridging the gap between 2D and 3D diffusion models to address this limitation by integrating a two-stage frequency-based distillation loss with Gaussian Splatting. Specifically, we leverage geometric priors in the low-frequency spectrum from a 3D diffusion model to maintain consistent geometry and use a 2D diffusion model to refine the fidelity and texture in the high-frequency spectrum of the generated 3D structure, resulting in more detailed and fine-grained outcomes. Our approach enhances geometric consistency and visual quality, outperforming the current SOTA. Additionally, we demonstrate the easy adaptability of our method for efficient object pose estimation and tracking.
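The frequency split described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: it assumes grayscale images stored as nested lists, uses a box blur as a stand-in low-pass filter, and replaces the distillation objective with a plain mean squared error. The names `box_blur`, `split_bands`, and `hybrid_loss`, and the weights `w_low` and `w_high`, are hypothetical. The two-stage schedule is not modeled either; setting `w_high=0.0` would loosely mimic a geometry-only first stage.

```python
def box_blur(img, radius=1):
    """Stand-in low-pass filter: average each pixel with its neighbours (edges clamped)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def split_bands(img, radius=1):
    """Return (low, high) bands, where high is the residual img - low."""
    low = box_blur(img, radius)
    high = [[p - l for p, l in zip(row, low_row)] for row, low_row in zip(img, low)]
    return low, high


def mse(a, b):
    """Mean squared error between two equally sized images."""
    n = len(a) * len(a[0])
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n


def hybrid_loss(render, guide_3d, guide_2d, w_low=1.0, w_high=1.0, radius=1):
    """Compare low frequencies against the 3D guide and high frequencies against the 2D guide."""
    r_low, r_high = split_bands(render, radius)
    g_low, _ = split_bands(guide_3d, radius)    # hypothetical 3D-prior output
    _, g_high = split_bands(guide_2d, radius)   # hypothetical 2D-prior output
    return w_low * mse(r_low, g_low) + w_high * mse(r_high, g_high)
```

In this sketch, a render that matches the 3D guide in coarse structure and the 2D guide in fine detail gives a loss of zero, even when the two guides differ from each other in the bands that are ignored.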

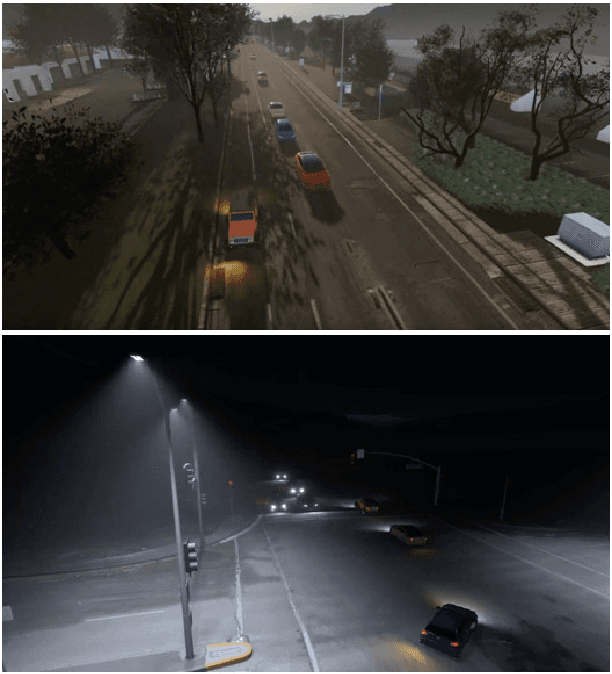

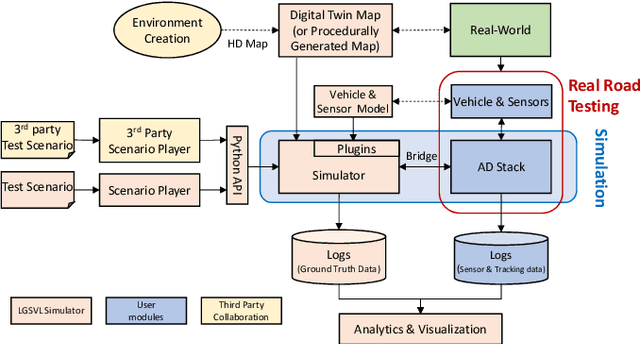

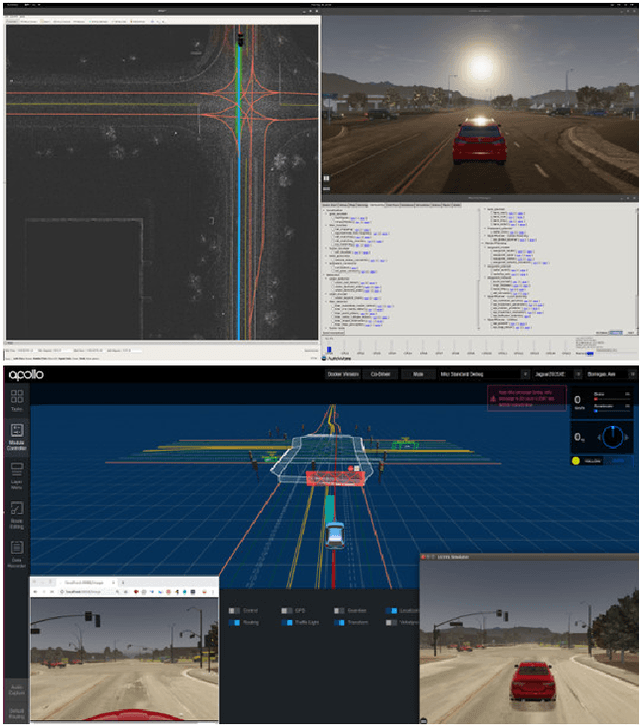

LGSVL Simulator: A High Fidelity Simulator for Autonomous Driving

May 11, 2020

Abstract: Testing autonomous driving algorithms on real autonomous vehicles is extremely costly, and many researchers and developers in the field cannot afford a real car and the corresponding sensors. Although several free and open-source autonomous driving stacks, such as Autoware and Apollo, are available, choices of open-source simulators to use with them are limited. In this paper, we introduce the LGSVL Simulator, a high-fidelity simulator for autonomous driving. The simulator engine provides end-to-end, full-stack simulation that is ready to be hooked up to Autoware and Apollo. In addition, simulator tools are provided with the core simulation engine that allow users to easily customize sensors, create new types of controllable objects, replace some modules in the core simulator, and create digital twins of particular environments.
