Gustavo Olague

Analytical-Heuristic Modeling and Optimization for Low-Light Image Enhancement

Dec 10, 2024

Abstract: Low-light image enhancement remains an open problem, and the new wave of artificial intelligence is at the center of this problem. This work describes the use of genetic algorithms for optimizing analytical models that can improve the visualization of poorly lit images. Genetic algorithms are part of metaheuristic approaches, which have proved helpful in solving challenging optimization tasks. We propose two analytical methods combined with optimization reasoning to approach a solution to the physical and computational aspects of transforming dark images into visible ones. The experiments demonstrate that the proposed approach ranks at the top among 26 state-of-the-art algorithms in the LOL benchmark. Through controlled experiments and objective comparisons, the results show evidence that a simple genetic algorithm combined with analytical reasoning can defeat the current mainstream in a challenging computer vision task. This work opens interesting new research avenues for the swarm and evolutionary computation community and others interested in analytical and heuristic reasoning.

Modeling Image Tone Dichotomy with the Power Function

Sep 10, 2024

Abstract: The primary purpose of this paper is to present the concept of dichotomy in image illumination modeling based on the power function. In particular, we review several mathematical properties of the power function to identify the limitations and propose a new mathematical model capable of abstracting illumination dichotomy. The simplicity of the equation opens new avenues for classical and modern image analysis and processing. The article provides practical and illustrative image examples to explain how the new model manages dichotomy in image perception. The article shows dichotomy image space as a viable way to extract rich information from images despite poor contrast linked to tone, lightness, and color perception. Moreover, a comparison with state-of-the-art methods in image enhancement provides evidence of the method's value.
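As a point of reference for the power function mentioned in this abstract, the following minimal sketch applies the classical power law s = r^gamma to normalized intensities. It is not the paper's proposed model, which the abstract does not spell out; the function name `power_transform` and the sample values are assumptions made for illustration only.

```python
def power_transform(pixels, gamma):
    """Apply the classical power law s = r**gamma to intensities in [0, 1].

    Illustrative helper (hypothetical name), not the model proposed in the paper.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Clamp each intensity to [0, 1] before raising it to the exponent.
    return [min(1.0, max(0.0, r)) ** gamma for r in pixels]


# Hypothetical normalized intensities of a dark image row.
dark = [0.0, 0.04, 0.25, 1.0]

brightened = power_transform(dark, 0.5)  # gamma < 1 lifts dark tones
darkened = power_transform(dark, 2.0)    # gamma > 1 pushes tones down
```

With a single exponent, every intermediate intensity moves in the same direction (all brighter or all darker) while 0 and 1 stay fixed, which is one well-known property of the plain power function.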

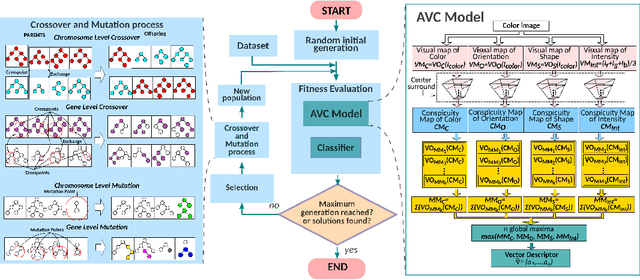

Adversarial Attacks Assessment of Salient Object Detection via Symbolic Learning

Sep 12, 2023

Abstract: Machine learning is at the center of mainstream technology and outperforms classical approaches to handcrafted feature design. Aside from its learning process for artificial feature extraction, it has an end-to-end paradigm from input to output, reaching outstandingly accurate results. However, security concerns about its robustness to malicious and imperceptible perturbations have drawn attention since its prediction can be changed entirely. Salient object detection is a research area where deep convolutional neural networks have proven effective but whose trustworthiness represents a significant issue requiring analysis and solutions to hackers' attacks. Brain programming is a kind of symbolic learning in the vein of good old-fashioned artificial intelligence. This work provides evidence that symbolic learning robustness is crucial in designing reliable visual attention systems since it can withstand even the most intense perturbations. We test this evolutionary computation methodology against several adversarial attacks and noise perturbations using standard databases and a real-world problem of a shorebird called the Snowy Plover portraying a visual attention task. We compare our methodology with five different deep learning approaches, proving that they do not match the symbolic paradigm regarding robustness. All neural networks suffer significant performance losses, while brain programming stands its ground and remains unaffected. Also, by studying the Snowy Plover, we remark on the importance of security in surveillance activities regarding wildlife protection and conservation.

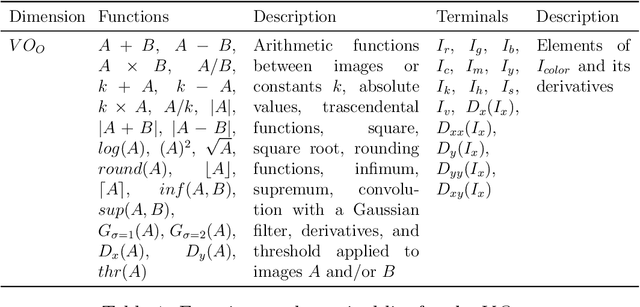

Automated Design of Salient Object Detection Algorithms with Brain Programming

Apr 07, 2022

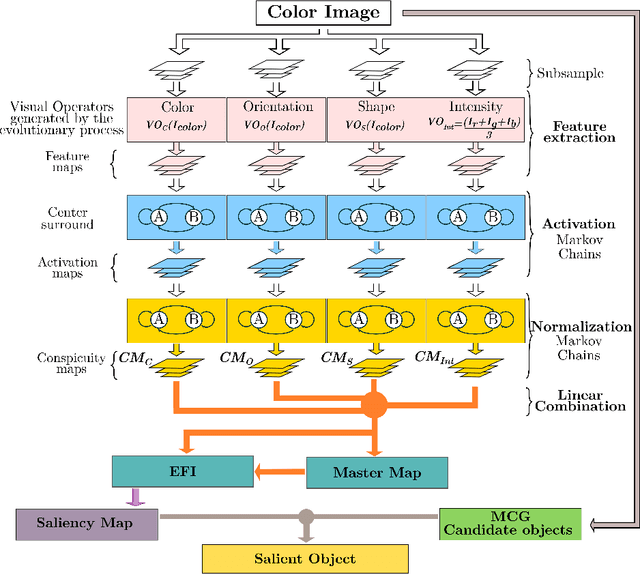

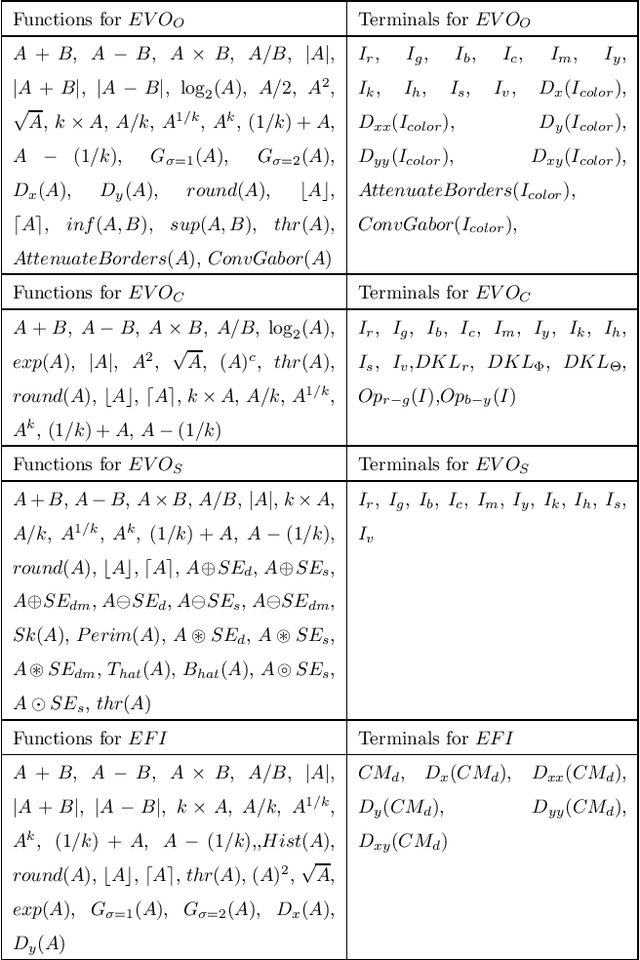

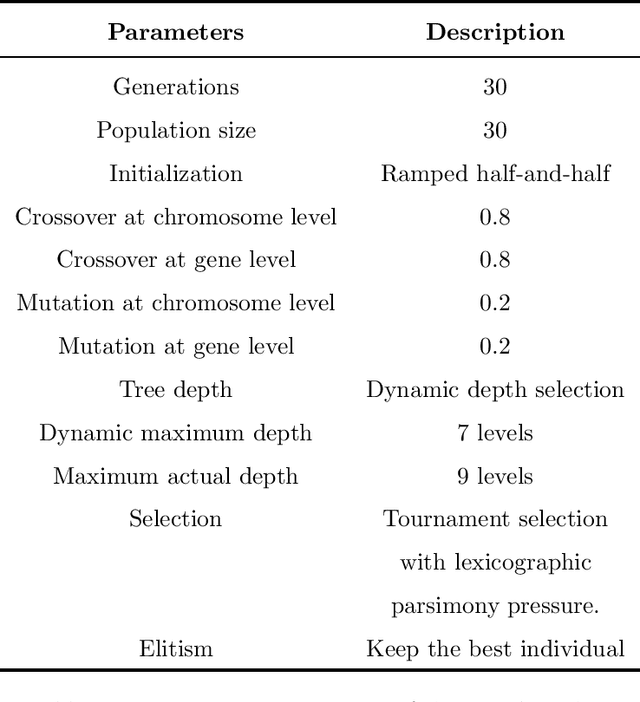

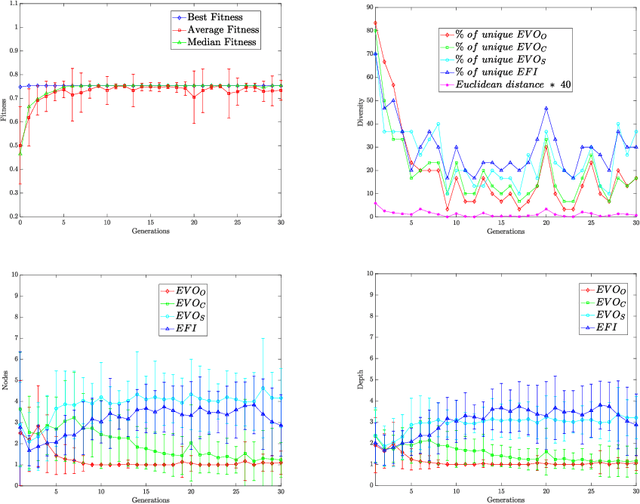

Abstract: Despite recent improvements in computer vision, artificial visual systems' design is still daunting since an explanation of visual computing algorithms remains elusive. Salient object detection is one problem that is still open due to the difficulty of understanding the brain's inner workings. Progress in this research area follows the traditional path of hand-made designs using neuroscience knowledge. In recent years, two different approaches based on genetic programming have appeared to enhance this technique. One follows the idea of combining previous hand-made methods through genetic programming and fuzzy logic. The other approach consists of improving the inner computational structures of basic hand-made models through artificial evolution. This research work proposes expanding the artificial dorsal stream using a recent proposal to solve salient object detection problems. This approach uses the benefits of the two main aspects of this research area: fixation prediction and detection of salient objects. We decided to apply the fusion of visual saliency and image segmentation algorithms as a template. The proposed methodology discovers several critical structures in the template through artificial evolution. We present results on a benchmark designed by experts, which are outstanding in comparison with the state of the art.

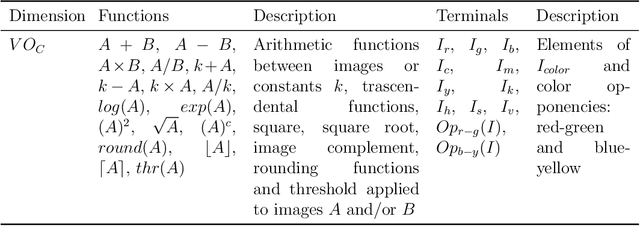

Brain Programming is Immune to Adversarial Attacks: Towards Accurate and Robust Image Classification using Symbolic Learning

Mar 01, 2021

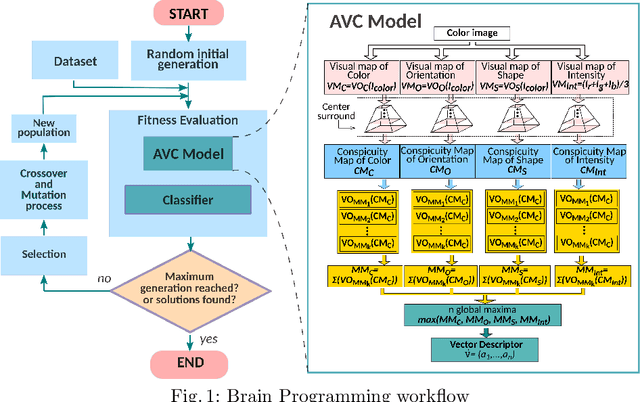

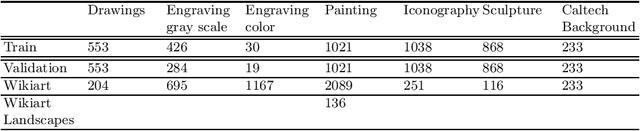

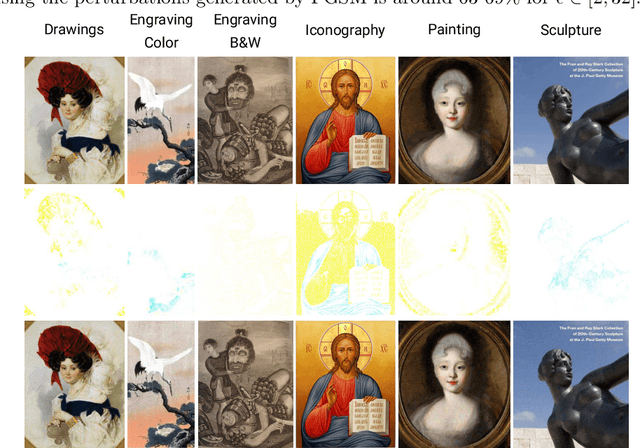

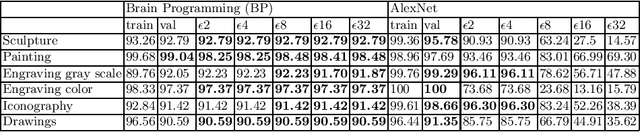

Abstract: In recent years, security concerns have grown about the vulnerability of Deep Convolutional Neural Networks (DCNN) to Adversarial Attacks (AA): small modifications to the input image, almost invisible to human vision, that make their predictions untrustworthy. Therefore, it is necessary to provide robustness to adversarial examples in addition to an accurate score when developing a new classifier. In this work, we perform a comparative study of the effects of AA on the complex problem of art media categorization, which involves a sophisticated analysis of features to classify a fine collection of artworks. We tested a prevailing bag of visual words approach from computer vision, four state-of-the-art DCNN models (AlexNet, VGG, ResNet, ResNet101), and the Brain Programming (BP) algorithm. In this study, we analyze the algorithms' performance using accuracy. In addition, we use the accuracy ratio between adversarial examples and clean images to measure robustness. Moreover, we propose a statistical analysis of the confidence of each classifier's predictions to corroborate the results. We confirm that the change in BP's predictions was below 2% using adversarial examples computed with the fast gradient sign method. Also, considering the multiple pixel attack, BP obtained four out of seven classes without changes and the rest with a maximum error of 4% in the predictions. Finally, with adversarial patches, BP also obtains four categories without changes and the remaining three classes with a variation of 1%. Additionally, the statistical analysis showed that the confidence of BP's predictions was not significantly different for each pair of clean and perturbed images in every experiment. These results prove BP's robustness against adversarial examples compared to DCNN and handcrafted feature methods, whose performance on art media classification was compromised by the proposed perturbations.
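The robustness measure named in this abstract, the ratio between accuracy on adversarial examples and accuracy on clean images, can be sketched as follows. This is only an illustration under stated assumptions: the function names `accuracy` and `robustness_ratio`, the class labels, and the predictions are all hypothetical and do not come from the paper's experiments.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def robustness_ratio(clean_preds, adv_preds, labels):
    """Accuracy on adversarial inputs divided by accuracy on clean inputs."""
    clean_acc = accuracy(clean_preds, labels)
    if clean_acc == 0:
        raise ValueError("clean accuracy is zero; the ratio is undefined")
    return accuracy(adv_preds, labels) / clean_acc


# Hypothetical toy data, not results from the paper.
labels = ["oil", "oil", "pastel", "pencil"]
clean_preds = ["oil", "oil", "pastel", "oil"]    # 3 of 4 correct
adv_preds = ["oil", "pastel", "pastel", "oil"]   # 2 of 4 correct

ratio = robustness_ratio(clean_preds, adv_preds, labels)
```

A ratio close to 1 indicates that the perturbation left accuracy essentially unchanged, while smaller values indicate a larger loss relative to the clean baseline.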

A Deep Genetic Programming based Methodology for Art Media Classification Robust to Adversarial Perturbations

Oct 03, 2020

Abstract: The Art Media Classification problem is a current research area that has attracted attention due to the complex extraction and analysis of features of high-value art pieces. The perception of the attributes cannot be subjective, since humans sometimes follow a biased interpretation of artworks, and the trustworthiness of automated observation must be ensured. Machine Learning has succeeded in many areas through its learning process of artificial feature extraction from images instead of designing handcrafted feature detectors. However, a major concern about its reliability has drawn attention because, with small perturbations made intentionally in the input image (adversarial attack), its prediction can be completely changed. In this manner, we foresee two ways of approaching the situation: (1) solve the problem of adversarial attacks in current neural network methodologies, or (2) propose a different approach that can challenge deep learning without the effects of adversarial attacks. The first one has not been solved yet, and adversarial attacks have become even more complex to defend against. Therefore, this work presents a Deep Genetic Programming method, called Brain Programming, that competes with deep learning and studies the transferability of adversarial attacks using two artwork databases made by art experts. The results show that the Brain Programming method preserves its performance in comparison with AlexNet, making it robust to these perturbations and competitive with Deep Learning.

It is Time for New Perspectives on How to Fight Bloat in GP

May 01, 2020

Abstract: The present and future of evolutionary algorithms depend on the proper use of modern parallel and distributed computing infrastructures. Although sequential approaches still dominate the landscape, available multi-core, many-core and distributed systems will lead users and researchers to deploy parallel versions of the algorithms more frequently. In such a scenario, new possibilities arise regarding the time saved when parallel evaluation of individuals is performed. This time saving is particularly relevant in Genetic Programming. This paper studies how evaluation time not only influences time to solution in parallel/distributed systems, but may also affect the size evolution of individuals in the population, and eventually reduce the bloat phenomenon that GP exhibits. This paper considers time and space as two sides of a single coin when devising a more natural method for fighting bloat. This new perspective allows us to understand that new methods for bloat control can be derived, and the first such method is described and tested. Experimental data confirm the strength of the approach: using computing time as a measure of individuals' complexity makes it possible to control the growth in size of genetic programming individuals.
