Guannan Lou

STFL: A Temporal-Spatial Federated Learning Framework for Graph Neural Networks

Nov 12, 2021

Abstract: We present a spatial-temporal federated learning framework for graph neural networks, namely STFL. The framework explores the underlying correlation of the input spatial-temporal data and transforms it into both node features and an adjacency matrix. The federated learning setting in the framework ensures data privacy while achieving good model generalization. Experimental results on the sleep stage dataset ISRUC_S3 illustrate the effectiveness of STFL on graph prediction tasks.

Deep Learning-Based Autonomous Driving Systems: A Survey of Attacks and Defenses

Apr 10, 2021

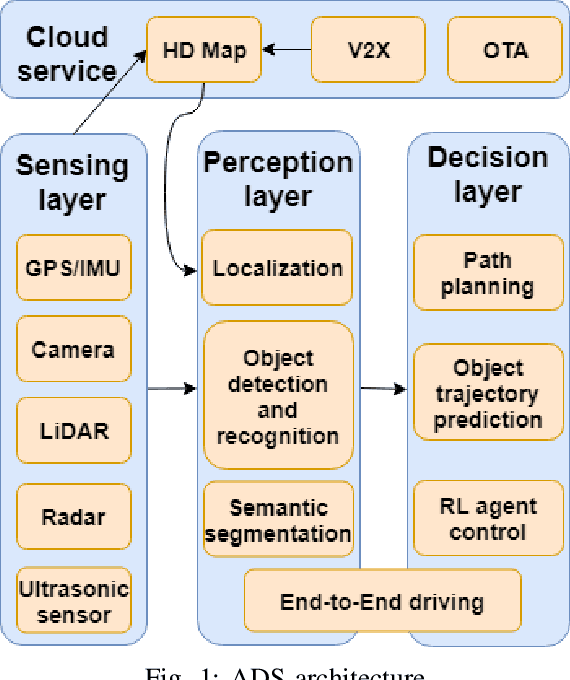

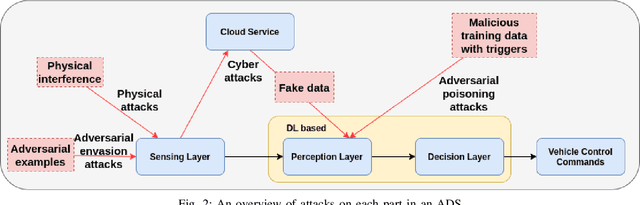

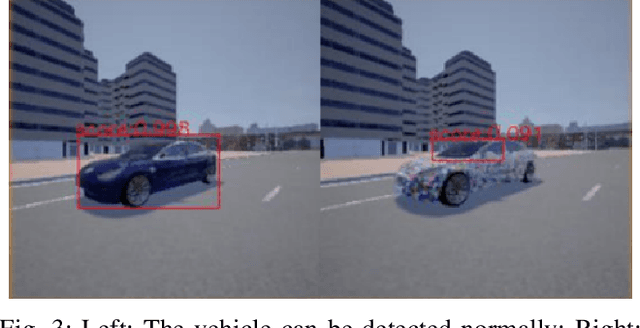

Abstract: The rapid development of artificial intelligence, especially deep learning technology, has advanced autonomous driving systems (ADSs) by providing precise control decisions to handle almost any driving event, spanning from anti-fatigue safe driving to intelligent route planning. However, ADSs are still plagued by increasing threats from different attacks, which can be categorized into physical attacks, cyberattacks, and learning-based adversarial attacks. Inevitably, the safety and security of deep learning-based autonomous driving are severely challenged by these attacks, and countermeasures should be analyzed and studied comprehensively to mitigate all potential risks. This survey provides a thorough analysis of different attacks that may jeopardize ADSs, as well as the corresponding state-of-the-art defense mechanisms. The analysis proceeds through an in-depth overview of each step in the ADS workflow, covering adversarial attacks on various deep learning models and attacks in both physical and cyber contexts. Furthermore, some promising research directions are suggested to improve the safety of deep learning-based autonomous driving, including model robustness training, model testing and verification, and anomaly detection based on cloud/edge servers.

An Analysis of Adversarial Attacks and Defenses on Autonomous Driving Models

Feb 06, 2020

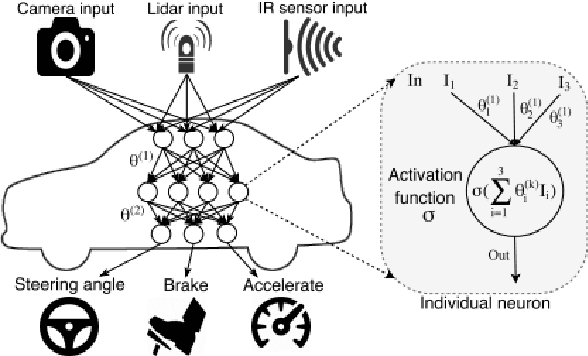

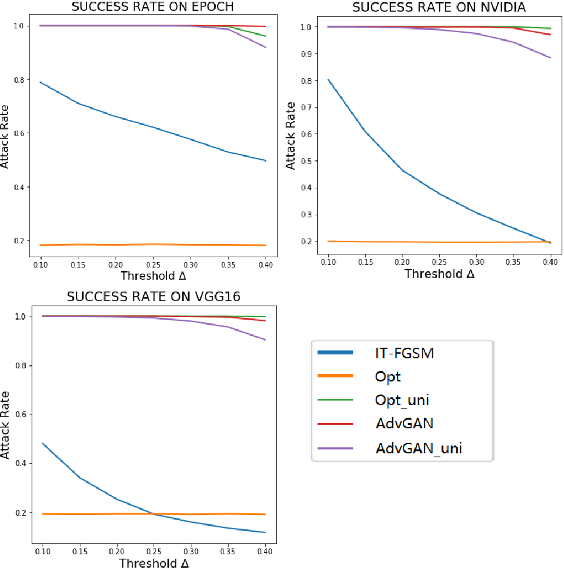

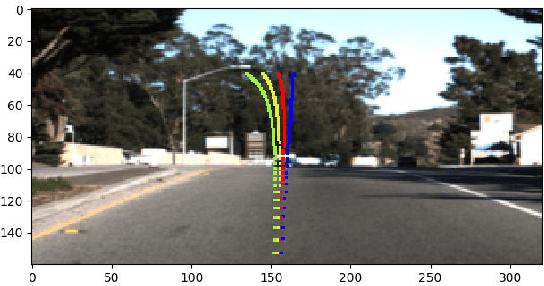

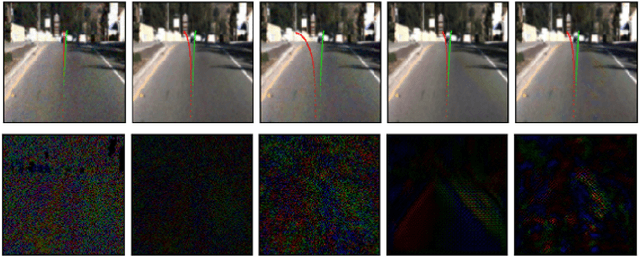

Abstract: Nowadays, autonomous driving has attracted much attention from both industry and academia. The convolutional neural network (CNN) is a key component in autonomous driving and is also increasingly adopted in pervasive computing such as smartphones, wearable devices, and IoT networks. Prior work shows that CNN-based classification models are vulnerable to adversarial attacks. However, it is uncertain to what extent regression models such as driving models are vulnerable to adversarial attacks, how effective existing defense techniques are, and what the defense implications are for system and middleware builders. This paper presents an in-depth analysis of five adversarial attacks and four defense methods on three driving models. Experiments show that, similar to classification models, these models are still highly vulnerable to adversarial attacks. This poses a big security threat to autonomous driving and thus should be taken into account in practice. While these defense methods can effectively defend against different attacks, none of them is able to provide adequate protection against all five attacks. We derive several implications for system and middleware builders: (1) when adding a defense component against adversarial attacks, it is important to deploy multiple defense methods in tandem to achieve good coverage of various attacks, (2) a black-box attack is much less effective than a white-box attack, implying that it is important to keep model details (e.g., model architecture, hyperparameters) confidential via model obfuscation, and (3) driving models with a complex architecture are preferred if computing resources permit, as they are more resilient to adversarial attacks than simple models.
