Gregory A. Wellenius

Modular Deep-Learning-Based Early Warning System for Deadly Heatwave Prediction

Dec 09, 2025

Abstract: Severe heatwaves in urban areas significantly threaten public health, calling for the establishment of early warning strategies. Although heatwave occurrence can be predicted and historical mortality attributed, predicting an incoming deadly heatwave remains a challenge because heat-related mortality is difficult to define and estimate. Furthermore, establishing an early warning system imposes additional requirements, including data availability, spatial and temporal robustness, and decision costs. To address these challenges, we propose DeepTherm, a modular early warning system for deadly heatwave prediction that does not require heat-related mortality history. Leveraging the flexibility of deep learning, DeepTherm employs a dual-prediction pipeline that disentangles baseline mortality, in the absence of heatwaves and other irregular events, from all-cause mortality. We evaluated DeepTherm on real-world data across Spain. Results demonstrate consistent, robust, and accurate performance across diverse regions, time periods, and population groups while allowing a trade-off between missed alarms and false alarms.

Optimizing Heat Alert Issuance for Public Health in the United States with Reinforcement Learning

Dec 21, 2023

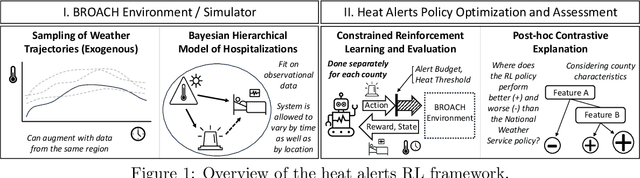

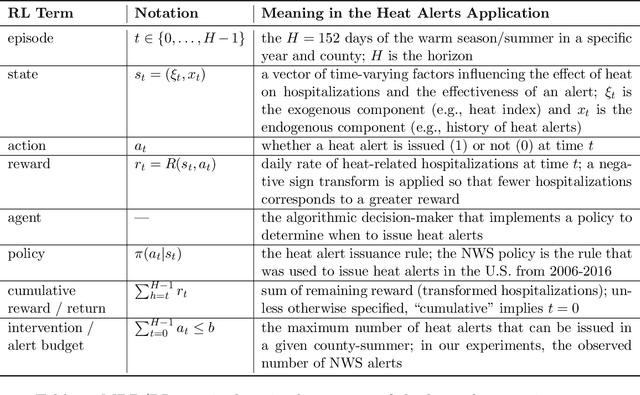

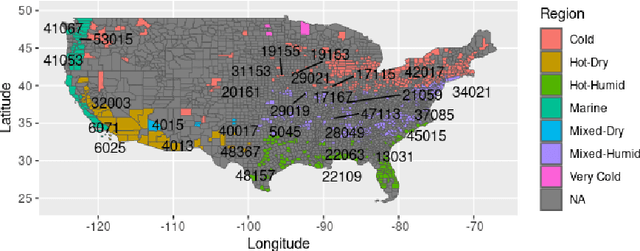

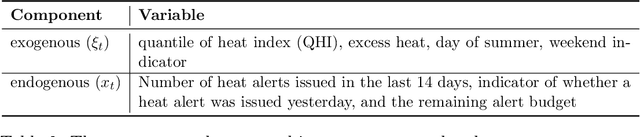

Abstract: Alerting the public when heat may harm their health is a crucial service, especially considering that extreme heat events will be more frequent under climate change. Current practice for issuing heat alerts in the US does not take advantage of modern data science methods for optimizing local alert criteria. Specifically, the application of reinforcement learning (RL) has the potential to inform more health-protective policies, accounting for regional and sociodemographic heterogeneity as well as the sequential dependence of alerts. In this work, we formulate the issuance of heat alerts as a sequential decision-making problem and develop modifications to the RL workflow to address challenges commonly encountered in environmental health settings. Key modifications include creating a simulator that pairs hierarchical Bayesian modeling of low-signal health effects with sampling of real weather trajectories (exogenous features), constraining the total number of alerts issued as well as preventing alerts on less-hot days, and optimizing location-specific policies. Post-hoc contrastive analysis offers insights into scenarios in which using RL for heat alert issuance may protect public health better than the current or alternative policies. This work contributes to a broader movement of advancing data-driven policy optimization for public health and climate change adaptation.
