Rachel C. Nethery

Towards Optimal Environmental Policies: Policy Learning under Arbitrary Bipartite Network Interference

Oct 10, 2024

Abstract: The substantial effect of air pollution on cardiovascular disease and mortality burdens is well-established. Emissions-reducing interventions on coal-fired power plants -- a major source of hazardous air pollution -- have proven to be an effective, but costly, strategy for reducing pollution-related health burdens. Targeting the power plants whose treatment achieves maximum health benefits while satisfying realistic cost constraints is challenging. The primary difficulty lies in quantifying the health benefits of intervening at particular plants. This is further complicated because interventions are applied at power plants, while health impacts occur in potentially distant communities, a setting known as bipartite network interference (BNI). In this paper, we introduce novel policy learning methods based on Q- and A-Learning to determine the optimal policy under arbitrary BNI. We derive asymptotic properties and demonstrate finite-sample efficacy in simulations. We apply our novel methods to a comprehensive dataset of Medicare claims, power plant data, and pollution transport networks. Our goal is to determine the optimal strategy for installing power plant scrubbers to minimize ischemic heart disease (IHD) hospitalizations under various cost constraints. We find that optimal policies could reduce annual IHD hospitalization rates by 20.66 to 44.51 per 10,000 person-years, depending on the cost constraint.
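The cost-constrained selection problem described in this abstract can be illustrated with a toy sketch. This is not the paper's Q- or A-Learning estimator: it assumes an outcome model has already been fitted and only shows the final step, choosing which plants to treat under a budget. The names `best_policy`, `weights`, `q_hat`, `costs`, and `budget` are all hypothetical, and the exhaustive search is only practical for a handful of plants.

```python
from itertools import product

def best_policy(weights, q_hat, costs, budget):
    """Search all treatment vectors that fit the budget (toy illustration).

    weights[i][j]: assumed influence of plant j on community i (bipartite network).
    q_hat: assumed fitted function mapping a community's treated exposure
           to its predicted reduction in hospitalizations.
    costs[j]: assumed cost of treating plant j.
    """
    best, best_benefit = None, float("-inf")
    for policy in product((0, 1), repeat=len(costs)):
        total_cost = sum(c for c, treated in zip(costs, policy) if treated)
        if total_cost > budget:
            continue  # infeasible under the cost constraint
        # Each community's exposure depends on every plant linked to it.
        benefit = sum(
            q_hat(sum(w * treated for w, treated in zip(row, policy)))
            for row in weights
        )
        if benefit > best_benefit:
            best, best_benefit = policy, benefit
    return best, best_benefit

# Two communities, three plants, made-up numbers.
weights = [[0.5, 0.5, 0.0],
           [0.0, 0.2, 0.8]]
policy, benefit = best_policy(weights, lambda e: 10 * e, costs=[3, 2, 4], budget=5)
```

Because treatment is assigned to plants while outcomes are measured in communities, the benefit of a policy is evaluated through the network weights rather than plant by plant.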

Optimizing Heat Alert Issuance for Public Health in the United States with Reinforcement Learning

Dec 21, 2023

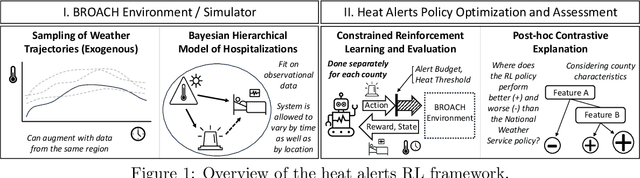

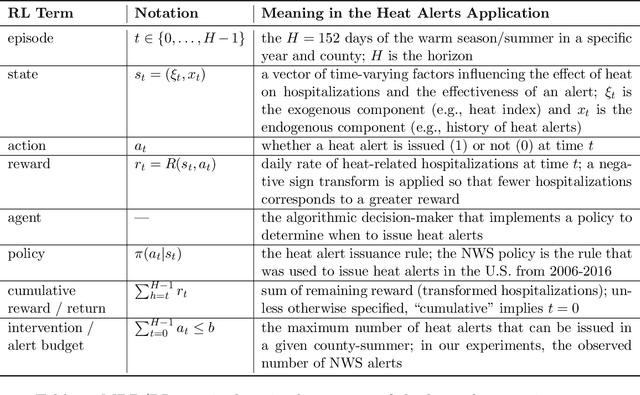

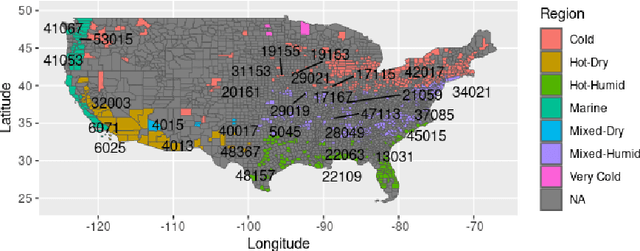

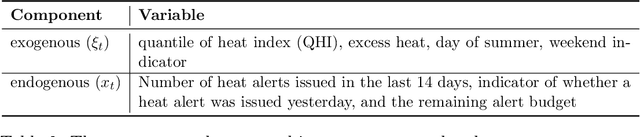

Abstract: Alerting the public when heat may harm their health is a crucial service, especially considering that extreme heat events will be more frequent under climate change. Current practice for issuing heat alerts in the US does not take advantage of modern data science methods for optimizing local alert criteria. Specifically, application of reinforcement learning (RL) has the potential to inform more health-protective policies, accounting for regional and sociodemographic heterogeneity as well as sequential dependence of alerts. In this work, we formulate the issuance of heat alerts as a sequential decision-making problem and develop modifications to the RL workflow to address challenges commonly encountered in environmental health settings. Key modifications include creating a simulator that pairs hierarchical Bayesian modeling of low-signal health effects with sampling of real weather trajectories (exogenous features), constraining the total number of alerts issued as well as preventing alerts on less-hot days, and optimizing location-specific policies. Post-hoc contrastive analysis offers insights into scenarios in which using RL for heat alert issuance may protect public health better than the current or alternative policies. This work contributes to a broader movement of advancing data-driven policy optimization for public health and climate change adaptation.
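The two action constraints mentioned in this abstract (a cap on the total number of alerts, and no alerts on less-hot days) can be sketched as a simple filter applied to a policy's daily preferences. This is a minimal illustration under assumed inputs, not the paper's RL workflow: `constrained_alerts`, `scores`, `heat_index`, `budget`, and `min_heat` are hypothetical names, and a positive score is assumed to mean the policy prefers to alert.

```python
def constrained_alerts(scores, heat_index, budget, min_heat):
    """Apply an alert budget and a heat threshold to a policy's daily scores.

    scores[t]: assumed policy preference for alerting on day t (> 0 means alert).
    heat_index[t]: assumed heat measure for day t.
    budget: maximum number of alerts allowed over the whole period.
    min_heat: days below this heat value can never receive an alert.
    """
    alerts = []
    remaining = budget
    for score, heat in zip(scores, heat_index):
        allowed = remaining > 0 and heat >= min_heat
        issue = allowed and score > 0
        alerts.append(issue)
        if issue:
            remaining -= 1  # each issued alert uses up part of the budget
    return alerts

# Five days, made-up numbers.
alerts = constrained_alerts(
    scores=[1, 1, -1, 1, 1],
    heat_index=[95, 80, 99, 100, 101],
    budget=2,
    min_heat=90,
)
```

In this toy run the second day is blocked by the heat threshold and the last day by the exhausted budget, which shows why alert decisions are sequentially dependent: issuing an alert early removes the option to issue one later.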
