Graham Finlayson

Unifying Optimization Methods for Color Filter Design

Jun 24, 2020

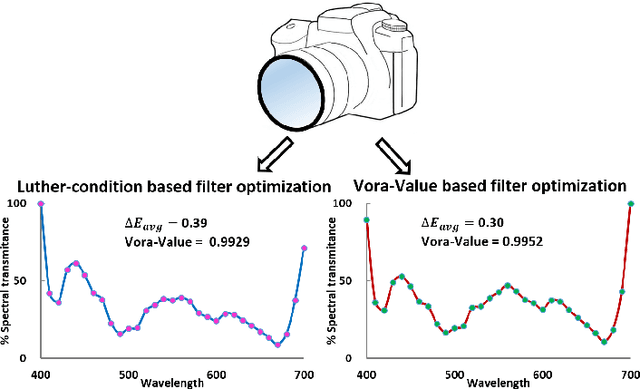

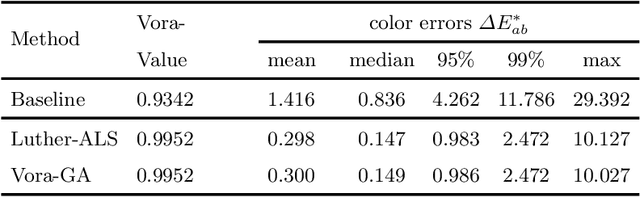

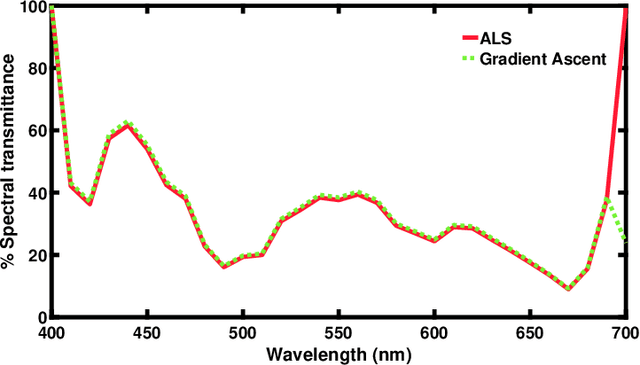

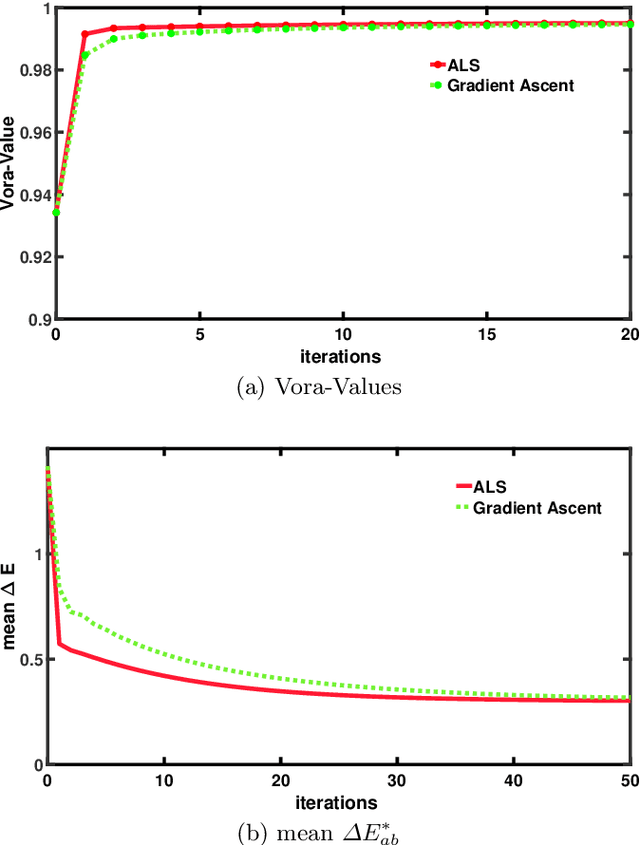

Abstract: Through optimization, we can solve for a filter such that a camera viewing the world through it becomes more colorimetric. Previous work solved for the filter that best satisfied the Luther condition: after filtering, the camera spectral sensitivities were approximately a linear transform of the CIE XYZ color matching functions. A more recent method optimized for the filter that maximized the Vora-Value (a measure of how close the vector spaces spanned by the camera sensors and the human vision sensors are). The optimized Luther- and Vora-filters differ from one another. In this paper, we begin by observing that the function defining the Vora-Value is equivalent to the Luther-condition optimization if we use an orthonormal basis of the XYZ color matching functions, i.e. if we linearly transform the XYZ sensitivities to an orthonormal basis. In this formulation, the Luther-optimization algorithm is shown to almost optimize the Vora-Value. Moreover, experiments demonstrate that the modified orthonormal Luther-method finds the same color filter as the Vora-Value filter optimization. Significantly, our modified algorithm is simpler in formulation and also converges faster than the direct Vora-Value method.
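A small numerical check may make the observation about the Vora-Value and the orthonormal Luther formulation concrete. The sketch below assumes that the Vora-Value for three sensors is tr(P_Q P_X)/3, where P_A is the orthogonal projector onto the column space of A. It also assumes that the "orthonormal Luther" objective is the least-squares error r of fitting an orthonormal basis U of the XYZ space from the filtered camera sensitivities diag(f)Q. Under those assumptions the two are tied by ν = 1 - r/3 for any fixed filter. The spectra are random stand-ins rather than real color matching functions or camera curves, the function names are hypothetical, and the paper's exact objective (fitting direction, weighting) may differ.

```python
import numpy as np


def projector(A):
    """Orthogonal projector onto the column space of A (n x k, full column rank)."""
    # For full-column-rank A, A @ pinv(A) equals A (A^T A)^{-1} A^T.
    return A @ np.linalg.pinv(A)


def vora_value(Q, X):
    """Vora-Value for two 3-sensor sets: trace(P_Q P_X) / 3, which lies in [0, 1]."""
    return float(np.trace(projector(Q) @ projector(X)) / 3.0)


def orthonormal_luther_residual(Q, X):
    """Least-squares error min_M ||Q M - U||_F^2, where U is an orthonormal
    basis of span(X) (assumed fitting direction: camera -> orthonormal XYZ)."""
    U, _ = np.linalg.qr(X)  # reduced QR: n x 3 with orthonormal columns
    M, *_ = np.linalg.lstsq(Q, U, rcond=None)
    return float(np.sum((Q @ M - U) ** 2))


# Synthetic stand-ins (NOT measured data): 31 spectral samples.
rng = np.random.default_rng(0)
n = 31
X = rng.random((n, 3))      # placeholder for the XYZ color matching functions
Q = rng.random((n, 3))      # placeholder for camera spectral sensitivities
f = rng.random(n) + 0.1     # a candidate filter transmittance
Qf = f[:, None] * Q         # filtered sensitivities, diag(f) Q

nu = vora_value(Qf, X)
r = orthonormal_luther_residual(Qf, X)
print(nu, 1.0 - r / 3.0)    # equal up to floating-point rounding
```

Under this assumed formulation, minimizing the residual over f is equivalent to maximizing the Vora-Value.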

NTIRE 2020 Challenge on Spectral Reconstruction from an RGB Image

May 07, 2020

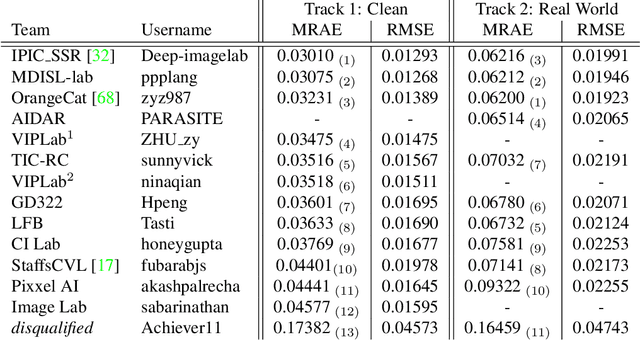

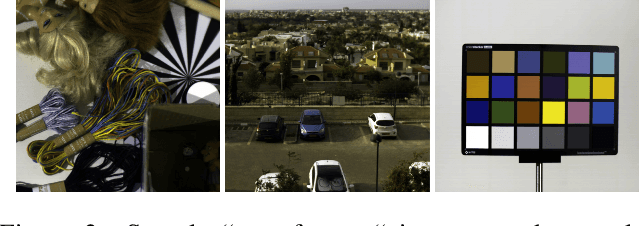

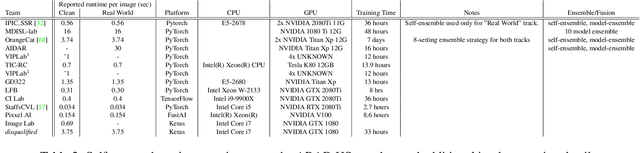

Abstract: This paper reviews the second challenge on spectral reconstruction from RGB images, i.e., the recovery of whole-scene hyperspectral (HS) information from a 3-channel RGB image. As in the previous challenge, two tracks were provided: (i) a "Clean" track, where HS images are estimated from noise-free RGB images that are themselves calculated numerically from the ground-truth HS images and supplied spectral sensitivity functions; and (ii) a "Real World" track, simulating capture by an uncalibrated and unknown camera, where the HS images are recovered from noisy, JPEG-compressed RGB images. A new natural hyperspectral image data set, larger than any previous one, is presented, containing a total of 510 HS images. The Clean and Real World tracks had 103 and 78 registered participants respectively, with 14 teams competing in the final testing phase. A description of the proposed methods is provided, alongside their challenge scores and an extensive evaluation of the top-performing methods. Together, these gauge the state of the art in spectral reconstruction from an RGB image.
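For the Clean track, the RGB inputs are described as being computed numerically from the ground-truth HS images and the supplied spectral sensitivity functions. A minimal sketch of that step follows. It assumes a plain per-pixel weighted sum over spectral bands with no normalization, clipping or noise, although the challenge's actual pipeline may include such steps. The function name `simulate_rgb` and the 31-band sampling are illustrative assumptions.

```python
import numpy as np


def simulate_rgb(hs_cube, sensitivities):
    """Project a hyperspectral cube onto camera sensitivities.

    hs_cube:       (H, W, B) spectral samples per pixel
    sensitivities: (B, 3) spectral sensitivity functions, sampled at the
                   same B wavelengths as the cube
    Returns an (H, W, 3) RGB image computed as a discrete sum over bands.
    """
    hs_cube = np.asarray(hs_cube, dtype=float)
    sensitivities = np.asarray(sensitivities, dtype=float)
    if hs_cube.shape[-1] != sensitivities.shape[0]:
        raise ValueError("cube and sensitivities must share the band axis")
    # rgb[h, w, c] = sum_b hs[h, w, b] * S[b, c]
    return np.einsum("hwb,bc->hwc", hs_cube, sensitivities)


# Example with random placeholder data (31 bands assumed, e.g. 400-700 nm at 10 nm).
rng = np.random.default_rng(1)
cube = rng.random((4, 5, 31))
S = rng.random((31, 3))
rgb = simulate_rgb(cube, S)
print(rgb.shape)
```

The Real World track's camera noise and JPEG compression are deliberately not modelled in this sketch.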

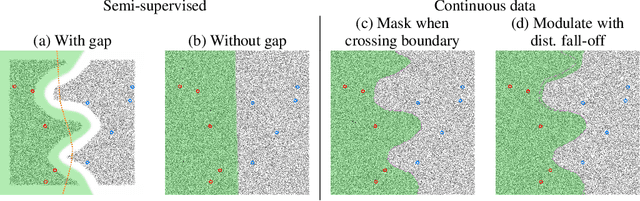

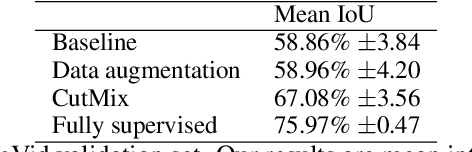

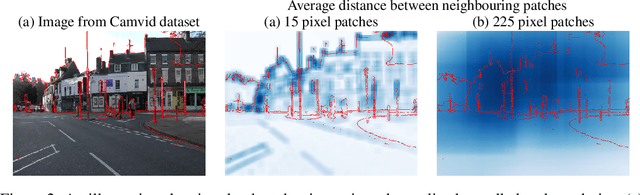

Consistency regularization and CutMix for semi-supervised semantic segmentation

Jun 05, 2019

Abstract: Consistency regularization describes a class of approaches that have yielded groundbreaking results in semi-supervised classification problems. Prior work has established the cluster assumption, under which the data distribution consists of uniform class clusters of samples separated by low-density regions, as key to its success. We analyse the problem of semantic segmentation and find that its data distribution does not exhibit low-density regions separating classes, and we offer this as an explanation for why semi-supervised segmentation is a challenging problem. We adapt the recently proposed CutMix regularizer for semantic segmentation and find that it is able to overcome this obstacle, leading to a successful application of consistency regularization to semi-supervised semantic segmentation.
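The idea of using CutMix as a consistency regularizer for segmentation can be sketched in NumPy. The specific choices here are assumptions rather than details given in the abstract: a single random rectangle covering about half the image, a teacher whose predictions on the two unmixed images are blended with the same mask to form the target, and a mean-squared-error consistency loss. `student_fn` and `teacher_fn` stand for any functions mapping a (C, h, w) image to per-pixel class scores, and all names are hypothetical.

```python
import numpy as np


def box_mask(h, w, rng, area=0.5):
    """Binary (h, w) mask: one axis-aligned rectangle of ones covering about
    `area` of the image, placed uniformly at random."""
    bh = int(round(h * np.sqrt(area)))
    bw = int(round(w * np.sqrt(area)))
    top = rng.integers(0, h - bh + 1)
    left = rng.integers(0, w - bw + 1)
    m = np.zeros((h, w), dtype=float)
    m[top:top + bh, left:left + bw] = 1.0
    return m


def mix(a, b, m):
    """Take a where m == 1 and b elsewhere; a, b: (C, h, w), m: (h, w)."""
    return m * a + (1.0 - m) * b


def cutmix_consistency_loss(student_fn, teacher_fn, x_a, x_b, rng):
    """MSE between the student's prediction on the mixed image and the
    mask-mixed teacher predictions on the original images.
    Returns (loss, mask)."""
    m = box_mask(x_a.shape[-2], x_a.shape[-1], rng)
    target = mix(teacher_fn(x_a), teacher_fn(x_b), m)
    pred = student_fn(mix(x_a, x_b, m))
    return float(np.mean((pred - target) ** 2)), m


# Toy usage: np.tanh acts as a stand-in "network" that works pixel by pixel.
rng = np.random.default_rng(0)
x_a = rng.random((3, 32, 32))
x_b = rng.random((3, 32, 32))
loss, mask = cutmix_consistency_loss(np.tanh, np.tanh, x_a, x_b, rng)
print(loss, mask.sum())
```

A purely per-pixel model commutes with the mask and so incurs zero loss. A real segmentation network uses spatial context, so its prediction on the mixed image generally differs from the mixed targets, and that discrepancy is what the regularizer penalizes.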

Interactive Illumination Invariance

Jul 20, 2016

Abstract: Illumination effects cause problems for many computer vision algorithms. We present a user-friendly interactive system for robust illumination-invariant image generation. Compared with previous automated approaches to deriving illumination-invariant images, our system enables users to specify a particular kind of illumination variation for removal. The derivation of the illumination-invariant image is guided by the user input: a stroke that defines an area covering a set of pixels whose intensities are influenced predominantly by the illumination variation. This additional flexibility enhances robustness when processing non-linearly rendered images and images of scenes whose illumination variations are difficult to estimate automatically. Finally, we present some evaluation results for our method.
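One plausible way to turn such a stroke into an invariant image is borrowed from the well-known log-chromaticity projection approach to illumination invariance, and it is not necessarily the method of this paper. The steps are: map pixels to band-ratio log-chromaticities, take the principal direction of the stroke pixels as the direction of illumination variation, and project every pixel onto the orthogonal direction. Linear RGB input, G as the reference channel, and the function names are all assumptions of this sketch.

```python
import numpy as np


def log_chromaticity(rgb, eps=1e-6):
    """Map (..., 3) RGB to (..., 2) log-chromaticities (log R/G, log B/G).
    Values are floored at eps to avoid log(0)."""
    rgb = np.maximum(np.asarray(rgb, dtype=float), eps)
    return np.stack([np.log(rgb[..., 0] / rgb[..., 1]),
                     np.log(rgb[..., 2] / rgb[..., 1])], axis=-1)


def illumination_direction(stroke_rgb):
    """Unit vector of largest variation of the stroke pixels in
    log-chromaticity space (first principal direction)."""
    chi = log_chromaticity(stroke_rgb).reshape(-1, 2)
    chi = chi - chi.mean(axis=0)
    _, _, vt = np.linalg.svd(chi, full_matrices=False)
    return vt[0]


def invariant_image(image_rgb, direction):
    """Project each pixel's log-chromaticity onto the direction orthogonal
    to the estimated illumination variation; returns a 1-channel image."""
    perp = np.array([-direction[1], direction[0]])
    return log_chromaticity(image_rgb) @ perp


# Synthetic "stroke": one surface whose log-chromaticity moves along (0.8, -0.6).
t = np.linspace(-1.0, 1.0, 50)
stroke = np.array([0.4, 0.5, 0.3]) * np.exp(np.outer(t, [0.8, 0.0, -0.6]))
d = illumination_direction(stroke)
inv = invariant_image(stroke, d)
print(d, inv.std())  # the invariant is essentially constant along the stroke
```

Projecting out only the user-indicated direction leaves other chromaticity differences between surfaces in the output, which is what makes the single-channel result usable as an illumination-invariant image.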
