Graham D. Finlayson

Matched Illumination

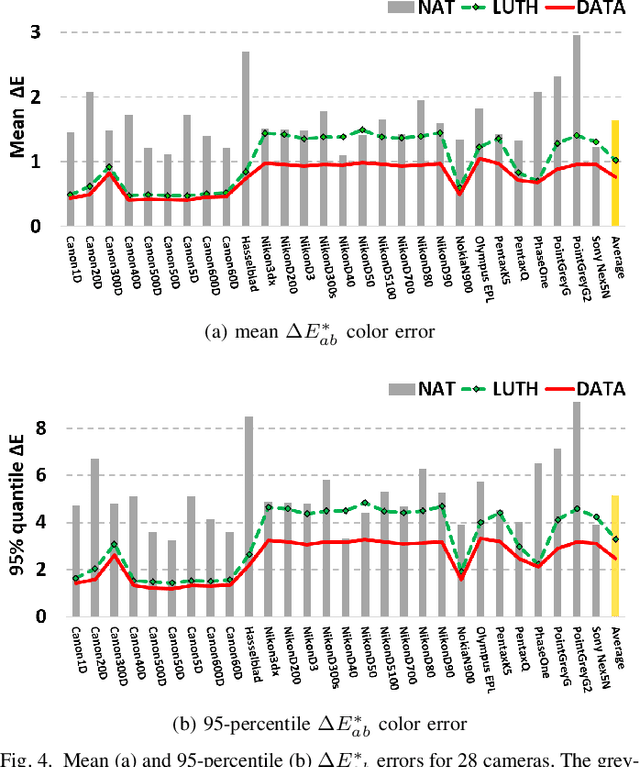

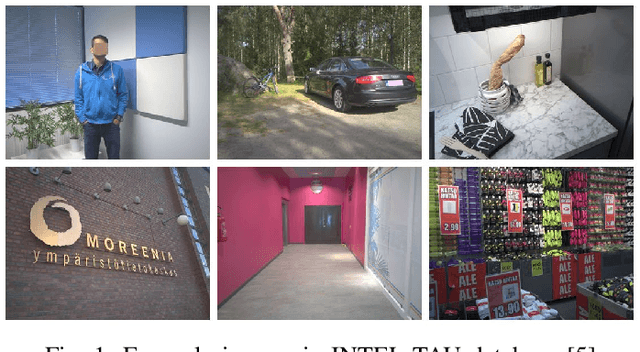

Jan 27, 2022

Abstract: In previous work, it was shown that a camera can theoretically be made more colorimetric - its RGBs become more linearly related to XYZ tristimuli - by placing a specially designed color filter in the optical path. While the prior art demonstrated the principle, the optimal color-correction filters were not actually manufactured. In this paper, we provide a novel way of creating the color filtering effect without making a physical filter: we modulate the spectrum of the light source by using a spectrally tunable lighting system to recast the prefiltering effect from a lighting perspective. According to our method, if we wish to measure color under a D65 light, we relight the scene with a modulated D65 spectrum where the light modulation mimics the effect of color prefiltering in the prior art. We call our optimally modulated light the matched illumination. In experiments using synthetic and real measurements, we show that color measurement errors can be reduced by about 50% or more on simulated data and 25% or more on real images when the matched illumination is used.
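The equivalence between prefiltering the camera and modulating the light can be illustrated with a minimal sketch. It assumes an idealised linear image formation model (response = sum over wavelengths of light × reflectance × sensitivity); the five-sample spectra and the names `filter_t`, `response`, `via_filter` and `via_light` are made up for illustration and do not come from the paper.

```python
# Toy spectra sampled at 5 wavelengths; every number here is hypothetical.
illuminant = [0.9, 1.0, 1.1, 1.0, 0.8]    # stand-in for a D65 spectrum
reflectance = [0.2, 0.4, 0.6, 0.5, 0.3]   # one surface
sensor = [0.1, 0.5, 0.9, 0.4, 0.1]        # one camera channel
filter_t = [0.8, 0.6, 1.0, 0.7, 0.9]      # hypothetical filter transmittance


def response(light, surface, sensitivity):
    """Linear sensor response: sum of light * reflectance * sensitivity."""
    return sum(e * s * q for e, s, q in zip(light, surface, sensitivity))


# Option 1: place the filter in front of the camera.
filtered_sensor = [f * q for f, q in zip(filter_t, sensor)]
via_filter = response(illuminant, reflectance, filtered_sensor)

# Option 2: leave the camera alone and modulate the light instead.
modulated_light = [f * e for f, e in zip(filter_t, illuminant)]
via_light = response(modulated_light, reflectance, sensor)

print(via_filter, via_light)  # the two responses agree up to rounding
```

Because the filter transmittance enters the model as a per-wavelength multiplication, it can be attached to either the sensor or the light without changing the response, which is the idea the abstract describes.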

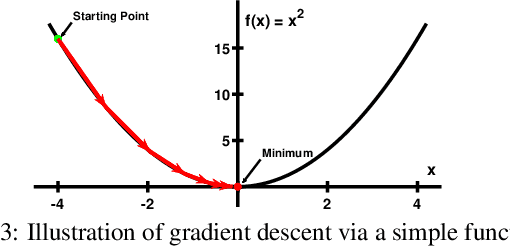

Mathematical derivation for Vora-Value based filter design method: Gradient and Hessian

Oct 04, 2020

Abstract: In this paper, we present the detailed mathematical derivation of the gradient and Hessian matrix for the Vora-Value based colorimetric filter optimization. We recapitulate in full the steps involved in differentiating the objective function and show that the Hessian matrix is positive-definite when a positive regularizer is applied. This paper serves as supplementary material for our paper on colorimetric filter design theory.

Designing a Color Filter via Optimization of Vora-Value for Making a Camera more Colorimetric

May 13, 2020

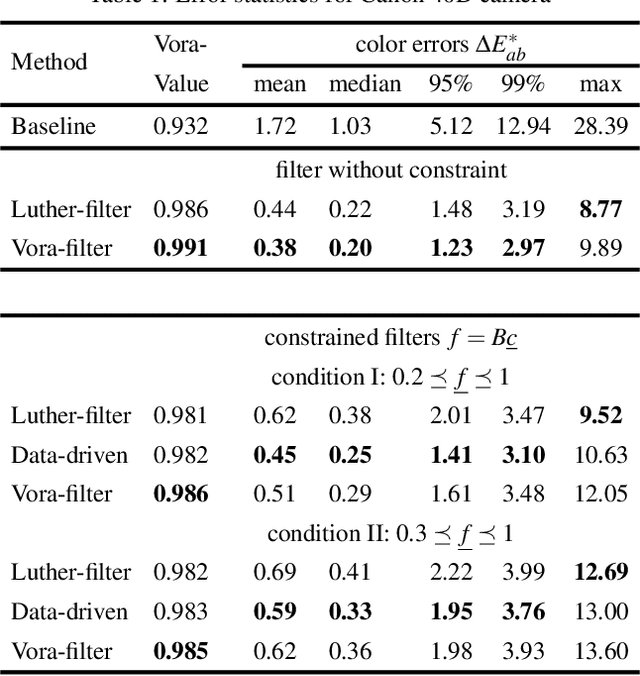

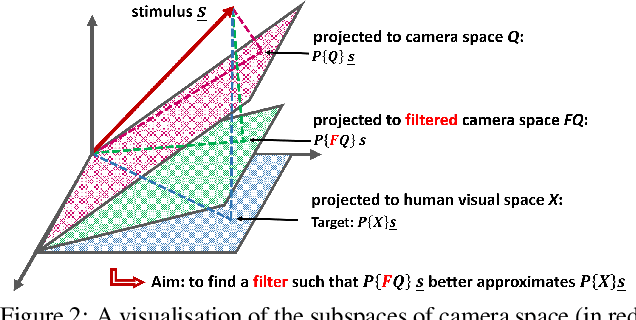

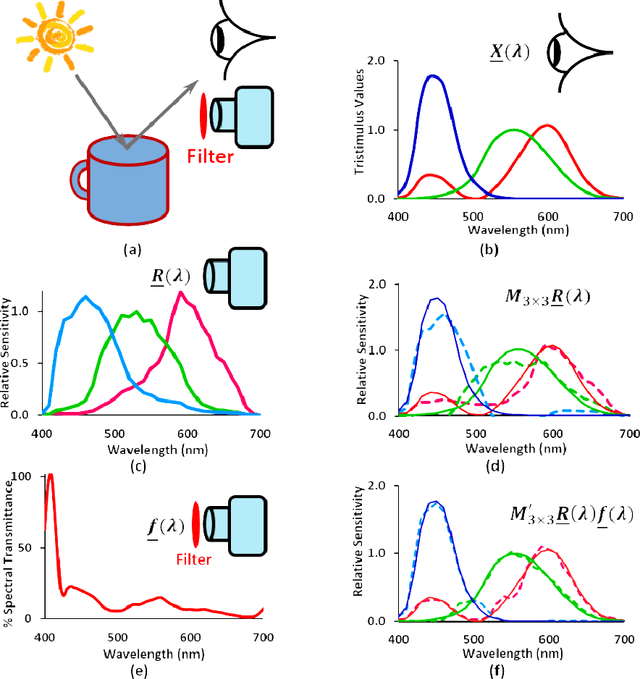

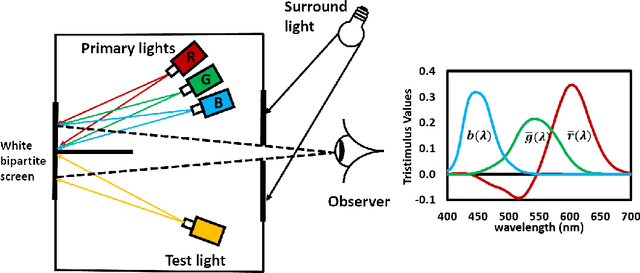

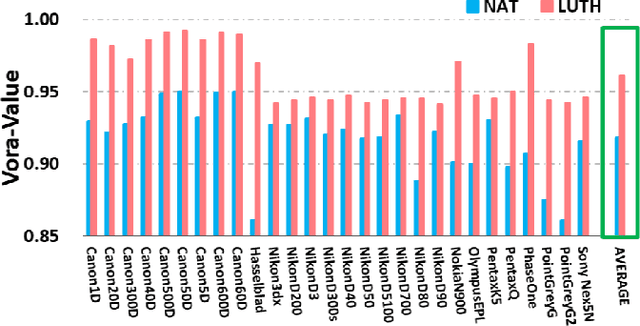

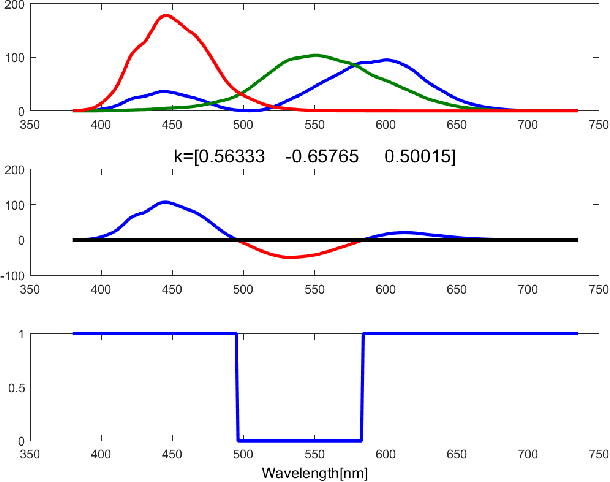

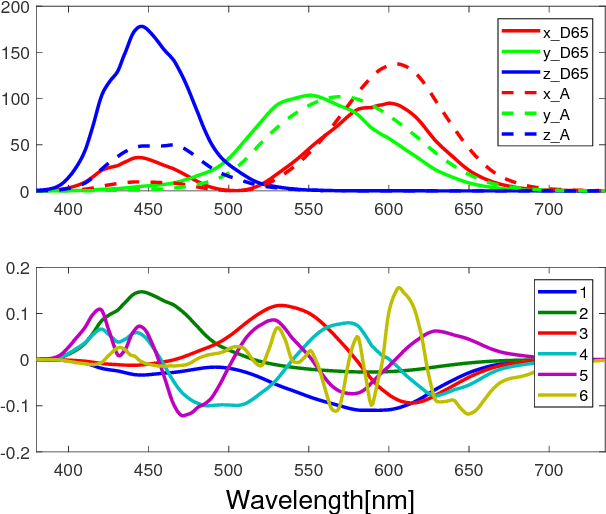

Abstract: The Luther condition states that if the spectral sensitivity responses of a camera are a linear transform from the color matching functions of the human visual system, the camera is colorimetric. Previous work proposed to solve for a filter which, when placed in front of a camera, results in sensitivities that best satisfy the Luther condition. By construction, the prior art solves for a filter for a given set of human visual sensitivities, e.g. the XYZ color matching functions or the cone response functions. However, depending on the target spectral sensitivity set, a different optimal filter is found. This paper begins with the observation that the cone fundamentals, XYZ color matching functions or any linear combination thereof span the same 3-dimensional subspace. Thus, we set out to solve for a filter that makes the vector space spanned by the filtered camera sensitivities as similar as possible to the space spanned by human vision sensors. We argue that the Vora-Value is a suitable way to measure subspace similarity and we develop an optimization method for finding a filter that maximizes the Vora-Value measure. Experiments demonstrate that our new optimization leads to filtered camera sensitivities which have a significantly higher Vora-Value compared with antecedent methods.
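As a rough illustration of subspace similarity, the sketch below computes the Vora-Value in its usual form, trace(P_Q P_X) / 3, where P_Q and P_X are the orthogonal projectors onto the two sensitivity subspaces. This is an assumption about the definition rather than code from the paper; with orthonormal bases U and V the trace equals the sum of squared inner products between basis vectors. The helper names and the five-sample toy sensitivities are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def orthonormal_basis(vectors):
    """Modified Gram-Schmidt; assumes the input vectors are linearly independent."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            c = dot(w, b)
            w = [wi - c * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(dot(w, w))
        basis.append([wi / norm for wi in w])
    return basis


def vora_value(sens_a, sens_b):
    """trace(P_a P_b) / dim, computed from orthonormal bases of both subspaces."""
    u = orthonormal_basis(sens_a)
    v = orthonormal_basis(sens_b)
    return sum(dot(ui, vj) ** 2 for ui in u for vj in v) / len(v)


def combine(weights, vectors):
    """Linear combination of the given vectors."""
    return [sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(len(vectors[0]))]


# Toy 'human' sensitivities sampled at 5 wavelengths (made-up numbers).
xyz = [[1, 0, 0, 1, 0], [0, 1, 0, 0, 1], [0, 0, 1, 1, 1]]
# A camera whose sensitivities are an invertible linear mix of xyz.
mixed = [combine(w, xyz) for w in ([1, 1, 0], [0, 1, -1], [1, 0, 2])]
# A camera spanning a different 3-dimensional subspace.
other = [[1, 0, 0, 0, 0], [0, 1, 0, 0, 0], [0, 0, 1, 0, 0]]

print(vora_value(mixed, xyz))  # same subspace, so the value is 1 up to rounding
print(vora_value(other, xyz))  # different subspace, so the value is below 1
```

The first result shows why the measure suits this problem: any invertible linear mix of the target sensitivities scores the same, so the choice between XYZ and cone fundamentals as the target no longer matters.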

Designing Color Filters that Make Cameras More Colorimetric

Mar 27, 2020

Abstract: When we place a colored filter in front of a camera, the effective camera response functions are equal to the given camera spectral sensitivities multiplied by the filter spectral transmittance. In this paper, we solve for the filter which returns the modified sensitivities as close to being a linear transformation from the color matching functions of the human visual system as possible. When this linearity condition - sometimes called the Luther condition - is approximately met, the 'camera+filter' system can be used for accurate color measurement. Then, we reformulate our filter design optimisation to make the sensor responses as close to the CIEXYZ tristimulus values as possible, given knowledge of real measured surface and illuminant spectra. This data-driven method is in turn extended to incorporate constraints on the filter (smoothness and bounded transmission). Also, because the way the optimisation is initialised is shown to affect the performance of the solved-for filters, a multi-initialisation optimisation is developed. Experiments demonstrate that, by taking pictures through our optimised color filters, we can make cameras significantly more colorimetric.

Physically Plausible Spectral Reconstruction from RGB Images

Jan 02, 2020

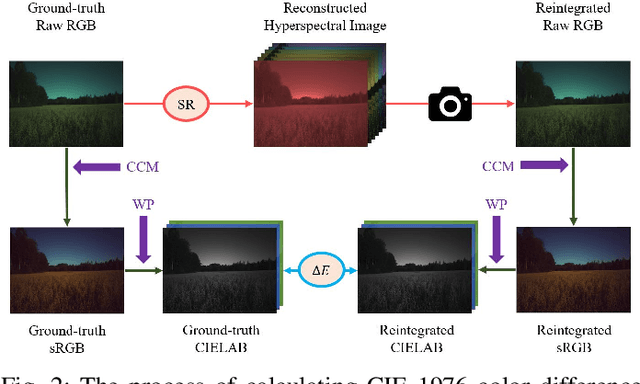

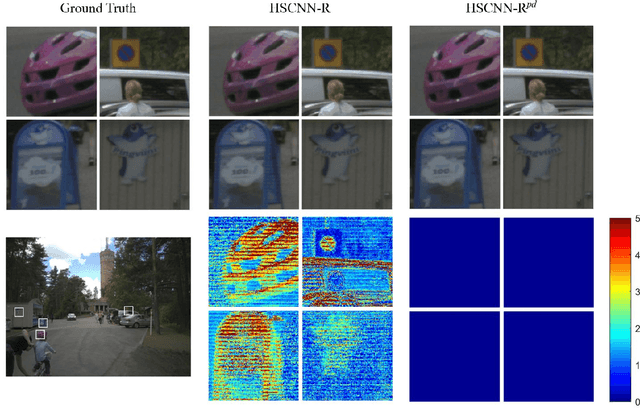

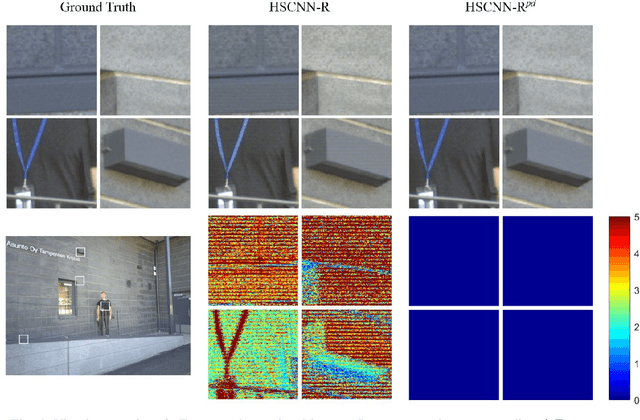

Abstract: Recently, Convolutional Neural Networks (CNN) have been used to reconstruct hyperspectral information from RGB images. Moreover, this spectral reconstruction problem (SR) can often be solved with good (low) error. However, these methods are not physically plausible: that is, when the recovered spectra are reintegrated with the underlying camera sensitivities, the resulting predicted RGB is not the same as the actual RGB, and sometimes this discrepancy can be large. The problem is further compounded by exposure change. Indeed, most learning-based SR models train for a fixed exposure setting and we show that this can result in poor performance when exposure varies. In this paper we show how CNN learning can be extended so that physical plausibility is enforced and the problem resulting from changing exposures is mitigated. Our SR solution improves the state-of-the-art spectral recovery performance under varying exposure conditions while simultaneously ensuring physical plausibility (the recovered spectra reintegrate to the input RGBs exactly).
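The physical plausibility test described in the abstract can be sketched as a simple check: reintegrate a recovered spectrum with the camera sensitivities and compare against the observed RGB. This is only an illustration of the check itself, not of the paper's CNN method; the function names, tolerance, and four-sample toy data are hypothetical.

```python
def reintegrate(spectrum, sensitivities):
    """Predict RGB by summing spectrum * sensitivity for each channel."""
    return [sum(s * q for s, q in zip(spectrum, channel))
            for channel in sensitivities]


def is_physically_plausible(spectrum, sensitivities, rgb, tol=1e-6):
    """True if the recovered spectrum reproduces the observed RGB within tol."""
    predicted = reintegrate(spectrum, sensitivities)
    return all(abs(p - c) <= tol for p, c in zip(predicted, rgb))


# Toy camera with three channels sampled at 4 wavelengths (made-up numbers).
sensitivities = [
    [0.1, 0.2, 0.6, 0.9],   # R
    [0.2, 0.8, 0.5, 0.1],   # G
    [0.9, 0.4, 0.1, 0.0],   # B
]
true_spectrum = [0.3, 0.5, 0.4, 0.2]
rgb = reintegrate(true_spectrum, sensitivities)

# A recovered spectrum that is slightly too bright everywhere.
bad_spectrum = [1.1 * s for s in true_spectrum]

print(is_physically_plausible(true_spectrum, sensitivities, rgb))
print(is_physically_plausible(bad_spectrum, sensitivities, rgb))
```

Because reintegration is linear, scaling a spectrum scales its predicted RGB by the same factor, which is one reason a model trained at a fixed exposure can fail this check when exposure changes.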

Spherical sampling methods for the calculation of metamer mismatch volumes

Jan 23, 2019

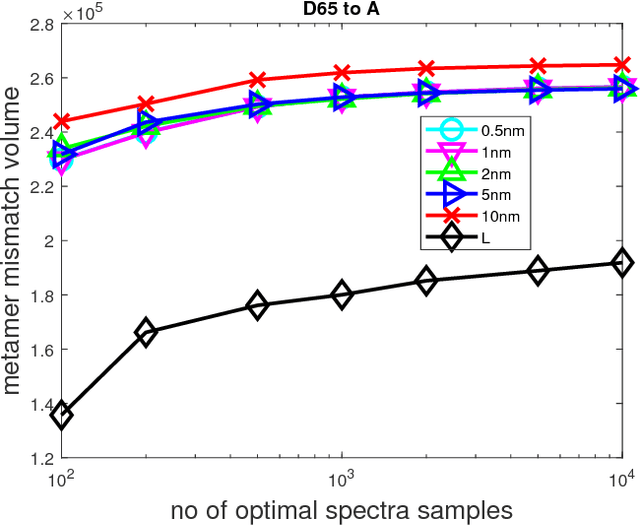

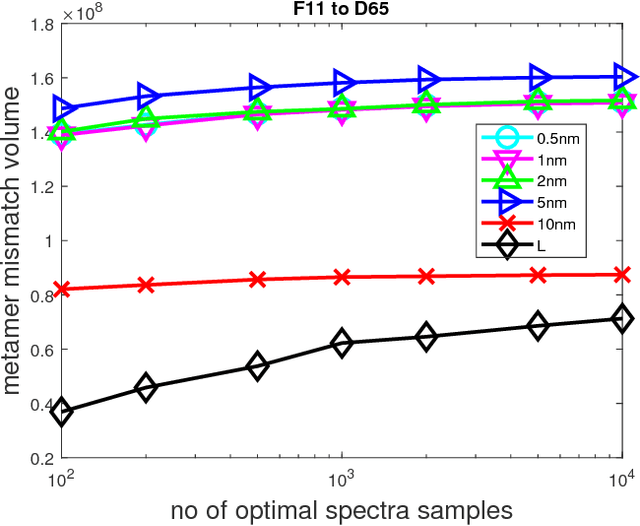

Abstract: In this paper, we propose two methods of calculating theoretically maximal metamer mismatch volumes. Unlike prior art techniques, our methods do not make any assumptions on the shape of spectra on the boundary of the mismatch volumes. Both methods utilize a spherical sampling approach, but they calculate mismatch volumes in two different ways. The first method uses a linear programming optimization, while the second is a computational geometry approach based on half-space intersection. We show that under certain conditions the theoretically maximal metamer mismatch volume is significantly larger than the one approximated using a prior art method.

* One print or electronic copy may be made for personal use only. Systematic reproduction and distribution, duplication of any material in this paper for a fee or for commercial purposes, or modifications of this paper are prohibited. Optical Society of America

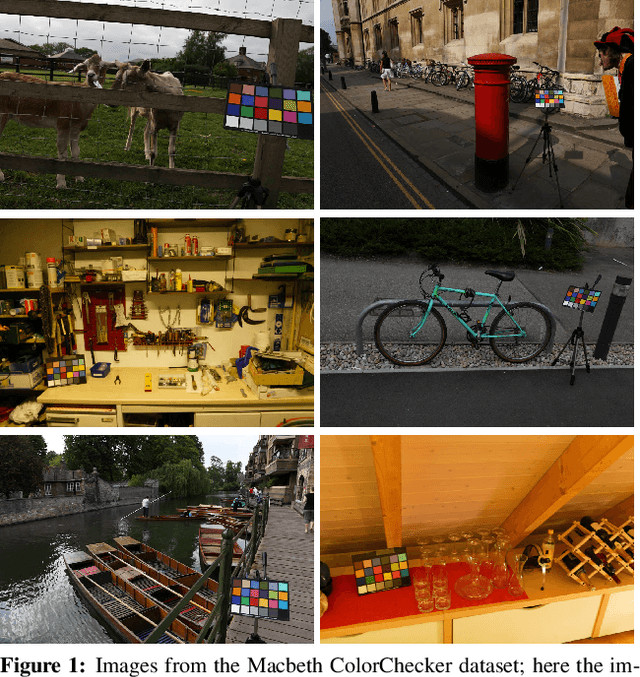

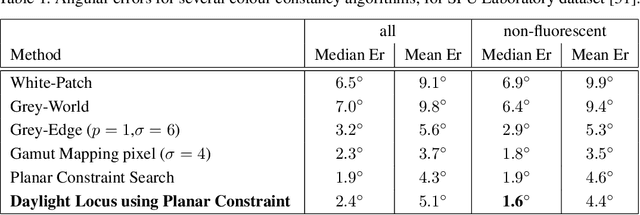

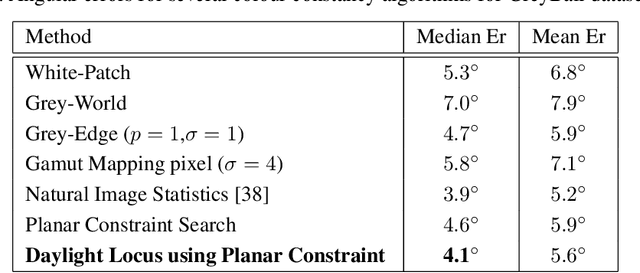

Rehabilitating the ColorChecker Dataset for Illuminant Estimation

Sep 17, 2018

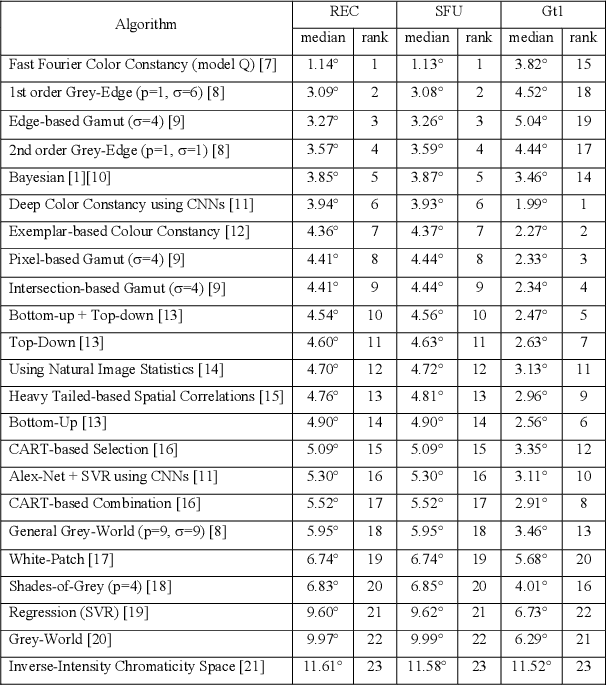

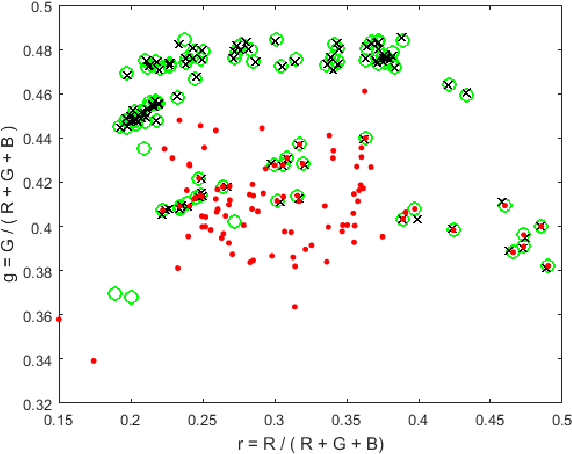

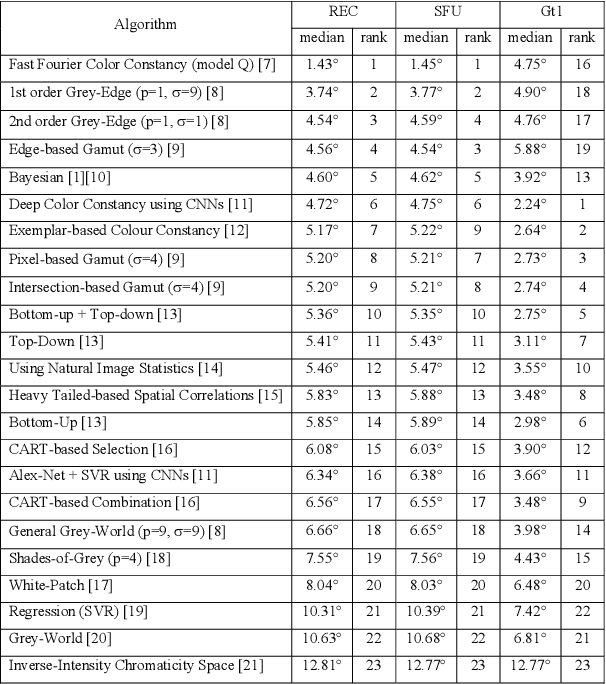

Abstract: In previous work, it was shown that there is a curious problem with the benchmark ColorChecker dataset for illuminant estimation. To wit, this dataset has at least 3 different sets of ground-truths. Typically, for a single algorithm a single ground-truth is used. But then different algorithms, whose performance is measured with respect to different ground-truths, are compared against each other and then ranked. This makes no sense. We show in this paper that there are also errors in how each ground-truth set was calculated. As a result, all performance rankings based on the ColorChecker dataset - and there are scores of these - are inaccurate. In this paper, we re-generate a new 'recommended' set of ground-truths based on the calculation methodology described by Shi and Funt. We then review the performance evaluation of a range of illuminant estimation algorithms. Compared with the legacy ground-truths, we find that the difference in how algorithms perform can be large, with many local rankings of algorithms being reversed. Finally, we draw the reader's attention to our new 'open' data repository which, we hope, will allow the ColorChecker set to be rehabilitated and once again to become a useful benchmark for illuminant estimation algorithms.

Concise Radiometric Calibration Using The Power of Ranking

Mar 15, 2018

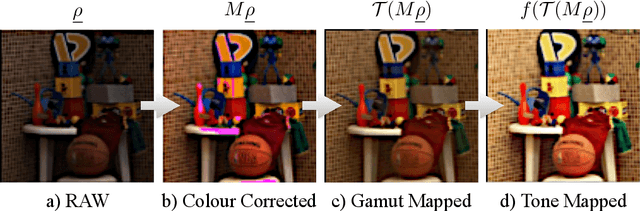

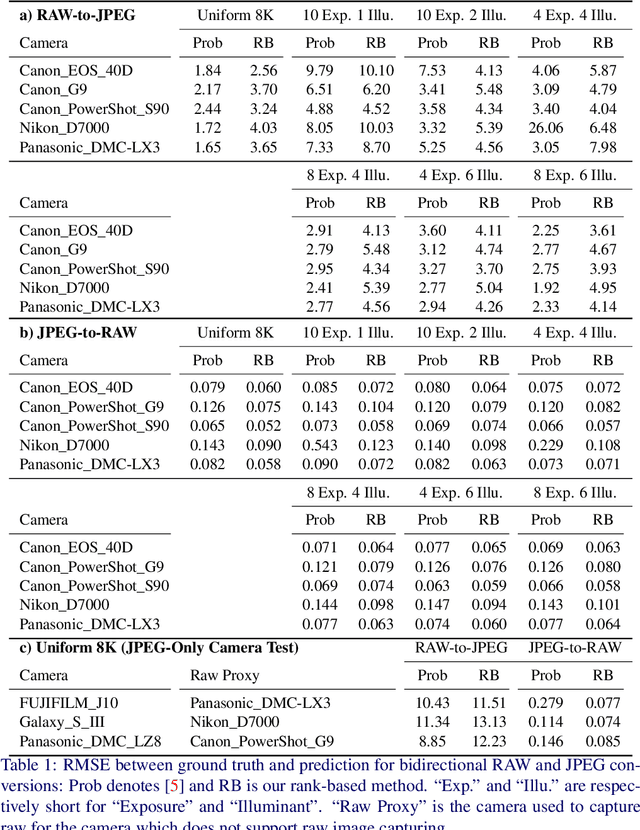

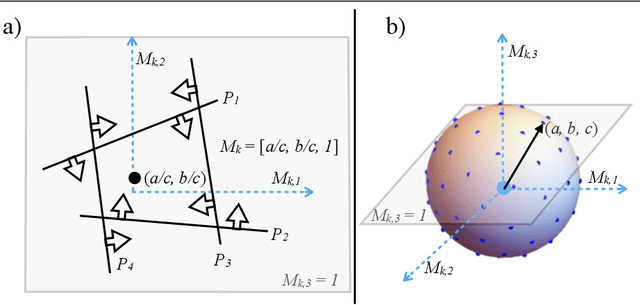

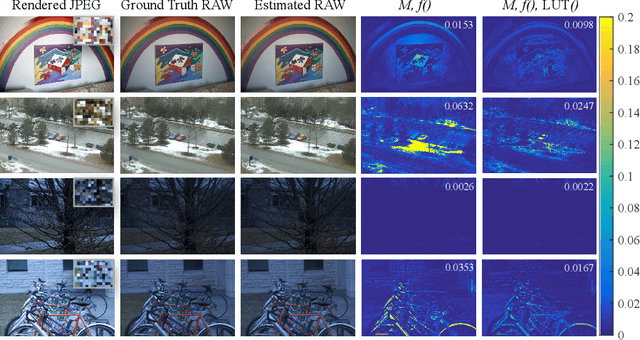

Abstract: Compared with raw images, the more common JPEG images are less useful for machine vision algorithms and professional photographers because JPEG-sRGB does not preserve a linear relation between pixel values and the light measured from the scene. A camera is said to be radiometrically calibrated if there is a computational model which can predict how the raw linear sensor image is mapped to the corresponding rendered image (e.g. JPEGs) and vice versa. This paper begins with the observation that the rank order of pixel values is mostly preserved post colour correction. We show that this observation is the key to solving for the whole camera pipeline (colour correction, tone and gamut mapping). Our rank-based calibration method is simpler than the prior art and so is parametrised by fewer variables which, concomitantly, can be solved for using less calibration data. Another advantage is that we can derive the camera pipeline from a single pair of raw-JPEG images. Experiments demonstrate that our method delivers state-of-the-art results (especially for the most interesting case of JPEG to raw).

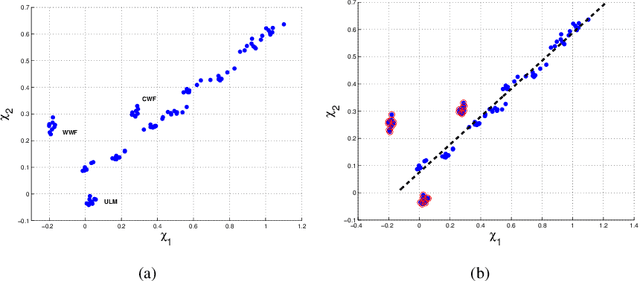

Camera Calibration for Daylight Specular-Point Locus

Dec 12, 2017

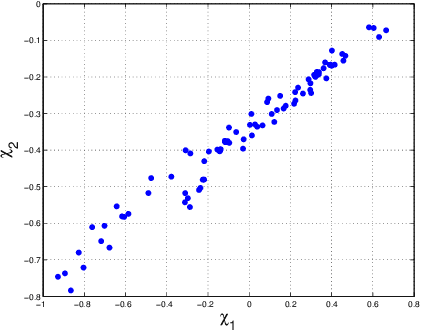

Abstract: In this paper we present a new camera calibration method aimed at finding a straight-line locus, in a special colour feature space, that is traversed by daylights and is also approximately followed by specular points. The aim of the calibration is to enable recovering the colour of the illuminant in a scene, using the calibrated camera. First we prove theoretically that any candidate specular points, for an image that is generated by a specific camera and taken under a daylight, must lie on a straight line in log-chromaticity space, for a chromaticity that is generated using a geometric-mean denominator. Use is made of the assumptions that daylight illuminants can be approximated using Planckians and that camera sensors are narrowband or can be made so by spectral sharpening. Then we show how a particular camera can be calibrated so as to discover this locus. As applications we use this curve for illuminant detection, and also for re-lighting of images to show how they would appear under lighting having a different colour temperature.
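A log-chromaticity with a geometric-mean denominator can be sketched as follows. This assumes the common form log(c_k / (R·G·B)^(1/3)) for each channel k; the abstract does not spell out the formula, so treat the function `log_chromaticity` and the sample pixel as illustrative only.

```python
import math


def log_chromaticity(rgb):
    """Log of each channel divided by the geometric mean of the three channels.

    Assumes all three channel values are strictly positive.
    """
    geo_mean = (rgb[0] * rgb[1] * rgb[2]) ** (1.0 / 3.0)
    return [math.log(c / geo_mean) for c in rgb]


pixel = [0.4, 0.3, 0.2]              # made-up linear RGB
brighter = [2.0 * c for c in pixel]  # same colour, double the intensity

chroma = log_chromaticity(pixel)
chroma_bright = log_chromaticity(brighter)

print(chroma)
print(sum(chroma))  # the three components sum to zero up to rounding
```

Two properties follow directly from this form: the features are unchanged by a uniform intensity scaling, and the three components sum to zero, so every pixel lies on a plane and the feature space is effectively two-dimensional.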

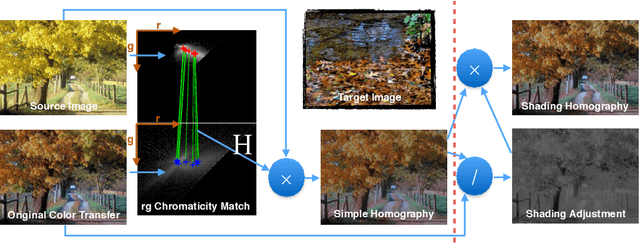

Recoding Color Transfer as a Color Homography

Aug 04, 2016

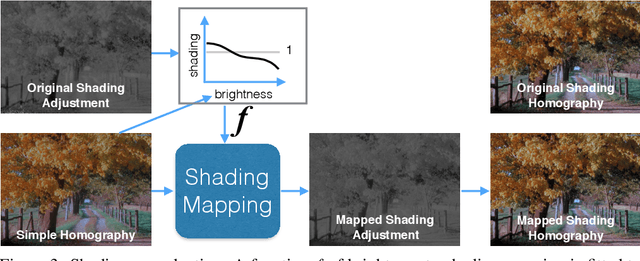

Abstract: Color transfer is an image editing process that adjusts the colors of a picture to match a target picture's color theme. A natural color transfer not only matches the color styles but also prevents after-transfer artifacts due to image compression, noise, and gradient smoothness change. The recently discovered color homography theorem proves that colors across a change in photometric viewing condition are related by a homography. In this paper, we propose a color-homography-based color transfer decomposition which encodes color transfer as a combination of chromaticity shift and shading adjustment. A powerful form of shading adjustment is shown to be a global shading curve by which the same shading homography can be applied elsewhere. Our experiments show that the proposed color transfer decomposition provides a very close approximation to many popular color transfer methods. The advantage of our approach is that the learned color transfer can be applied to many other images (e.g. other frames in a video), rather than on a frame-to-frame basis. We demonstrate two applications for color transfer enhancement and video color grading re-application. This simple model of color transfer is also important for future color transfer algorithm design.
