Gloria Beraldo

Causality-enhanced Decision-Making for Autonomous Mobile Robots in Dynamic Environments

Apr 16, 2025

Abstract: The growing integration of robots in shared environments -- such as warehouses, shopping centres, and hospitals -- demands a deep understanding of the underlying dynamics and human behaviours, including how, when, and where individuals engage in various activities and interactions. This knowledge goes beyond simple correlation studies and requires a more comprehensive causal analysis. By leveraging causal inference to model cause-and-effect relationships, we can better anticipate critical environmental factors and enable autonomous robots to plan and execute tasks more effectively. To this end, we propose a novel causality-based decision-making framework that reasons over a learned causal model to predict battery usage and human obstructions, and to understand how these factors could influence robot task execution. This reasoning framework assists the robot in deciding when and how to complete a given task. To achieve this, we also developed PeopleFlow, a new Gazebo-based simulator designed to model context-sensitive human-robot spatial interactions in shared workspaces. PeopleFlow features realistic human and robot trajectories influenced by contextual factors such as time, environment layout, and robot state, and can simulate a large number of agents. While the simulator is general-purpose, in this paper we focus on a warehouse-like environment as a case study, where we conduct an extensive evaluation benchmarking our causal approach against a non-causal baseline. Our findings demonstrate the efficacy of the proposed solutions, highlighting how causal reasoning enables autonomous robots to operate more efficiently and safely in dynamic environments shared with humans.

Experimental Evaluation of ROS-Causal in Real-World Human-Robot Spatial Interaction Scenarios

Jun 07, 2024

Abstract: Deploying robots in human-shared environments requires a deep understanding of how nearby agents and objects interact. Employing causal inference to model cause-and-effect relationships facilitates the prediction of human behaviours and enables the anticipation of robot interventions. However, a significant challenge arises because existing causal discovery methods lack an implementation within the ROS ecosystem, the de facto standard framework in robotics, which hinders their effective utilisation on real robots. To bridge this gap, in our previous work we proposed ROS-Causal, a ROS-based framework designed for onboard data collection and causal discovery in human-robot spatial interactions. In this work, we present an experimental evaluation of ROS-Causal both in simulation and on a new dataset of human-robot spatial interactions in a lab scenario, to assess its performance and effectiveness. Our analysis demonstrates the efficacy of this approach, showcasing how causal models can be extracted directly onboard by robots during data collection. The online causal models generated from the simulation are consistent with those from lab experiments. These findings can help researchers enhance the performance of robotic systems in shared environments, first by studying the causal relations between variables in simulation without real people, and then by facilitating the actual robot deployment in real human environments. ROS-Causal: https://lcastri.github.io/roscausal

ROS-Causal: A ROS-based Causal Analysis Framework for Human-Robot Interaction Applications

Feb 29, 2024

Abstract: Deploying robots in human-shared spaces requires understanding interactions among nearby agents and objects. Modelling cause-and-effect relations through causal inference aids in predicting human behaviours and anticipating robot interventions. However, a critical challenge arises because existing causal discovery methods currently lack an implementation inside the ROS ecosystem, the de facto standard in robotics, which hinders their effective utilisation on robots. To address this gap, this paper introduces ROS-Causal, a ROS-based framework for onboard data collection and causal discovery in human-robot spatial interactions. An ad-hoc simulator, integrated with ROS, illustrates the approach's effectiveness, showcasing the robot's onboard generation of causal models during data collection. ROS-Causal is available on GitHub: https://github.com/lcastri/roscausal.git.

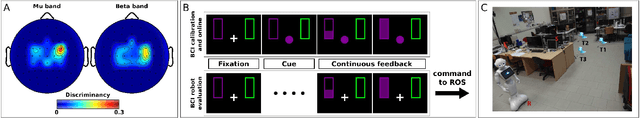

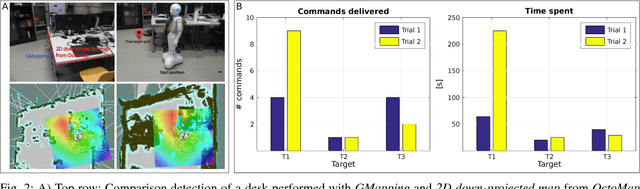

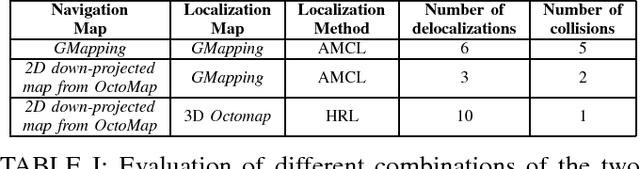

Brain-Computer Interface meets ROS: A robotic approach to mentally drive telepresence robots

Apr 04, 2019

Abstract: This paper presents and evaluates a novel approach to integrating a non-invasive Brain-Computer Interface (BCI) with the Robot Operating System (ROS) to mentally drive a telepresence robot. Controlling a mobile device by using human brain signals might improve the quality of life of people suffering from severe physical disabilities or elderly people who can no longer move. Thus, the BCI user is able to actively interact with relatives and friends located in different rooms thanks to a video streaming connection to the robot. To facilitate the control of the robot via BCI, we explore new ROS-based algorithms for navigation and obstacle avoidance, making the system safer and more reliable. In this regard, the robot can exploit two maps of the environment, one for localization and one for navigation, and both can also be used by the BCI user to watch the position of the robot while it is moving. As demonstrated by the experimental results, the user's cognitive workload is reduced, decreasing the number of commands necessary to complete the task and helping the user to sustain attention for longer periods of time.

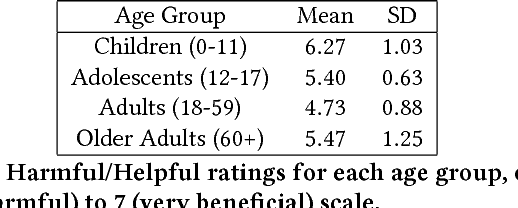

Proceedings of the Workshop on Social Robots in Therapy: Focusing on Autonomy and Ethical Challenges

Dec 18, 2018

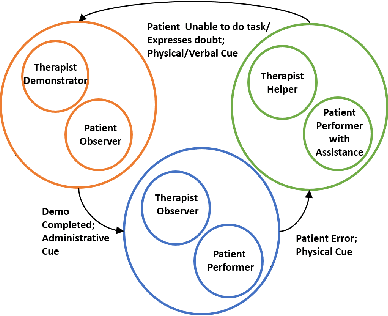

Abstract: Robot-Assisted Therapy (RAT) has successfully been used in HRI research by including social robots in health-care interventions by virtue of their ability to engage human users in both social and emotional dimensions. Research projects on this topic exist all over the globe, in the USA, Europe, and Asia. All of these projects have the overall ambitious goal of increasing the well-being of a vulnerable population. Typical work in RAT is performed using remote-controlled robots, a technique called Wizard-of-Oz (WoZ). The robot is usually controlled, unbeknownst to the patient, by a human operator. However, WoZ has been demonstrated not to be a sustainable technique in the long term. Providing the robots with autonomy (while remaining under the supervision of the therapist) has the potential to lighten the therapist's burden, not only in the therapeutic session itself but also in longer-term diagnostic tasks. Therefore, there is a need to explore several degrees of autonomy in social robots used in therapy. Increasing the autonomy of robots might also bring about a new set of challenges. In particular, there will be a need to answer new ethical questions regarding the use of robots with a vulnerable population, as well as a need to ensure ethically compliant robot behaviours. Therefore, in this workshop we want to gather findings and explore which degree of autonomy might help to improve health-care interventions and how we can overcome the ethical challenges inherent to it.
