Giuseppe Coccia

Expressive Machine Dubbing Through Phrase-level Cross-lingual Prosody Transfer

Jun 21, 2023

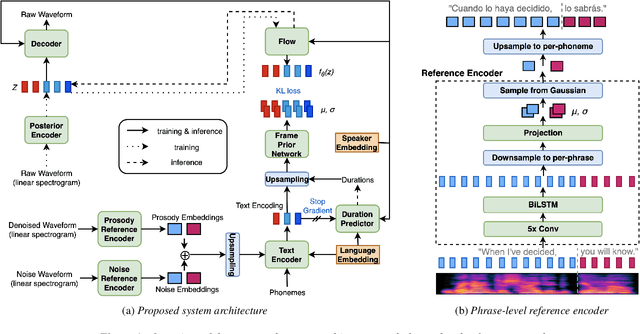

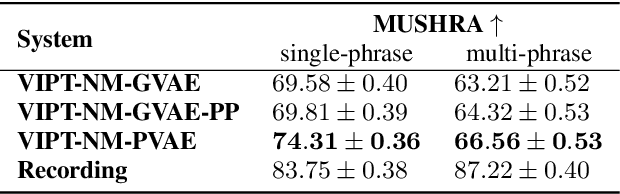

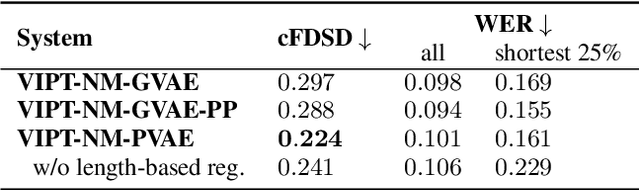

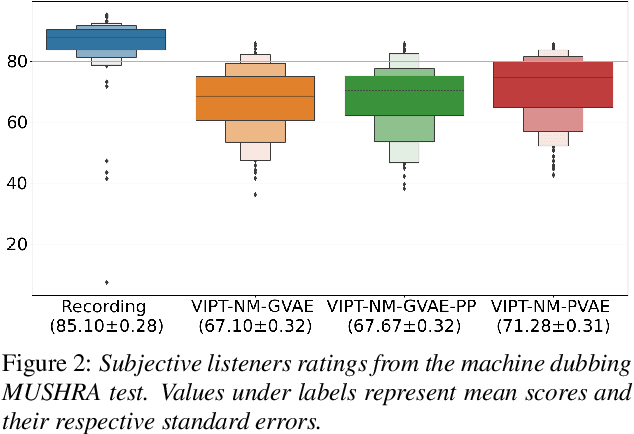

Abstract: Speech generation for machine dubbing adds complexity to conventional Text-To-Speech solutions as the generated output is required to match the expressiveness, emotion and speaking rate of the source content. Capturing and transferring details and variations in prosody is a challenge. We introduce phrase-level cross-lingual prosody transfer for expressive multi-lingual machine dubbing. The proposed phrase-level prosody transfer delivers a significant 6.2% MUSHRA score increase over a baseline with utterance-level global prosody transfer, thereby closing the gap between the baseline and expressive human dubbing by 23.2%, while preserving intelligibility of the synthesised speech.

Empirical Evaluation of Deep Learning Model Compression Techniques on the WaveNet Vocoder

Nov 20, 2020

Abstract: WaveNet is a state-of-the-art text-to-speech vocoder that remains challenging to deploy due to its autoregressive loop. In this work we focus on ways to accelerate the original WaveNet architecture directly, as opposed to modifying the architecture, such that the model can be deployed as part of a scalable text-to-speech system. We survey a wide variety of model compression techniques that are amenable to deployment on a range of hardware platforms. In particular, we compare different model sparsity methods and levels, and seven widely used precisions as targets for quantization; and are able to achieve models with a compression ratio of up to 13.84 without loss in audio fidelity compared to a dense, single-precision floating-point baseline. All techniques are implemented using existing open source deep learning frameworks and libraries to encourage their wider adoption.
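
The abstract does not say how the compression ratio is computed. As a rough illustration only, the sketch below shows one idealised way sparsity and quantization could combine into a ratio against a dense 32-bit baseline. The function name `compression_ratio` and the formula are assumptions made for this example, not the paper's method, and the formula ignores the storage needed for sparse indices.

```python
def compression_ratio(sparsity: float, bits: int, baseline_bits: int = 32) -> float:
    """Idealised size ratio of a dense baseline to a pruned, quantized model.

    Assumption (not from the paper): only non-zero weights are stored,
    each at `bits` bits, with no overhead for sparse index metadata.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    if bits <= 0 or baseline_bits <= 0:
        raise ValueError("bit widths must be positive")
    # Fraction of weights kept after pruning.
    density = 1.0 - sparsity
    return baseline_bits / (bits * density)


# Hypothetical settings chosen for illustration, not results from the paper.
for sparsity, bits in [(0.0, 32), (0.5, 8), (0.75, 16)]:
    print(f"sparsity={sparsity:.2f}, bits={bits}: ratio={compression_ratio(sparsity, bits):.2f}")
```

Under these assumptions, pruning and lower precision multiply together, which is why combining the two can reach ratios well beyond what either achieves alone.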
