Ghazal Alinezhad Noghre

MoFM: A Large-Scale Human Motion Foundation Model

Feb 08, 2025 Abstract: Foundation Models (FMs) have increasingly drawn the attention of researchers due to their scalability and generalization across diverse tasks. Inspired by the success of FMs and the principles that have driven advancements in Large Language Models (LLMs), we introduce MoFM as a novel Motion Foundation Model. MoFM is designed for the semantic understanding of complex human motions in both time and space. To facilitate large-scale training, MotionBook, a comprehensive human motion dictionary of discretized motions, is designed and employed. MotionBook utilizes Thermal Cubes to capture spatio-temporal motion heatmaps, applying principles from discrete variational models to encode human movements into discrete units for a more efficient and scalable representation. MoFM, trained on a large corpus of motion data, provides a foundational backbone adaptable to diverse downstream tasks, supporting paradigms such as one-shot, unsupervised, and supervised tasks. This versatility makes MoFM well-suited for a wide range of motion-based applications.

Exploring Pose-Based Anomaly Detection for Retail Security: A Real-World Shoplifting Dataset and Benchmark

Jan 11, 2025Abstract:Shoplifting poses a significant challenge for retailers, resulting in billions of dollars in annual losses. Traditional security measures often fall short, highlighting the need for intelligent solutions capable of detecting shoplifting behaviors in real time. This paper frames shoplifting detection as an anomaly detection problem, focusing on the identification of deviations from typical shopping patterns. We introduce PoseLift, a privacy-preserving dataset specifically designed for shoplifting detection, addressing challenges such as data scarcity, privacy concerns, and model biases. PoseLift is built in collaboration with a retail store and contains anonymized human pose data from real-world scenarios. By preserving essential behavioral information while anonymizing identities, PoseLift balances privacy and utility. We benchmark state-of-the-art pose-based anomaly detection models on this dataset, evaluating performance using a comprehensive set of metrics. Our results demonstrate that pose-based approaches achieve high detection accuracy while effectively addressing privacy and bias concerns inherent in traditional methods. As one of the first datasets capturing real-world shoplifting behaviors, PoseLift offers researchers a valuable tool to advance computer vision ethically and will be publicly available to foster innovation and collaboration. The dataset is available at https://github.com/TeCSAR-UNCC/PoseLift.

PoseWatch: A Transformer-based Architecture for Human-centric Video Anomaly Detection Using Spatio-temporal Pose Tokenization

Aug 27, 2024Abstract:Video Anomaly Detection (VAD) presents a significant challenge in computer vision, particularly due to the unpredictable and infrequent nature of anomalous events, coupled with the diverse and dynamic environments in which they occur. Human-centric VAD, a specialized area within this domain, faces additional complexities, including variations in human behavior, potential biases in data, and substantial privacy concerns related to human subjects. These issues complicate the development of models that are both robust and generalizable. To address these challenges, recent advancements have focused on pose-based VAD, which leverages human pose as a high-level feature to mitigate privacy concerns, reduce appearance biases, and minimize background interference. In this paper, we introduce PoseWatch, a novel transformer-based architecture designed specifically for human-centric pose-based VAD. PoseWatch features an innovative Spatio-Temporal Pose and Relative Pose (ST-PRP) tokenization method that enhances the representation of human motion over time, which is also beneficial for broader human behavior analysis tasks. The architecture's core, a Unified Encoder Twin Decoders (UETD) transformer, significantly improves the detection of anomalous behaviors in video data. Extensive evaluations across multiple benchmark datasets demonstrate that PoseWatch consistently outperforms existing methods, establishing a new state-of-the-art in pose-based VAD. This work not only demonstrates the efficacy of PoseWatch but also highlights the potential of integrating Natural Language Processing techniques with computer vision to advance human behavior analysis.

PHEVA: A Privacy-preserving Human-centric Video Anomaly Detection Dataset

Aug 26, 2024 Abstract: This study presents PHEVA, a Privacy-preserving Human-centric Ethical Video Anomaly detection dataset. By removing pixel information and providing only de-identified human annotations, PHEVA safeguards personally identifiable information. The dataset includes seven indoor/outdoor scenes, featuring one novel, context-specific camera, and offers over 5x the pose-annotated frames compared to the largest previous dataset. This study benchmarks state-of-the-art methods on PHEVA using a comprehensive set of metrics, including the 10% Error Rate (10ER), a metric used for anomaly detection for the first time, providing insights relevant to real-world deployment. As the first of its kind, PHEVA bridges the gap between conventional training and real-world deployment by introducing continual learning benchmarks, with models outperforming traditional methods in 82.14% of cases. The dataset is publicly available at https://github.com/TeCSAR-UNCC/PHEVA.git.

Evaluating the Effectiveness of Video Anomaly Detection in the Wild: Online Learning and Inference for Real-world Deployment

Apr 29, 2024Abstract:Video Anomaly Detection (VAD) identifies unusual activities in video streams, a key technology with broad applications ranging from surveillance to healthcare. Tackling VAD in real-life settings poses significant challenges due to the dynamic nature of human actions, environmental variations, and domain shifts. Many research initiatives neglect these complexities, often concentrating on traditional testing methods that fail to account for performance on unseen datasets, creating a gap between theoretical models and their real-world utility. Online learning is a potential strategy to mitigate this issue by allowing models to adapt to new information continuously. This paper assesses how well current VAD algorithms can adjust to real-life conditions through an online learning framework, particularly those based on pose analysis, for their efficiency and privacy advantages. Our proposed framework enables continuous model updates with streaming data from novel environments, thus mirroring actual world challenges and evaluating the models' ability to adapt in real-time while maintaining accuracy. We investigate three state-of-the-art models in this setting, focusing on their adaptability across different domains. Our findings indicate that, even under the most challenging conditions, our online learning approach allows a model to preserve 89.39% of its original effectiveness compared to its offline-trained counterpart in a specific target domain.

Integrating AI into CCTV Systems: A Comprehensive Evaluation of Smart Video Surveillance in Community Space

Dec 04, 2023

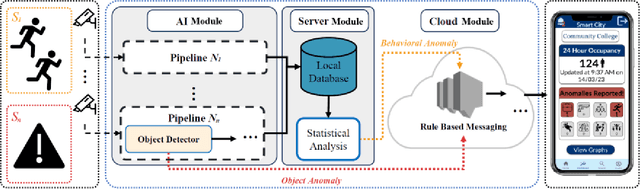

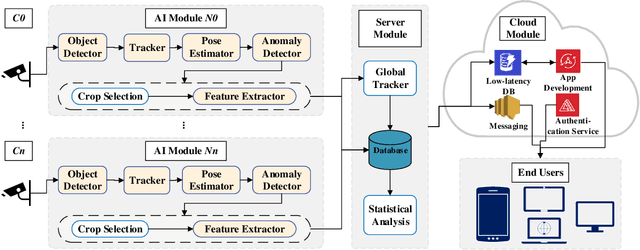

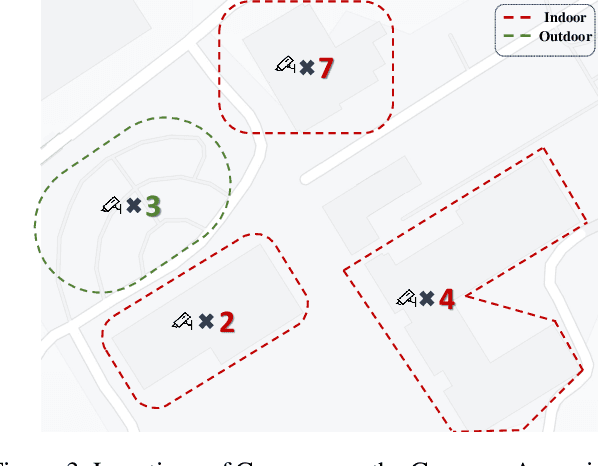

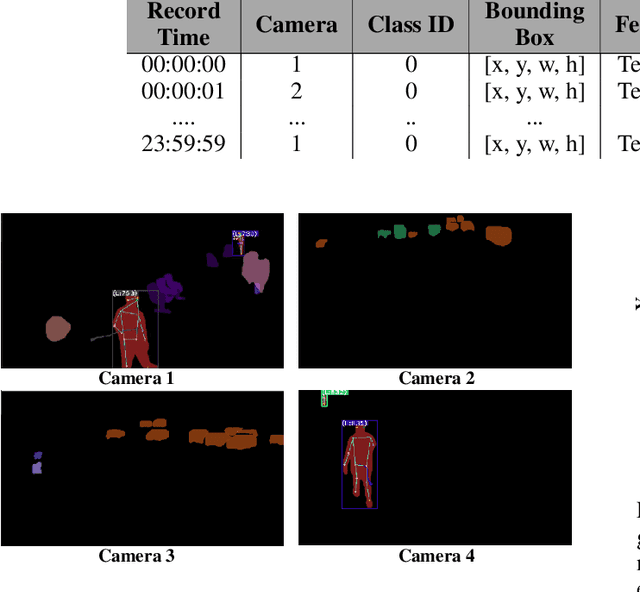

Abstract:This article presents an AI-enabled Smart Video Surveillance (SVS) designed to enhance safety in community spaces such as educational and recreational areas, and small businesses. The proposed system innovatively integrates with existing CCTV and wired camera networks, simplifying its adoption across various community cases to leverage recent AI advancements. Our SVS system, focusing on privacy, uses metadata instead of pixel data for activity recognition, aligning with ethical standards. It features cloud-based infrastructure and a mobile app for real-time, privacy-conscious alerts in communities. This article notably pioneers a comprehensive real-world evaluation of the SVS system, covering AI-driven visual processing, statistical analysis, database management, cloud communication, and user notifications. It's also the first to assess an end-to-end anomaly detection system's performance, vital for identifying potential public safety incidents. For our evaluation, we implemented the system in a community college, serving as an ideal model to exemplify the proposed system's capabilities. Our findings in this setting demonstrate the system's robustness, with throughput, latency, and scalability effectively managing 16 CCTV cameras. The system maintained a consistent 16.5 frames per second (FPS) over a 21-hour operation. The average end-to-end latency for detecting behavioral anomalies and alerting users was 26.76 seconds.

VegaEdge: Edge AI Confluence Anomaly Detection for Real-Time Highway IoT-Applications

Nov 14, 2023Abstract:Vehicle anomaly detection plays a vital role in highway safety applications such as accident prevention, rapid response, traffic flow optimization, and work zone safety. With the surge of the Internet of Things (IoT) in recent years, there has arisen a pressing demand for Artificial Intelligence (AI) based anomaly detection methods designed to meet the requirements of IoT devices. Catering to this futuristic vision, we introduce a lightweight approach to vehicle anomaly detection by utilizing the power of trajectory prediction. Our proposed design identifies vehicles deviating from expected paths, indicating highway risks from different camera-viewing angles from real-world highway datasets. On top of that, we present VegaEdge - a sophisticated AI confluence designed for real-time security and surveillance applications in modern highway settings through edge-centric IoT-embedded platforms equipped with our anomaly detection approach. Extensive testing across multiple platforms and traffic scenarios showcases the versatility and effectiveness of VegaEdge. This work also presents the Carolinas Anomaly Dataset (CAD), to bridge the existing gap in datasets tailored for highway anomalies. In real-world scenarios, our anomaly detection approach achieves an AUC-ROC of 0.94, and our proposed VegaEdge design, on an embedded IoT platform, processes 738 trajectories per second in a typical highway setting. The dataset is available at https://github.com/TeCSAR-UNCC/Carolinas_Dataset#chd-anomaly-test-set .
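The idea of flagging vehicles that deviate from their expected paths can be illustrated with a minimal sketch. This is not the VegaEdge implementation: the function names, the mean-distance score, and the threshold value are all hypothetical choices made for illustration, and the predicted path is assumed to come from some trajectory predictor that is not shown here.

```python
import math

def deviation_score(predicted, observed):
    """Mean Euclidean distance between predicted and observed (x, y) positions.

    Hypothetical scoring rule for illustration; the abstract does not
    specify how deviation is measured.
    """
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("paths must be non-empty and the same length")
    total = sum(math.dist(p, o) for p, o in zip(predicted, observed))
    return total / len(predicted)

def is_anomalous(predicted, observed, threshold=2.0):
    """Flag a trajectory whose deviation score exceeds an assumed threshold."""
    return deviation_score(predicted, observed) > threshold

# A vehicle predicted to continue straight along the x-axis.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
on_path = [(0.0, 0.1), (1.0, 0.1), (2.0, 0.1)]    # stays close to the prediction
swerving = [(0.0, 0.0), (1.0, 3.0), (2.0, 6.0)]   # drifts away from it

print(is_anomalous(predicted, on_path))
print(is_anomalous(predicted, swerving))
```

In practice the threshold would need to be tuned per camera view, since pixel-space distances depend on the viewing angle.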

VT-Former: A Transformer-based Vehicle Trajectory Prediction Approach For Intelligent Highway Transportation Systems

Nov 11, 2023Abstract:Enhancing roadway safety and traffic management has become an essential focus area for a broad range of modern cyber-physical systems and intelligent transportation systems. Vehicle Trajectory Prediction is a pivotal element within numerous applications for highway and road safety. These applications encompass a wide range of use cases, spanning from traffic management and accident prevention to enhancing work-zone safety and optimizing energy conservation. The ability to implement intelligent management in this context has been greatly advanced by the developments in the field of Artificial Intelligence (AI), alongside the increasing deployment of surveillance cameras across road networks. In this paper, we introduce a novel transformer-based approach for vehicle trajectory prediction for highway safety and surveillance, denoted as VT-Former. In addition to utilizing transformers to capture long-range temporal patterns, a new Graph Attentive Tokenization (GAT) module has been proposed to capture intricate social interactions among vehicles. Combining these two core components culminates in a precise approach for vehicle trajectory prediction. Our study on three benchmark datasets with three different viewpoints demonstrates the State-of-The-Art (SoTA) performance of VT-Former in vehicle trajectory prediction and its generalizability and robustness. We also evaluate VT-Former's efficiency on embedded boards and explore its potential for vehicle anomaly detection as a sample application, showcasing its broad applicability.

Real-World Community-in-the-Loop Smart Video Surveillance -- A Case Study at a Community College

Mar 22, 2023 Abstract: Smart video surveillance systems have become important recently for ensuring public safety and security, especially in smart cities. However, applying real-time artificial intelligence technologies combined with low-latency notification and alarming has made deploying these systems quite challenging. This paper presents a case study for designing and deploying smart video surveillance systems based on a real-world testbed at a community college. We primarily focus on a smart camera-based system that can identify suspicious/abnormal activities and alert the stakeholders and residents immediately. The paper highlights and addresses different algorithmic and system design challenges to guarantee real-time high-accuracy video analytics processing in the testbed. It also presents an example of cloud system infrastructure and a mobile application for real-time notification to keep students, faculty/staff, and responsible security personnel in the loop. At the same time, it covers the design decisions made to maintain communities' privacy and ethical requirements as well as hardware configuration and setups. We evaluate the system's performance using throughput and end-to-end latency. The experiment results show that, on average, our system's end-to-end latency to notify the end users in case of detecting suspicious objects is 5.3, 5.78, and 11.11 seconds when running 1, 4, and 8 cameras, respectively. On the other hand, in case of detecting anomalous behaviors, the system could notify the end users with 7.3, 7.63, and 20.78 seconds average latency. These results demonstrate that the system effectively detects abnormal behaviors and suspicious objects and notifies the end users within a reasonable period. The system can run eight cameras simultaneously at a rate of 32.41 Frames Per Second (FPS).

A POV-based Highway Vehicle Trajectory Dataset and Prediction Architecture

Mar 10, 2023 Abstract: Vehicle trajectory datasets that provide multiple points of view (POVs) can be valuable for various traffic safety and management applications. Despite the abundance of trajectory datasets, few offer a comprehensive and diverse range of driving scenes, capturing multiple viewpoints of various highway layouts, merging lanes, and configurations. This limits their ability to capture the nuanced interactions between drivers, vehicles, and the roadway infrastructure. We introduce the Carolinas Highway Dataset (CHD), a vehicle trajectory, detection, and tracking dataset (available at https://github.com/TeCSAR-UNCC/Carolinas_Dataset). CHD is a collection of 1.6 million frames captured in highway-based videos from eye-level and high-angle POVs at eight locations across the Carolinas with 338,000 vehicle trajectories. The locations, timing of recordings, and camera angles were carefully selected to capture various road geometries, traffic patterns, lighting conditions, and driving behaviors. We also present PishguVe (code available at https://github.com/TeCSAR-UNCC/PishguVe), a novel vehicle trajectory prediction architecture that uses attention-based graph isomorphism and convolutional neural networks. The results demonstrate that PishguVe outperforms existing algorithms to become the new state-of-the-art (SotA) in bird's-eye, eye-level, and high-angle POV trajectory datasets. Specifically, it achieves a 12.50% and 10.20% improvement in ADE and FDE, respectively, over the current SotA on the NGSIM dataset. Compared to best-performing models on CHD, PishguVe achieves lower ADE and FDE on eye-level data by 14.58% and 27.38%, respectively, and improves ADE and FDE on high-angle data by 8.3% and 6.9%, respectively.
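For readers unfamiliar with the metrics reported above, ADE (Average Displacement Error) is the mean Euclidean distance between predicted and ground-truth positions over all predicted time steps, and FDE (Final Displacement Error) is that distance at the last step only. The sketch below computes both for a single trajectory using the standard definitions. The function names and the toy coordinates are illustrative assumptions and are not taken from the PishguVe code base.

```python
import math

def ade_fde(pred, truth):
    """Return (ADE, FDE) for one trajectory given equal-length lists of (x, y) points."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and the same length")
    # Per-step Euclidean distance between prediction and ground truth.
    dists = [math.dist(p, t) for p, t in zip(pred, truth)]
    return sum(dists) / len(dists), dists[-1]

def relative_improvement(baseline, new):
    """Percentage by which an error metric drops relative to a baseline."""
    return 100.0 * (baseline - new) / baseline

# Toy example (made-up coordinates): the prediction drifts away over time.
pred = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
truth = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
ade, fde = ade_fde(pred, truth)
print(ade, fde)  # 1.0 2.0
```

A figure such as "12.50% improvement in ADE" corresponds to `relative_improvement(baseline_ade, new_ade)`; lower ADE and FDE are better.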
