George Bebis

Two-Step Data Augmentation for Masked Face Detection and Recognition: Turning Fake Masks to Real

Dec 13, 2025

Abstract:Data scarcity and distribution shift pose major challenges for masked face detection and recognition. We propose a two-step generative data augmentation framework that combines rule-based mask warping with unpaired image-to-image translation using GANs, enabling the generation of realistic masked-face samples beyond purely synthetic transformations. Compared to rule-based warping alone, the proposed approach yields consistent qualitative improvements and complements existing GAN-based masked face generation methods such as IAMGAN. We introduce a non-mask preservation loss and stochastic noise injection to stabilize training and enhance sample diversity. Experimental observations highlight the effectiveness of the proposed components and suggest directions for future improvements in data-centric augmentation for face recognition tasks.

* 9 pages, 9 figures. Conference version

RaceGAN: A Framework for Preserving Individuality while Converting Racial Information for Image-to-Image Translation

Sep 18, 2025

Abstract:Generative adversarial networks (GANs) have demonstrated significant progress in unpaired image-to-image translation in recent years for several applications. CycleGAN was the first to lead the way, although it was restricted to a pair of domains. StarGAN overcame this constraint by tackling image-to-image translation across various domains, although it was not able to map in-depth low-level style changes for these domains. Style mapping via reference-guided image synthesis has been made possible by the innovations of StarGANv2 and StyleGAN. However, these models do not maintain individuality and need an extra reference image in addition to the input. Our study aims to translate racial traits by means of multi-domain image-to-image translation. We present RaceGAN, a novel framework capable of mapping style codes over several domains during racial attribute translation while maintaining individuality and high-level semantics without relying on a reference image. RaceGAN outperforms other models in translating racial features (i.e., Asian, White, and Black) when tested on the Chicago Face Dataset. We also give quantitative findings utilizing InceptionResNetv2-based classification to demonstrate the effectiveness of our racial translation. Moreover, we investigate how well the model partitions the latent space into distinct clusters of faces for each ethnic group.

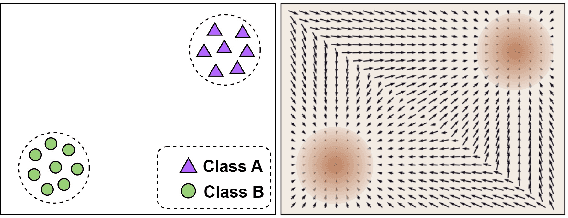

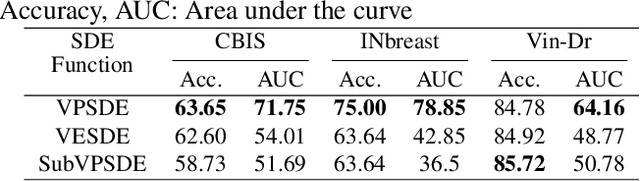

Can Score-Based Generative Modeling Effectively Handle Medical Image Classification?

Feb 24, 2025

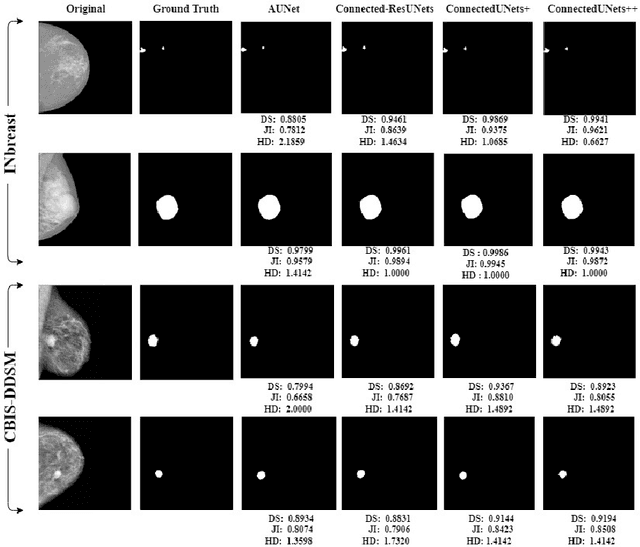

Abstract:The remarkable success of deep learning in recent years has prompted applications in medical image classification and diagnosis tasks. While classification models have demonstrated robustness in classifying simpler datasets like MNIST or natural images such as ImageNet, this resilience is not consistently observed in complex medical image datasets where data is more scarce and lacks diversity. Moreover, previous findings on natural image datasets have indicated a potential trade-off between data likelihood and classification accuracy. In this study, we explore the use of score-based generative models as classifiers for medical images, specifically mammographic images. Our findings suggest that our proposed generative classifier model not only achieves superior classification results on CBIS-DDSM, INbreast and Vin-Dr Mammo datasets, but also introduces a novel approach to image classification in a broader context. Our code is publicly available at https://github.com/sushmitasarker/sgc_for_medical_image_classification

A comprehensive overview of deep learning techniques for 3D point cloud classification and semantic segmentation

May 20, 2024

Abstract:Point cloud analysis has a wide range of applications in many areas such as computer vision, robotic manipulation, and autonomous driving. While deep learning has achieved remarkable success on image-based tasks, there are many unique challenges faced by deep neural networks in processing massive, unordered, irregular and noisy 3D points. To stimulate future research, this paper analyzes recent progress in deep learning methods employed for point cloud processing and presents challenges and potential directions to advance this field. It serves as a comprehensive review of two major tasks in 3D point cloud processing, namely 3D shape classification and semantic segmentation.

* Published in Springer Nature (Machine Vision and Applications)

MV-Swin-T: Mammogram Classification with Multi-view Swin Transformer

Feb 26, 2024

Abstract:Traditional deep learning approaches for breast cancer classification have predominantly concentrated on single-view analysis. In clinical practice, however, radiologists concurrently examine all views within a mammography exam, leveraging the inherent correlations in these views to effectively detect tumors. Acknowledging the significance of multi-view analysis, some studies have introduced methods that independently process mammogram views, either through distinct convolutional branches or simple fusion strategies, inadvertently leading to a loss of crucial inter-view correlations. In this paper, we propose an innovative multi-view network exclusively based on transformers to address challenges in mammographic image classification. Our approach introduces a novel shifted window-based dynamic attention block, facilitating the effective integration of multi-view information and promoting the coherent transfer of this information between views at the spatial feature map level. Furthermore, we conduct a comprehensive comparative analysis of the performance and effectiveness of transformer-based models under diverse settings, employing the CBIS-DDSM and Vin-Dr Mammo datasets. Our code is publicly available at https://github.com/prithuls/MV-Swin-T

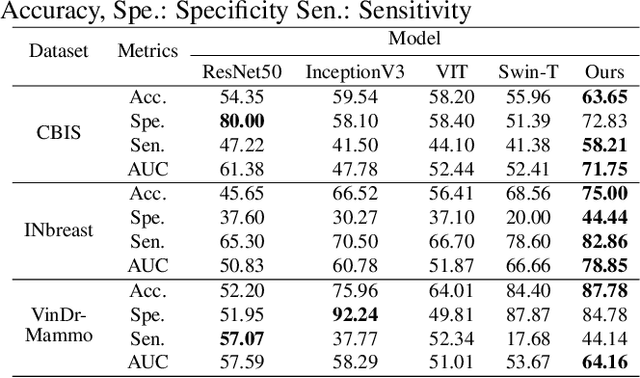

Identification of Abnormality in Maize Plants From UAV Images Using Deep Learning Approaches

Oct 20, 2023

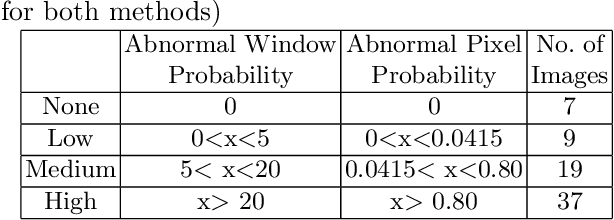

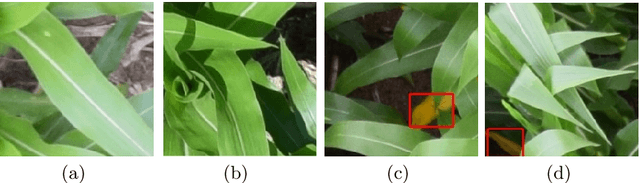

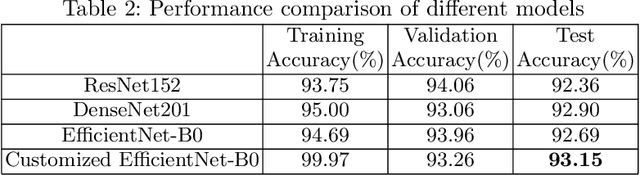

Abstract:Early identification of abnormalities in plants is an important task for ensuring proper growth and achieving high yields from crops. Precision agriculture can significantly benefit from modern computer vision tools to make farming strategies addressing these issues efficient and effective. As farming lands are typically quite large, farmers have to manually check vast areas to determine the status of the plants and apply proper treatments. In this work, we consider the problem of automatically identifying abnormal regions in maize plants from images captured by a UAV. Using deep learning techniques, we have developed a methodology which can detect different levels of abnormality (i.e., low, medium, high or no abnormality) in maize plants independently of their growth stage. The primary goal is to identify anomalies at the earliest possible stage in order to maximize the effectiveness of potential treatments. At the same time, the proposed system can provide valuable information to human annotators for ground truth data collection by helping them to focus their attention on a much smaller set of images. We have experimented with two different but complementary approaches, the first considering abnormality detection as a classification problem and the second considering it as a regression problem. Both approaches can be generalized to different types of abnormalities and do not assume that the abnormality occurs at an early plant growth stage, when it might be easier to detect because the plants are smaller and easier to separate. As a case study, we have considered a publicly available data set which exhibits mostly nitrogen deficiency in maize plants of various growth stages. We report promising preliminary results, with an 88.89% detection accuracy for low abnormality and 100% detection accuracy for no abnormality.

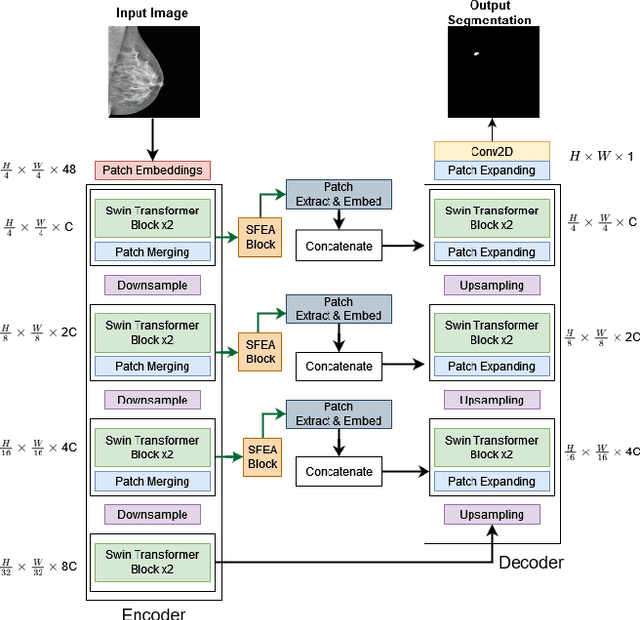

SWIN-SFTNet : Spatial Feature Expansion and Aggregation using Swin Transformer For Whole Breast micro-mass segmentation

Nov 16, 2022

Abstract:Incorporating various mass shapes and sizes in training deep learning architectures has made breast mass segmentation challenging. Moreover, manual segmentation of masses of irregular shapes is time-consuming and error-prone. Though deep neural networks have shown outstanding performance in breast mass segmentation, they fail in segmenting micro-masses. In this paper, we propose a novel U-net-shaped transformer-based architecture, called Swin-SFTNet, that outperforms state-of-the-art architectures in breast mammography-based micro-mass segmentation. First, to capture the global context, we designed a novel Spatial Feature Expansion and Aggregation Block (SFEA) that transforms sequential linear patches into a structured spatial feature. Next, we combine it with the local linear features extracted by the Swin transformer block to improve overall accuracy. We also incorporate a novel embedding loss that calculates similarities between linear feature embeddings of the encoder and decoder blocks. With this approach, we improve the segmentation Dice score over the state of the art by 3.10% on CBIS-DDSM, 3.81% on InBreast, and 3.13% for the CBIS pre-trained model on the InBreast test data set.
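For readers unfamiliar with the metric behind the reported gains, the following is a minimal sketch of the standard Dice coefficient for binary segmentation masks. It is a generic illustration, not the evaluation code of the paper; the function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions made here.

```python
import numpy as np


def dice_score(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks.

    `dice_score` is a hypothetical helper name used for illustration only.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumption: two empty masks are treated as a perfect match.
        return 1.0
    return 2.0 * intersection / total


# Toy 2x2 masks: one overlapping pixel, three foreground pixels in total.
predicted = [[1, 1], [0, 0]]
ground_truth = [[1, 0], [0, 0]]
score = dice_score(predicted, ground_truth)  # 2 * 1 / 3
```

A micro-mass occupies very few pixels, so a single misclassified pixel shifts this ratio far more than it would for a large mass, which is one reason small structures are hard to score well on.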

ConnectedUNets++: Mass Segmentation from Whole Mammographic Images

Nov 04, 2022

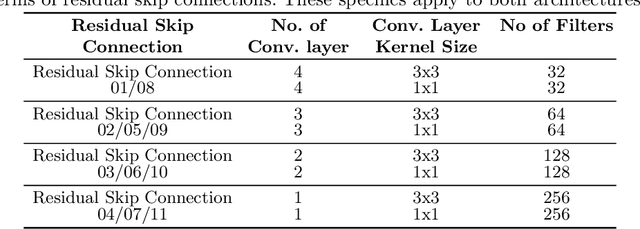

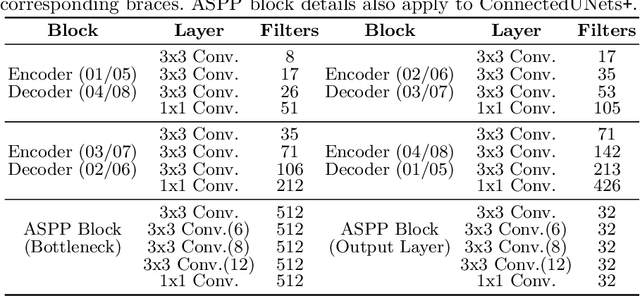

Abstract:Deep learning has made a breakthrough in medical image segmentation in recent years due to its ability to extract high-level features without the need for prior knowledge. In this context, U-Net is one of the most advanced medical image segmentation models, with promising results in mammography. Despite its excellent overall performance in segmenting multimodal medical images, the traditional U-Net structure appears to be inadequate in various ways. There are certain U-Net design modifications, such as MultiResUNet, Connected-UNets, and AU-Net, that have improved overall performance in areas where the conventional U-Net architecture appears to be deficient. Following the success of UNet and its variants, we have presented two enhanced versions of the Connected-UNets architecture: ConnectedUNets+ and ConnectedUNets++. In ConnectedUNets+, we have replaced the simple skip connections of Connected-UNets architecture with residual skip connections, while in ConnectedUNets++, we have modified the encoder-decoder structure along with employing residual skip connections. We have evaluated our proposed architectures on two publicly available datasets, the Curated Breast Imaging Subset of Digital Database for Screening Mammography (CBIS-DDSM) and INbreast.

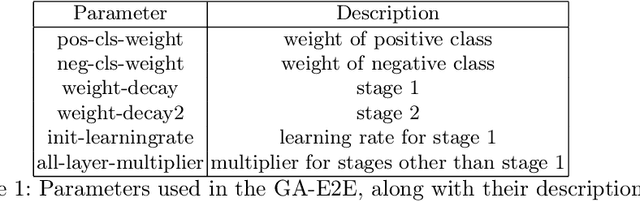

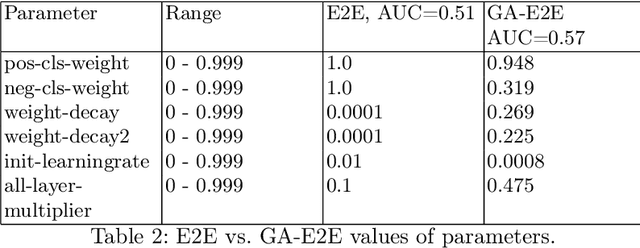

Deep Learning Hyperparameter Optimization for Breast Mass Detection in Mammograms

Jul 22, 2022

Abstract:Accurate breast cancer diagnosis through mammography has the potential to save millions of lives around the world. Deep learning (DL) methods have been shown to be very effective for mass detection in mammograms. Additional improvements of current DL models will further improve the effectiveness of these methods. A critical issue in this context is how to pick the right hyperparameters for DL models. In this paper, we present GA-E2E, a new approach for tuning the hyperparameters of DL models for breast cancer detection using Genetic Algorithms (GAs). Our findings reveal that differences in parameter values can considerably alter the area under the curve (AUC), which is used to determine a classifier's performance.

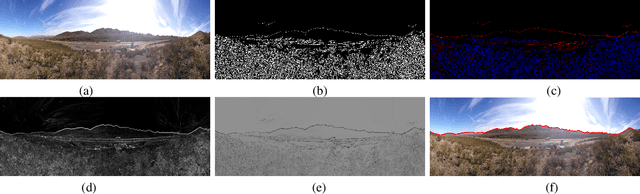

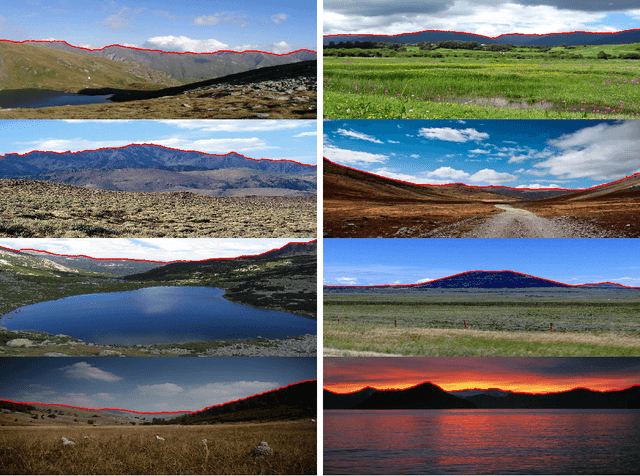

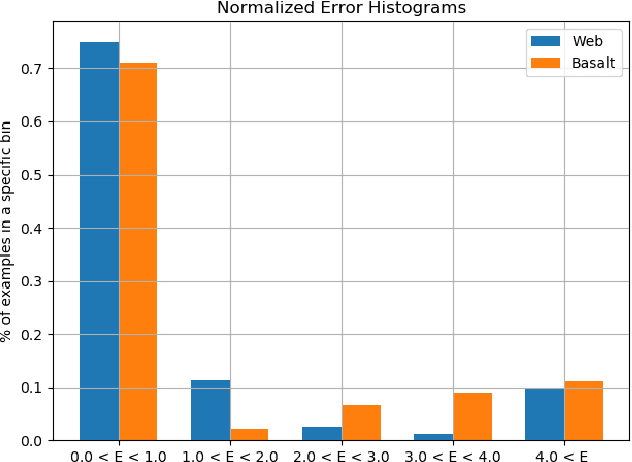

Resource Efficient Mountainous Skyline Extraction using Shallow Learning

Jul 23, 2021

Abstract:Skyline plays a pivotal role in mountainous visual geo-localization and localization/navigation of planetary rovers/UAVs and virtual/augmented reality applications. We present a novel mountainous skyline detection approach where we adapt a shallow learning approach to learn a set of filters to discriminate between edges belonging to the sky-mountain boundary and others coming from different regions. Unlike earlier approaches, which rely either on the extraction of explicit feature descriptors and their classification or on fine-tuning general scene parsing deep networks for sky segmentation, our approach learns linear filters based on local structure analysis. At test time, for every candidate edge pixel, a single filter is chosen from the set of learned filters based on the pixel's structure tensor, and then applied to the patch around it. We then employ dynamic programming to solve the shortest path problem for the resultant multistage graph to get the sky-mountain boundary. The proposed approach is computationally faster than earlier methods while providing comparable performance and is more suitable for resource-constrained platforms, e.g., mobile devices, planetary rovers and UAVs. We compare our proposed approach against earlier skyline detection methods using four different data sets. Our code is available at https://github.com/TouqeerAhmad/skyline_detection.
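The dynamic programming step mentioned in the abstract can be illustrated with a minimal sketch. It assumes each image column is one stage of the graph, each row is a node, and a per-pixel cost map is already available (low cost where a boundary is likely). The function name `min_cost_skyline`, the `max_jump` smoothness limit, and the cost map itself are hypothetical; the abstract does not specify how the paper builds its graph or edge weights.

```python
import numpy as np


def min_cost_skyline(cost, max_jump=1):
    """Return one row index per column forming the cheapest left-to-right path.

    cost:     2D array (rows x cols); lower values mean "more boundary-like".
    max_jump: assumed limit on the row change between adjacent columns.
    """
    cost = np.asarray(cost, dtype=float)
    rows, cols = cost.shape
    acc = np.empty_like(cost)                   # cheapest cost to reach each node
    back = np.zeros((rows, cols), dtype=int)    # best predecessor row
    acc[:, 0] = cost[:, 0]

    for c in range(1, cols):
        for r in range(rows):
            lo = max(0, r - max_jump)
            hi = min(rows, r + max_jump + 1)
            prev = lo + int(np.argmin(acc[lo:hi, c - 1]))
            back[r, c] = prev
            acc[r, c] = cost[r, c] + acc[prev, c - 1]

    # Backtrack from the cheapest node in the last column.
    path = np.empty(cols, dtype=int)
    path[-1] = int(np.argmin(acc[:, -1]))
    for c in range(cols - 1, 0, -1):
        path[c - 1] = back[path[c], c]
    return path


# Toy 3x4 cost map whose cheapest cells trace rows 1, 1, 0, 1.
toy_cost = [[9, 9, 1, 9],
            [1, 1, 9, 1],
            [9, 9, 9, 9]]
skyline_rows = min_cost_skyline(toy_cost)
```

Because every column is visited once and each node inspects only a small window of predecessors, the cost grows linearly with the number of pixels, which fits the abstract's emphasis on resource-constrained platforms.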
