Fuxu Liu

Identifying Melanoma Images using EfficientNet Ensemble: Winning Solution to the SIIM-ISIC Melanoma Classification Challenge

Oct 11, 2020

Abstract: We present our winning solution to the SIIM-ISIC Melanoma Classification Challenge. It is an ensemble of convolutional neural network (CNN) models with different backbones and input sizes; most are image-only models, while a few also use image-level and patient-level metadata. The keys to the win are (1) a stable validation scheme, (2) a good choice of model target, (3) a carefully tuned pipeline, and (4) ensembling of very diverse models. The winning submission scored 0.9600 AUC on cross-validation and 0.9490 AUC on the private leaderboard.
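As a rough illustration of the ensembling and AUC scoring mentioned in this abstract, the sketch below averages the per-image scores of several models and computes AUC by pairwise comparison. The abstract does not say how the models are combined, so plain mean averaging is an assumption, and the function names `ensemble_mean` and `roc_auc` are hypothetical.

```python
from typing import List, Sequence


def ensemble_mean(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Average per-image scores across models (assumed combination rule)."""
    n_models = len(predictions)
    return [sum(column) / n_models for column in zip(*predictions)]


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. This is O(n_pos * n_neg), which is
    fine for a small illustration but slow on large datasets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data: two hypothetical models scoring four images.
labels = [0, 0, 1, 1]
model_a = [0.10, 0.40, 0.35, 0.80]
model_b = [0.20, 0.30, 0.60, 0.70]
blended = ensemble_mean([model_a, model_b])
```

In this toy case the blend ranks every positive above every negative even though `model_a` alone does not, which is the kind of gain diverse models can provide.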

Google Landmark Recognition 2020 Competition Third Place Solution

Oct 11, 2020

Abstract: We present our third-place solution to the Google Landmark Recognition 2020 competition. It is an ensemble of Sub-center ArcFace models that use global features only. We introduce dynamic margins for the ArcFace loss, a family of tunable margin functions of class size designed to deal with the extreme imbalance in the GLDv2 dataset. Progressive fine-tuning and careful postprocessing are also key to the solution. Our two submissions scored 0.6344 and 0.6289 on the private leaderboard, both ranking third out of 736 teams.
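A minimal sketch of what a class-size-dependent margin could look like follows. The abstract only states that the margin is a tunable function of class size; the power-law form `a * n**(-lam) + b` and the default values of `a`, `lam`, and `b` are assumptions chosen for illustration, as are the function names.

```python
import math


def dynamic_margin(class_size: int, a: float = 0.5, lam: float = 0.25,
                   b: float = 0.05) -> float:
    """Margin that shrinks as the class gets larger (assumed power-law form).

    Rare classes get a larger angular margin, frequent classes a smaller one.
    """
    if class_size < 1:
        raise ValueError("class_size must be at least 1")
    return a * class_size ** (-lam) + b


def arcface_target_logit(cos_theta: float, margin: float) -> float:
    """ArcFace-style logit for the true class: cos(theta + margin)."""
    # Clamp to the valid acos domain to guard against rounding error.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.cos(math.acos(cos_theta) + margin)


# A rare class (10 images) is penalized more than a common one (1000 images).
rare_logit = arcface_target_logit(0.8, dynamic_margin(10))
common_logit = arcface_target_logit(0.8, dynamic_margin(1000))
```

With these illustrative settings, the same cosine similarity yields a lower target logit for the rare class, so the loss pushes rare classes toward tighter clusters.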

A Strong Baseline and Batch Normalization Neck for Deep Person Re-identification

Jun 19, 2019

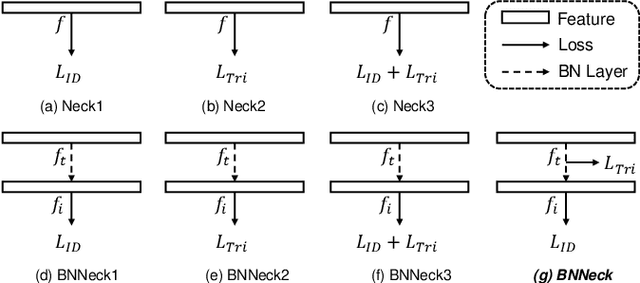

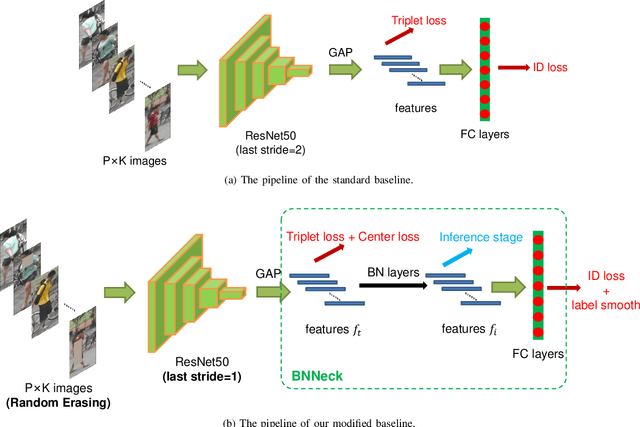

Abstract: This study explores a simple but strong baseline for person re-identification (ReID). Person ReID with deep neural networks has progressed and achieved high performance in recent years. However, many state-of-the-art methods design complex network structures and concatenate multi-branch features. In the literature, some effective training tricks appear only briefly in several papers or in source code. The present study collects and evaluates these effective training tricks in person ReID. By combining these tricks, the model achieves 94.5% rank-1 accuracy and 85.9% mean average precision on Market1501 using only the global features of ResNet50. The performance surpasses all existing global- and part-based baselines in person ReID. We propose a novel neck structure named batch normalization neck (BNNeck). BNNeck adds a batch normalization layer after the global pooling layer to separate the metric and classification losses into two different feature spaces, because we observe that they are inconsistent in one embedding space. Extended experiments show that BNNeck can boost the baseline, and our baseline can improve the performance of existing state-of-the-art methods. Our code and models are available at: https://github.com/michuanhaohao/reid-strong-baseline.
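The BNNeck idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the released implementation: it assumes the pooled feature feeds the metric loss and the batch-normalized feature feeds the classifier, and it omits the learned scale and shift of a real batch normalization layer. The names `batch_norm` and `bnneck` are hypothetical.

```python
import math
from typing import List, Tuple

Batch = List[List[float]]


def batch_norm(features: Batch, eps: float = 1e-5) -> Batch:
    """Normalize each feature dimension to zero mean and unit variance
    over the batch (training-mode statistics, no learned scale or shift)."""
    n = len(features)
    dims = len(features[0])
    means = [sum(f[d] for f in features) / n for d in range(dims)]
    variances = [sum((f[d] - means[d]) ** 2 for f in features) / n
                 for d in range(dims)]
    return [[(f[d] - means[d]) / math.sqrt(variances[d] + eps)
             for d in range(dims)] for f in features]


def bnneck(pooled: Batch) -> Tuple[Batch, Batch]:
    """Return two views of the same globally pooled features.

    feat_metric: features before normalization (assumed input to the
                 metric loss).
    feat_cls:    features after batch normalization (assumed input to the
                 classification layer).
    """
    feat_metric = pooled
    feat_cls = batch_norm(pooled)
    return feat_metric, feat_cls


# Two pooled feature vectors whose dimensions have very different scales.
pooled = [[1.0, 10.0], [3.0, 30.0]]
feat_metric, feat_cls = bnneck(pooled)
```

After normalization both dimensions have comparable scale, while the metric branch still sees the original feature geometry, which is how the two losses end up operating in different spaces.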
