Fubiao Zhang

Cooperative Multi-Agent Transfer Learning with Level-Adaptive Credit Assignment

Jun 03, 2021

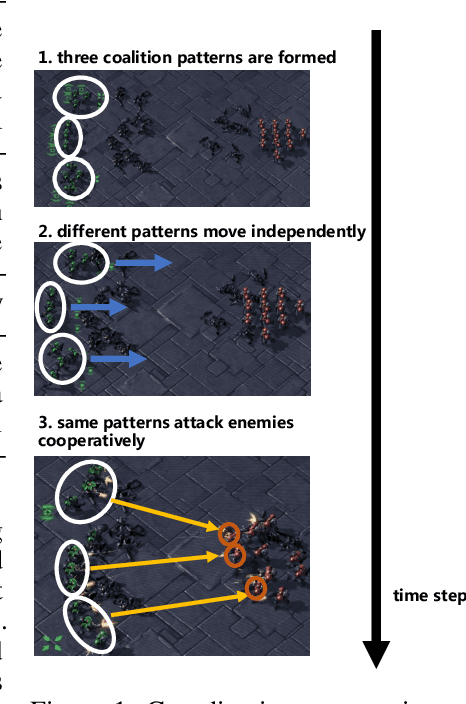

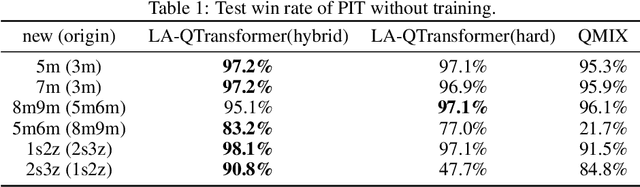

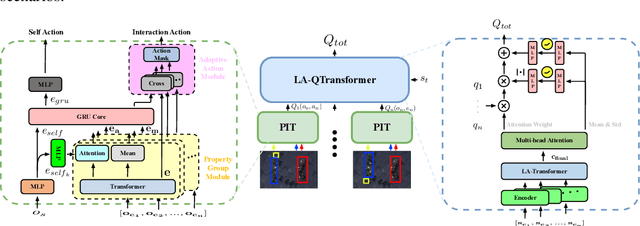

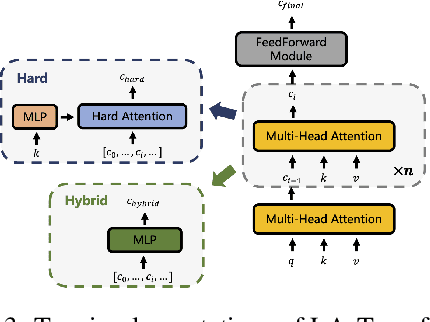

Abstract: Extending transfer learning to cooperative multi-agent reinforcement learning (MARL) has recently received much attention. In contrast to the single-agent setting, the coordination indispensable in cooperative MARL constrains each agent's policy. However, existing transfer methods focus exclusively on agent policy and ignore coordination knowledge. We propose a new architecture that realizes robust coordination knowledge transfer by decomposing the overall coordination into several coordination patterns. We use a novel mixing network named level-adaptive QTransformer (LA-QTransformer) to realize agent coordination that considers credit assignment, with appropriate coordination patterns for different agents realized by a novel level-adaptive Transformer (LA-Transformer) dedicated to the transfer of coordination knowledge. In addition, we use a novel agent network named Population Invariant agent with Transformer (PIT) to realize coordination transfer across a wider variety of scenarios. Extensive experiments in StarCraft II micromanagement show that LA-QTransformer together with PIT achieves superior performance compared with state-of-the-art baselines.

Multi-Agent Reinforcement Learning with Graph Clustering

Sep 28, 2020

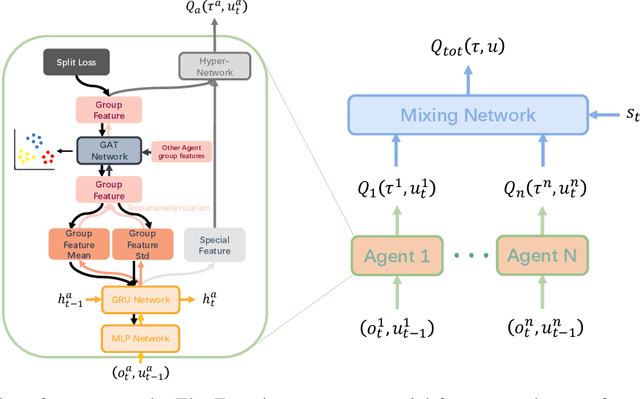

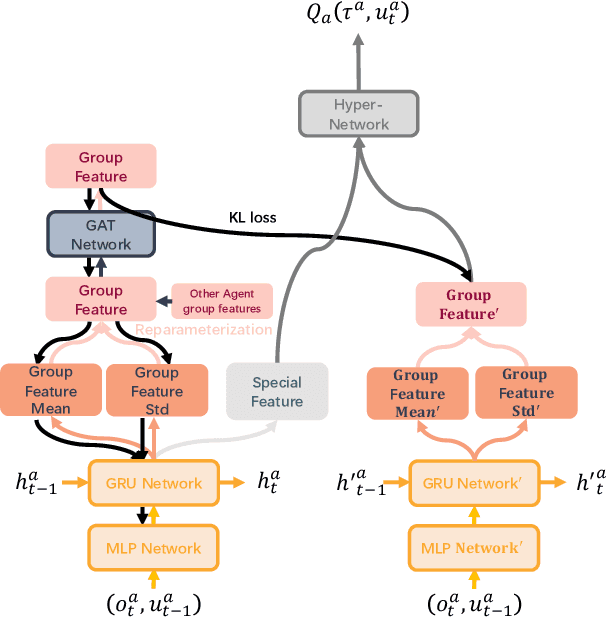

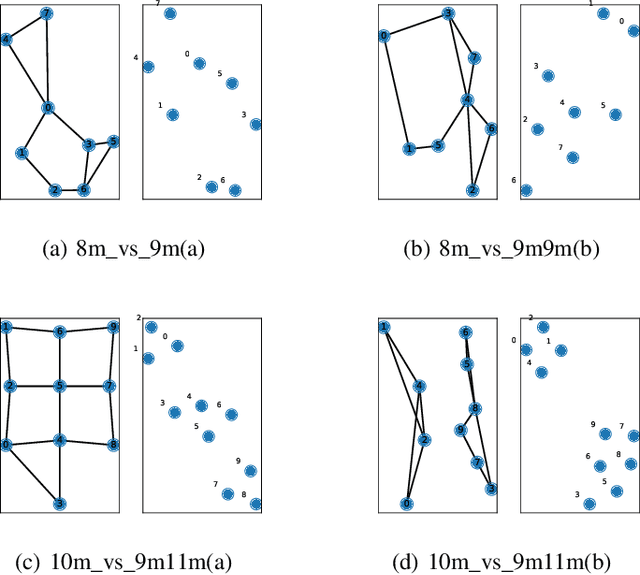

Abstract: In this paper, the group concept is introduced into multi-agent reinforcement learning (MARL). In this method, agents are divided into several groups, each of which completes a specific subtask, and the groups cooperate to accomplish the main task. To exchange information between agents, existing methods mainly use communication vectors, which can lead to communication redundancy. To solve this problem, a MARL method based on graph clustering is proposed. It allows agents to learn group features adaptively and replaces the communication operation. In this approach, agent features are divided into two types, in-group features and individual features, which represent the commonality and the differences between agents, respectively. Based on the graph attention network (GAT), a graph clustering method is introduced to optimize the agent group features. These features are then used to generate individual Q-values. A split loss is presented to distinguish agent features and overcome the consistency problem introduced by GAT. The proposed method can easily be converted into the centralized training with decentralized execution (CTDE) framework by using the Kullback-Leibler divergence. The method is evaluated empirically on a challenging set of StarCraft II micromanagement tasks. The results show that it achieves significant performance improvements in the SMAC domain and maintains strong performance as the number of agents increases.
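
For readers unfamiliar with the GAT building block mentioned in this abstract, the following is a minimal sketch of a generic single-head graph attention aggregation over per-agent feature vectors. It is not the paper's method: the graph clustering step, the split loss, and the Q-value heads are all omitted, and every name here (`gat_layer`, `features`, `adjacency`, `W`, `a`) is a hypothetical choice made for illustration.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU used for the unnormalized attention score."""
    return x if x > 0 else slope * x


def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(features, adjacency, W, a):
    """Generic single-head graph attention (illustrative, not the paper's code).

    features:  one feature vector per agent
    adjacency: adjacency[i][j] is truthy if agent i attends to agent j;
               every agent is assumed to have at least one neighbour
               (for example, a self-loop)
    W:         shared projection matrix (out_dim x in_dim)
    a:         attention vector of length 2 * out_dim
    Returns (aggregated_features, attention_weights).
    """
    projected = [matvec(W, h) for h in features]
    n = len(features)
    aggregated, attention = [], []
    for i in range(n):
        neighbours = [j for j in range(n) if adjacency[i][j]]
        # Score each neighbour from the concatenated projected features.
        scores = [
            leaky_relu(sum(c * v for c, v in zip(a, projected[i] + projected[j])))
            for j in neighbours
        ]
        # Numerically stable softmax over the neighbourhood only.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [0.0] * n
        for j, e in zip(neighbours, exps):
            weights[j] = e / total
        attention.append(weights)
        # Attention-weighted sum of the neighbours' projected features.
        dim = len(projected[i])
        aggregated.append(
            [sum(weights[j] * projected[j][k] for j in neighbours) for k in range(dim)]
        )
    return aggregated, attention


# Toy example: agents 0 and 1 are connected; agent 2 only sees itself.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
adjacency = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
W = [[1.0, 0.0], [0.0, 1.0]]   # identity projection, chosen for readability
a = [1.0, 0.0, 1.0, 0.0]       # arbitrary attention vector
new_features, attn = gat_layer(features, adjacency, W, a)
```

In this toy setup each row of `attn` is a probability distribution over the agents that row's agent is connected to, so unconnected agents receive zero weight. A real implementation would learn `W` and `a` and would typically use a tensor library rather than plain lists.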
