Friedhelm Schwenker

Institute of Neural Information Processing, University of Ulm, Ulm, Germany

ConceptACT: Episode-Level Concepts for Sample-Efficient Robotic Imitation Learning

Jan 23, 2026

Abstract:Imitation learning enables robots to acquire complex manipulation skills from human demonstrations, but current methods rely solely on low-level sensorimotor data while ignoring the rich semantic knowledge humans naturally possess about tasks. We present ConceptACT, an extension of Action Chunking with Transformers that leverages episode-level semantic concept annotations during training to improve learning efficiency. Unlike language-conditioned approaches that require semantic input at deployment, ConceptACT uses human-provided concepts (object properties, spatial relationships, task constraints) exclusively during demonstration collection, adding minimal annotation burden. We integrate concepts using a modified transformer architecture in which the final encoder layer implements concept-aware cross-attention, supervised to align with human annotations. Through experiments on two robotic manipulation tasks with logical constraints, we demonstrate that ConceptACT converges faster and achieves superior sample efficiency compared to standard ACT. Crucially, we show that architectural integration through attention mechanisms significantly outperforms naive auxiliary prediction losses or language-conditioned models. These results demonstrate that properly integrated semantic supervision provides powerful inductive biases for more efficient robot learning.

Revisiting Evaluation of Deep Neural Networks for Pedestrian Detection

Nov 13, 2025

Abstract:Reliable pedestrian detection represents a crucial step towards automated driving systems. However, the current performance benchmarks exhibit weaknesses. The currently applied metrics for various subsets of a validation dataset prohibit a realistic performance evaluation of a DNN for pedestrian detection. As image segmentation supplies fine-grained information about a street scene, it can serve as a starting point to automatically distinguish between different types of errors during the evaluation of a pedestrian detector. In this work, we propose eight error categories for pedestrian detection, together with new metrics for comparing performance along these categories. We use the new metrics to compare various backbones for a simplified version of the APD, and show a more fine-grained and robust way to compare models with each other, especially in terms of safety-critical performance. We achieve SOTA on CityPersons-reasonable (without extra training data) by using a rather simple architecture.

Hybrid quantum tensor networks for aeroelastic applications

Aug 07, 2025

Abstract:We investigate the application of hybrid quantum tensor networks to aeroelastic problems, harnessing the power of Quantum Machine Learning (QML). By combining tensor networks with variational quantum circuits, we demonstrate the potential of QML to tackle complex time series classification and regression tasks. Our results showcase the ability of hybrid quantum tensor networks to achieve high accuracy in binary classification. Furthermore, we observe promising performance in regressing discrete variables. While hyperparameter selection remains a challenge, requiring careful optimisation to unlock the full potential of these models, this work contributes significantly to the development of QML for solving intricate problems in aeroelasticity. We present an end-to-end trainable hybrid algorithm. We first encode time series into tensor networks, then use trainable tensor networks for dimensionality reduction, and convert the resulting tensor to a quantum circuit in the encoding step. Then, a tensor-network-inspired trainable variational quantum circuit is applied to solve either a classification or a multivariate or univariate regression task in the aeroelasticity domain.

PatternPortrait: Draw Me Like One of Your Scribbles

Jan 22, 2024

Abstract:This paper introduces a process for generating abstract portrait drawings from pictures. Their unique style is created by utilizing single freehand pattern sketches as references to generate unique patterns for shading. The method involves extracting facial and body features from images and transforming them into vector lines. A key aspect of the research is the development of a graph neural network architecture designed to learn sketch stroke representations in vector form, enabling the generation of diverse stroke variations. The combination of these two approaches creates joyful abstract drawings that are realized via a pen plotter. The presented process garnered positive feedback from an audience of approximately 280 participants.

Trace and Detect Adversarial Attacks on CNNs using Feature Response Maps

Aug 24, 2022

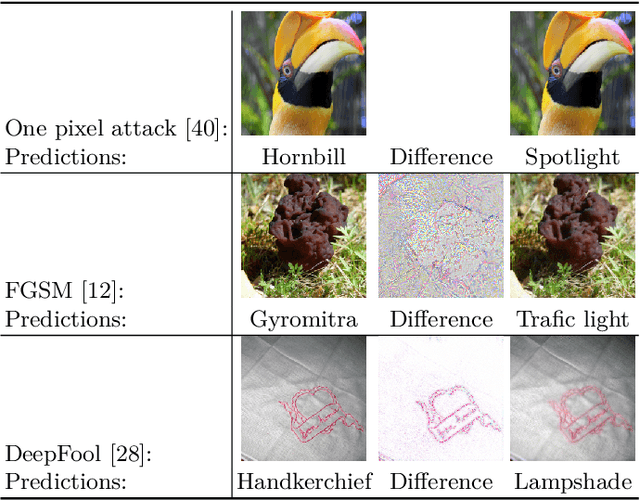

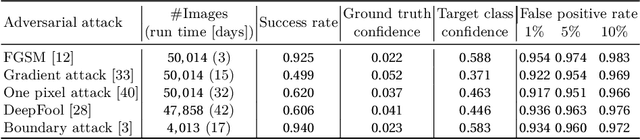

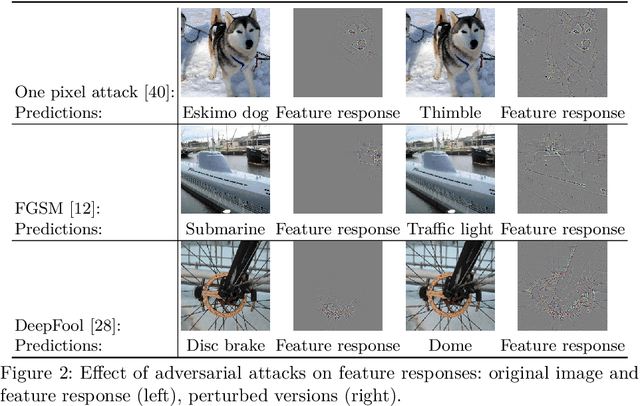

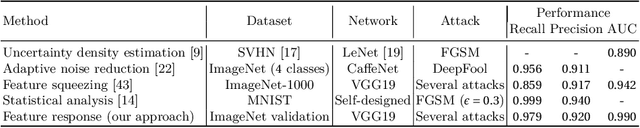

Abstract:The existence of adversarial attacks on convolutional neural networks (CNN) questions the fitness of such models for serious applications. The attacks manipulate an input image such that misclassification is evoked while still looking normal to a human observer -- they are thus not easily detectable. In a different context, backpropagated activations of CNN hidden layers -- "feature responses" to a given input -- have been helpful to visualize for a human "debugger" what the CNN "looks at" while computing its output. In this work, we propose a novel detection method for adversarial examples to prevent attacks. We do so by tracking adversarial perturbations in feature responses, allowing for automatic detection using average local spatial entropy. The method does not alter the original network architecture and is fully human-interpretable. Experiments confirm the validity of our approach for state-of-the-art attacks on large-scale models trained on ImageNet.

* 13 pages, 6 figures
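
The abstract above names "average local spatial entropy" as the detection statistic but does not define it. The following is a minimal sketch of one plausible reading, not the paper's implementation: a feature response map (here a plain list of lists with values in [0, 1]) is cut into non-overlapping windows, the Shannon entropy of each window's binned values is computed, and the results are averaged. The function names, the window size `patch`, and the bin count `bins` are all assumptions made for illustration.

```python
import math
from collections import Counter


def patch_entropy(values, bins=8):
    """Shannon entropy (in bits) of one window; values are assumed to lie in [0, 1]."""
    # Quantise each value into one of `bins` equal-width bins (assumed scheme).
    counts = Counter(min(int(v * bins), bins - 1) for v in values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def average_local_entropy(fmap, patch=2, bins=8):
    """Mean entropy over non-overlapping patch x patch windows of a 2D map."""
    rows, cols = len(fmap), len(fmap[0])
    entropies = []
    for r in range(0, rows - patch + 1, patch):
        for c in range(0, cols - patch + 1, patch):
            window = [fmap[r + i][c + j] for i in range(patch) for j in range(patch)]
            entropies.append(patch_entropy(window, bins))
    return sum(entropies) / len(entropies)


# Toy maps (made up for illustration): a flat response and a scattered one.
smooth = [[0.5] * 4 for _ in range(4)]
noisy = [
    [0.05, 0.95, 0.30, 0.70],
    [0.60, 0.20, 0.85, 0.10],
    [0.45, 0.05, 0.95, 0.55],
    [0.80, 0.35, 0.15, 0.65],
]

print(average_local_entropy(smooth), average_local_entropy(noisy))
```

A flat map scores zero because every window holds a single bin, while a spatially scattered map scores higher. A detector built on this statistic would compare the score against a threshold chosen on clean data; that thresholding step is likewise an assumption here.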

Radial Basis Function Networks for Convolutional Neural Networks to Learn Similarity Distance Metric and Improve Interpretability

Aug 24, 2022

Abstract:Radial basis function neural networks (RBFs) are prime candidates for pattern classification and regression and have been used extensively in classical machine learning applications. However, RBFs have not been integrated into contemporary deep learning research and computer vision using conventional convolutional neural networks (CNNs) due to their lack of adaptability with modern architectures. In this paper, we adapt RBF networks as a classifier on top of CNNs by modifying the training process and introducing a new activation function to train modern vision architectures end-to-end for image classification. The specific architecture of RBFs enables the learning of a similarity distance metric to compare and find similar and dissimilar images. Furthermore, we demonstrate that using an RBF classifier on top of any CNN architecture provides new human-interpretable insights about the decision-making process of the models. Finally, we successfully apply RBFs to a range of CNN architectures and evaluate the results on benchmark computer vision datasets.

* 12 pages, 8 figures
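
To make the idea of an RBF classifier on top of CNN features concrete, here is a minimal sketch under stated assumptions. The paper introduces its own activation function and training changes, which the abstract does not describe; this sketch substitutes the classic Gaussian kernel, and the names `rbf_activations`, `classify`, `centers`, and `gamma` are hypothetical. The feature vector stands in for the output of a CNN backbone.

```python
import math


def rbf_activations(feature, centers, gamma=1.0):
    """Gaussian RBF response of one feature vector to each class center."""
    activations = []
    for center in centers:
        # Squared Euclidean distance between the feature and this center.
        sq_dist = sum((f - c) ** 2 for f, c in zip(feature, center))
        activations.append(math.exp(-gamma * sq_dist))
    return activations


def classify(feature, centers, gamma=1.0):
    """Return (index of the closest center, all activations)."""
    activations = rbf_activations(feature, centers, gamma)
    label = max(range(len(activations)), key=activations.__getitem__)
    return label, activations


# Two made-up class centers in a 2D feature space.
centers = [[0.0, 0.0], [1.0, 1.0]]
label, activations = classify([0.9, 0.8], centers)
print(label, activations)
```

Because each activation is a monotone function of the distance to a center, the same quantities that drive the prediction can also rank stored examples by similarity, which is the interpretability angle the abstract points to.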

PrepNet: A Convolutional Auto-Encoder to Homogenize CT Scans for Cross-Dataset Medical Image Analysis

Aug 19, 2022

Abstract:With the spread of COVID-19 over the world, the need arose for fast and precise automatic triage mechanisms to decelerate the spread of the disease by reducing human effort, e.g. for image-based diagnosis. Although the literature has shown promising efforts in this direction, reported results do not consider the variability of CT scans acquired under varying circumstances, thus rendering resulting models unfit for use on data acquired using e.g. different scanner technologies. While COVID-19 diagnosis can now be done efficiently using PCR tests, this use case exemplifies the need for a methodology to overcome data variability issues in order to make medical image analysis models more widely applicable. In this paper, we explicitly address the variability issue using the example of COVID-19 diagnosis and propose a novel generative approach that aims at erasing the differences induced by e.g. the imaging technology while simultaneously introducing minimal changes to the CT scans through leveraging the idea of deep auto-encoders. The proposed preprocessing architecture (PrepNet) (i) is jointly trained on multiple CT scan datasets and (ii) is capable of extracting improved discriminative features for improved diagnosis. Experimental results on three public datasets (SARS-COVID-2, UCSD COVID-CT, MosMed) show that our model improves cross-dataset generalization by up to 11.84 percentage points despite a minor drop in within-dataset performance.

* 7 pages, 4 figures; peer reviewed and published in IEEE EMBS Regional Conference on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI 2021)

Binary Classification: Counterbalancing Class Imbalance by Applying Regression Models in Combination with One-Sided Label Shifts

Nov 30, 2020

Abstract:In many real-world pattern recognition scenarios, such as in medical applications, the corresponding classification tasks can be of an imbalanced nature. In the current study, we focus on binary, imbalanced classification tasks, i.e., binary classification tasks in which one of the two classes is under-represented (minority class) in comparison to the other class (majority class). In the literature, many different approaches have been proposed, such as under- or oversampling, to counter class imbalance. In the current work, we introduce a novel method, which addresses the issues of class imbalance. To this end, we first transfer the binary classification task to an equivalent regression task. Subsequently, we generate a set of negative and positive target labels, such that the corresponding regression task becomes balanced, with respect to the redefined target label set. We evaluate our approach on a number of publicly available data sets in combination with Support Vector Machines. Moreover, we compare our proposed method to one of the most popular oversampling techniques (SMOTE). Based on the detailed discussion of the presented outcomes of our experimental evaluation, we provide promising ideas for future research directions.
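
The abstract does not spell out how the target labels are redefined, so the following is only a hypothetical illustration of the general idea, not the authors' procedure. It assumes 0/1 class labels, keeps the majority class at unit magnitude, and shifts only the minority target so that all regression targets sum to zero; a regressor trained on these targets would then be thresholded at zero. The function names and the zero-sum balancing criterion are assumptions.

```python
def one_sided_shift_targets(labels):
    """Map 0/1 labels to regression targets whose sum is zero (assumed criterion)."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    # Shift only the minority side; the majority keeps magnitude 1.
    if n_pos <= n_neg:
        pos_target, neg_target = n_neg / n_pos, -1.0
    else:
        pos_target, neg_target = 1.0, -n_pos / n_neg
    return [pos_target if y == 1 else neg_target for y in labels]


def predict_class(score):
    """Turn a regression output back into a class label by its sign."""
    return 1 if score > 0 else 0


# Six majority (0) samples and two minority (1) samples, made up for illustration.
labels = [0, 0, 0, 0, 0, 0, 1, 1]
targets = one_sided_shift_targets(labels)
print(targets, sum(targets))
```

Under this scheme the minority samples pull harder on a squared-error loss, which plays a role similar to class weighting without resampling the data.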

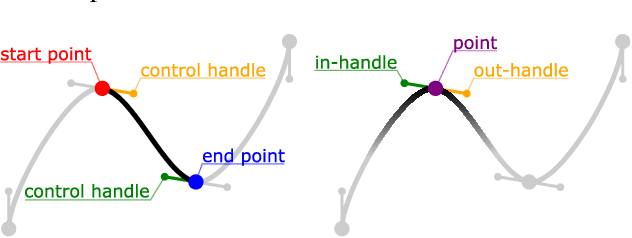

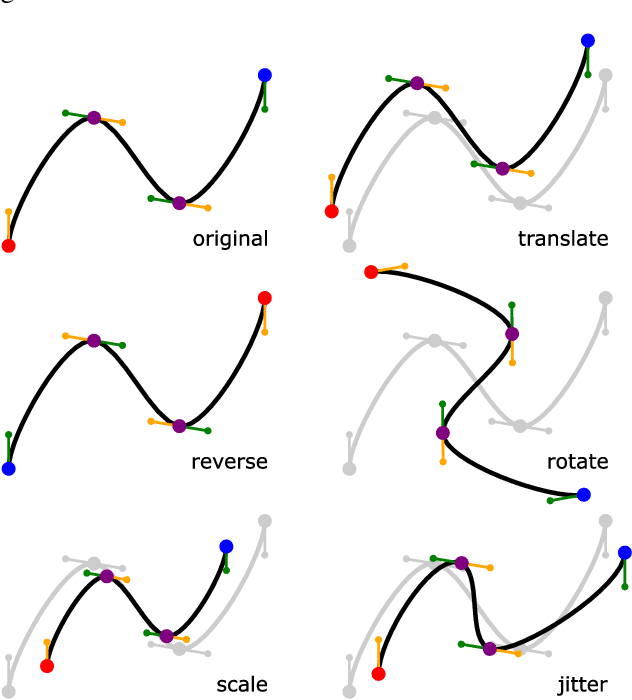

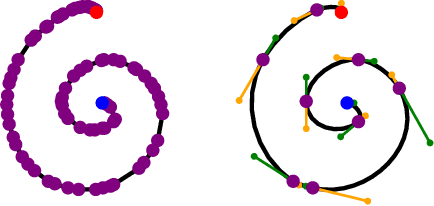

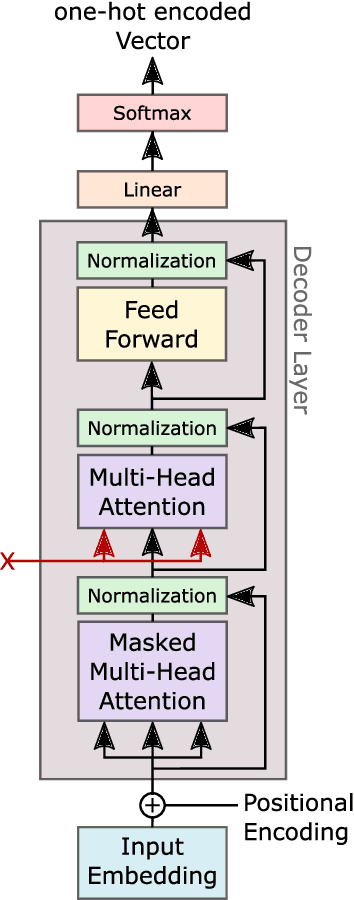

StrokeCoder: Path-Based Image Generation from Single Examples using Transformers

Mar 26, 2020

Abstract:This paper demonstrates how a Transformer Neural Network can be used to learn a Generative Model from a single path-based example image. We further show how a data set can be generated from the example image and how the model can be used to generate a large set of deviated images, which still represent the original image's style and concept.

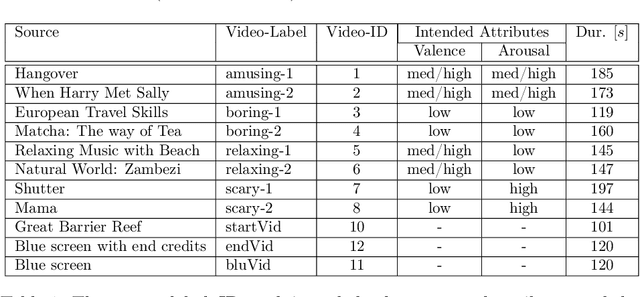

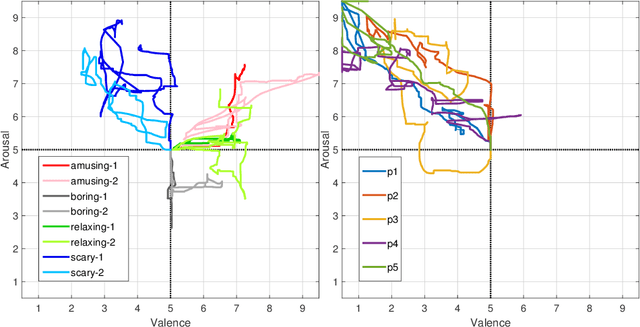

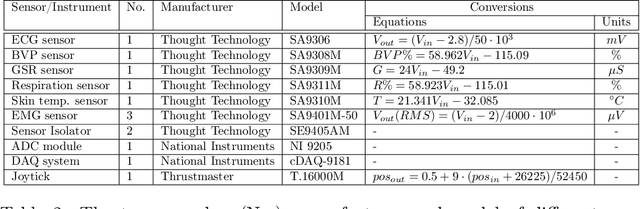

A dataset of continuous affect annotations and physiological signals for emotion analysis

Dec 06, 2018

Abstract:From a computational viewpoint, emotions continue to be intriguingly hard to understand. In research, direct, real-time inspection in realistic settings is not possible. Discrete, indirect, post-hoc recordings are therefore the norm. As a result, proper emotion assessment remains a problematic issue. The Continuously Annotated Signals of Emotion (CASE) dataset provides a solution as it focusses on real-time continuous annotation of emotions, as experienced by the participants, while watching various videos. For this purpose, a novel, intuitive joystick-based annotation interface was developed that allows simultaneous reporting of valence and arousal, which are otherwise often annotated independently. In parallel, eight high-quality, synchronized physiological recordings (1000 Hz, 16-bit ADC) were made of ECG, BVP, EMG (3x), GSR (or EDA), respiration and skin temperature. The dataset consists of the physiological and annotation data from 30 participants, 15 male and 15 female, who watched several validated video-stimuli. The validity of the emotion induction, as exemplified by the annotation and physiological data, is also presented.
