Frieder Stolzenburg

From Data to Commonsense Reasoning: The Use of Large Language Models for Explainable AI

Jul 04, 2024

Abstract:Commonsense reasoning is a difficult task for a computer, but a critical skill for an artificial intelligence (AI). It can enhance the explainability of AI models by enabling them to provide intuitive and human-like explanations for their decisions. This is necessary in many areas, especially in question answering (QA), which is one of the most important tasks of natural language processing (NLP). Over time, a multitude of methods have emerged for solving commonsense reasoning problems, such as knowledge-based approaches using formal logic or linguistic analysis. In this paper, we investigate the effectiveness of large language models (LLMs) on different QA tasks with a focus on their abilities in reasoning and explainability. We study three LLMs: GPT-3.5, Gemma and Llama 3. We further evaluate the LLM results by means of a questionnaire. We demonstrate the ability of LLMs to reason with commonsense as the models outperform humans on different datasets. While GPT-3.5's accuracy ranges from 56% to 93% on various QA benchmarks, Llama 3 achieved a mean accuracy of 90% on all eleven datasets. Thereby, Llama 3 outperforms humans on all datasets, with an average 21% higher accuracy over ten datasets. Furthermore, we find that, in the sense of explainable artificial intelligence (XAI), GPT-3.5 provides good explanations for its decisions. Our questionnaire revealed that 66% of participants rated GPT-3.5's explanations as either "good" or "excellent". Taken together, these findings enrich our understanding of current LLMs and pave the way for future investigations of reasoning and explainability.

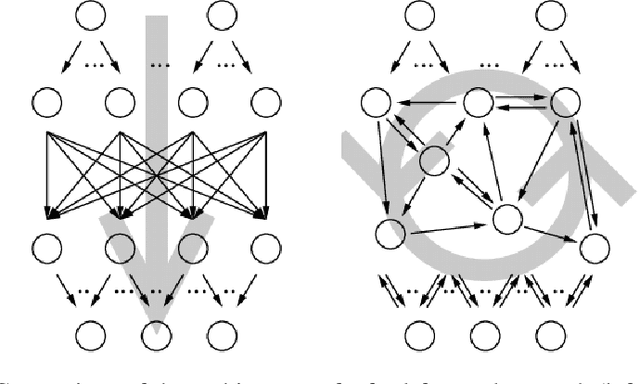

Fast Classification Learning with Neural Networks and Conceptors for Speech Recognition and Car Driving Maneuvers

Feb 10, 2021

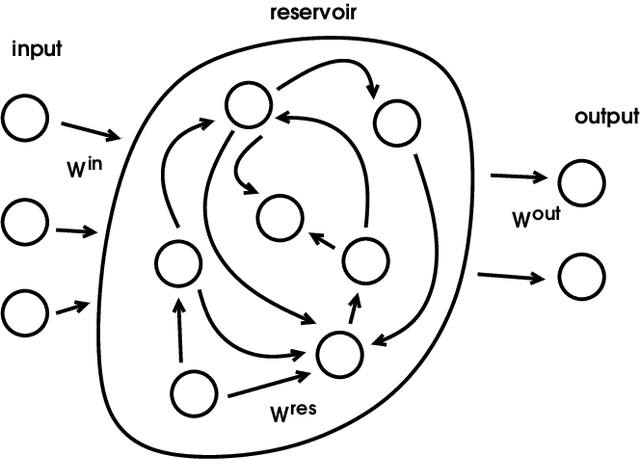

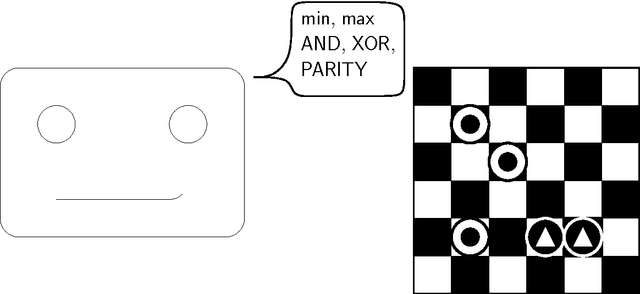

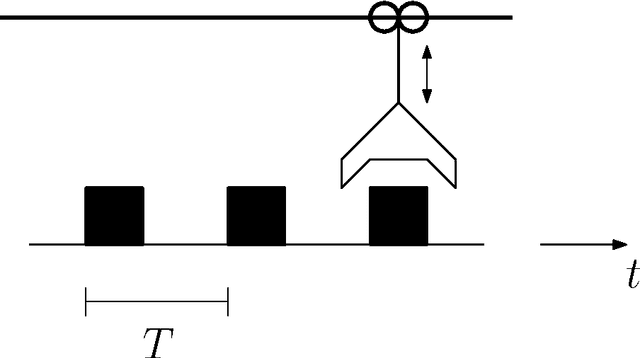

Abstract:Recurrent neural networks are a powerful means in diverse applications. We show that, together with so-called conceptors, they also allow fast learning, in contrast to other deep learning methods. In addition, a relatively small number of examples suffices to train neural networks with high accuracy. We demonstrate this with two applications, namely speech recognition and detecting car driving maneuvers. We improve the state of the art by application-specific preparation techniques: For speech recognition, we use mel frequency cepstral coefficients leading to a compact representation of the frequency spectra, and detecting car driving maneuvers can be done without the commonly used polynomial interpolation, as our evaluation suggests.

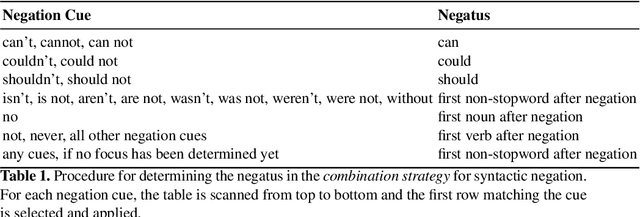

Negation in Cognitive Reasoning

Dec 23, 2020

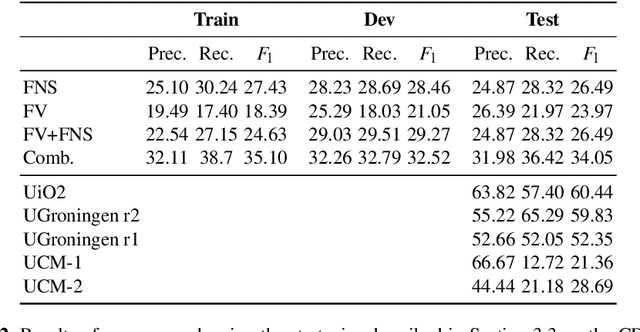

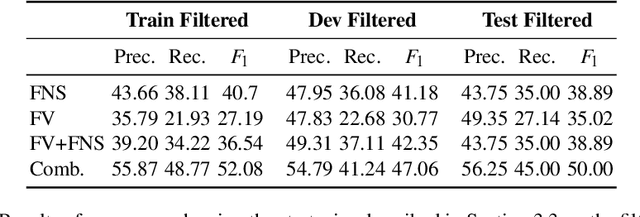

Abstract:Negation is an operation both in formal logic and in natural language by which a proposition is replaced by one stating the opposite, as by the addition of "not" or another negation cue. Treating negation in an adequate way is required for cognitive reasoning, which comprises commonsense reasoning and text comprehension. One task of cognitive reasoning is answering questions given by sentences in natural language. There are tools based on discourse representation theory to convert sentences automatically into a formal logical representation. However, since the knowledge in logical databases in practice is always incomplete, forward reasoning of automated reasoning systems alone does not suffice to derive answers to questions because, instead of complete proofs, often only partial positive knowledge can be derived. In consequence, negative information from negated expressions does not help in this context, because only negative knowledge can be derived from this. Therefore, we aim at reducing syntactic negation, strictly speaking, the negated event or property, to its inverse. This lays the basis of cognitive reasoning employing both logic and machine learning for general question answering. In this paper, we describe an effective procedure to determine the negated event or property in order to replace it with its inverse and our overall system for cognitive reasoning. We demonstrate the procedure with examples and evaluate it with several benchmarks.

Using ConceptNet to Teach Common Sense to an Automated Theorem Prover

Dec 30, 2019

Abstract:The CoRg system is a system to solve commonsense reasoning problems. The core of the CoRg system is the automated theorem prover Hyper that is fed with large amounts of background knowledge. This background knowledge plays a crucial role in solving commonsense reasoning problems. In this paper we present different ways to use knowledge graphs as background knowledge and discuss challenges that arise.

* In Proceedings ARCADE 2019, arXiv:1912.11786

Predictive Neural Networks

May 02, 2018

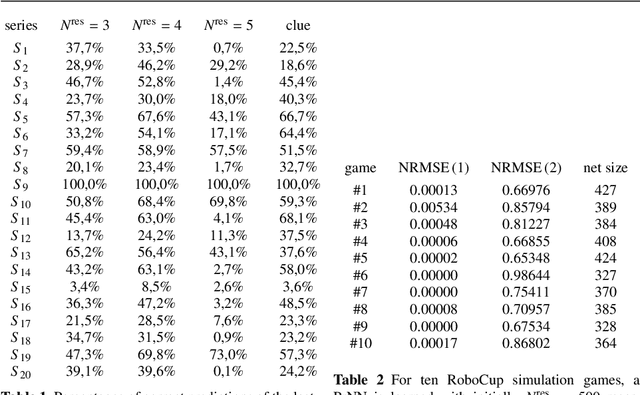

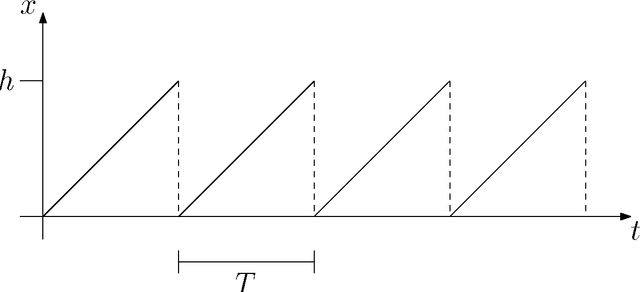

Abstract:Recurrent neural networks are a powerful means to cope with time series. We show that already linearly activated recurrent neural networks can approximate any time-dependent function f(t) given by a number of function values. The approximation can effectively be learned by simply solving a linear equation system; no backpropagation or similar methods are needed. Furthermore, the network size can be reduced by taking only the most relevant components of the network. Thus, in contrast to others, our approach not only learns network weights but also the network architecture. The networks have interesting properties: In the stationary case they end up in ellipse trajectories in the long run, and they allow the prediction of further values and compact representations of functions. We demonstrate this by several experiments, among them multiple superimposed oscillators (MSO) and robotic soccer. Predictive neural networks outperform the previous state-of-the-art for the MSO task with a minimal number of units.
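The idea of learning a linear recurrent model by solving a linear equation system, with no backpropagation, can be illustrated with a deliberately small sketch. It is not the paper's algorithm: it assumes a two-term linear recurrence f(t+1) = a·f(t) + b·f(t-1), fits the two weights by least squares on sampled values of a sine wave, and then predicts further values. The function names and the test signal are hypothetical choices made for this illustration.

```python
import math


def fit_linear_recurrence(values):
    """Least-squares fit of f(t+1) = a*f(t) + b*f(t-1).

    The two weights are obtained by solving the 2x2 normal equations
    directly (Cramer's rule); no iterative training is involved.
    """
    s11 = s12 = s22 = r1 = r2 = 0.0
    for prev, cur, nxt in zip(values, values[1:], values[2:]):
        s11 += cur * cur
        s12 += cur * prev
        s22 += prev * prev
        r1 += cur * nxt
        r2 += prev * nxt
    det = s11 * s22 - s12 * s12
    a = (r1 * s22 - r2 * s12) / det
    b = (s11 * r2 - s12 * r1) / det
    return a, b


def predict(values, a, b, steps):
    """Run the fitted linear recurrence forward from the last two samples."""
    prev, cur = values[-2], values[-1]
    out = []
    for _ in range(steps):
        prev, cur = cur, a * cur + b * prev
        out.append(cur)
    return out


# Hypothetical test signal: a single noise-free oscillator.
omega = 0.3
samples = [math.sin(omega * t) for t in range(50)]

a, b = fit_linear_recurrence(samples)
forecast = predict(samples, a, b, 10)
truth = [math.sin(omega * t) for t in range(50, 60)]
max_error = max(abs(p - q) for p, q in zip(forecast, truth))
print(a, b, max_error)
```

For a pure sinusoid the recurrence holds exactly with a = 2·cos(ω) and b = -1, so the fitted weights should match these values up to floating-point error. Superimposed oscillators would need a longer recurrence, which this sketch does not attempt.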

RoboCupSimData: A RoboCup soccer research dataset

Nov 06, 2017

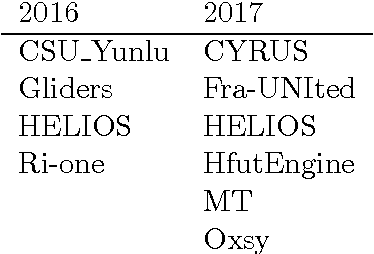

Abstract:RoboCup is an international scientific robot competition in which teams of multiple robots compete against each other. Its different leagues provide many sources of robotics data that can be used for further analysis and application of machine learning. This paper describes a large dataset from games of some of the top teams (from 2016 and 2017) in RoboCup Soccer Simulation League (2D), where teams of 11 robots (agents) compete against each other. Overall, we used 10 different teams to play each other, resulting in 45 unique pairings. For each pairing, we ran 25 matches (of 10 minutes each), leading to 1125 matches or more than 180 hours of game play. The generated CSV files are 17 GB of data (zipped), or 229 GB (unzipped). The dataset is unique in the sense that it contains both the ground truth data (global, complete, noise-free information of all objects on the field), as well as the noisy, local and incomplete percepts of each robot. These data are made available as CSV files, as well as in the original soccer simulator formats.
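The dataset size figures quoted in the abstract follow from simple counting, which the short check below reproduces. The variable names are illustrative; the only inputs are the numbers stated in the abstract (10 teams, 25 matches per pairing, 10 minutes per match).

```python
from math import comb

teams = 10
matches_per_pairing = 25
minutes_per_match = 10

# Each unordered pair of distinct teams is one pairing.
pairings = comb(teams, 2)
total_matches = pairings * matches_per_pairing
total_hours = total_matches * minutes_per_match / 60

print(pairings, total_matches, total_hours)  # 45 1125 187.5
```

The result of 187.5 hours is consistent with the abstract's "more than 180 hours of game play".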

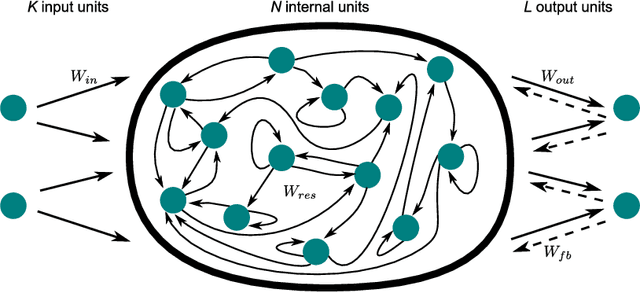

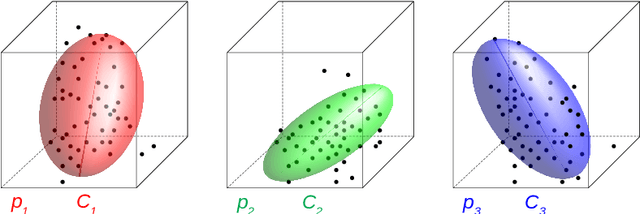

Analysing Soccer Games with Clustering and Conceptors

Aug 19, 2017

Abstract:We present a new approach for identifying situations and behaviours, which we call "moves", from soccer games in the 2D simulation league. Being able to identify key situations and behaviours is a useful capability for analysing soccer matches, anticipating opponent behaviours to aid selection of appropriate tactics, and also as a prerequisite for automatic learning of behaviours and policies. To support a wide set of strategies, our goal is to identify situations from data, in an unsupervised way without making use of pre-defined soccer-specific concepts such as "pass" or "dribble". The recurrent neural networks we use in our approach act as a high-dimensional projection of the recent history of a situation on the field. Similar situations, i.e., with similar histories, are found by clustering of network states. The same networks are also used to learn so-called conceptors, which are lower-dimensional manifolds that describe trajectories through a high-dimensional state space and enable situation-specific predictions from the same neural network. With the proposed approach, we can segment games into sequences of situations that are learnt in an unsupervised way, and learn conceptors that are useful for the prediction of the near future of the respective situation.

* To appear in RoboCup 2017: Robot World Cup XXI; Springer, 2018

Neural Networks and Continuous Time

Jun 14, 2016

Abstract:The fields of neural computation and artificial neural networks have developed much in the last decades. Most of the works in these fields focus on implementing and/or learning discrete functions or behavior. However, technical, physical, and also cognitive processes evolve continuously in time. This cannot be described directly with standard architectures of artificial neural networks such as multi-layer feed-forward perceptrons. Therefore, in this paper, we will argue that neural networks modeling continuous time are needed explicitly for this purpose, because with them the synthesis and analysis of continuous and possibly periodic processes in time are possible (e.g. for robot behavior) besides computing discrete classification functions (e.g. for logical reasoning). We will relate possible neural network architectures to (hybrid) automata models that allow continuous processes to be expressed.

The RatioLog Project: Rational Extensions of Logical Reasoning

Jul 30, 2015

Abstract:Higher-level cognition includes logical reasoning and the ability of question answering with common sense. The RatioLog project addresses the problem of rational reasoning in deep question answering by methods from automated deduction and cognitive computing. In a first phase, we combine techniques from information retrieval and machine learning to find appropriate answer candidates from the huge amount of text in the German version of the free encyclopedia "Wikipedia". In a second phase, an automated theorem prover tries to verify the answer candidates on the basis of their logical representations. In a third phase, because the knowledge may be incomplete and inconsistent, we consider extensions of logical reasoning to improve the results. In this context, we work toward the application of techniques from human reasoning: We employ defeasible reasoning to compare the answers w.r.t. specificity, deontic logic, normative reasoning, and model construction. Moreover, we use integrated case-based reasoning and machine learning techniques on the basis of the semantic structure of the questions and answer candidates to learn to give the right answers.

* 7 pages, 3 figures

Automated Reasoning for Robot Ethics

Feb 20, 2015

Abstract:Deontic logic is a very well researched branch of mathematical logic and philosophy. Various kinds of deontic logics are considered for different application domains like argumentation theory, legal reasoning, and acts in multi-agent systems. In this paper, we show how standard deontic logic can be used to model ethical codes for multi-agent systems. Furthermore we show how Hyper, a high performance theorem prover, can be used to prove properties of these ethical codes.
