Sophie Siebert

Negation in Cognitive Reasoning

Dec 23, 2020

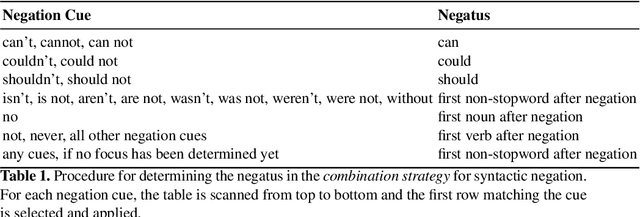

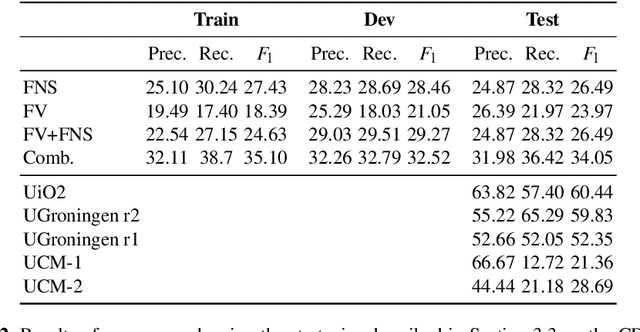

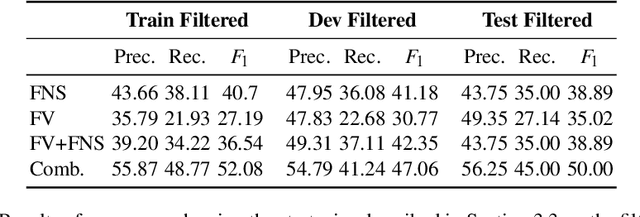

Abstract: Negation is an operation in both formal logic and natural language by which a proposition is replaced by one stating the opposite, as by the addition of "not" or another negation cue. Treating negation adequately is required for cognitive reasoning, which comprises commonsense reasoning and text comprehension. One task of cognitive reasoning is answering questions posed as natural-language sentences. There are tools based on discourse representation theory that automatically convert sentences into a formal logical representation. However, since the knowledge in logical databases is always incomplete in practice, forward reasoning by automated reasoning systems alone does not suffice to derive answers to questions: instead of complete proofs, often only partial positive knowledge can be derived. Consequently, negative information from negated expressions does not help in this context, because only negative knowledge can be derived from it. Therefore, we aim to reduce syntactic negation (strictly speaking, the negated event or property) to its inverse. This lays the basis for cognitive reasoning that employs both logic and machine learning for general question answering. In this paper, we describe an effective procedure to determine the negated event or property in order to replace it with its inverse, and we present our overall system for cognitive reasoning. We demonstrate the procedure with examples and evaluate it on several benchmarks.
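To make the idea of replacing a negated property with its inverse concrete, here is a minimal Python sketch. It is not the procedure from the paper: the antonym table `INVERSES`, the cue set `NEGATION_CUES`, and the function `replace_negation` are hypothetical names introduced for illustration, and the sketch assumes that the negated property is simply the token directly after the negation cue. Determining the negated event or property in real sentences is the harder problem the abstract refers to.

```python
# Hypothetical toy antonym table (an assumption for illustration only;
# the lexical resource used by the actual system is not stated here).
INVERSES = {"open": "closed", "happy": "sad", "awake": "asleep"}

# Hypothetical set of negation cues.
NEGATION_CUES = {"not", "never"}


def replace_negation(tokens):
    """Replace 'cue + property' with the property's inverse when one is known.

    Simplifying assumption: the negated property is the token that
    immediately follows the negation cue.
    """
    out = []
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        if (
            tok.lower() in NEGATION_CUES
            and nxt is not None
            and nxt.lower() in INVERSES
        ):
            # Drop the cue and emit the inverse of the negated property.
            out.append(INVERSES[nxt.lower()])
            i += 2
        else:
            # No known inverse: keep the token (and any negation) unchanged.
            out.append(tok)
            i += 1
    return out
```

Under these assumptions, `replace_negation("The door is not open".split())` yields the tokens of "The door is closed", while a sentence whose negated word has no entry in the table is returned unchanged.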

Using ConceptNet to Teach Common Sense to an Automated Theorem Prover

Dec 30, 2019

Abstract: The CoRg system solves commonsense reasoning problems. Its core is the automated theorem prover Hyper, which is fed with large amounts of background knowledge. This background knowledge plays a crucial role in solving commonsense reasoning problems. In this paper, we present different ways to use knowledge graphs as background knowledge and discuss the challenges that arise.

* In Proceedings ARCADE 2019, arXiv:1912.11786
