Stefanie Krause

Feeling Machines: Ethics, Culture, and the Rise of Emotional AI

Jun 14, 2025

Abstract:This paper explores the growing presence of emotionally responsive artificial intelligence through a critical and interdisciplinary lens. Bringing together the voices of early-career researchers from multiple fields, it examines how AI systems that simulate or interpret human emotions are reshaping our interactions in areas such as education, healthcare, mental health, caregiving, and digital life. The analysis is structured around four central themes: the ethical implications of emotional AI, the cultural dynamics of human-machine interaction, the risks and opportunities for vulnerable populations, and the emerging regulatory, design, and technical considerations. The authors highlight the potential of affective AI to support mental well-being, enhance learning, and reduce loneliness, as well as the risks of emotional manipulation, over-reliance, misrepresentation, and cultural bias. Key challenges include simulating empathy without genuine understanding, encoding dominant sociocultural norms into AI systems, and insufficient safeguards for individuals in sensitive or high-risk contexts. Special attention is given to children, elderly users, and individuals with mental health challenges, who may interact with AI in emotionally significant ways. However, the cognitive or legal protections necessary to navigate such engagements safely remain lacking. The report concludes with ten recommendations, including the need for transparency, certification frameworks, region-specific fine-tuning, human oversight, and longitudinal research. A curated supplementary section provides practical tools, models, and datasets to support further work in this domain.

Generative AI in Education: Student Skills and Lecturer Roles

Apr 28, 2025

Abstract:Generative Artificial Intelligence (GenAI) tools such as ChatGPT are emerging as revolutionary tools in education that bring both benefits and challenges for educators and students, reshaping how learning and teaching are approached. This study aims to identify and evaluate the key competencies students need to effectively engage with GenAI in education and to provide strategies for lecturers to integrate GenAI into teaching practices. The study applied a mixed-method approach, combining a literature review with a quantitative survey of 130 students from South Asia and Europe. The literature review identified 14 essential student skills for GenAI engagement, with AI literacy, critical thinking, and ethical AI practices emerging as the most critical. The student survey revealed gaps in prompt engineering, bias awareness, and AI output management. In our study of lecturer strategies, we identified six key areas, with GenAI Integration and Curriculum Design being the most emphasised. Our findings highlight the importance of incorporating GenAI into education. While the literature prioritized ethics and policy development, students favour hands-on, project-based learning and practical AI applications. To foster inclusive and responsible GenAI adoption, institutions should ensure equitable access to GenAI tools, establish clear academic integrity policies, and advocate for global GenAI research initiatives.

From Data to Commonsense Reasoning: The Use of Large Language Models for Explainable AI

Jul 04, 2024

Abstract:Commonsense reasoning is a difficult task for a computer, but a critical skill for an artificial intelligence (AI). It can enhance the explainability of AI models by enabling them to provide intuitive and human-like explanations for their decisions. This is necessary in many areas, especially in question answering (QA), which is one of the most important tasks of natural language processing (NLP). Over time, a multitude of methods have emerged for solving commonsense reasoning problems, such as knowledge-based approaches using formal logic or linguistic analysis. In this paper, we investigate the effectiveness of large language models (LLMs) on different QA tasks with a focus on their abilities in reasoning and explainability. We study three LLMs: GPT-3.5, Gemma and Llama 3. We further evaluate the LLM results by means of a questionnaire. We demonstrate the ability of LLMs to reason with commonsense as the models outperform humans on different datasets. While GPT-3.5's accuracy ranges from 56% to 93% on various QA benchmarks, Llama 3 achieved a mean accuracy of 90% on all eleven datasets. Llama 3 thereby outperforms humans on all datasets, with an average 21% higher accuracy over ten datasets. Furthermore, we find that, in the sense of explainable artificial intelligence (XAI), GPT-3.5 provides good explanations for its decisions. Our questionnaire revealed that 66% of participants rated GPT-3.5's explanations as either "good" or "excellent". Taken together, these findings enrich our understanding of current LLMs and pave the way for future investigations of reasoning and explainability.

The Evolution of Learning: Assessing the Transformative Impact of Generative AI on Higher Education

Apr 16, 2024

Abstract:Generative Artificial Intelligence (GAI) models such as ChatGPT have experienced a surge in popularity, attracting 100 million active users in 2 months and generating an estimated 10 million daily queries. Despite this remarkable adoption, there remains a limited understanding of the extent to which this innovative technology influences higher education. This research paper investigates the impact of GAI on university students and Higher Education Institutions (HEIs). The study adopts a mixed-methods approach, combining a comprehensive survey with scenario analysis to explore potential benefits, drawbacks, and transformative changes the new technology brings. Using an online survey with 130 participants, we assessed students' perspectives and attitudes concerning present ChatGPT usage in academics. Results show that students use the current technology for tasks like assignment writing and exam preparation and believe it to be an effective help in achieving academic goals. The subsequent scenario analysis projected potential future scenarios, providing valuable insights into the possibilities and challenges associated with incorporating GAI into higher education. The main motivation is to gain a tangible and precise understanding of the potential consequences for HEIs and to provide guidance on responding to the evolving learning environment. The findings indicate that irresponsible and excessive use of the technology could result in significant challenges. Hence, HEIs must develop stringent policies, reevaluate learning objectives, upskill their lecturers, adjust the curriculum and reconsider examination approaches.

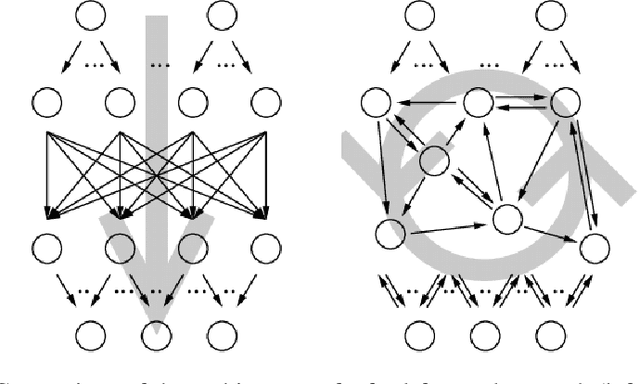

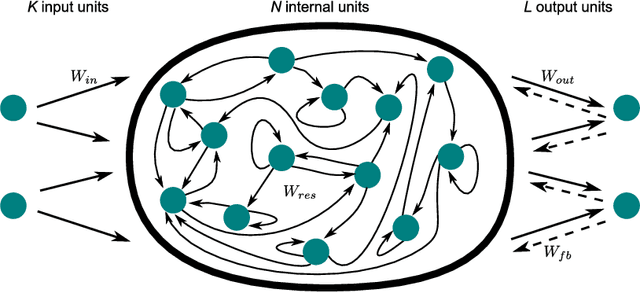

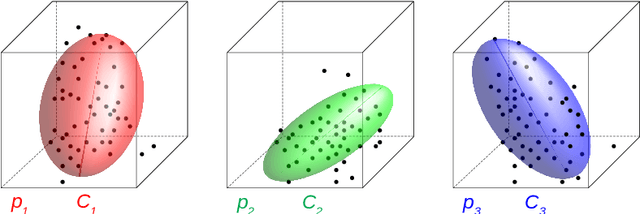

Fast Classification Learning with Neural Networks and Conceptors for Speech Recognition and Car Driving Maneuvers

Feb 10, 2021

Abstract:Recurrent neural networks are a powerful tool in diverse applications. We show that, together with so-called conceptors, they also allow fast learning, in contrast to other deep learning methods. In addition, a relatively small number of examples suffices to train neural networks with high accuracy. We demonstrate this with two applications, namely speech recognition and detecting car driving maneuvers. We improve the state of the art by application-specific preparation techniques: For speech recognition, we use mel frequency cepstral coefficients leading to a compact representation of the frequency spectra, and detecting car driving maneuvers can be done without the commonly used polynomial interpolation, as our evaluation suggests.
