Francesco Pasti

MicroFlow: An Efficient Rust-Based Inference Engine for TinyML

Sep 28, 2024

Abstract: MicroFlow is an open-source TinyML framework for the deployment of Neural Networks (NNs) on embedded systems using the Rust programming language. It is specifically designed for efficiency and robustness, making it suitable for applications in critical environments. To achieve these objectives, MicroFlow employs a compiler-based inference engine approach, coupled with Rust's memory safety and features. The proposed solution enables the successful deployment of NNs on highly resource-constrained devices, including bare-metal 8-bit microcontrollers with only 2 kB of RAM. Furthermore, MicroFlow is able to use less Flash and RAM than other state-of-the-art solutions for deploying NN reference models (i.e., wake-word and person detection). It can also achieve faster inference compared to existing engines on medium-size NNs, and similar performance on bigger ones. The experimental results prove the efficiency and suitability of MicroFlow for the deployment of TinyML models in critical environments where resources are particularly limited.

Tiny Robotics Dataset and Benchmark for Continual Object Detection

Sep 24, 2024

Abstract: Detecting objects in mobile robotics is crucial for numerous applications, from autonomous navigation to inspection. However, robots are often required to perform tasks in domains that differ from the training one and need to adapt to these changes. Tiny mobile robots, subject to size, power, and computational constraints, encounter even more difficulties in running and adapting these algorithms. Such adaptability, though, is crucial for real-world deployment, where robots must operate effectively in dynamic and unpredictable settings. In this work, we introduce a novel benchmark to evaluate the continual learning capabilities of object detection systems in tiny robotic platforms. Our contributions include: (i) Tiny Robotics Object Detection (TiROD), a comprehensive dataset collected using a small mobile robot, designed to test the adaptability of object detectors across various domains and classes; (ii) an evaluation of state-of-the-art real-time object detectors combined with different continual learning strategies on this dataset, providing detailed insights into their performance and limitations; and (iii) the public release of the data and the code needed to replicate the results, to foster continuous advancements in this field. Our benchmark results indicate key challenges that must be addressed to advance the development of robust and efficient object detection systems for tiny robotics.

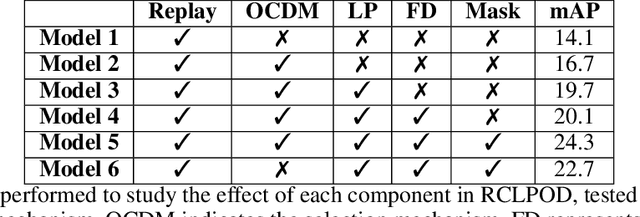

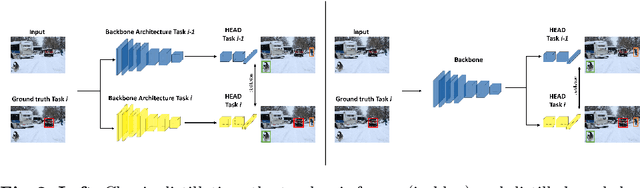

Replay Consolidation with Label Propagation for Continual Object Detection

Sep 09, 2024

Abstract: Object Detection is a highly relevant computer vision problem with many applications such as robotics and autonomous driving. Continual Learning (CL) considers a setting where a model incrementally learns new information while retaining previously acquired knowledge. This is particularly challenging since Deep Learning models tend to catastrophically forget old knowledge while training on new data. In particular, Continual Learning for Object Detection (CLOD) poses additional difficulties compared to CL for Classification. In CLOD, images from previous tasks may contain unknown classes that could reappear labeled in future tasks. These missing annotations cause task interference issues for replay-based approaches. As a result, most works in the literature have focused on distillation-based approaches. However, these approaches are effective only when there is a strong overlap of classes across tasks. To address the issues of current methodologies, we propose a novel technique to solve CLOD called Replay Consolidation with Label Propagation for Object Detection (RCLPOD). Based on the replay method, our solution avoids task interference issues by enhancing the buffer memory samples. Our method is evaluated against existing techniques in CLOD literature, demonstrating its superior performance on established benchmarks like VOC and COCO.

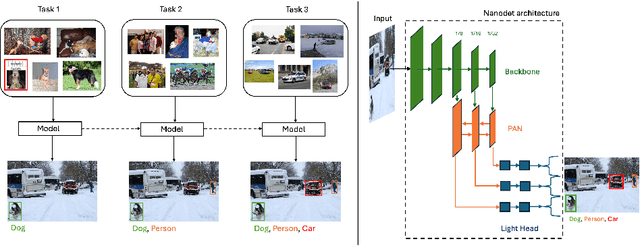

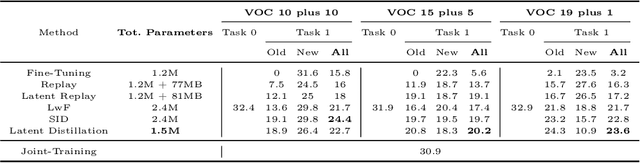

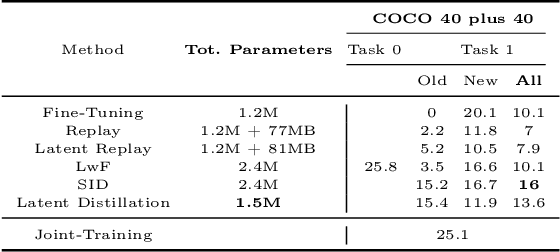

Latent Distillation for Continual Object Detection at the Edge

Sep 03, 2024

Abstract: While numerous methods achieving remarkable performance exist in the Object Detection literature, addressing data distribution shifts remains challenging. Continual Learning (CL) offers solutions to this issue, enabling models to adapt to new data while maintaining performance on previous data. This is particularly pertinent for edge devices, common in dynamic environments like automotive and robotics. In this work, we address the memory and computation constraints of edge devices in the Continual Learning for Object Detection (CLOD) scenario. Specifically, (i) we investigate the suitability of an open-source, lightweight, and fast detector, namely NanoDet, for CLOD on edge devices, improving upon larger architectures used in the literature. Moreover, (ii) we propose a novel CL method, called Latent Distillation (LD), that reduces the number of operations and the memory required by state-of-the-art CL approaches without significantly compromising detection performance. Our approach is validated using the well-known VOC and COCO benchmarks, reducing the distillation parameter overhead by 74% and the Floating Point Operations (FLOPs) by 56% per model update compared to other distillation methods.

OpenNav: Efficient Open Vocabulary 3D Object Detection for Smart Wheelchair Navigation

Aug 25, 2024

Abstract: Open vocabulary 3D object detection (OV3D) allows precise and extensible object recognition, which is crucial for adapting to the diverse environments encountered in assistive robotics. This paper presents OpenNav, a zero-shot 3D object detection pipeline based on RGB-D images for smart wheelchairs. Our pipeline integrates an open-vocabulary 2D object detector with a mask generator for semantic segmentation, followed by depth isolation and point cloud construction to create 3D bounding boxes. The smart wheelchair exploits these 3D bounding boxes to identify potential targets and navigate safely. We demonstrate OpenNav's performance through experiments on the Replica dataset and we report preliminary results with a real wheelchair. OpenNav improves significantly on the state of the art on the Replica dataset at mAP25 (+9pts) and mAP50 (+5pts), with marginal improvement at mAP. The code is publicly available at this link: https://github.com/EasyWalk-PRIN/OpenNav.
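The last stage of the pipeline described in the OpenNav abstract, going from a segmentation mask and a depth image to a 3D bounding box, can be illustrated with a minimal sketch. This is not the OpenNav implementation: the function names `backproject` and `axis_aligned_box`, the `max_depth` cutoff standing in for "depth isolation", and the axis-aligned box are all assumptions made for illustration. It assumes a standard pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and depth values in metres.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject(
    depth: Sequence[Sequence[float]],
    mask: Sequence[Sequence[bool]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_depth: float = 10.0,  # hypothetical cutoff, not taken from the paper
) -> List[Point]:
    """Turn masked depth pixels into camera-frame 3D points (pinhole model)."""
    points: List[Point] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, inside) in enumerate(zip(depth_row, mask_row)):
            # Skip pixels outside the object mask and invalid or far readings.
            if not inside or z <= 0.0 or z > max_depth:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def axis_aligned_box(points: Sequence[Point]) -> Optional[Tuple[Point, Point]]:
    """Return (min_corner, max_corner) of the points, or None if there are none."""
    if not points:
        return None
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


if __name__ == "__main__":
    # Toy 2x2 depth image; one pixel is masked out and one has no depth reading.
    depth = [[2.0, 2.0], [0.0, 4.0]]
    mask = [[True, False], [True, True]]
    pts = backproject(depth, mask, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
    print(axis_aligned_box(pts))
```

In a real system the mask would come from the 2D detector and mask generator, and the points would typically be filtered for outliers before fitting a box; those steps are omitted here.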
