Francesco Mauro

Benchmarking of a new data splitting method on volcanic eruption data

Oct 08, 2024

Abstract: In this paper, a novel method for data splitting is presented: an iterative procedure divides the input dataset of volcanic eruptions, chosen as the use case, into two parts using a dissimilarity index calculated on the cumulative histograms of these two parts. The Cumulative Histogram Dissimilarity (CHD) index is introduced as part of the design. Based on the obtained results, the proposed method achieves the best performance compared with both random splitting and K-means implemented over different configurations, at the cost of a slightly higher number of training epochs. This higher epoch count, however, indicates that the model can learn more deeply from the input dataset, which is attributable to the quality of the splitting. In fact, each model was trained with early stopping, which halts training when overfitting is detected, and the higher number of epochs for the proposed method shows that early stopping did not detect overfitting; consequently, the learning was optimal.
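The abstract does not give the CHD formula or the exact iteration scheme, so the following is only a sketch under stated assumptions. For a single numeric feature, CHD is taken (hypothetically) as the mean absolute difference between the two parts' normalized cumulative histograms on shared bin edges, and the iterative procedure is approximated by sampling random candidate splits and keeping the one with the lowest CHD. The names `chd` and `chd_split`, the 32 bins and the 200 iterations are illustrative choices, not the authors'.

```python
import numpy as np

def cumulative_histogram(values, edges):
    """Normalized cumulative histogram, i.e. an empirical CDF sampled at the bin edges."""
    counts, _ = np.histogram(values, bins=edges)
    cum = np.cumsum(counts).astype(float)
    return cum / cum[-1]

def chd(a, b, n_bins=32):
    """Hypothetical CHD (not the paper's formula): mean absolute gap between cumulative histograms."""
    edges = np.histogram_bin_edges(np.concatenate([a, b]), bins=n_bins)
    gap = np.abs(cumulative_histogram(a, edges) - cumulative_histogram(b, edges))
    return float(np.mean(gap))

def chd_split(x, train_frac=0.8, n_iter=200, seed=0):
    """Hypothetical iterative split: sample random partitions, keep the one with the lowest CHD."""
    rng = np.random.default_rng(seed)
    n_train = int(round(train_frac * len(x)))
    best_idx, best_score = None, np.inf
    for _ in range(n_iter):
        perm = rng.permutation(len(x))
        score = chd(x[perm[:n_train]], x[perm[n_train:]])
        if score < best_score:
            best_idx, best_score = perm, score
    return best_idx[:n_train], best_idx[n_train:], best_score
```

A lower CHD means the two parts have more similar value distributions, so the retained split should give training and test sets that cover the same range of the feature; extending this to multivariate eruption data would require a per-feature aggregation that the abstract does not describe.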

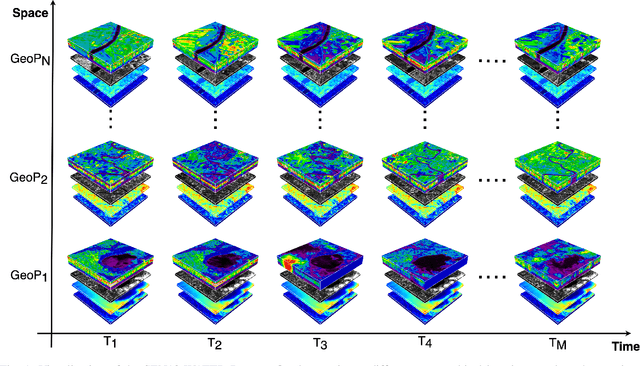

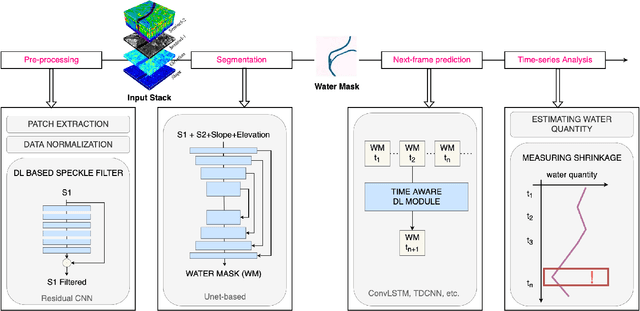

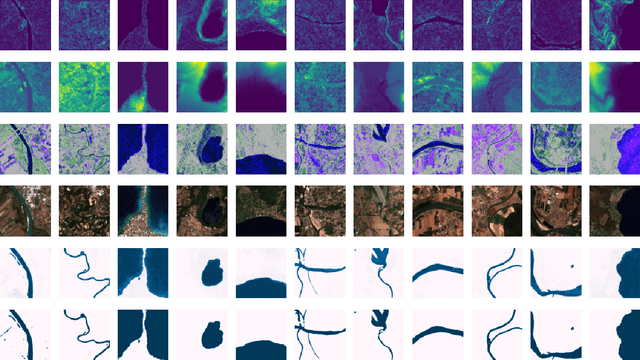

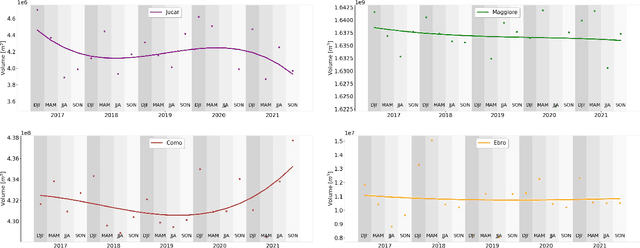

SEN12-WATER: A New Dataset for Hydrological Applications and its Benchmarking

Sep 25, 2024

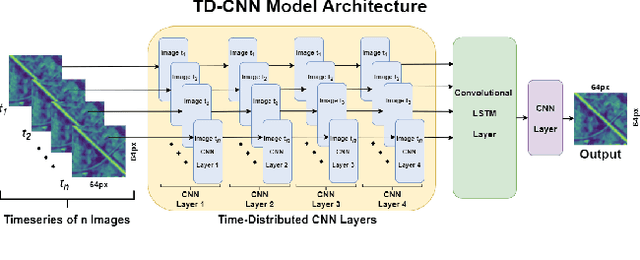

Abstract: Climate change and increasing droughts pose significant challenges to water resource management around the world. These problems lead to severe water shortages that threaten ecosystems, agriculture, and human communities. To advance the fight against these challenges, we present a new dataset, SEN12-WATER, along with a benchmark using a novel end-to-end Deep Learning (DL) framework for proactive drought-related analysis. The dataset, structured as a spatiotemporal datacube, integrates SAR polarization, elevation, slope, and multispectral optical bands. Our DL framework enables the analysis and estimation of water losses over time in reservoirs of interest, revealing significant insights into water dynamics for drought analysis by examining temporal changes in physical quantities such as water volume. Our methodology takes advantage of the multitemporal and multimodal characteristics of the proposed dataset, enabling robust generalization and advancing the understanding of drought, thus contributing to climate change resilience and sustainable water resource management. Among its components, the proposed framework includes speckle noise removal from SAR data, water body segmentation with a U-Net architecture, time series analysis, and prediction with a Time-Distributed Convolutional Neural Network (TD-CNN). Results are validated against ground truth data acquired by dedicated on-ground sensors, using tailored metrics such as Precision, Recall, Intersection over Union, Mean Squared Error, Structural Similarity Index Measure and Peak Signal-to-Noise Ratio.
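Precision, Recall and Intersection over Union have standard pixel-wise definitions for a binary segmentation mask. The sketch below assumes masks in which 1 marks water and 0 marks background, and shows how the three scores can be computed from a U-Net prediction and a reference mask; the function name is hypothetical, and the image-quality metrics (MSE, SSIM, PSNR) are not covered here.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Pixel-wise Precision, Recall and IoU for binary water masks (1 = water, 0 = background)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)    # water predicted as water
    fp = np.sum(pred & ~truth)   # background predicted as water
    fn = np.sum(~pred & truth)   # water missed by the prediction
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return float(precision), float(recall), float(iou)

# Usage: 2 true positives, 1 false positive, 1 false negative.
p, r, iou = segmentation_metrics([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
```

In this example Precision and Recall are both 2/3 while IoU is 0.5, since IoU penalizes false positives and false negatives together in a single denominator.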

From Graphs to Qubits: A Critical Review of Quantum Graph Neural Networks

Aug 12, 2024

Abstract: Quantum Graph Neural Networks (QGNNs) represent a novel fusion of quantum computing and Graph Neural Networks (GNNs), aimed at overcoming the computational and scalability challenges inherent in classical GNNs. Classical GNNs are powerful tools for analyzing data with complex relational structures, but they suffer from limitations such as high computational complexity and over-smoothing in large-scale applications. Quantum computing, leveraging principles like superposition and entanglement, offers a pathway to enhanced computational capabilities. This paper critically reviews the state of the art in QGNNs, exploring various architectures. We discuss their applications across diverse fields such as high-energy physics, molecular chemistry, finance, and Earth sciences, highlighting the potential for quantum advantage. Additionally, we address the significant challenges faced by QGNNs, including noise, decoherence, and scalability issues, and propose potential strategies to mitigate these problems. This comprehensive review aims to provide a foundational understanding of QGNNs, fostering further research and development in this promising interdisciplinary field.

Quanv4EO: Empowering Earth Observation by means of Quanvolutional Neural Networks

Jul 24, 2024

Abstract: A significant amount of remotely sensed data is generated daily by many Earth observation (EO) spaceborne and airborne sensors over many regions of our planet. These data serve many applications, such as natural hazard monitoring, global climate change studies, and urban planning. Using such big data in remote sensing applications brings many challenges. In recent years, machine learning (ML) and deep learning (DL) algorithms have allowed a more efficient use of these data, but the issues in managing, processing, and efficiently exploiting them have only grown, since classical computers have reached their limits. This article highlights a significant shift towards leveraging quantum computing techniques to process large volumes of remote sensing data. The proposed Quanv4EO model introduces a quanvolution method for preprocessing multi-dimensional EO data. Its effectiveness is first demonstrated on image classification tasks with the MNIST and Fashion-MNIST datasets, and its capabilities in remote sensing image classification and filtering are then shown. Key findings suggest that the proposed model not only maintains high precision in image classification but also shows improvements of around 5% in EO use cases compared with classical approaches. Moreover, the proposed framework stands out for its reduced parameter count and the absence of trained quantum kernels, enabling better scalability for processing massive datasets. These advancements underscore the promising potential of quantum computing in addressing the limitations of classical algorithms in remote sensing applications, offering a more efficient and effective alternative for image data classification and analysis.
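The general quanvolution idea can be illustrated with a plain NumPy state-vector simulation. This is a sketch of the technique, not the Quanv4EO circuit: it assumes 2x2 patches with pixel values in [0, 1], angle encoding with RY(pi*x) on four qubits, a single fixed random unitary standing in for the untrained quantum kernel, and the Pauli-Z expectation of each qubit as one output channel. All function names and sizes are hypothetical.

```python
import numpy as np

N_QUBITS = 4  # assumption: one qubit per pixel of a 2x2 patch

def random_unitary(dim, rng):
    """Fixed (never trained) random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # rescale column phases so the result is Haar-distributed

def encode_patch(patch):
    """Angle-encode each pixel x in [0, 1] as RY(pi * x)|0>, returning the 16-dim product state."""
    state = np.array([1.0 + 0j])
    for x in patch.ravel():
        theta = np.pi * x
        qubit = np.array([np.cos(theta / 2), np.sin(theta / 2)], dtype=complex)
        state = np.kron(state, qubit)  # the first pixel becomes the most significant qubit
    return state

def z_expectations(state):
    """<Z> for each qubit: +1 weight for basis states with that bit 0, -1 for bit 1."""
    probs = np.abs(state) ** 2
    idx = np.arange(len(state))
    out = []
    for q in range(N_QUBITS):
        bit = (idx >> (N_QUBITS - 1 - q)) & 1
        out.append(np.sum(probs * (1 - 2 * bit)))
    return np.array(out)

def quanvolve(image, unitary):
    """Slide a 2x2 window with stride 2; each patch yields N_QUBITS output channels."""
    h, w = image.shape
    out = np.zeros((h // 2, w // 2, N_QUBITS))
    for i in range(0, h - 1, 2):
        for j in range(0, w - 1, 2):
            state = unitary @ encode_patch(image[i:i + 2, j:j + 2])
            out[i // 2, j // 2] = z_expectations(state)
    return out
```

With a 28x28 MNIST-sized input, this produces a 14x14x4 feature map that a classical network can consume. Because the unitary is fixed, the quantum preprocessing step adds no trainable parameters, which mirrors the abstract's point about not training quantum kernels.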

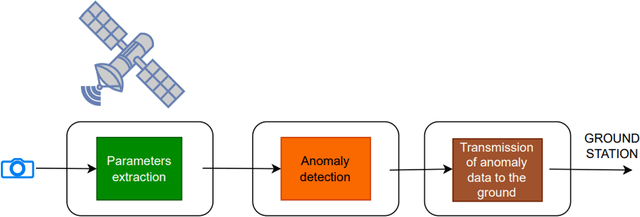

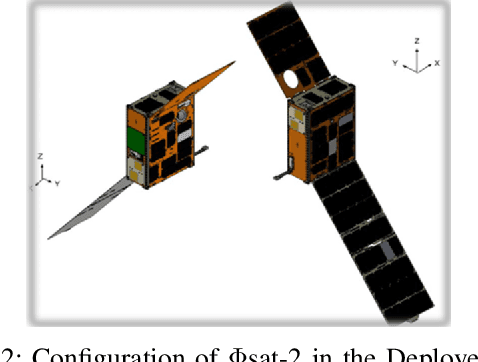

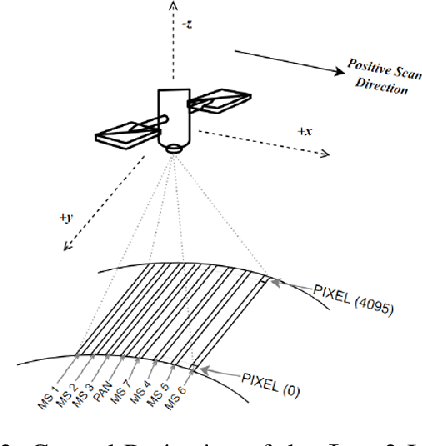

AI techniques for near real-time monitoring of contaminants in coastal waters on board future Phisat-2 mission

Apr 30, 2024

Abstract: Unlike conventional procedures, the proposed solution advocates a groundbreaking paradigm in water quality monitoring through the integration of satellite Remote Sensing (RS) data, Artificial Intelligence (AI) techniques, and onboard processing. The objective is to offer near real-time detection of contaminants in coastal waters, addressing a significant gap in the existing literature. Moreover, the expected outcomes include substantial advancements in environmental monitoring, public health protection, and resource conservation. Our study focuses specifically on the estimation of Turbidity and pH, given their implications for human and aquatic health. Nevertheless, the designed framework can be extended to include other parameters of interest in the water environment and beyond. Originating from our participation in the European Space Agency (ESA) OrbitalAI Challenge, this article describes the distinctive opportunities and issues of contaminant monitoring on the Phisat-2 mission. The specific characteristics of this mission and the tools made available are presented, together with the methodology proposed by the authors for near real-time onboard monitoring of water contaminants. Promising preliminary results are discussed, and ongoing and future work is introduced.

QSpeckleFilter: a Quantum Machine Learning approach for SAR speckle filtering

Feb 02, 2024

Abstract: The use of Synthetic Aperture Radar (SAR) has greatly advanced our capacity for comprehensive Earth monitoring, providing detailed insights into land use and land cover regardless of weather conditions, at any time of day or night. However, SAR image quality is often compromised by speckle, a granular disturbance that makes it difficult to produce accurate results without suitable data processing. In this context, the present paper explores the application of Quantum Machine Learning (QML) to speckle filtering, harnessing quantum algorithms to address computational complexities. Here we introduce QSpeckleFilter, a novel QML model for SAR speckle filtering. Compared with a previous method by the same authors, QSpeckleFilter achieves superior Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) on a test dataset, and it opens new avenues for Earth Observation (EO) applications.
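Speckle and the PSNR metric can be illustrated with the common multiplicative model for an L-look intensity image, y = x * n with n ~ Gamma(L, 1/L), which has unit mean and variance 1/L. The sketch below assumes images scaled to [0, 1], adds synthetic speckle to a clean image, and scores a simple boxcar mean filter with PSNR = 10 * log10(MAX^2 / MSE). The boxcar filter is a classical baseline chosen only for illustration; it is not QSpeckleFilter. For SSIM, a library implementation such as scikit-image's `skimage.metrics.structural_similarity` can be used.

```python
import numpy as np

def add_speckle(clean, looks=4, seed=0):
    """Multiplicative L-look speckle: y = x * n, n ~ Gamma(shape=L, scale=1/L), so E[n] = 1."""
    rng = np.random.default_rng(seed)
    return clean * rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)

def psnr(reference, estimate, data_range=1.0):
    """Peak Signal-to-Noise Ratio in dB, assuming the given peak value (data_range)."""
    mse = np.mean((reference - estimate) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(data_range ** 2 / mse))

def boxcar(img, k=3):
    """k x k mean filter with edge padding (classical baseline, not the QML model)."""
    pad = k // 2
    p = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=float)
    for di in range(k):
        for dj in range(k):
            out += p[di:di + img.shape[0], dj:dj + img.shape[1]]
    return out / (k * k)
```

On a homogeneous region, averaging k*k pixels reduces the speckle variance, so the filtered image should score a higher PSNR than the noisy one; a learned filter is meant to achieve this while also preserving edges and texture, which is what SSIM captures.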

A Hybrid MLP-Quantum approach in Graph Convolutional Neural Networks for Oceanic Nino Index prediction

Jan 29, 2024

Abstract: This paper explores an innovative fusion of Quantum Computing (QC) and Artificial Intelligence (AI) through the development of a Hybrid Quantum Graph Convolutional Neural Network (HQGCNN), combining a Graph Convolutional Neural Network (GCNN) with a Quantum Multilayer Perceptron (MLP). The study highlights the potential of GCNNs in handling global-scale dependencies and proposes the HQGCNN for predicting complex phenomena such as the Oceanic Nino Index (ONI). Preliminary results suggest the model's potential to surpass the state of the art (SOTA). The code will be made available upon publication of the paper.
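The graph-convolution part of such a model can be illustrated with the widely used propagation rule H' = ReLU(D^{-1/2} (A + I) D^{-1/2} H W), where A is the adjacency matrix, I adds self-loops, D is the degree matrix of A + I, H holds the node features and W is a learned weight matrix. The sketch below assumes this rule; the abstract does not specify the HQGCNN's graph construction or layer form, and the quantum MLP head is not shown. The function names and the toy path graph are hypothetical.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetric normalization with self-loops: D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(h, adj_norm, weight):
    """One graph convolution: each node averages its neighbours' features, then ReLU(A_norm H W)."""
    return np.maximum(adj_norm @ h @ weight, 0.0)

# Usage on a toy 4-node path graph (0-1-2-3) with 3 input features and 2 output features.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
a_norm = normalized_adjacency(adj)
rng = np.random.default_rng(0)
node_embeddings = gcn_layer(rng.normal(size=(4, 3)), a_norm, rng.normal(size=(3, 2)))
```

Stacking such layers lets information propagate across increasingly distant nodes, which is how a GCNN can capture large-scale dependencies; in a hybrid design, the resulting node embeddings (or a pooled graph vector) would then be passed to the quantum MLP.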

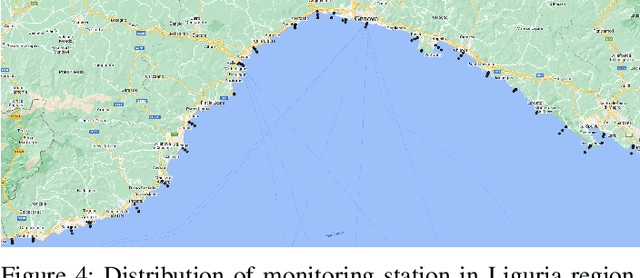

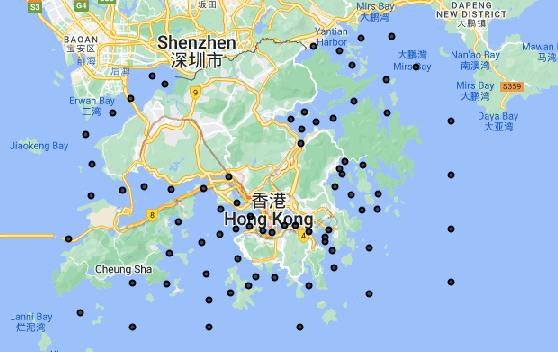

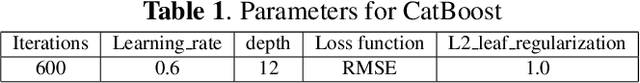

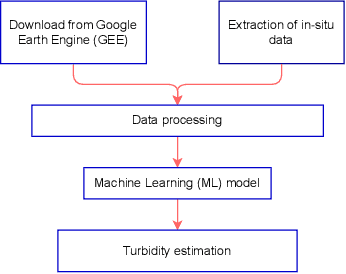

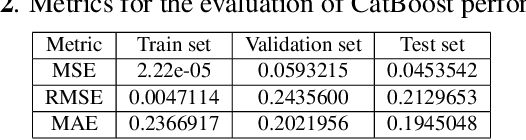

Monitoring water contaminants in coastal areas through ML algorithms leveraging atmospherically corrected Sentinel-2 data

Jan 08, 2024

Abstract: Monitoring water contaminants is of paramount importance for ensuring public health and environmental well-being. Turbidity, a key parameter, poses a significant problem, affecting water quality. Its accurate assessment is crucial for safeguarding ecosystems and water for human consumption, demanding meticulous attention and action. For this reason, our study pioneers a novel approach to monitoring Turbidity, integrating CatBoost Machine Learning (ML) with high-resolution Sentinel-2 Level-2A data. Traditional methods are labor-intensive, while CatBoost offers an efficient solution with excellent predictive accuracy. Leveraging atmospherically corrected Sentinel-2 data through Google Earth Engine (GEE), our study contributes to scalable and precise Turbidity monitoring. A specific tabular dataset derived from Hong Kong contaminant monitoring stations enriches our study, providing region-specific insights. Results show the viability of this integrated approach, laying the foundation for adopting advanced techniques in global water quality management.

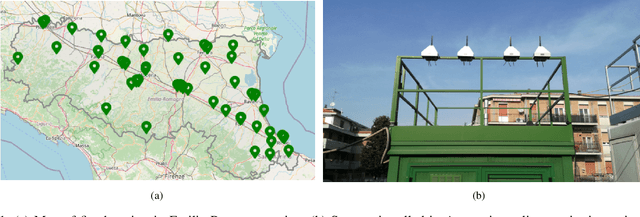

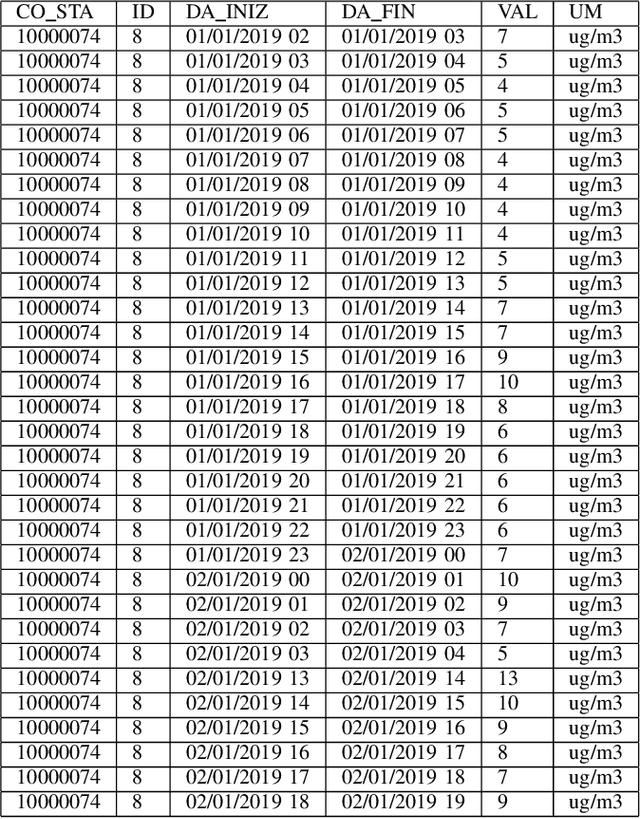

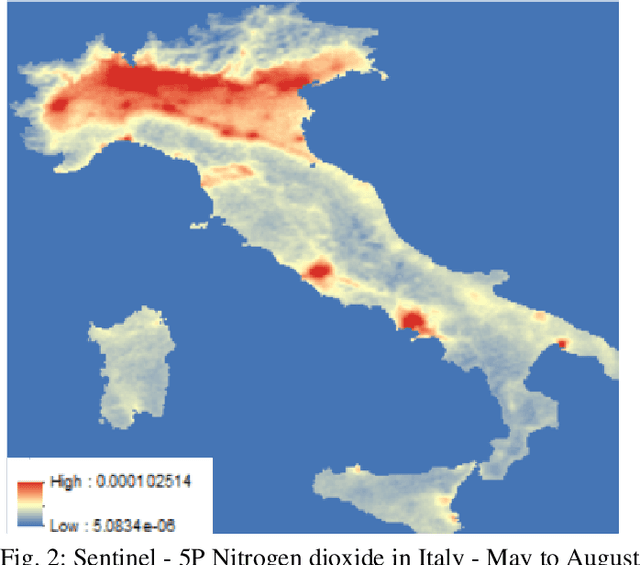

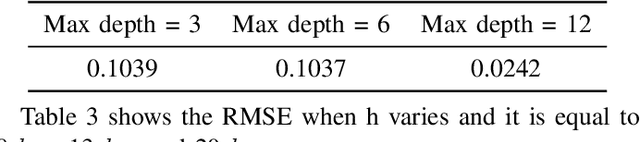

Estimation of Ground NO2 Measurements from Sentinel-5P Tropospheric Data through Categorical Boosting

Apr 08, 2023

Abstract: Atmospheric pollution has been widely regarded by the scientific community as a primary threat to human health and ecosystems, above all for its impact on climate change. Therefore, its containment and reduction are gaining interest and commitment from institutions and researchers, although solutions are not immediate. It is thus of primary importance to identify the distribution of air pollutants and evaluate their concentration levels in order to take the right countermeasures. Among other tools, satellite-based measurements have been used to monitor and obtain information on air pollutants, and over the years their performance has improved in terms of both resolution and data reliability. This study aims to analyze NO2 pollution in the Emilia-Romagna Region (Northern Italy) during 2019, with the help of satellite retrievals from the Sentinel-5P mission of the European Copernicus Programme and ground-based measurements obtained from the ARPA site (Regional Agency for the Protection of the Environment). The final goal is the estimation of ground NO2 measurements when only satellite data are available. For this task, we used a Machine Learning (ML) model, Categorical Boosting, which proved to work quite well and allowed us to achieve a Root-Mean-Square Error (RMSE) of 0.0242 over the 43 stations used to obtain the ground truth values. This procedure, applicable to other areas of Italy and the world and over longer timelines, represents the starting point for understanding which further actions must be taken to improve its final performance.

A Machine Learning Approach to Long-Term Drought Prediction using Normalized Difference Indices Computed on a Spatiotemporal Dataset

Feb 05, 2023

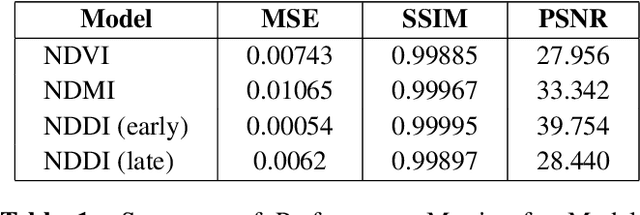

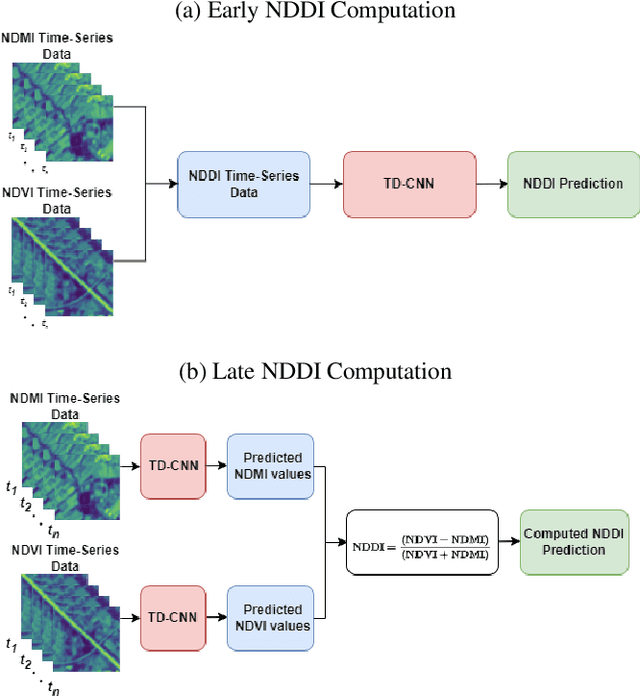

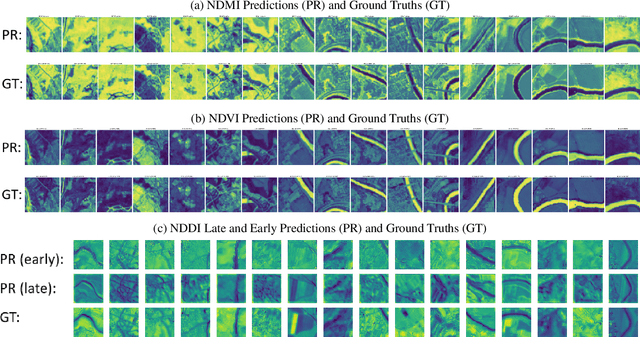

Abstract: Earlier research has shown that the Normalized Difference Drought Index (NDDI), combining information from both NDVI and NDMI, can be an accurate early indicator of drought conditions. NDDI is computed from visible, near-infrared, and short-wave infrared channels, and shows greater sensitivity as a drought indicator than other indices. In this work, we aim to determine whether NDDI can serve as an early indicator of drought or dramatic environmental change by computing NDDI on landscapes around bodies of water in Europe, which are less drought-prone than the central US grasslands on which NDDI was initially evaluated. We use the SEN2DWATER dataset (SEN2DWATER: A Novel Multitemporal Dataset and Deep Learning Benchmark For Water Resources Analysis), a 2-dimensional spatiotemporal dataset created from multispectral Sentinel-2 data collected over water bodies from July 2016 to December 2022. SEN2DWATER contains data from all 13 bands of Sentinel-2, making it a suitable dataset for our research. We leverage two CNNs, which learn trends in NDVI and NDMI values, respectively, from time series of images obtained from the SEN2DWATER dataset. From the CNN outputs, i.e., the predicted NDVI and NDMI values, we compute a predicted NDDI and investigate its accuracy. Preliminary results show that NDDI can be forecast with good accuracy using ML methods, and that the SEN2DWATER dataset could allow NDDI to be used as a predictor of climate and ecological change. Moreover, such predictions could also be highly useful in mitigating, or even preventing, harmful effects of climate and ecological change by supporting policy decisions.
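The three indices share the normalized-difference form: NDVI = (NIR - Red) / (NIR + Red), NDMI = (NIR - SWIR) / (NIR + SWIR) and NDDI = (NDVI - NDMI) / (NDVI + NDMI). The sketch below assumes surface reflectances and, as a common but here assumed choice, Sentinel-2 bands B4 (Red), B8 (NIR) and B11 (SWIR). Because the paper derives NDDI from CNN-predicted NDVI and NDMI maps, `nddi` also accepts those directly; all function names are hypothetical, and pixels with a zero denominator are returned as NaN.

```python
import numpy as np

def normalized_difference(a, b):
    """(a - b) / (a + b), returning NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    den = a + b
    return np.divide(a - b, den, out=np.full_like(den, np.nan), where=den != 0)

def nddi(ndvi, ndmi):
    """NDDI from NDVI and NDMI maps, observed or predicted (e.g. by the two CNNs)."""
    return normalized_difference(ndvi, ndmi)

def nddi_from_bands(red, nir, swir):
    """Assumed Sentinel-2 mapping: red = B4, nir = B8, swir = B11 reflectances."""
    ndvi = normalized_difference(nir, red)
    ndmi = normalized_difference(nir, swir)
    return nddi(ndvi, ndmi), ndvi, ndmi

# Usage with single-pixel reflectances: NDVI = 2/3, NDMI = 1/4, so NDDI = (5/12) / (11/12) = 5/11.
value, ndvi, ndmi = nddi_from_bands(0.1, 0.5, 0.3)
```

The same functions work element-wise on full image arrays, so a time series of predicted NDVI and NDMI maps can be converted to a predicted NDDI time series in one call per date.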
